Testimonials

Thanks to the help of the Zeno team, our investigations of Open LLM Leaderboard evaluations were much faster.

Clémentine, Research Scientist

Demystifying benchmarking and evaluation practices and communicating them effectively to a wider audience is very difficult. Zeno does a great job of encouraging taking a critical look at your metrics and your datasets, which is much needed in the field!

Hailey, Research Scientist

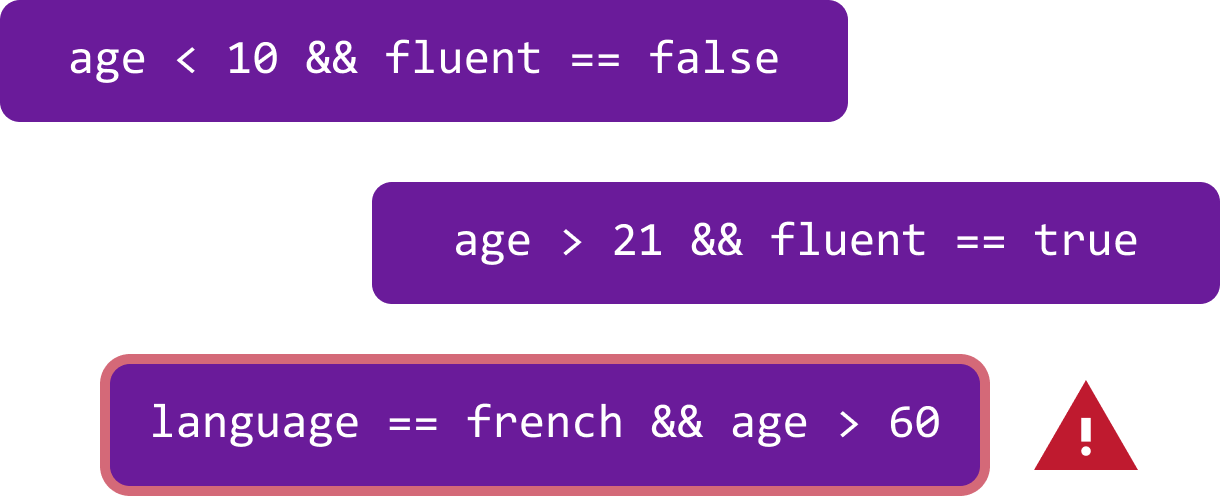

Error Discovery

Zeno includes advanced error discovery techniques, such as a slice finder that automatically surfaces your models' systematic failures.
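Zeno's own slice finder is not described here. The sketch below is a simplified illustration of the general idea, assuming each instance is a dict of metadata features plus a boolean `correct` flag. It enumerates slices defined by one or two `feature == value` predicates and flags those whose accuracy trails the overall accuracy by at least `min_gap`. The function name, row format, and thresholds are hypothetical; production slice finders typically add significance tests and a smarter search over the slice lattice.

```python
from itertools import combinations
from typing import Any


def find_weak_slices(
    rows: list[dict[str, Any]],
    features: list[str],
    correct_key: str = "correct",
    min_size: int = 2,
    max_order: int = 2,
    min_gap: float = 0.1,
):
    """Hypothetical helper: return (overall_accuracy, weak_slices).

    Each weak slice is (predicates, size, accuracy), where predicates is a
    tuple of (feature, value) pairs combined with AND.
    """
    overall = sum(r[correct_key] for r in rows) / len(rows)
    # Candidate predicates: every observed (feature, value) pair.
    atoms = sorted({(f, r[f]) for r in rows for f in features}, key=repr)
    results = []
    for order in range(1, max_order + 1):
        for combo in combinations(atoms, order):
            # Skip conjunctions that constrain the same feature twice.
            if len({f for f, _ in combo}) < order:
                continue
            members = [r for r in rows if all(r[f] == v for f, v in combo)]
            if len(members) < min_size:
                continue  # too few examples to be a meaningful slice
            acc = sum(m[correct_key] for m in members) / len(members)
            if overall - acc >= min_gap:
                results.append((combo, len(members), acc))
    # Worst accuracy first; among ties, larger slices first.
    results.sort(key=lambda t: (t[2], -t[1]))
    return overall, results


# Toy, made-up evaluation results for illustration.
rows = [
    {"length": "short", "topic": "sports", "correct": True},
    {"length": "short", "topic": "politics", "correct": True},
    {"length": "long", "topic": "sports", "correct": True},
    {"length": "long", "topic": "politics", "correct": False},
    {"length": "long", "topic": "politics", "correct": False},
    {"length": "short", "topic": "sports", "correct": True},
]
overall, weak = find_weak_slices(rows, features=["length", "topic"])
for combo, size, acc in weak:
    print(combo, size, round(acc, 2))
```

On this toy data the conjunction of long texts about politics surfaces first with zero accuracy, ahead of the two broader single-feature slices that each contain it.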

Chart Building

Use Zeno's drag-and-drop interface to create interactive charts. Create a radar chart comparing multiple models on different slices of your data, or a beeswarm plot to compare hundreds of models.

Report Authoring

Visualizations can be combined with rich markdown text to share insights and tell stories about your data and model performance.

Reports can be authored collaboratively and published broadly.

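```python
from zeno_client import ZenoClient, ZenoMetric

# Authenticate with your Zeno API key.
client = ZenoClient(YOUR_API_KEY)

# Create a project that uses the text-classification view.
project = client.create_project(name="Writing Assistant", view="text-classification")

# Upload the dataset: a DataFrame with one row per instance.
df = ...
project.upload_dataset(df, id_column="id", data_column="text", label_column="label")

# Upload one system's outputs, matched to the dataset through the "id" column.
system_df = ...
project.upload_system(system_df, name="GPT-4", id_column="id", output_column="output")
```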
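The `df = ...` and `system_df = ...` placeholders in the snippet are left open. As a hypothetical illustration of the shape those calls appear to expect, the pandas sketch below builds a toy dataset with `id`, `text`, and `label` columns and a system table with `id` and `output` columns keyed by the same ids, then checks that the ids line up before anything is uploaded. The example rows and the extra `correct` column are assumptions for illustration only, not part of the Zeno snippet.

```python
import pandas as pd

# Hypothetical dataset: one row per instance, keyed by "id".
df = pd.DataFrame({
    "id": [0, 1, 2],
    "text": ["Great product!", "Terrible support.", "It was fine."],
    "label": ["positive", "negative", "neutral"],
})

# Hypothetical outputs from one system, keyed by the same ids.
system_df = pd.DataFrame({
    "id": [0, 1, 2],
    "output": ["positive", "negative", "positive"],
})

# Sanity checks: dataset ids are unique and every output refers to a dataset row.
assert df["id"].is_unique
assert set(system_df["id"]) <= set(df["id"])

# Optional (assumed) per-row correctness column: look up each row's gold label
# by id and compare it with the system output.
labels = df.set_index("id")["label"]
system_df["correct"] = (system_df["output"] == system_df["id"].map(labels)).astype(int)
print(system_df)
```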