Nvidia is raking in a profit of about 823% (loosely, "nearly 1,000%") on each H100 GPU accelerator it sells, according to estimates made in a recent social media post from Barron's senior writer Tae Kim. In dollar terms, that means Nvidia's street price of around $25,000 to $30,000 for each of these High Performance Computing (HPC) accelerators (for the least-expensive PCIe version) more than covers the estimated $3,320 cost per chip and peripheral (in-board) components. As surfers will tell you, there's nothing quite like riding a wave with zero other boards in sight.

Kim cites the $3,320 estimated cost for each H100 chip as coming from financial services firm Raymond James. It's unclear how deep that cost analysis goes, however: if it covers only pure manufacturing cost (averaging the price-per-wafer and other components while taking yields into account), then Nvidia still has significant other expenses to cover with each sale before it books an actual profit.
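The percentages above are easy to sanity-check. Below is a minimal sketch in Python, assuming the $3,320 cost estimate and the $25,000 to $30,000 street-price range are the only inputs. The function names are hypothetical and chosen for illustration. It separates markup (profit relative to cost, which is what the headline percentage describes) from gross margin (profit relative to price).

```python
# Illustrative arithmetic only: figures are the estimates quoted in the article,
# and the helper names below are hypothetical.

def markup_pct(price: float, cost: float) -> float:
    """Profit as a percentage of cost."""
    return (price - cost) / cost * 100


def gross_margin_pct(price: float, cost: float) -> float:
    """Profit as a percentage of selling price."""
    return (price - cost) / price * 100


def implied_price(cost: float, markup: float) -> float:
    """Selling price that would produce the given markup percentage."""
    return cost * (1 + markup / 100)


EST_COST = 3_320                  # estimated cost per H100, per the article
STREET_PRICES = (25_000, 30_000)  # quoted street-price range, PCIe version

if __name__ == "__main__":
    for price in STREET_PRICES:
        print(
            f"${price:,}: markup {markup_pct(price, EST_COST):.1f}%, "
            f"gross margin {gross_margin_pct(price, EST_COST):.1f}%"
        )
    print(f"Price implied by an 823% markup: ${implied_price(EST_COST, 823):,.0f}")
```

Under these assumptions the markup works out to roughly 653% at $25,000 and 804% at $30,000, so an 823% figure corresponds to a selling price a little above the top of the quoted range (around $30,600). None of this accounts for R&D, software, or other operating expenses, which the cost estimate may not include.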

Even so, it can't be denied that there are advantages to being the poster company for AI acceleration workloads. By all accounts, Nvidia GPUs are flying off the shelves without even getting personal with the racks they're piled on. This article in particular looks to be the ultimate playground for anyone trying to understand exactly what the logistics behind the AI HPC boom mean. What that actually translates to, however, is that Nvidia's AI-accelerating products appear to be sold out until 2024. And with the AI accelerator market expected to be worth around $150 billion by 2027, there's seemingly nothing else in the future but green.

And of course, that boom is better for some than others: due to the exploding interest in AI servers compared to more traditional HPC installations, DDR5 manufacturers have had to revise their expectations of how quickly the new memory products will penetrate the market. It's now expected that DDR5 adoption will only reach parity with DDR4 by 3Q 2024.

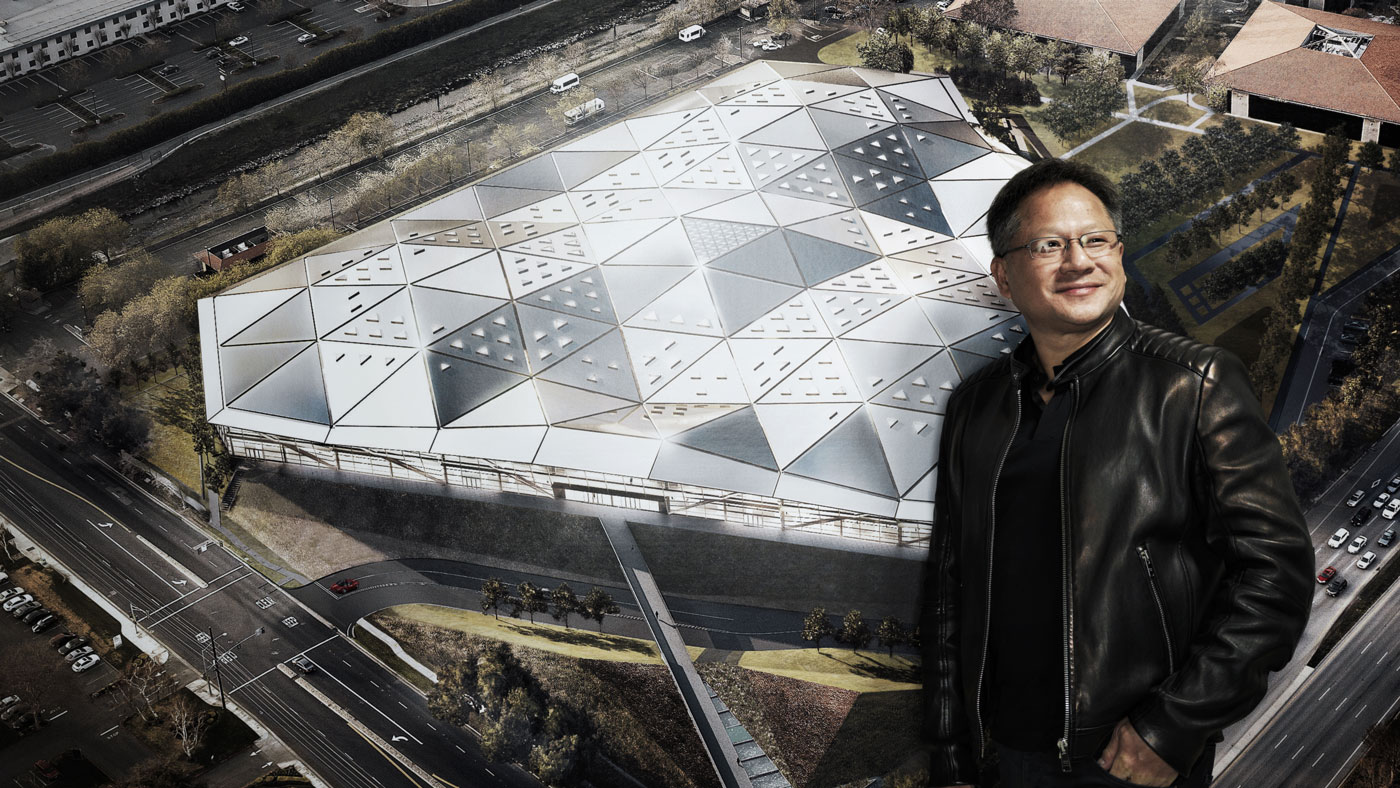

When all is said and done, Nvidia's bottom line, buoyed by its AI chip sales, could now cover insane amounts of leather jackets. At least from that perspective, Nvidia CEO Jensen Huang has to be beaming left and right.