It’s easy to assume that software infrastructure for B2B products has little impact on sales. However, choices like hosting on AWS or Azure, or using Kubernetes, can significantly influence positioning, marketing and sales. Here’s a breakdown of some key implications, ordered by least to most surprising.

In this context, software infrastructure includes hosting options (self-hosted, on-prem, cloud providers), infrastructure components (database services like RDS; container platforms like Fargate and Kubernetes; message brokers like Kafka), and architectural choices (e.g., single-tenant deployments).

As a rule of thumb, a prospect's security requirements scale with their headcount, the sensitivity of the data the product stores, and how risky the product's capabilities are. For example, a prospect with 1,000 employees will likely have a stricter security review process than a prospect with 100 employees.¹

Security teams weigh a product's value against the risk of using it, so reducing perceived risk can directly improve sales. Security features can also be useful marketing collateral for security-focused products. This section focuses on one key architectural decision that affects security.

Software tenancy describes how co-located different customers' data is within an application. For example, single-tenant applications tend to have some architectural separation between two customers' data. Anecdotally, security-conscious prospects often ask about tenant isolation controls during procurement, and many products market their tenancy controls to appeal to these buyers.

Most SaaS applications are multi-tenant, meaning the same application and data storage are shared across multiple customers. That doesn't completely preclude enterprise sales: large-scale products like AWS S3, Dropbox and Box have multi-tenant architectures and are still used by enterprise customers with sensitive data, because those products have earned their trust over time.

Enterprises are right to be concerned: multi-tenant applications are, unsurprisingly, prone to cross-tenant data leaks. These are tricky to prevent because they are heterogeneous; they can stem from many different kinds of bugs at different layers of the stack.
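One common source of these leaks is a query that forgets to filter by tenant. A minimal sketch of one mitigation, assuming a shared SQLite table with a `tenant_id` column (the `documents` table, `TenantScopedStore` class and `make_shared_db` helper are hypothetical), binds the tenant once and adds it to every query, rather than relying on each query author to remember it:

```python
import sqlite3
from typing import Optional


class TenantScopedStore:
    """Hypothetical data-access layer: every read and write is bound to one tenant."""

    def __init__(self, conn: sqlite3.Connection, tenant_id: str) -> None:
        if not tenant_id:
            raise ValueError("tenant_id is required")
        self._conn = conn
        self._tenant_id = tenant_id

    def add_document(self, doc_id: str, body: str) -> None:
        # The tenant ID comes from the store, never from the caller's arguments.
        self._conn.execute(
            "INSERT INTO documents (tenant_id, doc_id, body) VALUES (?, ?, ?)",
            (self._tenant_id, doc_id, body),
        )

    def get_document(self, doc_id: str) -> Optional[str]:
        # Filtering on tenant_id means another tenant's doc_id is simply not found.
        row = self._conn.execute(
            "SELECT body FROM documents WHERE tenant_id = ? AND doc_id = ?",
            (self._tenant_id, doc_id),
        ).fetchone()
        return row[0] if row else None


def make_shared_db() -> sqlite3.Connection:
    # One multi-tenant table shared by all customers.
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE documents ("
        " tenant_id TEXT NOT NULL, doc_id TEXT NOT NULL, body TEXT NOT NULL,"
        " PRIMARY KEY (tenant_id, doc_id))"
    )
    return conn


if __name__ == "__main__":
    db = make_shared_db()
    acme = TenantScopedStore(db, "acme")
    globex = TenantScopedStore(db, "globex")
    acme.add_document("q3-plan", "acme secrets")
    print(acme.get_document("q3-plan"))
    print(globex.get_document("q3-plan"))
```

In practice the tenant would come from the authenticated session when the store is constructed. This narrows one class of bug (a missing `WHERE tenant_id = ?`); it does nothing for others, such as a cache keyed without the tenant, which is part of why these leaks are hard to rule out.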

Some products claim to have better data isolation via a single-tenant architecture. Data is still controlled by the product, but the architecture separates customer data at some layer of the stack, so code bugs are less likely to lead to cross-tenant data leaks. For example, Material Security separates data and application at the Google Cloud Platform (GCP) project layer, and Pinecone and Turso are database platforms that offer single-tenancy as a first-class construct.

Single-tenant architectures tend to have higher infrastructure costs and slow down developers. For example, running a database per customer is generally more expensive than running a single, larger multi-tenant database, and extra tooling is needed to manage accounts or databases across separate tenants. This is a very common problem. Before the public cloud, many B2B software products were deployed on-premise, and the shortest path to a cloud version often involved taking the on-premise software and running it single-tenant in the cloud. In conversations with Chief Technology Officers (CTOs), I’ve noticed that several such products are aiming to migrate to multi-tenant architectures as the most feasible way to reduce infrastructure costs and maintenance burden.

But there has been significant recent innovation that offsets single-tenant costs. For example, combining serverless architectures for application logic with smaller, cheaper databases like SQLite, re-engineered for single-tenant production storage, may make it practical to run single-tenant architectures efficiently.
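A minimal sketch of the database-per-tenant idea, assuming each tenant gets its own SQLite file under one directory. The directory layout, the tenant ID format and the `tenant_db_path` and `connect_for_tenant` helpers are hypothetical, not any specific product's design:

```python
import re
import sqlite3
from pathlib import Path

# Hypothetical tenant ID format, restricted so IDs are safe to use as file names.
_TENANT_ID = re.compile(r"[a-z0-9][a-z0-9-]{0,62}")


def tenant_db_path(root: Path, tenant_id: str) -> Path:
    """Map a tenant to its own database file; reject IDs that could escape root."""
    if not _TENANT_ID.fullmatch(tenant_id):
        raise ValueError(f"invalid tenant id: {tenant_id!r}")
    return root / f"{tenant_id}.db"


def connect_for_tenant(root: Path, tenant_id: str) -> sqlite3.Connection:
    """Open (creating if needed) the tenant's private database and ensure its schema."""
    root.mkdir(parents=True, exist_ok=True)
    conn = sqlite3.connect(tenant_db_path(root, tenant_id))
    # Each tenant has its own copy of the schema, so no tenant_id column is needed.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS documents (doc_id TEXT PRIMARY KEY, body TEXT NOT NULL)"
    )
    return conn
```

With this layout, a buggy query can at worst return the current tenant's own rows, since other tenants' data lives in different files. The cost shows up elsewhere: every schema migration, backup and monitoring check now runs once per tenant.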

Some products, like PlanetScale, Gitpod and Hasura, split their control and data planes: data resides in customer cloud accounts, while control and configuration management live in the product’s cloud account.

In this approach, the control plane is deployed as a traditional SaaS application in the product’s cloud, and it communicates with the data plane: a smaller, well-scoped system in the customer’s cloud account, where the customer’s data lives or where the risky actions take place. Communication between the two is secured with methods like mutual TLS (mTLS), in which both the client and the server present certificates, so each side can verify the other’s identity.
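A minimal sketch of the mTLS setup using Python's standard `ssl` module: the data plane (server) refuses any client that doesn't present a certificate signed by a trusted CA, and the control plane (client) verifies the data plane's certificate and hostname as usual. The function names, certificate file arguments and the private CA are assumptions for illustration; certificate issuance and rotation are out of scope.

```python
import ssl
from typing import Optional


def data_plane_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Server side (data plane, in the customer's cloud): require a client certificate."""
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED is what makes this *mutual* TLS: no valid client cert, no handshake.
    ctx.verify_mode = ssl.CERT_REQUIRED
    if cert_file and key_file:
        ctx.load_cert_chain(cert_file, key_file)
    if client_ca_file:
        # Trust only the (hypothetical) private CA that issues control-plane certs.
        ctx.load_verify_locations(cafile=client_ca_file)
    return ctx


def control_plane_client_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    server_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Client side (control plane, in the product's cloud): verify the server, present a cert."""
    # PROTOCOL_TLS_CLIENT enables CERT_REQUIRED and hostname checking by default.
    ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if server_ca_file:
        ctx.load_verify_locations(cafile=server_ca_file)
    if cert_file and key_file:
        # The control plane's own certificate, which the data plane will verify.
        ctx.load_cert_chain(cert_file, key_file)
    return ctx
```

Wrapping a listening socket with `ctx.wrap_socket(sock, server_side=True)` using the server context makes the handshake fail for any caller without a certificate from the trusted CA, which is the "both sides know and trust each other" property described above.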

This improves both security and developer productivity (and therefore the product). For example, security teams can fully audit access to their data, while product teams can quickly iterate on feature-heavy control planes.

However, this may not be sufficient for some security teams, since the product still retains access to the customer’s cloud account.

For even more security-conscious customers, products may be hosted on non-cloud servers or in customer-managed cloud accounts. Many regulated organizations, especially in finance and healthcare, tend to require this setup for complete control: a security vulnerability in the product is contained as long as the customer has correctly air-gapped the deployment.

There is a significant tradeoff here: these deployments carry the highest maintenance burden. For example, Gitpod gave up seven-figure revenue by dropping support for its on-prem product because of high installation and maintenance costs.

Even popular enterprise-focused products like ServiceNow discourage on-premise usage with complex decision flowcharts and warnings.

On-premise maintenance costs are often high because of inconsistent release cadence, version skew, limited debugging workflows and limited observability. Customers often control the upgrade process (and tend not to upgrade), so the deployed product often stays several versions behind. Getting access to logs is challenging if the system is air-gapped or isn't allowed to ship logs to the product's owners. I’ve noticed that products tend to either avoid on-premise deployments entirely or significantly increase deal sizes to make the costs worthwhile.
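To make version skew concrete, here is a minimal sketch that flags on-premise installs that have fallen outside a support window. The `MAJOR.MINOR.PATCH` version format, the two-minor-release window and the helper names are assumptions for illustration, not any product's actual policy:

```python
from typing import Dict, List, NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    @classmethod
    def parse(cls, text: str) -> "Version":
        # Assumes plain "MAJOR.MINOR.PATCH" strings such as "4.7.1".
        major, minor, patch = (int(part) for part in text.split("."))
        return cls(major, minor, patch)


# Hypothetical support policy: the latest minor release plus the two before it.
MAX_MINOR_SKEW = 2


def is_supported(installed: str, latest: str, max_minor_skew: int = MAX_MINOR_SKEW) -> bool:
    """True if an on-prem install is within the support window of the latest release."""
    inst, cur = Version.parse(installed), Version.parse(latest)
    if inst.major != cur.major:
        # Any major-version gap is treated as out of support in this sketch.
        return False
    return cur.minor - inst.minor <= max_minor_skew


def out_of_support(installs: Dict[str, str], latest: str) -> List[str]:
    """Customers whose last-reported on-prem version falls outside the window."""
    return sorted(
        name for name, version in installs.items() if not is_supported(version, latest)
    )
```

A SaaS product never needs this check, since there is one version in production. On-prem, the vendor can usually only detect skew (and only if the customer allows version reporting), not fix it, which is why the support cost grows with every release.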

Products that sell to sensitive customer segments need to meet their customers’ regulatory and compliance requirements, which can in turn force changes to their architecture.

For more involved compliance regimes like FedRAMP, a product will find it difficult to become compliant if its cloud provider or database is not compliant. This is another case where cloud-prem deployments, hosted in the customer’s own cloud account, help: a product itself may not need to be FedRAMP compliant if it can be hosted in the customer’s cloud environment.

Many cloud providers offer dedicated regions or data centers for government customers. For example, AWS has GovCloud (US) regions, and Microsoft offers Azure Government for US government customers. Products selling to governments often market that they’re hosted in these environments; GitHub, for example, has a landing page showing that it can run on AWS GovCloud.

Therefore, if a product wants to reduce friction in government sales, it’s advantageous to use a cloud provider that has government region support.

Products selling across the globe often support deployments to specific regions. Products like Vanta, Merge and Sentry support EU deployments for customers who prefer that their data lives in the EU, and cloud providers like AWS have also responded by launching Digital Sovereignty functionality.
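A minimal sketch of region pinning for data residency: each customer carries a residency requirement, and routing only ever resolves to a deployment in that jurisdiction, failing loudly rather than falling back to another region. The `Residency` values, endpoint URLs, `healthy` map and helper names are hypothetical:

```python
from enum import Enum
from typing import Dict


class Residency(Enum):
    US = "us"
    EU = "eu"


# Hypothetical per-jurisdiction deployments; each is a separate stack with its own data stores.
DEPLOYMENTS: Dict[Residency, str] = {
    Residency.US: "https://us.api.example.com",
    Residency.EU: "https://eu.api.example.com",
}


class ResidencyError(RuntimeError):
    """Raised when a customer's home region cannot serve the request."""


def endpoint_for(customer_residency: Residency, healthy: Dict[Residency, bool]) -> str:
    """Route to the customer's home region only; never fail over across jurisdictions."""
    if not healthy.get(customer_residency, False):
        # Failing over to another region would move EU data to the US (or vice versa).
        raise ResidencyError(f"{customer_residency.value} deployment unavailable")
    return DEPLOYMENTS[customer_residency]
```

The design choice here is to trade availability for compliance: an outage in the EU deployment becomes an outage for EU customers, instead of a silent residency violation.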

Many states, countries and regions are increasingly passing privacy and data residency legislation that adds compliance friction to the sales process.

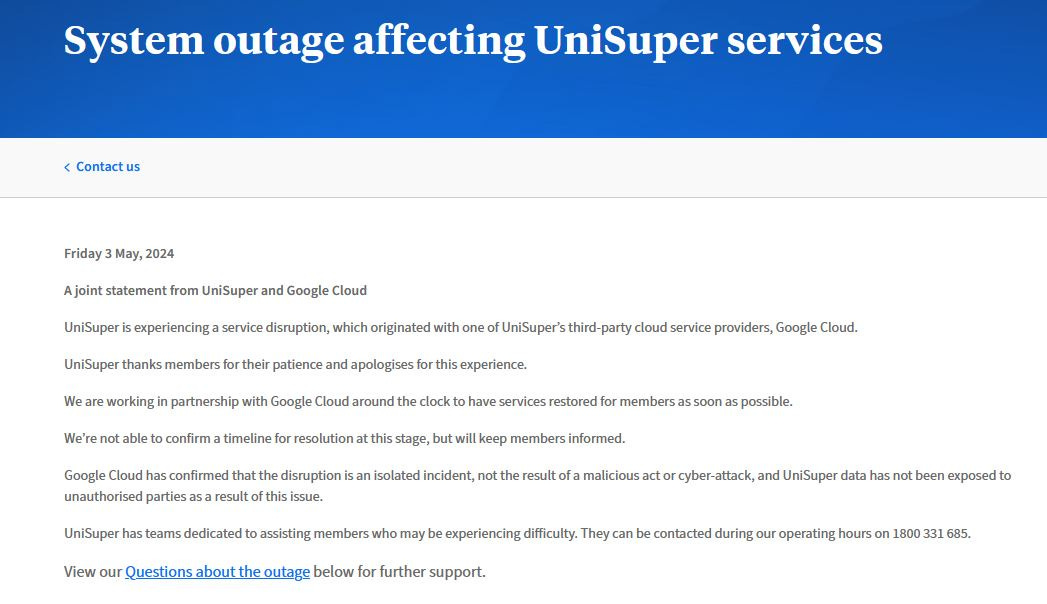

Even the most discerning buyers (like the CIA) seem to use products hosted on AWS, Azure or GCP. These services aren’t perfect: Azure has been prone to many security issues, and GCP to too many bugs and freak incidents. Still, the benefits provided by the top cloud providers are so high that the security and reliability risks are often treated as a “cost of doing business”.

Anecdotally, enterprise customers in certain segments tend to prefer products built on the Microsoft stack, especially if they already use Microsoft products. For example, Harvey, a legal startup, markets that it’s deployed on Microsoft Azure.

If a product targets a particular segment of enterprise customers, it’s worth understanding whether that segment would feel more comfortable with the Microsoft stack. For example, Microsoft’s CEO mentioned that 65% of the Fortune 500 use Azure OpenAI. This is consistent with the idea that once a product is trusted to safeguard customer data, it doesn’t necessarily need to be hosted on-premise.

There’s increasing value for B2B products in selling on cloud marketplaces. Big companies often have “spend commitments” with their cloud providers that go unused, so there’s a large financial incentive to spend on the cloud, and software bought through cloud marketplaces counts toward these commitments. Additionally, marketplace purchases go through the same billing process as the rest of the customer’s cloud spend, leaving their finance team with one less vendor relationship to manage.
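To make the incentive concrete, here is a small worked calculation with made-up numbers. It assumes a take-or-pay commitment, where any unmet amount is billed as a true-up at the end of the term, and a hypothetical `credit_rate` for the fraction of marketplace spend that counts toward the commitment; real contract terms vary by provider and customer.

```python
def total_outlay(
    commitment: float,
    cloud_usage: float,
    vendor_price: float,
    via_marketplace: bool,
    credit_rate: float = 1.0,
) -> float:
    """Customer's total spend (cloud bill + vendor) under a hypothetical take-or-pay commitment.

    credit_rate is an assumed parameter: the fraction of marketplace spend that
    counts toward the commitment. Buying directly from the vendor counts for nothing.
    """
    counted = cloud_usage + (vendor_price * credit_rate if via_marketplace else 0.0)
    # Any unmet portion of the commitment is billed anyway (the true-up).
    true_up = max(commitment - counted, 0.0)
    return cloud_usage + vendor_price + true_up


if __name__ == "__main__":
    # Hypothetical: $1M commitment, $700k of direct usage, a $200k product.
    print(total_outlay(1_000_000, 700_000, 200_000, via_marketplace=False))
    print(total_outlay(1_000_000, 700_000, 200_000, via_marketplace=True))
```

Under these assumptions, buying the $200k product directly brings total spend to $1.2M, while buying it through the marketplace keeps total spend at $1.0M, because it fills commitment that would otherwise have been paid for and left unused.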

To take advantage of a cloud provider's distribution channel, products need to host significant portions of their software with that cloud provider. For example, here’s a screenshot of GCP’s marketplace requirements.

As a result, many new B2B products seem to be available on all the major clouds so they can sell on every marketplace. For example, Sigma Computing announced that its product is available on all three major cloud providers and on all three of their marketplaces. It may therefore make sense to use cloud-agnostic infrastructure components, like Kubernetes and Kafka, so that it’s simple to deploy to multiple clouds early on.

Because architectural choices have a surprising number of non-engineering implications, there’s a growing number of tradeoffs to weigh when making them.

On a personal note, I’m looking to learn how multi-tenant products can better prevent cross-tenant breaches. If you’re struggling with the same problem, want to share any resources, or are willing to chat - please send me an email to reliability [at] substack.com