Main

For more than a decade, innovation in high-throughput DNA sequencing (that is, transforming information stored in DNA into human- and machine-readable sequences) has propelled research in the biomedical domain and led to an exponential growth in worldwide sequencing capacity14,15. A large proportion of these data is deposited in publicly funded repositories, such as the European Nucleotide Archive (ENA) maintained by the European Molecular Biology Laboratory’s European Bioinformatics Institute (EMBL-EBI)16, the Sequence Read Archive (SRA) maintained by the National Center for Biotechnology Information (NCBI)7 and the DDBJ Sequence Read Archive (DRA) maintained by the DNA Data Bank of Japan17. Although the rate of data deposition has slowed in recent years, the number of sequenced nucleotides contained in the ENA still doubles roughly every 45 months and currently comprises more than 10.8 × 1016 nucleotide bases (108 Pbp)18 of raw sequencing data, of which 6.7 × 1016 nucleotide bases (67 Pbp) are publicly available (as of 11 January 2025; Supplementary Fig. 13).

The classical pattern for accessing sequencing data in such large repositories is to identify relevant records using descriptive metadata and to retrieve a copy or slice of the data for further processing. Downloading the records or accessing them on a cloud platform for analysis often requires considerable resources. For the longest time, these petabase-scale raw sequencing data remained inaccessible for full-text search (that is, the task of retrieving all datasets containing a sequence similar to a given sequence query), substantially limiting its potential for future research. Consequently, we propose a highly scalable approach to index petabase-size repositories of raw biological sequencing data, transforming them into a highly compressed representation that is portable and yet fully accessible for downstream analysis. Inspired by recent advances in sequence algorithms and data structures1,2,19,20,21,22,23, we focused on specific technical developments for higher compression, as well as faster exact search and accurate alignment4,6 to demonstrate that searching public sequence archives as a whole is not only theoretically possible, but practically feasible at high accuracy and affordable costs.

Indexing petabases of biological sequencing data gives rise to a variety of technical challenges, for which some recent solutions have been proposed. Naturally, a focus is on making genetic variation in large cohorts, especially in humans, accessible for research and clinical use. Recent frameworks for variation graphs, such as VG24, and methods for compressing haplotypes or other graph paths25 have improved variation-aware alignment and variant calling23,26. However, these methods show strong limitations in the scalability of the input size and sequence variability. A second focus of algorithmic work is on taxonomic classification and read annotation, where the task is to match a given query sequence against a (large) set of known reference sequences or taxonomic labels. The classic approach for alignments against a collection of assembled genomes is BLAST27. Although the tool is heavily used and has gradually improved in speed28 over the past 30 years, it still lacks the scalability required for high-throughput searches in petabase-scale archives. With approaches like Centrifuge29, promising speed-up has at least been gained for querying large sets of assembled genomes. A third technical focus is on the sequence or experiment discovery problem: querying a sequence of interest (such as a transcript) against all samples available in a sequence repository. Current approaches for experiment discovery can be grouped into three categories: (1) sketching techniques for approximate set similarity and containment queries, which produce small hash-based summaries of the input data and then use these summaries (that is, sketches) to estimate the distances between query sequence sets and a target30,31; (2) approximate membership query data structures (such as Bloom filters) for approximately querying individual short sequences against sequence sets22,32,33,34; and (3) methods for the exact representation of annotated de Bruijn graphs, also called coloured de Bruijn graphs, storing additional metadata as annotations of its nodes or edges19, such as Mantis20, Fulgor35,36 and others22,37,38. All methods approach experiment discovery by matching short substrings of a fixed length k, called k-mers, from query sequences against those stored in the index. Although some of them are efficiently scalable, such as the recently presented PebbleScout approach39, this comes at the cost of lacking support for sensitive alignment and a comparatively high false-positive rate34,39.

A major challenge faced by all the methods discussed is finding a desirable balance between efficient operation on petabase-scale sequence collections and support for a versatile array of query operations, such as exact k-mer lookup and sequence-to-graph alignment for experiment discovery. To bridge this evident gap and to demonstrate the practical feasibility of economical and accurate full-text indexing of biological sequence repositories, we present MetaGraph, a versatile framework for the indexing and analysis of biological sequence libraries at the petabase scale.

Efficiency, modularity and extensibility

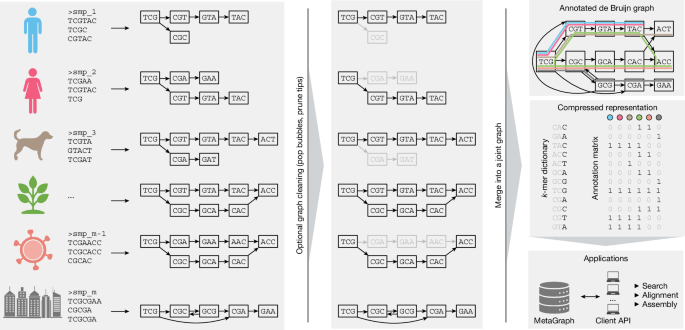

Building on recent advances in the field1,21 and by developing additional approaches for sequence analysis3,4,5,6,40, we devised the MetaGraph framework, which forms the core of this work and scales from use on single desktop computers up to distributed compute clusters. Notably, MetaGraph can index biological sequences of all kinds, such as raw DNA-sequencing and RNA-sequencing (RNA-seq) reads, assembled genomes and amino acid sequences. A MetaGraph index consists of an annotated sequence graph (Fig. 1 (top right)) that has two main components: the first is a k-mer dictionary representing a de Bruijn graph (Fig. 1 (middle right)). The k-mers stored in this dictionary serve as elementary tokens in all operations on the MetaGraph index. The second component encodes any metadata as a relationship between k-mers and categorical features (called annotation labels) such as sample IDs, geographical locations8, quantitative or positional information. This relationship is represented as a sparse matrix (annotation matrix; Fig. 1 (middle right)). We use various techniques to represent the de Bruijn graph and the annotation matrix in a highly compressed form1,3,4,5 (Methods). MetaGraph supports using different graph and annotation representations interchangeably, adapting to different storage requirements and analysis tasks, and allowing easy adoption of new algorithmic developments. Consequently, we made certain design choices when developing MetaGraph: (1) the use of succinct data structures and efficient representation schemes for extremely high scalability; (2) efficient algorithmic use of succinct data structures (such as preferring batched operations); and (3) modular open-source-licensed architecture supporting several graph and annotation representations and enabling the addition of new algorithms with little code overhead. These strengths allow MetaGraph to easily adapt to the rapid methodological advancements for sequence set representation35,37,41.

Schematic of graph construction and representation. Individual sequencing samples (left) are assembled into graphs (sample graphs), which are then cleaned to remove spurious paths and misassemblies (middle). Next, the sample graphs are merged to form a MetaGraph index (top right), consisting of the compressed k-mer dictionary index and a compressed annotation matrix (middle right; the grey colour indicates parts that can be efficiently compressed and do not have to be stored explicitly). The index is then used as the basis for downstream applications, such as sequence search, assembly and other queries (bottom right). Created in BioRender. Kahles, A. (2025) https://BioRender.com/3w8zkl3.

Scalable multi-sample index construction

Without loss of generality, we will assume that the indexing is applied to read sets that stem from sequencing biological samples, where each annotation label encodes a sample ID. Thus, the annotation matrix encodes the samples to which each k-mer belongs. Our indexing workflow proceeds in three steps: (1) data preprocessing; (2) graph construction; and (3) annotation construction. (1) Data preprocessing involves the construction of separate de Bruijn graphs (sample graphs) from raw input samples (Fig. 1 (left)). We optionally apply a subsequent cleaning step to each sample graph to reduce the impact of potential sequencing errors (Fig. 1 (middle)). Recently, the Logan project provided preprocessed and cleaned data of the Nucleotide archives13 as a public resource which covers this first step. (2) In graph construction, all of the sample graphs obtained in the first stage are merged into a single joint de Bruijn graph (Fig. 1 (top right)). (3) In annotation construction, we build the columns of the annotation matrix to indicate the membership of different k-mers in their respective sample graphs (Fig. 1 (middle right)). Finally, graph and annotation are compressed into representations that are best suited for their target application (Methods).

First-in-class scalability of MetaGraph

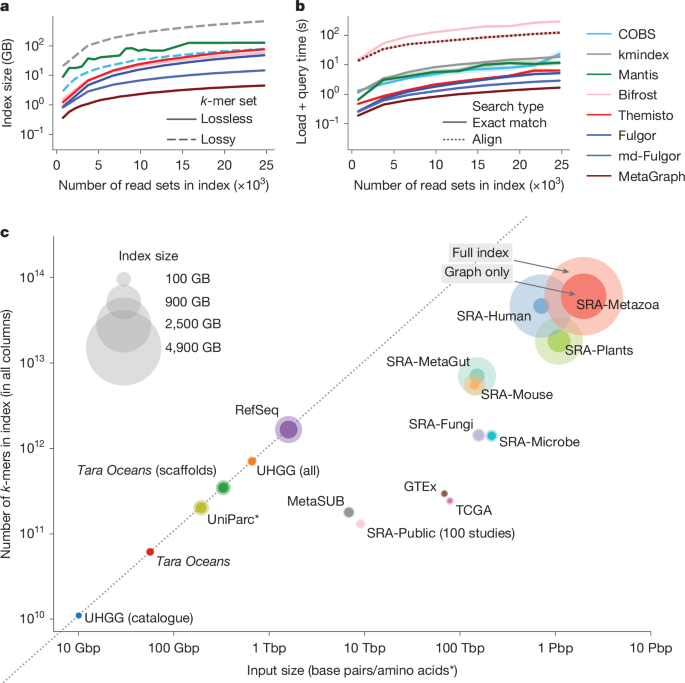

We evaluated MetaGraph’s scalability against other state-of-the-art indexing tools20,33,34,35,36,37,38 on subsets of increasing size up to 25,000 read sets randomly drawn from the BIGSI collection of microbial genomic read sets32 (Fig. 2a). The space taken by MetaGraph indexes is 3–150× smaller than that of the other evaluated approaches, despite some of them33,34 using a lossy compression approach (while Mantis20, Bifrost38, Themisto37, Fulgor35,36 and MetaGraph provide lossless representations of the indexed k-mer sets, COBS33 and kmindex34 both use probabilistic data structures that lead to false-positive matches).

a, The size of evaluated index data structures for representing a set of microbial whole-genome sequencing (WGS; BIGSI dataset) experiments of increasing size, shown for both lossy indexing methods COBS and kmindex, and for lossless Mantis, Bifrost, Themisto, Fulgor and MetaGraph with the SuccinctDBG and RowDiff<Multi-BRWT> compression schemes to encode the graph and the annotation, respectively. The dashed lines indicate lossy methods. b, The times for querying human gut metagenome AMPLICON sequencing reads (SRA: DRR067889) against indexes constructed with MetaGraph and other state-of-the-art tools from sets of microbial WGS experiments of increasing size. All curves show the performance of exact k-mer matching, except for the dotted MetaGraph curve, which shows the query performance with the more sensitive search strategy involving alignment. c, Overview of all MetaGraph indexes. For all datasets, we show the total number of input characters on the x axis and index size (given as the total number of unique k-mers) on the y axis. The marker size represents the size of the index. The solid portion of each marker represents the fraction of the total size taken by the graph and the translucent portion represents the fraction taken by the annotation (Table 1). Asterisk indicates that the inputs of the UniParc dataset are amino acid sequences, not base pairs.

However, the exceptional space efficiency of MetaGraph does not compromise query time. To evaluate that, we queried amplicon reads from a human gut metagenome sample (DDBJ: DRR067889) and found that MetaGraph offers highly competitive query times (Fig. 2b) compared with existing raw sequencing data indexing methods, despite using substantially less space. To achieve this, we devised several efficient algorithms to identify matching paths in the de Bruijn graph with their corresponding annotations and a batch query algorithm (Methods and Extended Data Fig. 1e), exploiting the presence of k-mers shared between individual queries by forming a fast intermediate query subgraph, increasing throughput up to 32-fold (Supplementary Fig. 9) for repetitive queries (such as sequencing read sets). In addition to exact k-mer matching (Extended Data Fig. 1c), we developed more-sensitive sequence-to-graph alignment5,6 algorithms that identify the closest matching path in the graph (Methods and Extended Data Fig. 1d). While exact k-mer matching can be viewed as a simplified version of semi-global alignment, it becomes less accurate as the data become more complex, necessitating sequence-to-graph alignment. With sufficient sequencing depth, we find that the more sensitive alignment substantially improves search accuracy (Extended Data Fig. 2).

Building petabase-scale indexes

To demonstrate the practical feasibility of accurate, cost-effective search across entire sequence archives, we used MetaGraph to index a substantial part of the public NCBI SRA, including DNA and RNA, together with restricted-access human cohorts and UniParc amino acid sequences. Our indexed datasets range from large RNA-seq cohorts (The Cancer Genome Atlas (TCGA)42, Genotype Tissue Expression (GTEx)43) and vast archives of genome sequencing records from the SRA7 (comprising 2,347,037 of microbial, fungal, plant and metazoa samples) to large, highly diverse whole-metagenome sequencing (WMGS) cohorts (MetaSUB8; all public SRA human gut metagenome samples) and collections of reference and assembled sequences (Reference Sequence (RefSeq)9, Unified Human Gastrointestinal Genome (UHGG)10, Tara Oceans11). Our MetaGraph index of the complete UniParc dataset12 demonstrates the straightforward use of protein sequences as input data. For selected datasets, we generated indexes preserving k-mer counts or coordinates using the concept of counting de Bruijn graphs5. The key statistics of all datasets presented in this work and the MetaGraph indexes constructed from them are provided in Table 1 and visualized in Fig. 2c. These indexes form a valuable community resource, as they succinctly summarize large sequencing datasets while supporting a variety of sequence queries against them. Indexes of public sequencing data are available at S3 Bucket s3://metagraph. When processing raw sequencing data, we applied moderate input cleaning (Methods), which led to considerable reductions in the required storage space without a substantial effect on index completeness and query sensitivity. In total, we processed 4.8 Pbp of raw input sequences (around 2.5 petabytes (PB) of gzipped FASTQ files), making these data fully and efficiently searchable by sequence.

To estimate the total size of an index for all publicly available sequences, we indexed a random subset of 100 studies from the entire SRA with MetaGraph (9.6 terabase pairs (Tbp)), which resulted in an index of size 32 gigabytes (GB) (Table 1). The entire public sequence repository has 534,254 studies and 66,912.1 Pbp (as of 11 January 2025); the size of a complete index is therefore estimated to be 223.3 terabytes (TB) (Table 1) (note that this would fit on a few consumer hard drives, at a total cost of around US$2,500). We repeated this estimate for an additional set of 47 chunks, each comprising 100 random studies, using preprocessed samples from the set of Logan contigs13 (Methods), resulting in a cumulative index size of 1.1 TB. Using the same extrapolation rules above, we estimate a complete index size of 172.9 TB. Lastly, we indexed a much larger subset of the Logan contigs (over 16 million accessions), confirming our estimated compression ratios (Table 1).

Data redundancy drives compression ratio

MetaGraph’s compression performance greatly depends on the properties of the indexed sequence sets. As input data often has different formats (such as FASTA, FASTQ, SRA), we measured the final compression ratio in characters per byte (that is, the average number of input characters per byte of the MetaGraph index), making it comparable across different datasets. We decompose the compression ratio into two factors: data redundancy and indexing efficiency, shown in the last two columns of Table 1. Intuitively, redundancy shows the amount of data duplication within a sample, while indexing efficiency reflects data duplication across different samples and increases for sets of related samples.

While the GTEx43 and TCGA42 cohorts total over 100 TB of raw compressed RNA-seq data, they show only limited diversity. Our MetaGraph index represents each in around 10 GB space, thereby achieving the highest compression ratios among all indexed datasets (up to 7,416 bp per byte). Even when adding k-mer counts, the final compression ratio is around 1,000 bp per byte.

The medium complexity range mainly consists of whole-genome sequencing read sets, showing less redundancy. Notably, our representation of the SRA-Microbe dataset takes only 57 GB, which is 28× smaller than the 1.6 TB BIGSI index32 and 2.2× smaller than the smallest Fulgor36 representation (Supplementary Fig. 7).

On the opposite end, we selected the MetaSUB cohort8, containing 4,220 environmental metagenome samples comprising 7.2 Tbp, and the SRA-MetaGut cohort, containing all human gut metagenome samples available on SRA at this time, comprising around 156 Tbp. These inputs span very diverse organism populations and include many rare sequences, yielding lower compression ratios (140–155 bp per byte), but still compact indexes (46.7 GB for MetaSUB; 1,111 GB for SRA-MetaGut). Lastly, collections of assembled genomes and protein sequences exhibit the greatest diversity and only minimal data redundancy, with intersample similarity reflecting evolutionary distance; despite this, MetaGraph compactly indexes them.

Highly accurate, near-complete indexes

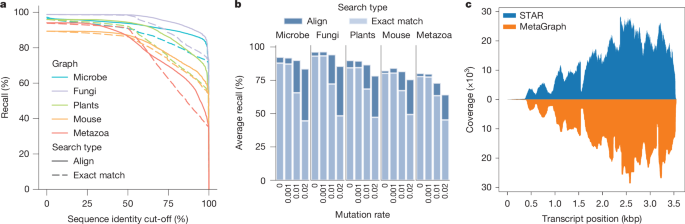

When performing an experiment discovery query with a given sequence, some true labels may not be retrieved due to k-mers discarded by our per-sample cleaning workflow. In this light, we evaluated the accuracy of experiment discovery on our SRA-derived MetaGraph indexes. We validated our cleaning and alignment approaches by showing that the individual sample graphs accurately represent their respective input read sets, with around 82% of our query reads realigning back to their respective sample graphs with at least 80% sequence identity (Extended Data Fig. 3a). When mapping these reads to their corresponding annotated graph indexes, we similarly observe that 75–95% of the query reads retrieve their true labels with at least 75% sequence identity (Fig. 3a).

a, Complementary estimator of the cumulative distribution function (ECDF) curve, showing the fraction of reads that are retrieving the correct label from the index (label recall, y axis) at different sequence identity cut-offs (x axis) for a range of indexed SRA datasets. When calculating recall at a given sequence identity cut-off, a read mapped to a graph sequence (that is, the spelling of a graph walk) with a given label is only considered if the percentage of the read’s nucleotides exactly matching the sequence is above the cut-off. We define the realignability, or average recall, of a graph–query pair as the area under this curve. b, Realignability of each graph for increasing error rates in the query (an evaluation of realignability at higher error rates is shown in Supplementary Fig. 5). c, Coverage plot for the human transcript SFTPB-207 in GTEx sample SRR599154 determined by STAR alignment against the hg38 reference genome (top, blue) and retrieved from the MetaGraph GTEx index with counts (bottom, orange).

Moreover, we evaluated the robustness of our mapping algorithms to sequence variation using altered versions of the query reads with varying mutation rates. Generally, we observe that the experiment discovery accuracy difference between exact k-mer matching and sequence-to-graph alignment increases with increasing sequence variation (Fig. 3b). Especially crucial for functional sequencing data, such as RNA-seq, is MetaGraph’s ability to encode per-sample count information. As an example, we provide the expression of transcript SFTPB-207 (ENST00000519937.6) in a sample from the GTEx cohort (Fig. 3c). Even though our representation compresses the original data 1,000-fold, we can closely reproduce the coverage profile generated from aligning the original raw sequences to the reference using STAR. As all count information is encoded per sample, tissue specificity is preserved. For this example, we find the same surfactant transcript mainly expressed in the lung, and partially in the testis, but not elsewhere (Extended Data Fig. 3b), as expected.

Exploring the gut resistome and phageome

To showcase MetaGraph’s use in real-time omics analysis on large-scale datasets, we queried the full CARD antimicrobial resistance (AMR) database44 and all bacteriophages from RefSeq release 218 (ref. 45) against the 241,384 human gut microbiome samples in our SRA-MetaGut database (Methods). Using classical approaches, this analysis would have required access to hundreds of terabytes of raw sequencing data. With compressed sequence representations, it can be done on a single compute node in about an hour.

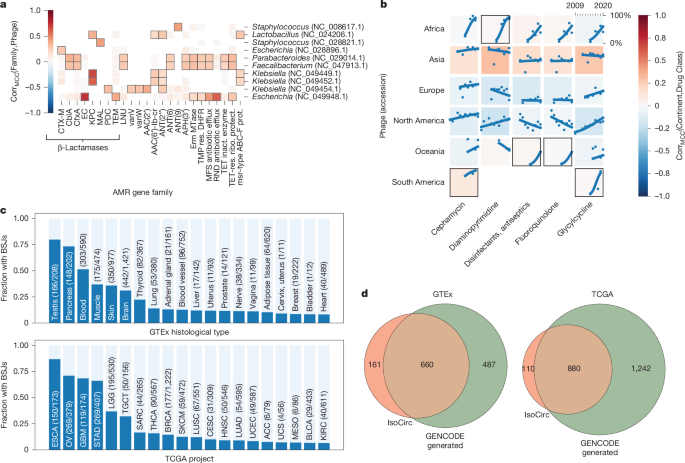

We recover strong associations between the Escherichia λ phage ev017 and the Escherichia coli β-lactamase gene, two Klebsiella phages and carbapenem-resistant β-lactamases from Klebsiella pneumoniae, and λ ev017 and the RNA antibiotic efflux pumps (Fig. 4a). Moreover, we studied trends in antibiotic resistance over time across various continents from whole-genome sequencing samples. We find significant strong growth trends over time in resistance against diaminopyrimidines in Africa, antiseptics and fluoroquinolones in Oceania, and against cephamycin and one of the ‘last resort’ antibiotics tigecycline46 (a glycylcycline) in South America (Fig. 4b).

a, Associations between AMR gene families and RefSeq bacteriophages (phages) discovered in publicly available human gut microbiomes deposited to the NCBI SRA from 2008 to 2022. Squares with a black border indicate a statistically significant association and missing squares indicate hypotheses that were not tested due to an insufficient number of matching samples. inact., inactivation; prot., protein; protect., protection; res., resistant; ribo., ribosomal. b, The growth in the prevalence of resistance to antibiotics in human gut microbiomes across six continents from 2010 to 2020. Each point represents the normalized proportion of samples from a continent matching genes conferring resistance in a given year, and each line represents a binomial regression fit. Squares with a black border indicate regressions with significantly high fit scores and missing squares indicate experiments with an insufficient number of samples. CorrMCC, Matthews correlation coefficient. c, The relative fraction of GTEx (top) and TCGA (bottom) samples per tissue (x axis) that carry recurrent BSJs. The per-tissue ratio is computed as samples that carry at least 1 BSJ (dark blue) over all samples of that tissue (light blue)—both on the y axis. The absolute numbers are given in parentheses. d, The overlap between BSJ predicted from GENCODE and found through MetaGraph with the junction set experimentally provided through IsoCirc for GTEx (left) and TCGA (right) cohorts. Only junctions sufficiently highly expressed in the respective cohort are counted.

Demonstrating MetaGraph’s interactive abilities, we provide public example scripts (https://github.com/ratschlab/metagraph_paper_resources/tree/master/notebooks) querying the full CARD database against an index of 4,220 WMGS samples from the MetaSUB cohort. We reproduce a ranking of cities based on the average number of AMR markers per sample (Extended Data Fig. 4b), consistent with the analysis performed on the raw data using orthogonal strategies8. We also show other exploratory analyses, linking sample metadata, such as surface material at the sampling location, to the query results (Extended Data Fig. 4c).

Survey of back-splicing in GTEx and TCGA

MetaGraph indexes can easily express transcriptome features that are difficult to represent in a classical linear coordinate system. One example is back-splice junctions (BSJs) that connect the donor of an exon site to the acceptor site of a preceding exon, forming circular RNAs, which have been found to commonly occur also in humans47 (Fig. 4d). When systematically querying all 4,052,768 possible intragenic BSJs using the GENCODE annotation (v.38) and the hg38 reference genome (Methods), we found a total of 1,113 and 2,093 candidates perfectly matching to the GTEx and TCGA indexes, respectively, that did not match the reference genome or transcriptome, and had a coverage of all k-mers of at least 10 in at least one sample. While such features are hard to map using linear RNA-seq aligners (Extended Data Fig. 4e), it is a simple task using our graph index. After further filtering (Methods), we found several BSJs to be recurrently detectable in a large fraction of GTEx tissues and TGCA cancer types (Fig. 4c), where testis, pancreas, blood, muscle, skin and brain tissues showed the largest relative fraction in GTEx, and oesophageal carcinoma (ESCA), ovarian serous cystadenocarcinoma (OV), glioblastoma multiforme (GBM) and stomach adenocarcinoma (STAD) the largest relative fractions in TCGA. When overlapping our candidate set to a set of BSJ derived from recent long read data48 that we also aligned against the GTEx and TCGA indexes, we found large overlaps of 660 and 880 BSJs (Fig. 4d), respectively, which had high tissue specificity (Extended Data Fig. 4f). Subsetting to the COSMIC Cancer Gene Census and comparing the TCGA and GTEx cohorts suggests a differential use of BSJ between cancer and normal tissues (Extended Data Fig. 4f).

Constructing a community resource

All indexes constructed from public-access data are available in AWS under s3://metagraph and can be downloaded for large-scale local search and analyses or directly accessed from the cloud. Instructions for access are provided at GitHub (https://github.com/ratschlab/metagraph-open-data). To make our indexes accessible for online queries, we developed a search engine for biological sequences—MetaGraph Online (Methods)—which currently hosts a large subset of the various indexes constructed and presented in this work, ranging from SRA samples to several collections of assembled and reference genomes (Supplementary Fig. 12). The service is publicly available for web and API queries (https://metagraph.ethz.ch/search). It uses the MetaGraph framework for hosting the indexes and performing queries on them in real time, showing the result of a query practically immediately. However, the service has limited throughput; thus, for large-scale searches, the indexes provided in the cloud should be used instead.

The cost and accuracy of global search

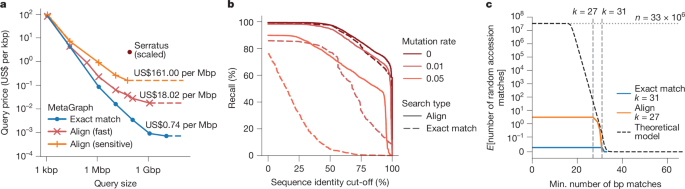

To estimate the total cost of exact search and alignment against an entire sequence repository (such as the ENA or SRA), we indexed 47 random subsets of 100 studies each from the Logan contig set13 using MetaGraph (Methods), leading to performance-optimized (35.6 GB median) and space-optimized (23.2 GB median) indexes. On average, indexing required 0.2 core-hours per sample, which would cost US$0.0025 per sample on Amazon AWS. We created an additional index from raw read sets across 100 random studies sampled from the entire SRA with MetaGraph, using our sample cleaning strategy (Table 1). The construction cost per sample was US$0.028, where more than 90% of the effort went into preprocessing the raw sequencing data (Supplementary Table 4). We then estimated the throughput of k-mer matching and alignment for different query sizes (Methods). We assume on-demand querying, where we download each index from cloud storage into local RAM before querying. For each query size, we choose a cost-optimal Amazon AWS cloud compute node, considering the indexes’ data transfer and querying times. We estimated costs by querying reads against our random subset indexes, then scaling up to the entire SRA (33,337,531 public accessions, 67 Pbp as of 11 January 2025) by multiplying the costs by 252.6 (Fig. 5a and Methods). This realistically estimates the costs of searching against the whole set of publicly available sequences. Figure 5a shows the scaled query price per kilobase pair (kbp) for different query sizes. For large queries, for which index loading is a minor cost, queries become as cheap as US$0.74 per Mbp for exact k-mer matching and US$18.02 per Mbp for alignment. For small queries, the index loading time dominates, but a single query ranging from 1 to 10 kbp can still be done at a price as low as US$100 even for search with alignment against the entire SRA. We find that these results are robust against changes to the composition of indexed samples. Even querying complex metagenomes incurs the same cost (Supplementary Fig. 17). Next, to assess how our search sensitivity depends on the mutation rate and how it is affected by the cleaning during preprocessing, we searched our 2 Mbp query set (18,889 reads) against the index of 100 random studies from which they originated (Fig. 5b), as well as the same reads with added random mutations at rates of 0.01 and 0.05. We observe a high recall for the original reads without mutations, reaching 96% at a 0.5 sequence identity cut-off for both k-mer matching and alignment, and 81% and 85% at a sequence identity cut-off of 0.75 for k-mer matching and alignment, respectively. For mutated reads, alignment substantially improves on the exact matching strategy. At a mutation rate of 0.05, it retrieves around 58% instead of 0.5% of the reads at a 0.75 sequence identity cut-off. Finally, despite using a lossless index, even completely random sequences can occur by chance in any read set (with probabilities dropping exponentially with read length) and may accumulate when querying millions of samples. To estimate the expected number of matches that a random query sequence would encounter within the index of all publicly available samples for different search strategies, we generated a set of random sequences and queried them against the same 100-study index, then scaled it to the 33,337,531 public accessions in the SRA to 11 January 2025 (Fig. 5c). We also derived a theoretical model that predicts the number of random accession matches in the entire SRA (Methods).

a, The cloud compute costs for sequence queries of increasing size calculated by mapping query sets with reads of length 100–250 randomly selected from the SRA-Public (100 studies) data against 47 100-random-study indexes of Logan contigs13, and then scaled up to the whole set of publicly available sequences (33,337,531 public accessions as of 11 January 2025). We use the minimum cost attainable when querying both the performance- and space-optimized index format (the costs of both formats are shown in Supplementary Fig. 16). For comparison, we plotted the estimated cost of the Serratus alignment query60 if it were performed on the 11 January 2025 SRA snapshot. b, Query sequence recall to the 100-study index at different mutation rates for exact matches and alignments. We use the Fast alignment profile for the 0.0 mutation rate and the Sensitive profile for the 0.01 and 0.05 mutation rates. When calculating recall at a given sequence identity cut-off, a read mapped to a graph sequence (that is, the spelling of a graph walk) with a given label is only considered if the percentage of the read’s nucleotides exactly matching the sequence is above the cut-off. c, The expected numbers of random matches of a sequence of 100–250 bp length to the whole set of public sequences (as of 11 January 2025) reported by different search approaches. The dotted horizontal line represents the total number of public SRA accessions, and the dashed vertical lines represent the k-mer size (31) for exact matching and the seed length (27) used for alignment. The theoretical model curve represents the theoretically expected number of matches of a random string of length 130 bp to the SRA (y axis) where at least one k-mer of a given length (x axis) is found exactly. A comparison to other tools is shown in Supplementary Fig. 14.

Discussion

Here we set out to demonstrate the practical feasibility of indexing entire sequencing archives, such as the EBI’s ENA or the NCBI’s SRA, with the goal of making them accessible for accurate and cost-efficient full-text search. The major challenge of this task is to hold pace with the constantly growing amount of biological sequencing data. As a solution, we present MetaGraph—a highly scalable and modularized framework designed to index and analyse very large collections of biological sequencing data.

In total, we have processed almost 5 Pbp of public SRA/ENA data and transformed them into compressed indexes that are accessible for full-text search, reducing the input data size up to a factor of around 7,400 for highly redundant sequence sets and a factor of about 300 on average (as in our random 100-study subset). This not only makes the data better accessible, but also makes the data easily transportable across analysis sites. Specifically, we have indexed various collections of DNA and RNA sequences, including a substantial portion of all publicly available whole-genome sequencing samples from the NCBI SRA. In particular, we have indexed a substantial portion of all microbe, fungi, plant, human and human gut wmetagenome and a substantial part of the metazoan samples from the SRA, which together alone make up 2.6 Pbp in 1,903,327 read sets. Moreover, we indexed several other diverse and biologically relevant datasets, from reference genomes to raw metagenomic reads. Finally, we indexed over 16 million public SRA samples that were pre-assembled into contigs within the Logan project13. The MetaGraph indexes require orders of magnitude less storage than the original gzip-compressed FASTQ inputs and provide insights into the biological structure and composition of their respective input data (Table 1), while demonstrating consistently high realignability (Fig. 3).

Owing to the sheer size of the data, and because our indexes perform optimally when present in main memory, we have split the input into batches. This enables us to arbitrarily scale the index representation and enable efficient parallel search, while only marginally reducing compression performance. This partitioning induces a natural growth strategy for a whole database of biological sequences: newly deposited samples are indexed at regular intervals to form index chunks. The already indexed samples can optionally be repartitioned, improving inter-sample similarity and thus further improving compression. On all datasets (except for assembled references), we apply a strategy of moderate cleaning to not accumulate sequencing noise in the final indexes. Although this slightly reduces our query sensitivity (Supplementary Fig. 8), we generally observe a tenfold reduction in index size.

We provide all indexes generated from public data as an open resource to the community that can be accessed within the compute cloud for queries. By additionally providing a web service for interactive queries at MetaGraph Online, we allow life-science researchers and other communities easy and cost-efficient access to the sequencing data for exploration and search. Although this still represents only a fraction of all publicly available sequencing data, our effort was carried out by a single research laboratory, demonstrating general feasibility and serving as a proof of concept.

During the completion of this work, the Logan resource13 became available. In this seminal effort, all read datasets published in the SRA up to December 2023 were transformed into k-mer spectra (discarding singleton k-mers), reducing their data size 25-fold for contigs and 6-fold for unitigs. The resulting Logan contigs are analogous to MetaGraph’s preprocessed sequence sets and can be used directly to build MetaGraph indexes. Recently, Logan Search49 has become available, presenting a website with online search capabilities for all Logan contigs. A direct comparison of query cost and accuracy is beyond the scope of this work.

Based on a representative random sample taken from SRA, we provide an extrapolated cost to index the entire public archive and show the expected query sensitivity and precision (Fig. 5). These results show that continuous indexing is technically feasible for institutions such as the EBI or the NCBI.

The results presented in this study are an important milestone in computational genomics, demonstrating the feasibility of accurate and cost-effective search in petabase-scale biological sequence repositories and making them more accessible for exploration and computational analyses—a current, pressing problem of high practical relevance. We have demonstrated that it is feasible to accurately and cost-efficiently search for and align nucleotide sequences in all available raw and assembled sequencing data across the tree of life. What was deemed to be very challenging a few years ago, such as indexing and searching in a few thousand read sets, now is tractable and can easily be done on a modern laptop. We envision MetaGraph to serve as a versatile and modular framework that enables researchers to perform large-scale comparative analyses in genomics and medicine using typical academic compute clusters, making public datasets truly open and interactively accessible. We envision that MetaGraph indexes could facilitate large-scale learning tasks on biological sequences, such as training large language models. For example, MetaGraph indexes can act as a database to efficiently generate sequences for model training, including both previously observed and de novo sequence recombinations.

Lastly, MetaGraph has been developed in the context of a highly active research community. Its design as a modular framework enables us to benefit from future technological improvements. Many new exciting approaches for representing k-mer sets35,50, the use of approximate-membership-query data structures34,51,52,53, dynamically changing the k-mer set54, improved annotation compression55,56, as well as alternative alignment and seeding approaches37,57,58,59 will be interesting avenues to explore for future extensions of MetaGraph.

Limitations

The quality of any sequence index can only be as good as the indexed input data. The presence of technical sequence noise, for example, can excessively increase index sizes. We have attempted to remove sequencing noise in a computationally efficient manner, at the cost of removing potentially useful sequence information. Consequently, our search indexes are lossy in the sense that they only represent a user-chosen subset of a sample’s k-mers losslessly, as opposed to losslessly representing the input sequences or their complete k-mer sets. On the other hand, our indexing of assembled sequences (RefSeq9, UHGG10 and Tara Oceans11) is completely lossless, encoding genomic coordinates and avoiding any sequence cleaning5. A second limitation of our approach is the use of static data structures for maximal query performance and minimal memory consumption, which makes it hard to directly extend an existing index with additional samples. Although we have shown that this is not an issue for the presented use cases, there are situations in which extending a large index with a few samples is desirable. In such situations, either the index has to be fully reconstructed or the delta needs to be indexed and queried separately from the existing pre-constructed index. Third, all k-mer based indexes show limited sensitivity when querying noisy (such as nanopore sequencing) or distantly homologous sequences. Lastly, MetaGraph has been optimized for DNA, RNA and amino acid alphabets. Many recent sequencing technologies can also record sequence modifications, resulting in much larger alphabet sizes. Although MetaGraph can handle such inputs in principle, current search algorithms are not optimized for this setting.

Conclusions

We are confident that the approaches presented here can be used and integrated into the infrastructure of large data repositories, such as ENA and SRA, to make all sequencing data stored in these repositories searchable, thereby providing a small, yet efficient prototype of a ‘Google for DNA’. Notably, the cost of providing this whole service would be relatively small compared with the price paid to generate these data in the first place and the cost of storing it in the ENA and SRA.

Methods

Representing de Bruijn graphs

According to the formal definition of a de Bruijn graph, every pair of k-mers (short contiguous subsequences of length k) with an overlap of k − 1 characters are connected with a directed edge. It can be seen that in the de Bruijn graph, the edges can always be derived from the nodes alone, and hence, it is sufficient to store only the k-mer set. These k-mers are used as elementary indexing tokens in MetaGraph.

MetaGraph provides several data structures for storing k-mer sets, which are used as a basis to implement different representations of the de Bruijn graph abstraction. In addition to the simple hash table, the k-mers may be stored in an indicator bitmap61 (a binary vector represented as a succinct bitmap of size \(|\,\varSigma \,{|}^{k}\) indicating which k-mers are present in the set) or in the BOSS table1 (a data structure proposed by Bowe, Onodera, Sadakane and Shibuya for storing a set of k-mers succinctly; see Supplementary Fig. 3 and the corresponding Supplementary Table 2 for an example BOSS graph and table). We call our de Bruijn graph implementations based on these data structures HashDBG, BitmapDBG and SuccinctDBG, respectively. For detailed descriptions and the properties of these representations, refer to Supplementary Section A.3.1 and Supplementary Table 1. All these data structures support exact membership queries, and they map k-mers to positive indexes from 1 to n, where n is the number of k-mers in the represented set (or zero if the queried k-mer does not belong to the set). While HashDBG is mostly used internally (for example, for batched sequence search), SuccinctDBG typically exhibits the best compression performance and, therefore, serves as the default compressed representation.

Scalable k-mer enumeration and counting

To count and de-duplicate k-mers, MetaGraph uses the following approach to generate the so-called k-mer spectrum: the input k-mers are appended to a list, which is sorted and de-duplicated every time it reaches the allocated space limit or after all input k-mers have been processed. During de-duplication, the k-mer counts are summed up to maintain the total count of each unique k-mer. We call this approach SortedSet. Moreover, MetaGraph offers the SortedSetDisk approach, which implements a similar algorithm in external memory. Using a pre-allocated fixed-size buffer limits memory usage and allows for constructing virtually arbitrarily large k-mer spectra but requires a larger amount of disk I/O. Lastly, MetaGraph supports passing precomputed outputs from the KMC3 (ref. 62) k-mer counting tool (fork karasikov/KMC commit b163688) as an input to make use of its exceptionally efficient counting algorithm and filters. Once the entire k-mer spectrum is obtained and all k-mers are sorted, they are converted into the final data structure to construct the target graph representation.

Extracting contigs and unitigs from graphs

All sequences encoded in the graph (or any defined subgraph) can be extracted from it and stored in FASTA format through graph traversal63,64. The graph is fully traversed and its paths, formed by consecutive overlapping k-mers, are converted into sequences (contigs) that are returned as a result of this operation. Each k-mer of the graph (or subgraph) appears in the assembled contigs exactly once. Thus, the resulting set of sequences is a disjoint node cover of the traversed graph.

MetaGraph provides efficient parallel algorithms for sequence extraction and distinguishes two main types of traversal: (1) traversal in contig mode extends a traversed path until no further outgoing edge is present or if all the next outgoing edges have already been traversed; while (2) traversal in unitig mode only extends a path if its last node has a single outgoing edge, and this edge is the single edge incoming to its target node. This definition of a unitig matches the one described previously65.

Basic, canonical and primary graphs

When indexing raw reads sequenced from unknown strands, we supplement each sequence with its reverse complement, which is then indexed along with the original sequence. As a result, the de Bruijn graph accumulates each k-mer in both orientations. Such graphs (which we call canonical) can be represented by storing only one orientation of each k-mer and simulating the full canonical graph on-the-fly (for example, for querying outgoing edges, return not only the edges outgoing from the source k-mer, but also all edges incoming to its reverse complement k-mer).

Storing only canonical k-mers (that is, the lexicographically smallest of the k-mer and its reverse complement) effectively reduces the size of the graph by up to two times. However, this cannot be efficiently used with the succinct graph representation based on the BOSS table. The BOSS table, by design, requires that each k-mer in it has other k-mers overlapping its prefix and suffix of length k − 1 (at least one incoming and one outgoing edge in the de Bruijn graph). However, it is often the case that among two consecutive k-mers in a read, only one of them is canonical. Thus, storing only canonical k-mers in the BOSS table would often require adding several extra dummy k-mers for each real k-mer (Supplementary Fig. 4), which makes this approach memory inefficient. We overcome this issue by constructing primary graphs, where the word primary reflects the traversal order, as described in the next paragraph.

When traversing a canonical de Bruijn graph, we can additionally apply the constraint that only one of the orientations of a given k-mer is called. More precisely, the traversal algorithm works as usual, but never visits a k-mer if its reverse complement has already been visited. Whichever orientation of the forward or reverse complement k-mer is visited first is considered to be the primary k-mer of the pair (an example illustration is shown in Supplementary Fig. 6). This results in a set of sequences, which we call primary (primary contigs or primary unitigs). Note that the traversal order of the graph may change the set of primary sequences extracted from it, but it may never change the total number of k-mers in these sequences (primary k-mers). This is relevant when extracting primary contigs with multiple threads since the node traversal order may differ between runs. We call graphs constructed from primary sequences primary graphs. In contrast to the common approach in which only canonical k-mers are stored, primary de Bruijn graphs can be efficiently represented succinctly using the BOSS table, and effectively enable us to reduce the size of the graph part of the MetaGraph index by up to two times.

Graph cleaning

When a graph is constructed from raw sequencing data, it might contain a considerable number of k-mers resulting from sequencing errors (erroneous k-mers). These k-mers do not occur in the biological sequences and make up spurious paths in the graph, which one may desire to prune off. True-signal k-mers may also originate from contaminant organisms in the biological sample. Pruning the graph to discard either or both of these classes of undesirable k-mers is called graph cleaning.

MetaGraph provides routines for graph cleaning and k-mer filtering based on the assumption that k-mers with relatively low abundance (low k-mer counts) in the input data were probably generated due to sequencing errors or contamination and should therefore be dropped. To identify potentially undesirable k-mers, we use an algorithm proposed previously65. In MetaGraph, we adapted and scaled up this algorithm to work not only for small but also for very large graphs (up to trillions of nodes).

In brief, the decision to filter out a k-mer is based on the median abundance of the unitig to which this k-mer belongs. That is, k-mers with low abundance are preserved if they are situated in a unitig with sufficiently many (more precisely, at least 50%) highly abundant (solid) k-mers. Then the entire unitig is considered solid and is kept in the graph. All solid unitigs (which may also be concatenated into contigs called clean contigs) are extracted from the graph and output in FASTA format. Connected unitigs (those with non-zero degree) that are discarded due to lack of abundance typically originate from sequencing errors, and their removal is traditionally called bubble popping66. Optionally, all tips (that is, unitigs where the last node has no outgoing edges) that are shorter than a given cut-off (typically 2k) are discarded as well. Afterwards, a new graph can be constructed from these clean contigs, which we call a cleaned graph.

The abundance threshold for solid unitigs can be set either manually or computed automatically from the full k-mer spectrum. It is assumed that k-mers with an abundance of at most 3 are likely to be generated by sequencing errors and that all erroneous k-mers follow a negative binomial distribution. After fitting a negative binomial distribution to these low-abundance k-mers, the abundance threshold is set to the 99.9th percentile of this distribution65. Finally, in case the chosen threshold leads to preserving less than 20% of the total coverage, the automatic estimation procedure is deemed unsuccessful and a pre-defined value (typically 2) is used as a fallback threshold instead.

Constructing a joint graph from multiple samples

According to our workflow, when indexing multiple read sets (especially when indexing vast collections of raw sequencing data), the recommended workflow for constructing a joint de Bruijn graph from the input samples consists of the following three steps. First, we independently construct a de Bruijn graph from each input read set. As each graph is constructed from a single read set (or sample), we call these graphs sample graphs. If desired, these sample graphs are independently cleaned with the graph cleaning procedure described above. Then, each sample graph is decomposed into a set of (clean) contigs, either by extracting the contigs directly or as a result of the graph cleaning procedure. Finally, a new de Bruijn graph is constructed from all these contigs, which is then annotated to represent the relation between the k-mers and the input samples. As this graph represents the result of merging all sample graphs, we refer to it as the joint de Bruijn graph. In practice, the size of the contigs extracted from sample graphs is up to 100 times smaller than the raw input, which makes the construction of the joint de Bruijn graph by this workflow much more efficient compared with constructing it directly from the original raw read sets.

Graph annotations

Once a de Bruijn graph is constructed, it can already be used to answer k-mer membership queries, that is, to check whether a certain k-mer belongs to the graph or not. However, the de Bruijn graph alone can encode no additional metadata (such as sample ID, organism, chromosome number, expression level or geographical location). Thus, we supplement the de Bruijn graph with another data structure called an annotation matrix. Each column of the annotation matrix A ∈ {0,1}n×m, where n is the number of k-mers in the graph and m is the number of annotation labels, is a bit vector indicating which k-mers possess a particular property:

$${A}_{j}^{i}:= \{\begin{array}{cc}1 & k \mbox{-} {\rm{m}}{\rm{e}}{\rm{r}}\,i\,{\rm{i}}{\rm{s}}\,{\rm{i}}{\rm{n}}\,{\rm{r}}{\rm{e}}{\rm{l}}{\rm{a}}{\rm{t}}{\rm{i}}{\rm{o}}{\rm{n}}\,{\rm{w}}{\rm{i}}{\rm{t}}{\rm{h}}\,{\rm{a}}{\rm{t}}{\rm{t}}{\rm{r}}{\rm{i}}{\rm{b}}{\rm{u}}{\rm{t}}{\rm{e}}\,j,\\ 0 & {\rm{o}}{\rm{t}}{\rm{h}}{\rm{e}}{\rm{r}}{\rm{w}}{\rm{i}}{\rm{s}}{\rm{e}}\end{array}$$

Without loss of generality, we will assume that the annotation matrix encodes the membership of k-mers to different samples, that is, encodes sample IDs. In this case, \({A}_{j}^{i}=1\) indicates that k-mer i appears in sample j (note that the same k-mer may also appear in multiple samples).

The number of rows of the annotation matrix corresponds to the number of k-mers indexed in the de Bruijn graph, and the annotation matrix can therefore be of enormous size, containing up to 1012 rows and 109 columns. However, this matrix is typically extremely sparse and can therefore be efficiently compressed.

Representing graph annotations in MetaGraph

Independent of the choice of graph representation, a variety of methods are provided in MetaGraph for compressing annotation matrices to accommodate different query types. These different matrix representation schemes can be split into row-major and column-major. The row-major representations (such as RowSparse4, RowFlat67,68) enable fast row queries but have a poor performance of column queries. By contrast, the column-major representations (such as ColumnCompressed, Multi-BRWT3) provide fast access to individual columns of the annotation matrix but typically have a poorer row query performance. Despite being mathematically equivalent, up to the transposition of the represented matrix, these schemes are in fact algorithmically different due to the number of rows of the annotation matrix typically being orders of magnitude larger than the number of columns. A detailed description of these and other representation schemes is provided in Supplementary Information A.4. The size and query time benchmarks, respectively, of the compression methods used in our representations are provided in Supplementary Figs. 1 and 2.

The RowDiff compression technique

Due to the nature of de Bruijn graphs and the fact that adjacent nodes (k-mers) usually originate from the same sequences, it turns out that, in practice, adjacent nodes in the graph are likely to carry identical or similar annotations. The RowDiff compression technique4,5 exploits this regularity by replacing the annotations at nodes with their relative differences. This enables us to substantially sparsify the annotation matrix and, therefore, considerably improve its compressibility. Notably, the transformed annotation matrix can still be represented with any other available scheme, including those described above. MetaGraph provides a scalable implementation of this technique with efficient construction algorithms, which allow applying it to virtually arbitrarily large annotation matrices. The algorithm essentially consists of two parts. First, for each node with at least one outgoing edge, it picks one of the edges and marks its target node as a successor. Second, it replaces the original annotations at nodes with their differences from the annotations at their assigned successor nodes. This delta-like transform is applied to all nodes in the graph except for a small subset of them (called anchors), which keep their original annotation unchanged and serve to end every path composed of successors and break the recursion when reconstructing the original annotations (for example, in cycles).

Counting de Bruijn graphs

Finally, MetaGraph supports generalized graph annotations for representing quantitative information such as k-mer positions and their abundances in input sources, encoded with non-binary matrices5.

Annotation construction

The typical workflow for constructing an annotation matrix for a large input set consists of the following steps. After the joint de Bruijn graph has been constructed from the input sequences, we iterate over the different samples (corresponding to the different annotation labels) in parallel and map all k-mers of each sample to the joint graph, generating a single annotation column. To avoid the mapping of identical k-mers multiple times and to prevent the processing of erroneous k-mers (k-mer with sequencing errors), we use the unitigs extracted from the cleaned sample graphs instead of the raw sequences when annotating the graph. This substantially reduces the annotation construction time, especially when the joint graph is represented with SuccinctDBG, for which the traversal to an adjacent k-mer is several times faster than a k-mer lookup performed from scratch (Supplementary Information A.3.2).

Once an annotation matrix has been constructed (typically in the ColumnCompressed representation), it can be transformed to any other representation to achieve the desired trade-offs between the representation size and the performance of the required operations. In particular, for sequence search, the recommended workflow is to apply the RowDiff transform on the annotation matrix and then convert the sparsified columns to the Multi-BRWT or RowSparse representation, depending on the desired speed versus memory trade-off.

Dynamic index augmentation and batch updates

Generally, there are three strategies for extending a fully constructed MetaGraph index (a joint de Bruijn graph and its corresponding annotation matrix). First, the batch of new sequences can be indexed separately and that second MetaGraph index can be hosted on the same or on a different server. Then, these two indexes can be queried simultaneously, as it is done for a distributed MetaGraph index.

Second, the graph can be updated directly if it is represented using a dynamic data structure that supports dynamic updates (for example, SuccinctDBG (dynamic)). Then, the annotation matrix needs to be updated accordingly. This approach allows making instant changes. However, it does not enable large updates because of the limited performance of dynamic data structures69.

Finally, for large updates, the existing index can be reconstructed entirely. For the reconstruction, the index is first decomposed into contig buckets, where each bucket stores contigs extracted from the subgraph induced by the respective annotation column. Then, these buckets are augmented with the new data (either by adding the new sequences directly or by pre-constructing sample graphs from the new sequences and adding contigs extracted from them), and a new MetaGraph index is constructed from these augmented buckets. Notably, this approach uses a non-redundant set of contigs and does not require processing raw data from scratch again. Furthermore, instead of extracting contigs from the old index, it is also possible to use the inputs initially used to construct the old index (for example, the contigs extracted from sample graphs), which can substantially simplify the process.

Speeding up k-mer matching

For higher k-mer matching throughput, we implemented several techniques to speed up this procedure. First, when mapping k-mers to a primary graph (defined above), each k-mer may generally have to be searched twice (first that k-mer and then its reverse complement). Nevertheless, if a k-mer has been found, there is no need to search for its reverse complement. In fact, it is guaranteed in that case that the reverse complement k-mer would be missing in the graph. However, if a certain k-mer from the query is missing in the graph but its reverse complement is found, it is likely that, for the next k-mer from the query sequence, which is adjacent to the current one, the same applies. Thus, in such cases, we directly start the search for the next k-mer by querying the graph with the reverse complement and checking for the original k-mer only if that reverse complement k-mer is not found in the graph.

When the graph is represented with the BOSS table, indexing k-mer ranges in the BOSS table (as described in Supplementary Information A.3.2) greatly speeds up k-mer lookups, especially relevant when querying short sequences or arbitrary sequences against a primary graph.

Another optimization consists of querying the annotation matrix in batches, which improves cache locality and removes possible row duplications. To go further and speed up k-mer mapping as well, we developed the batch query algorithm described below in detail.

Batched sequence search

To increase the throughput of sequence search for large queries (for example, sets of sequencing reads or long sequences), we have designed an additional batch query algorithm schematically shown in Extended Data Fig. 1e. The algorithm exploits possible query set redundancy: the presence of k-mers shared between individual queries. More precisely, query sequences are processed in batches and an intermediate batch graph is constructed from each batch. This batch graph is then effectively intersected with the large joint graph from the MetaGraph index. The result of this intersection operation forms a relatively small subgraph of the joint graph, which we call a query graph. It is represented in a fast-to-query uncompressed format (HashDBG). In practice, this intersection is performed as follows. First, the batch graph is traversed (step 2 in Extended Data Fig. 1e) to extract a non-redundant set of contigs that are afterwards mapped against the joint graph through exact k-mer matching (step 3) and the respective annotations are extracted from the compressed index accordingly to construct the query graph with its respective annotations representing the intersection of the batch graph with the full MetaGraph index (step 4). All of the query sequences from the current batch are then queried against this query graph (step 5). Depending on the structure of the query data, this algorithm achieves a 10- to 100-fold speedup compared to unbatched queries.

Sequence search with alignment

For cases in which the sensitivity of sequence search through exact k-mer matching is insufficient, we developed several approaches for aligning sequences to the MetaGraph index, a process known as sequence-to-graph alignment5,6,23,70,71,72,73,74,75,76. Note that each approach has its target use cases and the choice should be made based on the particular application and the problem setting.

Each alignment algorithm takes a classical seed-and-extend approach27,71,72,73,74. Given an input sequence, the seeds are composed by joining consecutive k-mer matches within the graph’s unitigs (called unitig maximal exact matches, or Uni-MEMs72,77). Although, by default, this restricts the seeds to be at least of length k, representing the graph with the BOSS table allows for relaxing this restriction by mapping arbitrarily short sequences to suffixes of the k-mers indexed in the graph (as described in Supplementary Information A.3.2).

Each seed is extended in the graph forwards and backwards to produce a complete local alignment. Similarly to how GraphAligner78,79 builds on Myers’ algorithm80, our extension algorithm is a generalization of the Smith–Waterman–Gotoh local alignment algorithm with affine gap penalties81. The user can choose to report multiple alignments for each query, which may be found if seeds to multiple locations in the graph are discovered.

We now describe the extension algorithm in more detail. Given a seed, let \(s={s}_{1}\cdots {s}_{{k}^{{\prime} }}\) denote the suffix of the query sequence starting from the first character of the seed. We use a dynamic programming table to represent the scores of the best partial alignments. More precisely, each node v has three corresponding integer score vectors Sv, Ev, and Fv of size equal to the query length \({\ell }\). Sv[i] stores the best alignment score of the prefix \({s}_{1}\cdots {s}_{i}\) ending at node v. Ev[i] and Fv[i] represent the best alignment scores of \({s}_{1}\cdots {s}_{i}\) ending with an insertion and deletion at node v, respectively.

Let vS be the first node of the seed S. We define an alignment tree TS = (VS,ES) rooted at vS encoding all walks traversed during the search starting from vS, where VS ⊂ V × ℕ contains all the nodes of the paths originating at vS and ES ⊂ VS × VS contains all the edges within these paths. TS is constructed on-the-fly during the seed extension process by extending it with new nodes and edges after each graph traversal step.

As the size of TS can grow exponentially if all paths are explored (and is, in fact, of infinite size if the graph is cyclic), we traverse the graph and update TS in a score-guided manner. For this, we maintain a priority queue graph nodes and corresponding score vectors to be traversed, prioritizing nodes whose traversal led to the best local score update78. We use several heuristics to restrict the alignment search space. First, we use the X-drop criterion82,83, skipping an element if it is more than X units lower than the current best-computed alignment score. Moreover, we maintain an aggregated score column for each graph node storing the element-wise maximum score achieved among the score columns of each node in TS. Using this, we discard nodes in TS from further consideration if their traversal did not update the aggregate score column. Finally, we apply a restriction on the total number of nodes which can be explored as a constant factor of \({\ell }\).

To find seeds of length k′ < k (by default, we use a seed length of 19) matching the suffixes of nodes in the canonical graph, a three-step approach is taken. First, seeds corresponding to the forward orientation of the query are found, which correspond to contiguous node ranges in the BOSS representation of the graph (a description of the node range matching algorithm is provided in Supplementary Information A.3.2). The next two steps then retrieve suffix matches which are represented in their reverse complement form in the graph. In the second step, the reverse complements of the query k-mers are searched to find node ranges corresponding to suffix matches of length k′. Finally, these ranges are traversed forwards k − k′ steps in the graph to make the prefixes of these nodes correspond to the sequence matched. The reverse complements of these nodes are then returned as the remaining suffix matches.

While primary graphs act as an efficient representation of canonical de Bruijn graphs, special considerations need to be made when aligning to these graphs to ensure that all paths that are present in the corresponding canonical graph are still reachable. For this, we introduce a further extension of the alignment algorithm to allow for alignment to an implicit canonical graph while only keeping a primary graph in memory. During seed extension, the children of a given node are determined simply by finding the children of that node in the primary graph, along with the parents of its reverse complement node. Finding exact matching seeds of length k′ ≥ k can be achieved in a similar manner, searching for both the forward and reverse complement of each k-mer in the primary graph.

MetaGraph maintains three different alignment approaches that determine how the graph is traversed during seed extension, called MetaGraph-Align, SCA and TCG-Aligner, each applying different restrictions to traversal.

MetaGraph-Align

In this approach, the sequences are aligned against the joint de Bruijn graph to compute their respective closest walks in the graph. After computing a set of alignments, they are used in place of the original sequences to fetch their corresponding annotations. This approach allows for aligning to paths representing recombinations of sequences across annotation labels.

Label-consistent graph alignment

When label recombination is not desired, we support an alternative approach in which queries are aligned to subgraphs of the joint graph induced by single annotation labels (columns of the annotation matrix). We call this approach label-consistent graph alignment (or alignment to columns), and it is implemented by the SCA algorithm6. However, instead of aligning to all the subgraphs independently, we perform the alignment with a single search procedure while keeping track of the annotations corresponding to the alignments.

Trace-consistent graph alignment (TCG-Aligner)

Finally, when input sequences are losslessly encoded in a MetaGraph index using the methodology introduced and evaluated previously5, the alignment can be done against those original input sequences of which the respective walks in the graph are called traces. This method is called the trace-consistent graph aligner (TCG-Aligner)5.

Column transformations

In addition to the operations mentioned above, MetaGraph supports operations aggregating multiple annotation columns to compute statistics for the k-mers and their counts (abundances). In general, the following formula is used in the aggregation to compute the ith bit of the new annotation column:

$${a}_{\min }\le \mathop{\sum }\limits_{j=1}^{m}1[{v}_{\min }\le {c}_{{ij}}\le {v}_{\max }]\le {a}_{\max },$$

where cij is the count (abundance) of the ith k-mer in the jth label, and 1[A] is a Boolean predicate function that evaluates as 1 if the statement A is true and as 0 otherwise. If no counts are associated with the column, we assume that cij = 1 for every set bit in the jth annotation column and 0 otherwise. If the sum \({\sum }_{j=1}^{m}1[{v}_{\min }\le {c}_{{ij}}\le {v}_{\max }]\) falls within specified minimum and maximum abundance thresholds amin and amax, the bit in the aggregated column for this k-mer is set to 1, and the value of the sum is written as the count associated with that bit. In other words, the resulting aggregated column is always supplemented with a count vector representing the number of original annotation columns with k-mer counts between vmin and vmax, which can be used in downstream analyses as an ordinary count vector.

Seamless distribution and interactive use

In addition to the single-machine use case, where the index is constructed and queried locally, MetaGraph also supports querying indexes provided on a remote server through a client-server architecture. In this approach, a set of graphs and annotations can easily be distributed across multiple machines. Each machine runs MetaGraph in server mode, hosting one or multiple indexes and awaiting queries on a pre-defined port (Extended Data Fig. 1b). This setup makes it straightforward to execute user queries across all indexes hosted on multiple servers. For easy integration of results and coordination of different MetaGraph instances, we provide client interfaces in Python (Extended Data Fig. 1a). Notably, our distribution approach can be used not only for hosting multiple indexes of distinct sources but also when indexing a single dataset of extremely large size, such as SRA. This distribution approach enables virtually unlimited scalability.

MetaGraph API and Python client

For querying large graph indexes interactively, MetaGraph offers an API that allows clients to send requests to a single or multiple MetaGraph servers. When started in server mode, the MetaGraph index will be persistently present in server memory, which will accept HTTP requests on a pre-defined port. To make the querying more convenient, we have also implemented a Python API client as a Python package available at GitHub (https://github.com/ratschlab/metagraph/tree/master/metagraph/api/python).

MetaGraph Online

The search engine MetaGraph Online has a clean and intuitive graphical web user interface (UI; Supplementary Fig. 11), enabling the user to paste an arbitrary sequence and search it against a selected index. Restrictions to search multiple sequences at once are only in place to limit hosting costs. By default, the search is performed through basic k-mer matching. For greater sensitivity, it is also possible for all indexes to additionally align the searched sequence to the annotated graph. If k-mer coordinates or counts are represented in the queried index, the web UI allows retrieving them for the query sequence as well. In addition to user interaction with the web interface, MetaGraph Online provides a web API that allows connecting to the respective servers via their endpoints. That is, any of the hosted indexes can be queried through Python API by connecting to the respective endpoint of the server hosting that index (Extended Data Fig. 1a).

Indexing public read sets from the NCBI SRA

We have split the set of all read sets from SRA (excluding sequencing technologies with high read error rates, see more details in the sections below) into different groups of related samples and constructed a separate MetaGraph index for each group. The groups were defined either using dataset definitions of previous work32 or using the metadata provided by NCBI SRA. As a result, we constructed the following six datasets: SRA-MetaGut, SRA-Microbe, SRA-Fungi, SRA-Plants, SRA-Human and SRA-Metazoa. All of these datasets are listed in the supplementary tables available at GitHub (https://github.com/ratschlab/metagraph_paper_resources) and make up a total of 4.4 Pbp and 2.3 PB of gzip-compressed input sequences, while the indexes make up only 11.6 TB, which corresponds to the overall compression ratio of 193×, or 376 bp per byte.

For constructing the SRA-Microbe index, we used cleaned contigs downloaded from the European Bioinformatics Institute FTP file server provided as supplementary data to BIGSI32. Thus, no additional data preprocessing was needed for this dataset.

For all other datasets, each sample was either transferred and decompressed from NCBI’s mirror on the Google Cloud Platform or, if not available on Google Cloud, downloaded from the ENA onto one of our cloud-compute servers and subjected to k-mer counting with KMC3 (ref. 62) to generate the full k-mer spectrum. If the median k-mer count on the spectrum was less than 2, the sample was further processed without any cleaning. Otherwise, the sample was subjected to cleaning with the standard graph cleaning procedure implemented in MetaGraph, with pruning tips shorter than 2k (for all these datasets k was set to 31) and using an automatically computed k-mer abundance threshold for pruning low-coverage unitigs, with a fallback threshold value of 3. This cleaning procedure was applied for SRA-Fungi, SRA-Plants, SRA-Human and SRA-Metazoa.

For read sets of the SRA-MetaGut dataset, the sequencing depth was typically low, and we therefore applied a more lenient cleaning strategy. Namely, we switched off the singleton filtering (that is, we initially kept all k-mers that appear only once) on the k-mer spectrum and used a constant cleaning threshold of 2 during graph cleaning to remove all unitigs with a median k-mer abundance of 1.

For each dataset, we first constructed a joint canonical graph with k = 31 (including for each indexed k-mer its reverse complement) from the cleaned contigs and then transformed it into a primary graph (storing only one form of each k-mer and representing the other implicitly). Finally, using the same cleaned contigs, we annotated the joint primary graph with sample IDs to construct the annotation matrix. Each input sample thereby formed an individual column of the annotation matrix. The annotation matrix was then transformed to the RowDiff<Multi-BRWT> representation for higher compression and faster queries. The graph was, in turn, transformed to the small representation. The exact commands and scripts are available at GitHub (https://github.com/ratschlab/metagraph_paper_resources).

SRA subset composition

Here we provide a detailed description of each of the 6 datasets.

SRA-Microbe

This dataset was first used to construct the BIGSI index32. Consisting of 446,506 microbial genome sequences, this dataset once posed the largest indexed set of raw sequencing data. However, at the time of performing our experiments, it represented only an outdated snapshot of the corresponding part of the SRA. Nevertheless, we decided to keep the same sequence set for this work to enable direct comparison and benchmarking. A complete list of SRA IDs contained in this set is available as file TableS1_SRA_Microbe.tsv.gz (with further information available in TableS10_SRA_Microbe_McCortex_logs.tsv.gz and TableS11_SRA_Microbe_no_logs.tsv) at GitHub (https://github.com/ratschlab/metagraph_paper_resources). For details on how the set of genomes was selected, we refer to the original publication32.

SRA-Fungi

This dataset contains all samples from the SRA assigned to the taxonomic ID 4751 (Fungi) specifying the library sources GENOMIC and METAGENOMIC and excluding samples using platforms PACBIO_SMRT or OXFORD_NANOPORE. In total, this amounts to 149,607 samples processed for cleaning. Out of these, 138,158 (92.3%) could be successfully cleaned and were used to assemble the final MetaGraph index. All sample metadata were requested from NCBI SRA on 25 September 2020 using the BigQuery tool on the Google Cloud Platform.

SRA-Plants

This dataset contains all samples from the SRA assigned to the taxonomic ID 33090 (Viridiplantae), specifying the library source GENOMIC and excluding samples using platforms PACBIO_SMRT or OXFORD_NANOPORE. In total, this amounts to 576,226 samples processed for cleaning. Out of these, 531,736 (92.3%) could be successfully cleaned and were used to assemble the final MetaGraph index. All sample metadata were requested from NCBI SRA on 17 August 2020 using the BigQuery tool on the Google Cloud Platform.

SRA-Human

This dataset contains all samples of assay type WGS, AMPLICON, WXS, WGA, WCS, CLONE, POOLCLONE, or FINISHING from the SRA assigned to the taxonomic ID 9606 (Homo sapiens) specifying the library source GENOMIC and excluding samples using platforms PACBIO_SMRT or OXFORD_NANOPORE. In total, this amounts to 454,252 samples processed for cleaning. Out of these, 436,502 (96.1%) could be successfully cleaned and were used to assemble the final MetaGraph index. All sample metadata were requested from NCBI SRA on 12 December 2020 using the BigQuery tool on the Google Cloud Platform.

SRA-Metazoa

This dataset contains all samples from the SRA assigned to the taxonomic ID 33208 (Metazoa) specifying the library source GENOMIC and excluding samples using platforms PACBIO_SMRT or OXFORD_NANOPORE. In total, this amounts to 906,401 samples processed for cleaning. Out of these, 805,239 (88.8%) could be successfully cleaned and were used to assemble the final MetaGraph index. All sample metadata were requested from NCBI SRA on 17 September 2020 using the BigQuery tool on the Google Cloud Platform.

SRA-MetaGut (human gut microbiome)