- Article

- Open access

Nature volume 645, pages 702–711 (2025)

Abstract

Since the advent of computing, humans have sought computer input technologies that are expressive, intuitive and universal. While diverse modalities have been developed, including keyboards, mice and touchscreens, they require interaction with a device that can be limiting, especially in on-the-go scenarios. Gesture-based systems use cameras or inertial sensors to avoid an intermediary device, but tend to perform well only for unobscured movements. By contrast, brain–computer or neuromotor interfaces that directly interface with the body’s electrical signalling have been imagined to solve the interface problem1, but high-bandwidth communication has been demonstrated only using invasive interfaces with bespoke decoders designed for single individuals2,3,4. Here, we describe the development of a generic non-invasive neuromotor interface that enables computer input decoded from surface electromyography (sEMG). We developed a highly sensitive, easily donned sEMG wristband and a scalable infrastructure for collecting training data from thousands of consenting participants. Together, these data enabled us to develop generic sEMG decoding models that generalize across people. Test users demonstrate a closed-loop median performance of gesture decoding of 0.66 target acquisitions per second in a continuous navigation task, 0.88 gesture detections per second in a discrete-gesture task and handwriting at 20.9 words per minute. We demonstrate that the decoding performance of handwriting models can be further improved by 16% by personalizing sEMG decoding models. To our knowledge, this is the first high-bandwidth neuromotor interface with performant out-of-the-box generalization across people.

Main

Interactions with computers are increasingly ubiquitous, but existing input modalities are subject to persistent trade-offs between portability, throughput and accessibility. While keyboard text entry, texting, trackpads and mice are important, our aim is to enable computation in settings in which these conventional methods are not feasible, for example, seamless input to mobile computing with smartphones, smart watches or smart glasses.

A neural interface that can obviate trade-offs and provide seamless interaction between humans and machines has long been sought, but has been slow to emerge. In recent years, intracortical neural interfaces that directly interface with brain tissue have advanced the premise2,5, demonstrating translation of thought into language at bandwidth rates comparable with conventional computer input systems3,4. However, existing high-bandwidth interfaces require invasive neurosurgery, and the models that translate neural signals to digital inputs remain bespoke.

Non-invasive approaches relying on recording of electroencephalogram (EEG)6 signals at the scalp have offered more generality across people, for example, for gaming7, but EEG can require lengthy setup, and the low signal-to-noise ratio of these devices has limited their use8.

Regardless of the modality, issues of signal bandwidth, generalization across populations and the desire to avoid per-person or session-to-session calibration remain key technical hurdles in the field of brain–computer interfaces (BCIs)5,9,10,11,12.

To build an interface that is both performant and accessible, we focused on an alternative class of non-invasive neuromotor interfaces based on reading out the electrical signals from muscles using electromyography (EMG). Myoelectric potentials are produced by the summation of motor unit action potentials (MUAPs) and represent a window into the motor commands issued by the central nervous system. Surface EMG (sEMG) recordings offer a high signal-to-noise ratio by amplifying neural signals in the muscle13, enabling real-time single-trial gesture decoding. The nature of the sEMG signal lends itself naturally to human–computer interface (HCI) applications because it is not subject to problems that vex computer-vision-based approaches, such as occlusion, insufficient lighting or gestures with minimal movement. Indeed, sEMG has been deployed for diverse applications in clinical settings14,15, for diagnosis and rehabilitation16, as well as prosthetic control1,17,18.

However, current EMG systems, including those for prosthetic control17, have many limitations for wide-scale use and deployment. Laboratory systems are generally encumbered with wires to external power sources and amplifiers, and placed over uncomfortable locations such as the target muscle belly. Commercially available EMG-based neuromotor interfaces have been historically challenging to control19, relating to myriad technical issues such as poor robustness across postures20, a lack of standardized data21, electrode displacement22, and a lack of both cross-session23 and cross-user generalization24. More recently, deep learning techniques have shown some success at addressing these limitations25, but a general lack of available EMG data and low sample sizes are believed to limit their efficacy21.

To validate the hypothesis that sEMG can provide an intuitive and seamless computer input that works in practice across a population, we developed and deployed robust, non-invasive hardware for recording sEMG at the wrist. We chose the wrist because humans primarily engage the world with their hands, and the wrist provides broad coverage of sEMG signals of hand, wrist and forearm muscles while affording social acceptability26,27. Our sEMG research device (sEMG-RD) is a dry-electrode, multichannel recording platform with the ability to extract single putative MUAPs. It is comfortable, wireless, accommodates diverse anatomy and environments and can be donned or doffed in a few seconds.

To transform sEMG into commands that drive computer interactions, we architected and deployed neural networks trained on data from thousands of consenting human participants. We also created automated behavioural-prompting and participant-selection systems to scale neuromotor recordings across a large and diverse population. We demonstrated the ability of our sEMG-RD to drive computer interactions such as one-dimensional (1D) continuous navigation (akin to pointing a laser-pointer based on wrist posture), gesture detection (finger pinches and thumb swipes) and handwriting transcription.

The sEMG decoding models performed well across people without person-specific training or calibration. In open-loop (offline) evaluation, our sEMG-RD platform achieved greater than 90% classification accuracy for held-out participants in handwriting and gesture detection, and an error of less than 13° s⁻¹ on wrist angle velocity decoding. On computer-based tasks that evaluate these interactions in closed-loop (online), we achieved 0.66 target acquisitions per second in wrist-based continuous control, 0.88 acquisitions per second on discrete gestures and 20.9 words per minute (WPM) with handwriting.

To our knowledge, this is the highest level of cross-participant performance achieved by a neuromotor interface. Our approach opens up directions of sEMG-based HCI research and development while solving many of the technical problems fundamental to current and future BCI efforts.

Scalable sEMG recording platform

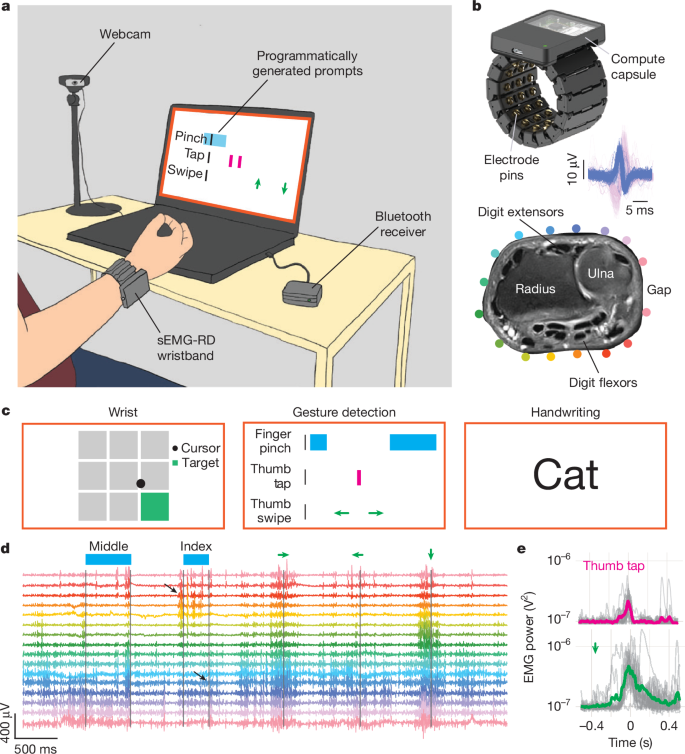

To build generic sEMG decoding models capable of predicting user intent from neuromuscular signals, we developed a hardware and software platform capable of quickly and robustly coupling the neuromotor interface with computers across a diverse population (Fig. 1a). Consenting participants (Methods) were seated in front of a computer while wearing the sEMG-RD at the wrist; the sEMG-RD is a dry-electrode, multichannel recording device with a high sample rate (2 kHz) and low noise (2.46 μVrms), and is compatible with everyday use26,27 (Fig. 1a and Methods). We fabricated the device in four different sizes to ensure coverage across a range of wrist circumferences. The device streamed wirelessly over secure Bluetooth protocols and provided a battery life of more than 4 h.

a, Overview of sEMG data collection. A participant wears the sEMG wristband, which communicates with a computer through a Bluetooth receiver. The participant is prompted to perform diverse movements of the hand and wrist. A webcam captures their hand and wrist, excluding the face. Between sessions within a single day, the participants remove and slightly reposition the sEMG wristband to enable generalization across different recording positions. b, The sEMG wristband consists of 48 electrode pins configured into 16 bipolar channels with the sensing axis aligned with the proximal–distal axis of the forearm and the remainder serving as shield and ground electrodes (top). A 3D printed housing encloses cabling and analogue amplifiers for each channel. A compute capsule digitizes the signal and streams sEMG data using Bluetooth. Inset: overlay of 62 and 72 individual instances of two putative MUAPs evoked by subtle thumb (blue) and pinky extension (pink) movements, respectively, from a single sEMG channel (Methods). Bottom, a proton-density-weighted axial plane magnetic resonance imaging (MRI) scan of the wrist; relevant bone and muscle landmarks are labelled. The coloured dots indicate the approximate position of electrodes, with an adjustable gap between electrodes placed over an area of low muscle density. c, Schematic of the prompters for the three tasks (Methods and Extended Data Fig. 4). In the wrist task, the participants controlled a cursor using wrist movements tracked in real time with motion capture. In the discrete-gesture task, gesture prompts scrolled from right to left. In the handwriting task, the participants wrote words presented on the screen. 
d, Representative sEMG signals, high-pass filtered at 20 Hz, recorded during performance of discrete gestures reveal intricate patterns of activity across multiple channels accompanying each gesture, with prompt timings above (for example, ‘middle’ indicates middle pinch, and the green left arrow indicates a leftward thumb swipe). Channel colouring corresponds to electrode locations in b. The black arrows highlight activation of flexors and extensors during an index-to-thumb pinch and release, respectively. e, Representative examples of variability in gestural sEMG activations across gesture instances (thumb taps (top) and downward thumb swipe (bottom)). The grey lines show the instantaneous high-pass-filtered sEMG power, summed across channels, for all instances of a gesture during a single band placement. The bold traces show the average. The mean was subtracted from all traces, and the power was offset by 10⁻⁷ V² to plot on a logarithmic scale without visually exaggerating the baseline variance.

We optimized the sEMG-RD for recording subtle electrical potentials at the wrist (Extended Data Fig. 1). We manufactured the device in four sizes, with a circumferential interelectrode spacing of 10.6, 12, 13 or 15 mm, approaching the spatial bandwidth of EMG signals at the forearm (~5–10 mm)28, while minimizing the device’s form factor. We placed the gap in electrodes to allow for tightening adjustments along the ulna bone, where muscles are reduced in density. Together, this enabled the sensing of putative MUAPs across the wrist during low-movement conditions (Fig. 1b and Extended Data Fig. 2).

To collect training data for models, we recruited an anthropometrically and demographically diverse group of participants (162–6,627 participants, depending on the task; Extended Data Fig. 3) to perform three different tasks: wrist control, discrete-gesture detection and handwriting. In all cases, the participants wore sEMG bands on their dominant-side wrist and were prompted to perform actions using custom software run on laptops (Fig. 1c). For wrist control, the participants controlled a cursor, the position of which was determined from wrist angles tracked in real time using motion capture. During the discrete-gesture detection task, a prompter instructed participants to perform nine distinct gestures with a randomized order and intergesture interval. During the handwriting task, the participants were prompted to hold their fingers together (as if holding an imaginary writing implement) and ‘write’ the prompted text. Further training data protocol details are provided in the Methods.

We designed the data-collection system to facilitate supervised training of sEMG decoding models. During data collection, we recorded both sEMG activity and the timestamps of labels on the prompter using a real-time processing engine. We designed the engine to be used during recording and model inference to reduce online–offline shift (Methods). To precisely align prompter labels to actual gesture times, which may vary due to a participant’s reaction time or compliance, we developed a time-alignment algorithm that enabled post hoc inference of gesture event times (Methods).

Examination of raw sEMG traces revealed highly structured patterns of activity (Fig. 1d). Discrete gestures evoked patterned activity across a set of channels that roughly corresponded to the position of flexor and extensor muscles for the corresponding movement (Fig. 1d and Extended Data Fig. 1c). Fine differences in sEMG power across instances of a given gesture performed during a session (Fig. 1e) highlight the power of the platform in acquiring repeated time-aligned examples for supervised learning and some of the challenges facing generalization of EMG decoders.

Single-participant models do not generalize

It is well known across BCI modalities that both across-session and across-user generalization are difficult problems5,11,24,29. We wanted to evaluate the difficulty of these generalizations for sEMG decoders. Inspection of the raw data revealed pronounced variability in the sEMG for the same action across different participants and band donnings (which we refer to as sessions), reflective of variations in sensor placement, anatomy, physiology and behaviour that make generalization challenging (Fig. 2a,b). As an example of this variability, we found that the cosine distances between waveforms of the same gesture across sessions and users heavily overlapped with the distribution of distances between waveforms of different gestures (Extended Data Fig. 5a), and intermingled even in a nonlinear embedding of gesture distances (Fig. 2b), highlighting the challenge of the generalization problem.
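The overlap between same-gesture and different-gesture distances can be illustrated with a minimal sketch. It assumes each gesture instance has already been reduced to a fixed-length waveform vector; the function names `cosine_distance` and `pairwise_distances` are hypothetical and are not taken from the study's code, and the actual feature extraction is described in the Methods.

```python
import math


def cosine_distance(u, v):
    """Return 1 - cosine similarity between two equal-length waveform vectors."""
    if len(u) != len(v):
        raise ValueError("waveforms must have the same length")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("cosine distance is undefined for an all-zero waveform")
    return 1.0 - dot / (norm_u * norm_v)


def pairwise_distances(waveforms, labels):
    """Split all pairwise distances into same-gesture and different-gesture lists."""
    same, different = [], []
    for i in range(len(waveforms)):
        for j in range(i + 1, len(waveforms)):
            d = cosine_distance(waveforms[i], waveforms[j])
            # Pairs sharing a gesture label go to `same`, all others to `different`.
            (same if labels[i] == labels[j] else different).append(d)
    return same, different
```

If the two returned lists have heavily overlapping distributions, waveform similarity alone cannot separate gestures across sessions and users, which is the situation reported here.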

a, Cross-participant (columns) and cross-session variability (light lines) in gestural sEMG for four discrete gestures (different rows and colours) across seven participants. Four of the possible nine gestures are shown for clarity. The light lines show the high-pass-filtered sEMG power averaged across all channels and all gesture instances during a single band placement. The bold lines correspond to the average across all band placements. b, t-SNE embedding of sEMG activations (Methods) across participants for the four different gestures in a. Gesture colour map as in a, with shading reflecting different participants (n = 20). Each dot reflects an individual gestural instance. c,d, Single-participant models trained and tested on the same participant (c) or different participants (d). Generalization across sessions improves as more training data are used. Generalization across participants remains poor even when more training data are used. Statistical analysis was performed using two-sided Wilcoxon signed-rank tests; all pairwise comparisons are significant; P < 10⁻¹⁰. n = 100 single-participant models. The boxes show the median (centre line) and lower and upper quartiles (box limits), and the whiskers extend to ±1.5 × interquartile range. e–g, The decoding error of models trained to predict wrist angle velocity (e), classify nine discrete gestures (f) and classify handwritten characters (g) as a function of the training set size. Data are the mean ± s.e.m. decoding error evaluated on a test set of held-out participants (n = 22 for wrist, 100 for discrete gestures and 50 for handwriting) (Methods). The dashed lines and inset equations show fitted scaling curves (N is measured in units of hundreds of participants and D in millions of parameters). For discrete gestures, the open circle represents varying numbers of sessions per participant (Methods).

To evaluate whether performant sEMG decoders can be obtained across sessions for a given participant, we trained single-participant models for 100 participants who had collected at least five sessions on the discrete-gesture-classification task. For each participant, we held out one session for evaluation and then trained models on two, three or four of the remaining sessions (Methods). As an offline evaluation metric, we used the false-negative rate (FNR), defined as the proportion of prompted gestures for which the correct gesture was not detected by the model.
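The FNR definition can be made concrete with a short sketch. It assumes that model detections have already been matched to prompts (for example, within a time window around each prompt); that matching step, the function name `false_negative_rate` and the data layout are illustrative assumptions rather than the procedure given in the Methods.

```python
def false_negative_rate(prompted, detected):
    """Fraction of prompts whose gesture is absent from the matched detections.

    prompted: list of prompted gesture labels, one per prompt.
    detected: list of collections holding the labels detected for each prompt
              (assumed to be pre-matched to prompts; hypothetical layout).
    """
    if len(prompted) != len(detected):
        raise ValueError("need one detection collection per prompt")
    if not prompted:
        raise ValueError("FNR is undefined without any prompts")
    # A prompt counts as a miss when its label was not detected at all.
    misses = sum(1 for p, d in zip(prompted, detected) if p not in d)
    return misses / len(prompted)


# Usage: two of the four prompts are missed, so the FNR is 0.5.
fnr = false_negative_rate(
    ["pinch", "swipe", "tap", "pinch"],
    [{"pinch"}, set(), {"swipe"}, {"pinch", "tap"}],
)
```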

Single-participant models trained and tested on the same participant achieved offline performance that improved substantially with more training data (Fig. 2c). By contrast, models trained on one participant and then tested on another showed substantially worse performance and benefited only mildly from an increasing amount of training data (Fig. 2d), indicating a greater domain shift across people compared with across sessions. For 98% of participants, the model trained on their own data performed better compared with all other single-participant models (Extended Data Fig. 5b).

We wondered whether cross-participant generalization was difficult because there was structure or clusters across people, or whether every participant required a relatively unique single-participant model. The former could motivate an approach where a set of models trained on a small population (within each cluster) could achieve a high level of population coverage. The absence of overt structure in a t-distributed stochastic neighbour embedding (t-SNE; Extended Data Fig. 5c) of the average model transfer FNR between participant pairs suggests that there are no obvious participant clusters. Moreover, there are no people who exhibit the ability to generate performant models for other people, nor are there any people for whom other people’s models always perform well (Extended Data Fig. 5d).

Offline evaluation of generic models

To avoid the need to train and tune models for each individual, we trained generic models that are able to generalize to entirely held-out participants. To do this, we collected data from hundreds to thousands of data-collection participants for each task. These data were then used to train neural network decoding models. In each case, we used preprocessing techniques and network architectures designed for processing multidimensional time series (Methods and Extended Data Fig. 6): multivariate power frequency (MPF) features and a long short-term memory (LSTM) layer for the wrist task, a 1D convolution layer followed by an LSTM layer for the discrete-gesture task, and MPF features and a conformer30 for the handwriting task, which we anticipated would require an architecture with richer context information (provided through the attention mechanism).

Previous studies on large language models31 and vision transformers32 have shown that performance shows power-law scaling with the amount of training data and the model size. To investigate whether such scaling holds for sEMG decoding, we examined the offline decoding performance of models trained on data from varying numbers of participants (Fig. 2e–g). Across all tasks, we observed reliable performance improvements as a function of the increasing number of participants in the training corpus. Consistent with other domains, empirical performance follows a power law both as a function of parameters and data quantity, with the parameters of the scaling relationship shared across architecture sizes (Methods). The largest models showed promising offline performance.
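As an illustration only, a joint power law of this kind is often written in the following additive form. The symbols E, A, B, α and β are assumed here for exposition, and N and D follow the convention of the Fig. 2 legend (participants and parameters, respectively); the fitted functional form and coefficients are those given in the Fig. 2 insets and the Methods, not this sketch.

```latex
\mathrm{error}(N, D) \;\approx\; E + \frac{A}{N^{\alpha}} + \frac{B}{D^{\beta}},
\qquad A, B, \alpha, \beta > 0
```

Under such a form, the error falls along a straight line on log–log axes in each variable until it approaches the irreducible term E, and sharing α and β across architecture sizes corresponds to one set of exponents describing all model sizes.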

Online evaluation of generic models

Ultimately, closed-loop performance of our sEMG decoding models is the critical evaluation that confirms their viability as a computer interface. For each task, closed-loop evaluation was performed on naive participants who had not previously had meaningful experience using any sEMG decoder on that task (n = 17 (wrist), n = 24 (discrete gestures) and n = 20 (handwriting)). The core tasks involved using the wrist-angle decoder to continuously control a 1D cursor to acquire targets, the discrete-gesture decoder to navigate and perform actions in a discrete lattice, and the handwriting decoder to write out prompted phrases that were then visualized on the screen (Fig. 3a–c; the evaluation tasks are described in the Methods; see Supplementary Videos 1–3 for representative performance, and Extended Data Fig. 7 for a depiction of the task dynamics). For each task, the participants performed three distinct blocks of trials to allow for characterization of learning (50 trials per block for wrist, 10 trials for discrete gestures and handwriting), with the first block always being a practice block that allowed them to adapt to the controller.

For all of the tasks, we observed learning effects, whereby the participants improved with experience. During the practice block, the supervisor gave verbal coaching (for example, “swipe faster” or “write more continuously”) as needed to improve participants’ performance. The participants were typically able to perform each task on their own after the initial practice block but, for the discrete gestures and handwriting tasks, we found that coaching during the evaluation block was valuable for a subset of participants on trials that they struggled to complete (Methods).

Every participant was able to complete every trial of the three tasks. For wrist control, all of the participants were able to successfully navigate to each target and stay on the target for 500 ms to acquire it. Performance was characterized by time to target acquisition (Fig. 3d) and dial-in time, which measures the time taken to acquire the target after having exited it prematurely (Fig. 3e; definitions are provided in the Methods). We found learning effects in which participants improved in both of these metrics from the practice block to the evaluation blocks, and the majority of them subjectively reported that the cursor moved in the intended direction >80% of the time (Extended Data Fig. 8e).

a–c, Schematics of the three closed-loop tasks. a, Horizontal cursor (wrist): the participants control a cursor (red circle) to acquire a target (green rectangle) in a row of possible targets (grey rectangles). b, Discrete grid navigation: the participants use thumb swipe gestures to navigate, and perform activation gestures prompted by coloured shapes. c, Text entry: the participants handwrite prompted text. (Methods, Extended Data Fig. 7 and Supplementary Videos 1–3). d,e, The performance of n = 17 naive test participants using the wrist decoder in the horizontal cursor task. d, The mean target-acquisition time (excluding the 500 ms hold) in each task block. e, The mean dial-in time in trials in which the cursor prematurely exited the target before completing the hold. Inset: the fraction of trials with premature exits. The dashed red and orange lines in panels d and e show the median task performance with the ground truth wrist angles measured by motion capture (n = 162, with no previous task exposure) and with the native MacBook trackpad (n = 17, with previous task exposure), respectively (Methods). f–h, The performance of n = 24 naive test participants using the discrete-gesture decoder in the grid navigation task. f, The fraction of prompted gestures in each block for which the first detected gesture matches the prompt (first-hit probability). g, The mean gesture completion rate in each task block. The dashed red lines in panels f and g show the median task performance of a different set of n = 23 participants using a gaming controller (Methods). h, Confusion rates (normalized to expected gestures) in evaluation blocks, averaged across participants. Early release denotes a hold of less than 500 ms. i,j, The performance of n = 20 naive test participants using the handwriting decoder on the text entry task. i, The online CER in each block. j, The WPM in each block. 
The dashed red line shows the median WPM of a different set of n = 75 participants handwriting similar phrases in open loop without a pen (Methods). For each participant, the online CER and WPM are calculated as the median over trials in each block. For all panels, statistical analysis was performed using two-tailed paired sample Wilcoxon signed-rank tests; *P < 0.05, **P < 0.005; not significant (NS), P > 0.05. The boxes show the median (centre line) and lower and upper quartiles (box limits), and the whiskers extend to ±1.5 × interquartile range. The printed numbers show the median and outliers are marked with open circles. For each baseline device, the dashed lines show the median over participants and the shading shows the 95% confidence intervals estimated using the reverse percentile bootstrap with 10,000 resamples.
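The reverse percentile bootstrap used for the baseline confidence intervals can be sketched as follows. This is a minimal standard-library illustration of the general technique applied to a median; the function names, the linear-interpolation quantile and the fixed random seed are assumptions and do not reproduce the study's analysis code.

```python
import random
import statistics


def quantile(sorted_values, q):
    """Linear-interpolation quantile of an already sorted list, with 0 <= q <= 1."""
    pos = q * (len(sorted_values) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(sorted_values) - 1)
    frac = pos - lo
    return sorted_values[lo] * (1 - frac) + sorted_values[hi] * frac


def reverse_percentile_ci(values, n_resamples=10_000, alpha=0.05, seed=0):
    """Reverse (basic) percentile bootstrap interval for the median of `values`."""
    rng = random.Random(seed)  # fixed seed (an assumption) for reproducibility
    estimate = statistics.median(values)
    # Median of each resample drawn with replacement from the observed data.
    boot = sorted(
        statistics.median(rng.choices(values, k=len(values)))
        for _ in range(n_resamples)
    )
    q_lo = quantile(boot, alpha / 2)
    q_hi = quantile(boot, 1 - alpha / 2)
    # Reflect the bootstrap quantiles about the point estimate.
    return 2 * estimate - q_hi, 2 * estimate - q_lo
```

Unlike the plain percentile interval, which reports the bootstrap quantiles directly, this variant mirrors them around the observed median, so a bootstrap distribution skewed upwards yields an interval extended downwards.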

For discrete gestures, all of the participants were able to complete the task by navigating with the swipe gestures and performing the activation gestures (thumb tap, index pinch and hold, middle pinch and hold) when required. Performance on the discrete-gesture task was characterized by a measure of how often the first detected gesture following a prompt matched the prompted gesture (Fig. 3f) as well as how long it took to complete each prompted gesture (Fig. 3g). The confusion matrix across discrete gestures is shown in Fig. 3h. Note that errors on this task (reflected in both confusions and first-hit probabilities) are a combination of model decoding errors as well as behavioural errors, whereby the participant performed the wrong gesture. This is evident in the fact that confusions were also present when performing this task using a gaming controller rather than an sEMG decoder (Extended Data Fig. 8b–d). Index and middle holds were sometimes released too early (that is, the detected release followed the detected press less than 0.5 s later), and this was indicated in the confusion matrix as an ‘early release’.

The performance of the closed-loop handwriting decoder was evaluated by participants entering prompted phrases and was characterized by the online character error rate (CER; Fig. 3i) and speed of text entry (Fig. 3j). Improvements from practice to evaluation blocks indicate that participants were able to use the practice trials to discover handwriting movements that were effective for writing accurately with the decoder.
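For readers unfamiliar with the metric, a character error rate is commonly computed as the Levenshtein (edit) distance between the prompted and decoded text, divided by the prompt length. The sketch below follows that common definition as an assumption; the exact online CER computation used in the study is specified in the Methods, and the function names are hypothetical.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance: minimum insertions, deletions and substitutions."""
    prev = list(range(len(hyp) + 1))  # distances from the empty reference prefix
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # delete a reference character
                            curr[j - 1] + 1,      # insert a hypothesis character
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def character_error_rate(prompt, decoded):
    """Edit distance normalized by prompt length (assumed CER definition)."""
    if not prompt:
        raise ValueError("CER is undefined for an empty prompt")
    return edit_distance(prompt, decoded) / len(prompt)
```

With this definition, decoding the prompt ‘howdy!’ as ‘howdy’ gives one deletion over six characters, a CER of about 17%.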

For each of these interactions, we also provide performance metrics for a baseline interface that does not rely on decoding sEMG (dashed horizontal lines in each panel). For 1D continuous control, we find that a MacBook trackpad and motion capture ground-truth wrist-based control yield shorter median acquisition times of 0.68 s and 0.96 s, respectively, compared with 1.51 s for the sEMG wrist decoder. For discrete grid navigation, using a Nintendo Joy-Con game controller showed a median gesture completion rate of 1.45 completions per second versus 0.88 with the sEMG discrete-gesture decoder. For prompted text entry, the participants performed open-loop handwriting on a surface, without a pen, at 25.1 WPM, higher than the 20.9 WPM achieved with the sEMG handwriting decoder (and below the 36 WPM achievable with a mobile phone keyboard33). While our sEMG decoders therefore have room to improve relative to these baseline devices, they are sufficiently performant to reliably complete each task, while not requiring the use of hand-encumbering devices or external instrumentation.

Representations learned by the discrete-gesture model

To develop an intuition about how the generic sEMG decoders function, we visualized the representations learned by the intermediate layers of the discrete-gestures decoder. The network architecture consisted of a 1D convolutional layer, followed by three recurrent LSTM layers (Fig. 4a) and, finally, a classification layer.

a, Schematic of network architecture. Conv1d denotes a 1D convolutional layer. The final linear readout and intermediate normalization layers are not shown (Methods). b, Representative convolutional filter weights (16 input channels × 21 timesteps) from the first layer of the trained model. c, Example heat maps of the normalized voltage across all 16 channels for putative MUAPs recorded with the sEMG wristband (Methods and Extended Data Fig. 2) after high-pass filtering (Methods). d,e, The frequency response of the channel with maximum power (d) and the root mean square (RMS) power per channel (e), both normalized to their respective peaks, for each example convolutional filter (blue lines) and putative MUAP (orange lines) from b and c, respectively (see also Extended Data Fig. 9). For comparison, the dashed black lines show these curves calculated over an entire recording session, averaged over ten randomly sampled sessions from the model training set. For d, we used the mean temporal frequency response over all 16 sEMG-RD channels. The sharp frequency response cut off at 40 Hz is from high-pass filtering (Methods). f–h, Principal component analysis projection of LSTM representations of 500 ms sEMG snippets aligned with instances of each discrete gesture, from three participants held out from the training set, each with three different band placements. Each row shows the representation of each LSTM layer. Each column shows the same data, coloured by discrete gesture category (f), participant identity and band placement (g) or sEMG RMS power (h) at the time of the gesture. i, The proportion of total variance accounted for by each variable, for each layer (n = 50 test participants; Methods). Statistical analysis was performed using two-tailed paired sample t-tests; ***P < 0.001. The error bars (barely visible) show the 95% Student’s t confidence interval for the mean.

To interpret the convolution layer, we visualized representative spatiotemporal filters (Fig. 4b) alongside putative MUAPs (Fig. 4c) detected using the wristband during low-movement conditions (Extended Data Fig. 2). The filters appear to form a coarse basis set spanning the statistics of MUAPs; specifically, Fig. 4d,e shows the general similarity in temporal frequency content and spatial envelope between the putative MUAPs and emergent convolutional filters (Extended Data Fig. 9).

To examine the intermediate LSTM representations, we visualized the changing representational geometry across layers. We analysed the representations of four properties: gesture category, participant identity, band placement and gesture-evoked sEMG power (a proxy for behavioural variability over executions of the same gesture). Figure 4f–h shows LSTM hidden-unit activity at each layer evoked by snippets of sEMG activity triggered on discrete-gesture events, coloured by one of the four aforementioned properties. By examining the dominant principal components (PCs), we observed a trend of gesture category becoming more separable deeper in the network as the representations of each gesture become more tightly clustered and less or equally sensitive to nuisance variables (participant identity, band placement and power). With increasing depth in the network, gesture category accounted for an increasing proportion of the variance in the representation of each layer (Fig. 4i and Methods). In summary, the network learns to solve this task by progressively shaping its representation of the sEMG to be more and more invariant to nuisance variables.
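One simple way to quantify how much of a representation's variance a categorical variable accounts for is the ratio of between-group to total sum of squares. The sketch below implements that ratio as an illustrative stand-in; it is not the variance-partitioning procedure described in the Methods, and the function name and data layout are assumptions.

```python
def variance_explained(vectors, labels):
    """Between-group sum of squares divided by total sum of squares.

    vectors: list of equal-length hidden-state vectors (one per gesture instance).
    labels:  list of group labels (for example, gesture category), one per vector.
    """
    n, dim = len(vectors), len(vectors[0])
    grand = [sum(v[k] for v in vectors) / n for k in range(dim)]
    total = sum((v[k] - grand[k]) ** 2 for v in vectors for k in range(dim))
    if total == 0.0:
        raise ValueError("representation has zero variance")
    groups = {}
    for v, lab in zip(vectors, labels):
        groups.setdefault(lab, []).append(v)
    between = 0.0
    for members in groups.values():
        mean = [sum(v[k] for v in members) / len(members) for k in range(dim)]
        # Each group contributes its size times the squared offset of its mean.
        between += len(members) * sum((mean[k] - grand[k]) ** 2 for k in range(dim))
    return between / total
```

Applied per layer with gesture category as the label, a value rising towards 1 with depth would correspond to the trend described above, while the same ratio computed with participant identity or band placement as the label would stay flat or fall.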

Personalizing handwriting models improves performance

While generic models allow a neuromotor interface to be used with little to no setup, performance can be improved for a particular individual by personalizing the generic model to data from that participant. Personalization has shown benefits to accuracy for related problems in automatic speech recognition in language models34 and acoustic models35 as well as speech enhancement36. We explored personalization for the handwriting task through the fine-tuning of all of the generic model’s parameters using additional supervised data from a set of 40 held-out participants not included in the training data of the generic model. For each participant, we held out three sessions of data (Methods) and then trained personalized models for 300 epochs without early stopping on varying amounts of data from their remaining sessions (Fig. 5a).

a, Schematic of the supervised handwriting decoder personalization. Predictions before and after personalization are shown above and below example prompts (such as ‘howdy!’) for two participants (left and right). The green and purple font denotes correct and incorrect character predictions, respectively. b, The mean performance (n = 40 test participants) of models pretrained on varying numbers of participants (red line) and fine-tuned on varying amounts of personalization data for each test participant (shades of blue). The dashed lines show power law fits (Methods). c, The relative reduction in offline CER that personalization provides beyond a given generic model, for varying amounts of pretraining participants and personalization data. The dashed lines show the relative improvements calculated from the power law fits in b. d, The relative increase in the number of pretraining participants that matches CER reduction from fine-tuning on varying amounts of personalization data (Methods), for generic models with varying amounts of pretraining participants. A value of 1 indicates doubling the number of pretraining participants. The dashed lines show the relative increases calculated from the power law fits in b. e, The relative reduction in offline CER (beyond the 60.2-million-parameter 6,527-participant pretrained generic model) achieved for each test participant (rows) by personalizing on 20 min of data from every other test participant (columns), sorted by the diagonal values. f, The relative reduction in CER achieved for each test participant (n = 40) by fine-tuning on 20 min of personalization data, as a function of the pretrained generic model CER for that test participant (60.2-million-parameter model), across various numbers of pretraining participants. Improvements from personalization are correlated with the CER of the pretrained generic model. 
We show the range of Pearson correlation coefficients across numbers of pretraining participants and the median P value (two-sided test); the maximum P value over all fits is 0.0035.

Fine-tuning generic models improved their average offline CER for all amounts of additional data and for all numbers of pretraining participants (Fig. 5b). Even for generic models trained on 6,400 participants, using just 20 min of personalization data resulted in a 16% improvement in the median performance (Fig. 5c). In all cases, more personalization data led to further reductions in the average per-user CER across the personalized participants. However, across all generic models, as the generic model was pretrained with data from more participants, the absolute and relative improvement in CER from personalization decreased (Fig. 5c), indicating that there are diminishing returns to personalizing already performant generic models.
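
As a rough illustration of the quantities in Fig. 5b,c, the sketch below fits a pure power law, CER(N) = a·N^(−b), by least squares in log-log space and computes a relative CER reduction. The functional form (no offset term), the function names and the CER values are assumptions for illustration; the values are synthetic and are not taken from the paper.

```python
import math


def fit_power_law(n_participants, cers):
    """Fit log(cer) = log(a) - b * log(n) by ordinary least squares; return (a, b)."""
    xs = [math.log(n) for n in n_participants]
    ys = [math.log(c) for c in cers]
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return math.exp(mean_y - slope * mean_x), -slope


def relative_reduction(generic_cer, personalized_cer):
    """Fraction by which personalization lowers the generic model's CER."""
    return (generic_cer - personalized_cer) / generic_cer


# Synthetic CERs generated from a known power law (a = 0.5, b = 0.3).
n_pretrain = [25, 100, 400, 1600, 6400]
synthetic_cers = [0.5 * n ** -0.3 for n in n_pretrain]
a, b = fit_power_law(n_pretrain, synthetic_cers)
print(a, b, relative_reduction(0.10, 0.084))
```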

Personalizing models is therefore an alternative to expanding the generic corpus size to decrease a model’s CER on the target participant (Fig. 5d). For example, for the model pretrained on the smallest corpus of 25 participants (or 1,900 min), personalization with 20 min of data from the target participant was equivalent to training a generic model with 14,000 min of additional data from other participants—7× as much data as in the original pretraining corpus. However, as more data from other participants are added, the effective enhancement of the generic training corpus achieved through personalization diminishes. Adding 14,000 min of pretraining data is equivalent to 20 min of personalization data for the 25 participant model and only about 1 min for the 200 participant model.
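
The equivalence in Fig. 5d can be sketched by inverting a fitted scaling law: given a personalized CER, find how many pretraining participants a generic model would need to reach the same CER, then express that as a relative increase (a value of 1 means doubling). The pure power-law form and the parameter values below are assumptions for illustration, not the fitted values from the paper.

```python
def equivalent_participants(a, b, target_cer):
    """Invert CER(N) = a * N**(-b) to find the N that yields target_cer."""
    return (a / target_cer) ** (1.0 / b)


def relative_increase(n_pretrain, n_equivalent):
    """Relative increase in pretraining participants; 1.0 means doubling."""
    return n_equivalent / n_pretrain - 1.0


# Hypothetical scaling-law parameters and a hypothetical personalized CER
# chosen to match what a generic model with 50 participants would achieve.
a, b = 0.5, 0.3
personalized_cer = a * 50 ** -b
n_equiv = equivalent_participants(a, b, personalized_cer)
print(relative_increase(25, n_equiv))

# Ratio quoted in the text: 14,000 min of additional data versus a 1,900 min corpus.
print(14_000 / 1_900)
```

The last line reproduces the roughly 7× figure quoted above (14,000 / 1,900 ≈ 7.4).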

While personalization improved performance on the target participant, model performance improvements from personalization caused the model to overfit to the target participant and did not transfer across participants. For the most performant generic model trained (6,527 participants, 60.2 million parameters), personalizing on one participant and evaluating on another participant generally had a negative impact on performance when compared to the generic model performance (Fig. 5e). Personalization on the same participant improved the performance in 88% of the participants and led to a relative improvement of 8.35 ± 2.36% (median ± s.e.m. over participants), whereas data from one participant used to personalize another participant improved performance on only 7% of such participant pairs and led to an average relative decrease of 8.86 ± 0.53% (median ± s.e.m., taken across each evaluation participant after averaging across personalized models; Methods).
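
The same-participant versus cross-participant comparison can be read off a matrix like the one in Fig. 5e. The sketch below summarizes such a matrix under the assumption that entry [i][j] holds the relative CER reduction for evaluation participant i after personalizing on participant j. The function name and the 3 × 3 matrix are hypothetical, and the s.e.m. computation is omitted.

```python
from statistics import median


def transfer_summary(reduction):
    """Summarize diagonal (same-participant) and off-diagonal (cross) entries."""
    n = len(reduction)
    same = [reduction[i][i] for i in range(n)]
    cross_rows = [[reduction[i][j] for j in range(n) if j != i] for i in range(n)]
    cross_pairs = [value for row in cross_rows for value in row]
    return {
        "same_improved": sum(v > 0 for v in same) / n,
        "cross_improved": sum(v > 0 for v in cross_pairs) / len(cross_pairs),
        "same_median": median(same),
        # Average across personalized models first, then take the median
        # across evaluation participants, as described in the text.
        "cross_median": median([sum(row) / len(row) for row in cross_rows]),
    }


toy = [
    [0.10, -0.05, -0.12],
    [-0.08, 0.06, 0.01],
    [-0.10, -0.07, -0.02],
]
summary = transfer_summary(toy)
print(summary)
```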

Personalization disproportionately improved the performance of poorly performing participants across all generic models (Fig. 5f). For example, for generic models pretrained with 6,527 participants, personalization provided larger relative gains for participants with higher generic model CER (Fig. 5f) and more moderate gains or occasional regressions for those with already low CERs. In Extended Data Fig. 10, we show that these regressions can be mitigated with early stopping during fine-tuning, albeit at the cost of increased data required for validation.

Overall, these results highlight clear trends and trade-offs for personalization, facilitating the rational design of data collection. We expect that personalization will provide a practical solution for enhancing the average per-user performance when further scaling generic data collection to achieve a target performance level is prohibitive. Moreover, personalization can effectively address the long tail of users experiencing poor performance with the generic model, as it ensures considerable relative performance improvements for these users.

Discussion

Here, we introduce an easily donned/doffed wrist-based neuromotor interface capable of enabling a diverse range of computer interactions for novel users. We developed a scalable data-collection framework and collected large training corpora across diverse participants (Fig. 1). We used supervised deep learning to produce generic sEMG models (Fig. 2) that overcome issues that have long stymied generalization in BCIs and sEMG systems. The resulting sEMG decoders enabled continuous control, discrete input and text entry in closed-loop evaluations without the need for session- or participant-specific data or calibration (Fig. 3). A dissection of intermediate representations in the discrete-gesture neural-network decoder highlighted its ability to disentangle nuisance parameters related to band placement and behavioural style (Fig. 4). Finally, we demonstrated improvements to handwriting decoding performance with additional personalization data (Fig. 5). Together, this work defines a framework for building generic interaction models using non-invasive biological signals.

Related work in HCI and BCI

The work presented here sits at the nexus of HCI and BCI. The HCI community has placed significant emphasis on advancing gestural input for various technology applications by deploying machine-learning-backed solutions for differing sensing modalities such as computer vision (for example, Kinect, Meta Quest), inertial measurement units37,38, sEMG24,39,40, bio-acoustic signals41, electrical impedance tomography42, electromagnetic signals43 and ultrasonic beamforming44. The most direct antecedent of our work uses the discontinued commercial sEMG Myo armband (worn on the forearm) for gesture detection and wrist movement39, in datasets with more than 600 participants45,46. However, to date, sEMG-based approaches have typically been offline or necessitated within-session or participant-specific calibration, limiting their real-world use47.

Our non-invasive sEMG work has intimate connections to BCI. EEG-based BCI systems (notably, spellers) can achieve impressive bitrates of 100–300 bits per minute48 (versus 528 bits per minute for our handwriting decoder). However, EEG performance generally lags behind other BCI modalities due to issues with signal quality, interpretation and lack of standardized hardware or software49. As a result, efforts have been focused on small models and relatively small datasets (for example, <50 users50).

Intracortical BCI offers higher signal-to-noise ratio, but has been limited to single-participant studies due to nonstationarities in recordings and over sessions5,11,12,29. While the field of BCI is transitioning to neural network decoders4,29,51,52, it remains focused on solving these calibration issues, which are largely a function of limited data. Given that sEMG signals derive from the summed activity of motor unit firing, it is possible that sEMG-decoding methods such as those described here can guide methods development for intracortical BCI systems. The large-scale approaches demonstrated here may provide direction to the larger BCI field, such as BrainGate2,4 or Neuralink53.

Comparison to HCI baselines

To contextualize the absolute performance of our sEMG decoders, we compared them with both common input methods and methods that use gestures similar to those of our sEMG decoders: a MacBook trackpad and motion capture ground truth wrist angles for 1D continuous control, a Joy-Con game controller for discrete grid navigation and open-loop prompted handwriting for text entry. In each case, these baseline devices outperform our sEMG decoders.

However, we note that these baseline interfaces cannot fulfil the same role as an always-available sEMG wristband, as they require cumbersome equipment: tracking wrist angles requires multiple calibrated cameras, using a laptop trackpad or a gaming controller encumbers the hand, and handwriting requires a pen, paper and a surface. For tasks in which constant availability is important (such as on-the-go scenarios), the reductions in current decoder performance may therefore be acceptable.

Regardless, we expect further improvements in sEMG decoding through continued development of user familiarity/skill over time, improved models (including through personalization), post-processing and hardware innovations for superior sensing. We also note that the gestures used with our sEMG decoders are novel, and we found that coaching typically improved sEMG decoder performance (Methods). We expect user proficiency to grow with increased familiarity with the sEMG-RD and underlying gestures.

Future directions

Our sEMG decoder enables direct intentional motor signal detection from the muscle, thereby opening directions in novel and accessible computer interactions. For example, such a decoder could be used to directly detect an intended gesture’s force, which is generally unobservable with existing camera or joystick controls. While we demonstrated accurate, fully continuous control over only one degree of freedom, it is also likely that joint control of multiple degrees of freedom is achievable through additional, separate biomimetic mappings such as adding ulnar/radial deviation of the wrist for vertical control. Moreover, the sensitivity of sEMG to detect signals as subtle as putative individual MUAPs (Fig. 1b and Extended Data Fig. 2) enables the creation of extremely low-effort controls—an important innovation with a potential impact for people with a diverse range of motor abilities or ergonomic requirements54. Explorations of interactions in neuromotor signal space—as opposed to gesture space—may enable entirely new forms of control, for example, by exploring the limits of novel muscle synergies or interaction schemes that directly depend on individual motor unit recruitment or firing-rate control.

As a research platform, the sEMG-RD and associated software tooling could enable study of the effects of neurofeedback on motor unit activity for novel human–machine interactions55,56, the learning of novel motor skills57 or the limits and mechanisms of motor unit control58.

Finally, in the clinic, the ability to design interactions that require only minimal muscular activity, rather than performance of a specific movement, could enable viable interaction schemes for those with reduced mobility, muscle weakness or missing effectors entirely59, as well as the development of effective closed-loop neurorehabilitation paradigms60. It is unclear whether the generalized models developed here and trained on able-bodied participants will be able to generalize to clinical populations, although early work appears promising54. Personalization can be applied selectively to users for whom the generic model works insufficiently well due to differences in anatomy, physiology or behaviour. However, all of these new applications will be facilitated by continued improvements in the sensing performance of future sEMG devices, increasingly diverse datasets covering populations with motor disabilities, and potentially combining with other signals recorded at the wrist, such as IMU or biosignals.

Methods

Hardware

sEMG-RD

The sEMG devices consisted of two primary subcomponents: a digital compute capsule and an analogue wristband (Extended Data Fig. 1). The digital compute capsule comprised the battery, antenna for Bluetooth communication and a printed circuit board that contained a microcontroller, an analogue-to-digital converter and an inertial measurement unit. The analogue wristband comprised discrete links that each housed a multilayer rigid printed circuit board that contained the low-noise analogue front-end circuits and gold-plated electrodes. We manufactured the sEMG-RD device in four sizes. The analogue front end applied 20-Hz high-pass and 850-Hz low-pass filters to the data.

These printed circuit boards were inserted into Nylon 12 PA 3D printed housings and then strung together with a multilayer flexible printed circuit board along with a strain-relieving fabric. An elastic nylon cord was routed continuously between the links and was tied together at the wristband gap to form a clasp and tensioning mechanism. Finally, the digital compute capsule was connected to the analogue wristband through a connector on the flexible printed circuit board and fastened together with screws for mechanical stability. The device underwent a biocompatibility testing process to ensure its safety. The band is easily donned at the wrist with the only requirements being that the compute capsule is on the dorsal side and the gap is near the ulna bone.

Data collection

MRI scan

To visualize the position of the sEMG-RD’s electrodes relative to wrist anatomy, we collected a high-resolution anatomical MRI scan (Siemens Magnetom Verio 3T) from a consenting participant’s right forearm. We collected axial scans along the forearm, beginning from just distal to the wrist and ending just distal to the elbow. The scan was collected pursuant to an IRB governed study protocol conducted by Imperial College London.

Participant experience

All data collection was done either at Meta’s internal data-collection facilities or at third-party vendor sites. Study recruitment and participant onboarding were performed according to protocol(s) approved by an external IRB (Advarra). All studies began by providing the participants with information about the study protocol and asking them to review and sign an IRB-reviewed consent form before beginning the study. The participants were provided with the opportunity to ask questions before their participation and were able to discontinue their participation at any time. On-site research administrators monitored participants during the study protocol(s) to ensure participant well-being. The participants were financially compensated for their time participating in the study.

Collection at scale

The participants visited data-collection and laboratory facilities to perform the study protocols. On a given day, up to 300 participants took part in a study. Once a participant was in the facility, measurements of the wrist and hand were taken, including the forearm circumference and wrist circumference. Next, we fitted them with an appropriately sized band to collect sEMG data: small, 130–148 mm; medium, 148–169 mm; large, 169–193 mm; and extra large, 193–220 mm.
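
The size ranges above can be expressed as a simple lookup. The quoted ranges share their boundary values (for example, 148 mm appears in both small and medium), so the sketch below assumes half-open intervals with the final upper bound inclusive. That tie-breaking rule and the function name are assumptions, not part of the study protocol.

```python
# (name, lower bound in mm, upper bound in mm), as quoted in the text.
BAND_SIZES_MM = [
    ("small", 130, 148),
    ("medium", 148, 169),
    ("large", 169, 193),
    ("extra large", 193, 220),
]


def select_band_size(wrist_circumference_mm):
    """Return the band size for a wrist circumference, or None if out of range."""
    last_name, _, last_hi = BAND_SIZES_MM[-1]
    for name, lo, hi in BAND_SIZES_MM:
        # Assumed rule: a boundary value goes to the larger size.
        if lo <= wrist_circumference_mm < hi:
            return name
    if wrist_circumference_mm == last_hi:
        return last_name
    return None


for circumference in (140, 148, 200, 100):
    print(circumference, select_band_size(circumference))
```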

All of the participants received general coaching in the form of a study introduction, in-person demonstration of the correct and incorrect movements, and general supervision of participant compliance by research assistants. Study sessions lasted around 2–3 h (including rests and briefing). All responses and information provided during the study were collected and stored using de-identification technique(s) in a secure database.

While all collection occurred in controlled environments, training and testing datasets demonstrated large variance along band placement, sweating, skin condition, demographic diversity, local climate and other axes.

Prompted study design

All of our tasks were framed as supervised machine learning problems. For the handwriting and discrete-gesture recognition tasks, we relied on prompting to obtain approximate ground truth for our data, rather than direct instrumentation using physical sensors. While prompt labels depend on participant compliance, we found that instrumentation imposed constraints on what could be explored, as dedicated sensors need to be built for each individual modelling task. Furthermore, the use of sensors such as gloves or pressure sensitive pads limited the ecological validity of the signal, as physical sensors can restrict the movement range, poses and conditions examined.

For the wrist task, we used motion capture to continuously track the participant’s wrist angle (see below). In this case, we used a mixture of open-loop prompting (as for the discrete-gesture and handwriting tasks) and closed-loop interactions, in which participants performed cursor control tasks in which the cursor’s position was determined from their wrist angles tracked in real time (see below).

Training and evaluation protocols were implemented in a custom, internal software framework that took advantage of the abilities of Lab.js, an established open-source web-based study builder61. This framework orchestrated both the presentation of task-specific prompter applications and the recording of annotations from these applications. The framework was developed using TypeScript and the task-specific prompters were built on the React framework.

We created the overview figure of our data-collection approach in Fig. 1a using a photograph taken at our data-collection facility as a reference, which was then traced and edited in Procreate, with additional colour and graphical elements added in Adobe Photoshop.

Real-time data-collection system

Data collection for our studies was performed using an internal framework for real-time data processing that supports data collection, offline model training, and benchmarking and online evaluation. At its core, the framework offers an engine for defining and scheduling a data-processing graph. On the periphery, it provides well-defined APIs for real-time performance monitoring and interaction with consumer applications (for example, prompting software, applications for stream visualization).

For data collection, our internal platform served as the host for recording real-time signals and annotations to a standardized data format (that is, HDF5). For offline model training and benchmarking, our internal platform provides an API for batch processing of data corpora. This helps to generate featurized data from the recorded raw-signals and apply model inference for offline evaluation. To ensure online and offline parity, the internal platform also supports running the same sequence of processing steps on real-time signals for online evaluation.

Offline training data corpora

Wrist corpus

The wrist decoder training corpus included simultaneous recordings of sEMG and ground truth flexion-extension wrist angle (measured with motion capture) from 162 participants, 96 of whom recorded 2 sessions (both sessions from each of these participants were included in the same train or test split to which they were assigned). To track flexion-extension and ulnar-radial deviation wrist angles, we placed two light (16 g) 3D printed rigid bodies on the back of the hand and on the digital compute capsule of the sEMG-RD. Each of these rigid bodies had three retroreflective markers attached, which together defined a 3D plane that was tracked in 3D in real time (60 Hz) with <1 mm resolution using 18–30 PrimeX 13 W cameras (OptiTrack). We used the relative orientation of these two planes to calculate the flexion-extension and ulnar-radial deviation wrist angles. Only the flexion-extension angle was used for training and evaluating wrist decoders.

Each session consisted of an open-loop stage, a calibration stage and a closed-loop stage, in which the participants controlled a cursor that determined its position from these two wrist angles. Throughout all stages, the participants were instructed to keep their hand in a ‘laser pointer’ posture, with a loose fist in front of the body, thumb on top and elbow at approximately 90°.

In the open-loop stage, the participants performed centre-out wrist deflections in eight possible directions (four cardinal directions and four intercardinal directions) following a visual prompt (Extended Data Fig. 4a), for a total of 40 repetitions (5 per direction) in a pseudorandomized order.

In the closed-loop stage, the participants were asked to perform two tasks to the best of their abilities: a cursor-to-target task and a smooth pursuit task. In both tasks, the flexion-extension and radial-ulnar deviation wrist angles were normalized by their range of motion (measured in a calibration stage), centred by the neutral position (measured by prompting the user to hold a neutral wrist angle), and then respectively mapped to the horizontal and vertical position of a cursor on the screen, in real-time (60 Hz). This mapping consisted of simply scaling the (normalized and centred) wrist angles by a constant gain, gx. To encourage both small and large wrist movements, two different gains were used: gx = 2.0 pixels per normalized radian (half of range of motion) and gx = 4.0 pixels per normalized radian (quarter of range of motion). Gains larger than 1.0 were required for every user to be able to reach the corners of the workspace.
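
The angle-to-cursor mapping described above (centre, normalize, scale by a constant gain) can be sketched as follows. The sketch assumes that the angle is normalized by the maximum one-sided deflection, that the workspace spans [−1, 1] in normalized units, and that the cursor is clipped at the workspace edge. None of these details is specified in the text, and the function name is hypothetical.

```python
def wrist_to_cursor(angle_rad, neutral_rad, max_deflection_rad, gain):
    """Map a wrist angle to a cursor coordinate in [-1, 1] workspace units."""
    # Centre by the neutral position and normalize by the range of motion.
    normalized = (angle_rad - neutral_rad) / max_deflection_rad
    # Constant gain, then clip to the workspace (clipping is an assumption).
    return max(-1.0, min(1.0, gain * normalized))


# With a gain of 2.0, half of the range of motion reaches the workspace edge;
# with a gain of 4.0, a quarter of the range of motion is enough.
print(wrist_to_cursor(0.5, 0.0, 1.0, gain=2.0))
print(wrist_to_cursor(0.25, 0.0, 1.0, gain=4.0))
```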

In the cursor-to-target task, the participants were prompted to move the cursor to one of the equally sized rectangular targets presented on the screen. During each trial, one of the targets was highlighted, and the participant was instructed to move the cursor towards that target. The target was acquired when the cursor remained within the target for 500 ms. Once a target was acquired, the rectangular target disappeared, and one of the remaining targets was prompted, initiating the next trial, in a random sequence. Once all of the targets were acquired, a new set of targets was presented. Three different target configurations were used: horizontal (10 targets presented side-by-side along the horizontal axis, with the cursor confined to this axis; Extended Data Fig. 7a), vertical (10 targets presented one on top of the other along the vertical axis, with the cursor confined to this axis) and 2D (25 targets presented in a 5 × 5 square grid; Extended Data Fig. 4b). These three configurations were presented in this order in a block structure. In the horizontal target configuration block, the participants had to acquire all 10 horizontal targets, and repeat this 10 times, for a total of 100 trials. The first 5 repetitions (50 trials) were performed with the lower cursor gain and the last 5 repetitions (50 trials) were performed with the higher cursor gain. The vertical target configuration block followed the same structure, and the 2D target configuration block consisted of 4 repetitions (for a total of 100 trials), with the first 2 performed with the lower cursor gain and the last 2 with the higher cursor gain.
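
The 500 ms dwell criterion can be sketched as a run-length check over cursor samples. The sketch assumes that dwell time is counted in consecutive 60 Hz frames (30 frames for 500 ms) and uses a 1D target for brevity. The function name and this frame-counting rule are assumptions.

```python
def first_acquisition_frame(cursor_xs, target_lo, target_hi,
                            dwell_ms=500, frame_rate_hz=60):
    """Return the frame index at which the target is acquired, or None."""
    frames_needed = round(dwell_ms / 1000 * frame_rate_hz)  # 30 frames at 60 Hz
    run = 0
    for i, x in enumerate(cursor_xs):
        # Leaving the target resets the dwell counter.
        run = run + 1 if target_lo <= x <= target_hi else 0
        if run >= frames_needed:
            return i
    return None


# Cursor enters the target for 20 frames, slips out for 1 frame, then stays.
trace = [0.5] * 20 + [2.0] + [0.5] * 30
print(first_acquisition_frame(trace, target_lo=0.0, target_hi=1.0))
```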

Finally, in the smooth pursuit task, the participants were instructed to move the cursor to follow a moving target on the screen as closely as possible (Extended Data Fig. 4c). Each trial consisted of a 1-min random target trajectory, generated by taking a random combination of 0.1 Hz to 0.25 Hz sinusoids (with randomly sampled phases) along the horizontal and vertical axes. The participants performed a total of four trials, the first two of which were performed with the lower cursor gain and the last two with the higher cursor gain.
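
A target trajectory of this kind can be sketched as a sum of sinusoids with random frequencies in the 0.1–0.25 Hz band and random phases. The number of components, their equal weighting, the amplitude normalization and the 60 Hz sampling are assumptions for illustration; only the frequency band, the random phases and the 1-min duration come from the text.

```python
import math
import random


def pursuit_trajectory(duration_s=60.0, rate_hz=60.0, n_components=3,
                       f_lo_hz=0.1, f_hi_hz=0.25, seed=0):
    """One axis of a random smooth-pursuit target trajectory in [-1, 1]."""
    rng = random.Random(seed)
    components = [
        (rng.uniform(f_lo_hz, f_hi_hz), rng.uniform(0.0, 2.0 * math.pi))
        for _ in range(n_components)
    ]
    n_samples = int(duration_s * rate_hz)
    return [
        sum(math.sin(2.0 * math.pi * f * (i / rate_hz) + phase)
            for f, phase in components) / n_components
        for i in range(n_samples)
    ]


# Independent draws for the horizontal and vertical axes.
target_x = pursuit_trajectory(seed=1)
target_y = pursuit_trajectory(seed=2)
print(len(target_x), len(target_y))
```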

Only data within these task stages (open-loop, cursor-to-target and smooth pursuit) were used for model training and offline evaluation. All data outside of these stages were excluded from the model training and test sets. We also excluded data from the cursor-to-target task with the vertical target configuration, as the flexion-extension wrist angle was mostly constant during this task.

Discrete-gesture corpus

The discrete-gesture training corpus was composed of data from 4,900 participants. As noted in the main text, there were nine prompted gestures: index and middle finger presses and releases, thumb tap and thumb left/right/up/down swipes. Each session consisted of stages in which combinations of gestures were prompted at specific times (Extended Data Fig. 4d,e). These combinations usually included the full set of trained gestures but, in some stages, were restricted to specific subsets (for example, pinches only, thumb swipes only). During data collection for these stages, the participants were asked to hold their hand and arm in one of a range of postures (hand in front, palm facing in/out/up, hand in lap, arm hanging by side, forearm pronated inwards) or to translate/rotate their arms while completing gestures. In around 10% of stages, instead of prompting specific timing, the participants were asked to complete sequences of 3–5 gestures at their own pace. About one-third of the training corpus was composed of a range of null data in which participants were either asked to generate specifically timed null gestures (such as snaps, flicks) or to engage in more loosely prompted longer-form null behaviours (such as typing on a keyboard). On average, gestures occur in around 6% of samples. The gestures were unevenly distributed, with thumb gestures being more frequent. Given that an event has occurred, individual gesture probabilities range from around 9% to 13%. When considering the entire dataset including null cases, the probability of correctly guessing any specific gesture falls below 1%.
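
The chance-level figure at the end of this paragraph follows from multiplying the two quoted rates: the probability that a sample contains any gesture (around 6%) and the probability of a specific gesture given that an event occurred (around 9% to 13%). A minimal check of that arithmetic, using only the approximate values from the text:

```python
event_rate = 0.06                        # gestures occur in around 6% of samples
per_gesture_given_event = (0.09, 0.13)   # range of individual gesture probabilities

# Probability that a given sample carries one specific gesture.
chance = [event_rate * p for p in per_gesture_given_event]
print([f"{c:.2%}" for c in chance])
```

Both ends of the range fall below 1%, consistent with the statement above.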

Handwriting corpus

The handwriting recognition corpus comprised sEMG recordings from a total of 6,627 participants. The data were collected in short blocks, during which the participants were prompted to write a series of randomly selected items, including letters, numbers, words, random alphanumeric strings or phrases (Extended Data Fig. 4f,g). The participants were prompted with spaces inserted both implicitly and explicitly between words. In implicit space prompting, the participants advance from one word to the next naturally as with pen and paper writing. In explicit space prompting, prompts with a right dash character would be presented after each word, instructing the participants to perform a right swipe with their index finger that would later be remapped to a space. This can constrain the modelling problem, avoiding the need for the model to infer spaces implicitly by relying on factors such as the linguistic context of the text being written. We sampled phrases from a dump of Simple English Wikipedia in June of 2017, the Google Schema-guided Dialogue Dataset62 and the Reddit corpus from ConvoKit63, after filtering to remove offensive words and phrases. Each participant contributed varying amounts of data, but approximately 1 h and 15 min each on average. Each block was performed in one of three randomly chosen postures: seated writing on a surface, seated writing on their leg as the surface or standing writing on their leg. Note that we did not have ground truth information about the fidelity with which participants wrote these prompts but, for a subset of participants, handwriting was performed with a Sensel Morph touch surface device. Visual examinations of a subset of the Sensel recordings suggested that approximately 98% of prompted characters were executed successfully.

sEMG preprocessing

Putative motor unit action potential waveform estimation

Figure 1b shows the spatiotemporal waveforms of MUAPs evoked by subtle contractions of the thumb and pinky extensors in one participant. For each digit, the participant selected the sEMG channel with maximum variance during sustained contractions based on visual inspection of the raw signals. Down-selecting to one channel enabled greater acuity for visual biofeedback during data collection. Subsequently, the participant was prompted to alternate between resting and performing sustained contractions of the chosen digit for three repetitions while receiving visual feedback about the raw sEMG signal on the selected channel. Each rest and movement prompt was 10 s long with 1 s interprompt intervals. The participant used the visual feedback on the selected channel to titrate the amount of generated force to recruit as few motor units as possible with each contraction64,65.

We estimated the MUAP spatiotemporal waveforms W (\(W\in {{\mathbb{R}}}^{L\times C}\), where L is the number of samples (40) and C is the number of channels (16)) for each digit using a simple offline spike-detection algorithm. The sEMG traces were first preprocessed by filtering with a second-order Savitzky–Golay differentiator filter with a width of 2.5 ms (5 samples). The filtered sEMG was rectified to improve the alignment of detected MUAPs, averaged over channels, then smoothed with a 2.5 ms Gaussian filter to obtain a 1D sEMG envelope. Spikes were detected by peak finding on the sEMG envelope using scipy.signal.find_peaks with prominence=0.5 (ref. 66). MUAPs were extracted using a 20-ms-long window across all sEMG channels, centred on each peak. The waveforms shown in Fig. 1b were obtained from the selected channel for thumb extension (12; blue) and pinky extension (14; pink) using all MUAPs detected during the second prompted movement period; no attempt was made to cluster MUAPs into different units. For visualization, the opacity of each trace was scaled as \(1/(1+|{a}_{i}-{\rm{median}}(a)|)\), where \({a}_{i}\) is the peak-to-peak amplitude of the ith MUAP and a is the amplitudes of all detected MUAPs for each contraction.
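
The detection steps above can be sketched with NumPy and SciPy. Several details are assumptions rather than the authors' exact settings: the 2.5 ms Gaussian is interpreted as a standard deviation of 5 samples, the input is assumed to be scaled so that a prominence of 0.5 is meaningful, and the 20 ms windows are cut from the unfiltered traces. The function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter1d
from scipy.signal import find_peaks, savgol_filter

WINDOW = 40  # 20 ms at 2 kHz, that is L = 40 samples


def extract_muaps(semg, prominence=0.5, sigma_samples=5.0):
    """semg: array of shape (n_samples, n_channels). Returns (peaks, waveforms)."""
    # Second-order Savitzky-Golay differentiator, 5 samples (2.5 ms) wide.
    diff = savgol_filter(semg, window_length=5, polyorder=2, deriv=1, axis=0)
    # Rectify, average over channels, smooth into a 1D envelope.
    envelope = gaussian_filter1d(np.abs(diff).mean(axis=1), sigma=sigma_samples)
    peaks, _ = find_peaks(envelope, prominence=prominence)
    # Keep only peaks with a full window around them.
    half = WINDOW // 2
    peaks = peaks[(peaks >= half) & (peaks + half <= len(semg))]
    if len(peaks) == 0:
        return peaks, np.empty((0, WINDOW, semg.shape[1]))
    waveforms = np.stack([semg[p - half:p + half] for p in peaks])
    return peaks, waveforms


def trace_opacity(waveforms, channel):
    """Opacity 1 / (1 + |a_i - median(a)|) from peak-to-peak amplitudes."""
    amplitudes = np.ptp(waveforms[:, :, channel], axis=1)
    return 1.0 / (1.0 + np.abs(amplitudes - np.median(amplitudes)))
```

Each extracted waveform has shape (40, 16), matching the L × C definition of W above.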

MPF features

The wrist and handwriting generic sEMG decoders used custom features extracted from the raw sEMG; we refer to this feature set as MPF features. To obtain these features, we first rescaled the sEMG by 2.46 × \({10}^{-6}\), to normalize the s.d. of the noise to 1.0 (this value was determined empirically). Motivated by the need to remove motion artifacts67, we then applied a 40 Hz high-pass filter (fourth-order Butterworth) to the sEMG recordings sampled at 2 kHz. We then extracted the squared magnitude of the cross-spectral density with a rolling window of T sEMG samples and a stride of 40 samples (20 ms). We used T = 200 samples (100 ms) for the wrist decoder and T = 160 samples (80 ms) for the handwriting decoder. The cross-spectral density was chosen to preserve cross-channel relationships in the spectral domain. We estimated the magnitude of cross-spectral density by first taking the outer product (over channels) of the discrete Fourier transform of the signal (64-sample (32 ms) windows with a stride of 10 samples) with its complex conjugate. We then binned the result into 6 frequency bins (0–62.5, 62.5–125, 125–250, 250–375, 375–687.5, 687.5–1,000 Hz). We summed this product over each frequency bin, and took the square of the absolute value of the sum over frequencies. This produced a set of 6 symmetric and positive definite 16 × 16 square matrices that update every 40 samples, for an output frequency of 50 Hz. Building on robust results in the EEG space for this class of features, we applied a log-matrix operation on each of these matrices68. Finally, the diagonal and the first three off-diagonals (rolled over the matrix edge to account for the band being circular) were preserved and half-vectorized for each matrix, and then concatenated across the 6 frequency bins, producing a single 384 (6 × 4 × 16) dimensional vector for each 80 ms window. An implementation of both the cross-spectral density estimation and the matrix logarithm can be found in the pyRiemann Python toolbox69.
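
The feature computation for a single window can be sketched in plain NumPy. This is an approximation under stated assumptions, not the authors' implementation (which points to pyRiemann): segments are averaged rather than summed, frequency bins are half-open, a small ridge term `eps` keeps the matrices invertible, and the rescaling, the high-pass filter and any half-vectorization weighting are omitted. All function names are hypothetical.

```python
import numpy as np

FS_HZ = 2000
BAND_EDGES_HZ = [0.0, 62.5, 125.0, 250.0, 375.0, 687.5, 1000.0]


def band_matrices(window, nfft=64, hop=10, eps=1e-6):
    """window: (T, C) filtered sEMG. Returns six real symmetric (C, C) matrices."""
    n_samples, n_channels = window.shape
    starts = range(0, n_samples - nfft + 1, hop)
    spectra = np.stack([np.fft.rfft(window[s:s + nfft], axis=0) for s in starts])
    # Outer product over channels of each spectrum with its complex conjugate.
    csd = np.einsum("sfc,sfd->fcd", spectra, spectra.conj()) / len(starts)
    freqs = np.fft.rfftfreq(nfft, d=1.0 / FS_HZ)
    matrices = []
    for lo, hi in zip(BAND_EDGES_HZ[:-1], BAND_EDGES_HZ[1:]):
        in_band = freqs >= lo
        if hi < BAND_EDGES_HZ[-1]:
            in_band &= freqs < hi
        # Sum over the band, then take the squared magnitude element-wise.
        summed = csd[in_band].sum(axis=0)
        matrices.append(np.abs(summed) ** 2 + eps * np.eye(n_channels))
    return np.stack(matrices)


def log_matrix(matrix, floor=1e-12):
    """Matrix logarithm of a symmetric matrix via eigendecomposition."""
    values, vectors = np.linalg.eigh(matrix)
    return (vectors * np.log(np.maximum(values, floor))) @ vectors.T


def circular_diagonals(matrix, n_offsets=4):
    """Diagonal plus the first three off-diagonals, wrapped around the band."""
    idx = np.arange(matrix.shape[0])
    return np.concatenate(
        [matrix[idx, (idx + d) % matrix.shape[0]] for d in range(n_offsets)]
    )


def mpf_feature(window):
    """Concatenate the log-matrix diagonals of all six bands: 6 x 4 x 16 = 384."""
    return np.concatenate(
        [circular_diagonals(log_matrix(m)) for m in band_matrices(window)]
    )


rng = np.random.default_rng(0)
feature = mpf_feature(rng.standard_normal((160, 16)))  # T = 160 (handwriting)
print(feature.shape)
```

Sliding this window forward by 40 samples at a time would give the 50 Hz feature stream described above.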

Discrete-gesture time alignment

As all discrete-gesture data collection was performed by prompting participants, we had access to only approximate timing of the gesture execution (that is, the time at which the participant was prompted to perform the gesture). However, training sEMG decoding models to infer when the participant performs a gesture required more precise alignment of labels with the signal to be effective. While a task like handwriting used an alignment-free loss (that is, connectionist temporal classification, CTC), which would also be applicable to this task, forced alignment enabled us to gain much finer control over the latency of the detections produced by our models, which was critical for practical use of discrete gestures as control inputs.

When gestures were well isolated, that is, when the intergesture interval was greater than the uncertainty of the timing, existing solutions from the literature could be readily deployed on sEMG data, leading to robust inference of gesture timing70. However, realistic data collection involved rapid sequences of gestures in close succession, which made identifying the timing of individual gestures a challenging problem that required a dedicated solution. We therefore developed an approach to infer the precise timing of the gestures.

Our approach was to infer the timing of all gestures in a sequence, defined as a series of consecutive gestures for which uncertainty bounds overlap. We did this by searching for the sequence of gesture timings that best explained the observed data according to a generative model of our MPF features.

First, for the purposes of this timing adjustment stage, we defined the generative model for a set of K gesture instances as the sum of gesture-specific templates centred at corresponding event times, tk, with additive noise:

$$x(t)={\sum }_{k=0}^{K}{\phi }_{k}(t-{t}_{k})+n(t)$$

where x(t) is our features over time, ϕk(t) is a prototypical spatiotemporal waveform for gesture of index k (that is, the gesture template for the class of gesture corresponding to event k) and n(t) is a noise term. We note that this generative model is only valid for ballistic gesture execution and power-based features. We also note that templates are shared across executions of the same gesture type, but specific to each participant and band placement.

We define the generative inference as the joint optimization of gesture templates and times at which each gesture occurred. For each recording, we solved this through an iterative algorithm: we first estimated the templates based on prompted times, then inferred timestamps of the gesture sequence, and repeated with new inferred event times until convergence (that is, when the timestamp updates across iterations of the EM algorithm were smaller than a tolerance value).
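The template half of this loop can be illustrated with a lagged-indicator least-squares regression, which separates overlapping contributions when event times are fixed. This is a minimal sketch under our own assumptions, not the authors' estimator: `estimate_templates`, `half_width` and the toy gesture names are hypothetical, and templates are assumed to have a fixed length centred on each event.

```python
import numpy as np


def estimate_templates(x, times, labels, half_width=10):
    """Least-squares gesture templates from possibly overlapping events.

    x: (n_samples, n_features) feature time series.
    times: event centres in samples; labels: gesture class of each event.
    """
    classes = sorted(set(labels))
    width = 2 * half_width + 1
    design = np.zeros((x.shape[0], len(classes) * width))
    for t, lab in zip(times, labels):
        col0 = classes.index(lab) * width
        for lag in range(width):
            row = t - half_width + lag
            if 0 <= row < x.shape[0]:
                design[row, col0 + lag] += 1.0
    # Solve x ~ design @ coef; each block of rows in coef is one template.
    coef, *_ = np.linalg.lstsq(design, x, rcond=None)
    return {lab: coef[i * width:(i + 1) * width] for i, lab in enumerate(classes)}


# Toy check: two overlapping events plus two isolated ones.
rng = np.random.default_rng(0)
true = {"pinch": rng.standard_normal((21, 3)), "tap": rng.standard_normal((21, 3))}
times, labels = [20, 32, 80, 130], ["pinch", "tap", "pinch", "tap"]
x = np.zeros((160, 3))
for t, lab in zip(times, labels):
    x[t - 10:t + 11] += true[lab]
estimated = estimate_templates(x, times, labels)
```

In the alternating scheme described above, a step of this kind would be rerun each time the event times are updated.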

Templates were estimated by an EMG analogue of the regression-based estimator of the event-related potential (rERP), to disentangle overlapping contributions of gestures performed in a fast sequence71. Timings were obtained by the following optimization problem:

$$\min_{\{t_k\}_{k=0,\ldots,K}}\int_{t}{\left(x(t)-\sum_{k=0}^{K}{\phi}_{k}(t-{t}_{k})\right)}^{2}\,{\rm{d}}t$$

We optimized this numerically through a beam search algorithm, subject to additional ad hoc constraints that bounded how far the adjusted times could deviate from the prompted times based on priors from the data-collection protocol.
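A minimal sketch of such a search is given below, assuming known templates and discrete sample times. It is illustrative rather than the authors' algorithm: `render`, `beam_search_times`, `max_shift` and `beam` are hypothetical names, the only constraint modelled is a symmetric bound on the deviation from the prompted time, and partial sequences are scored by the residual over the whole recording.

```python
import numpy as np


def render(times, labels, templates, n_samples):
    """Sum of gesture templates centred at the given sample indices."""
    n_feat = next(iter(templates.values())).shape[1]
    x_hat = np.zeros((n_samples, n_feat))
    for t, lab in zip(times, labels):
        tpl = templates[lab]
        start = t - tpl.shape[0] // 2
        lo, hi = max(start, 0), min(start + tpl.shape[0], n_samples)
        if lo < hi:
            x_hat[lo:hi] += tpl[lo - start:hi - start]
    return x_hat


def beam_search_times(x, prompted, labels, templates, max_shift=10, beam=5):
    """Refine prompted times by minimizing the squared residual."""
    beams = [((), 0.0)]
    for k, t0 in enumerate(prompted):
        candidates = []
        for times, _ in beams:
            for shift in range(-max_shift, max_shift + 1):
                new = times + (t0 + shift,)
                resid = x - render(new, labels[:k + 1], templates, x.shape[0])
                candidates.append((new, float(np.sum(resid ** 2))))
        candidates.sort(key=lambda c: c[1])
        beams = candidates[:beam]  # keep only the best partial sequences
    return list(beams[0][0])


# Toy check with two overlapping Gaussian-shaped templates.
tau = np.arange(-10, 11)[:, None]
bump = np.exp(-0.5 * (tau / 3.0) ** 2)
templates = {"pinch": bump * np.array([1.0, 0.0, 0.5]),
             "tap": bump * np.array([0.0, 1.0, 0.5])}
labels = ["pinch", "tap"]
rng = np.random.default_rng(0)
x = render([30, 48], labels, templates, 80) + 0.01 * rng.standard_normal((80, 3))
refined = beam_search_times(x, prompted=[33, 44], labels=labels, templates=templates)
```

Keeping several partial sequences, rather than only the best one, lets a later gesture correct an early timing choice that was distorted by overlap.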

Direct application of the above procedure produced timestamps that were referenced to the session template, and there was an indeterminacy as to the timing offset within the gesture, which can vary due to initial conditions. To better standardize alignment of template timing across individuals, we performed a global recentring step at the end of timestamp estimation. Specifically, we found the time of maximal correlation between the session template (that is, for a particular participant) and a global template (grand average of all templates across participants).
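The recentring step can be sketched as a cross-correlation between the two templates, summed over features. The function name and the sign convention used to apply the lag to the timestamps are assumptions made for this example.

```python
import numpy as np


def recentring_lag(session_tpl, global_tpl):
    """Lag (in samples) of maximal cross-correlation between two templates.

    Both templates have shape (n_samples, n_features). A positive lag means
    the session template is delayed relative to the global template.
    """
    xcorr = sum(
        np.correlate(session_tpl[:, f], global_tpl[:, f], mode="full")
        for f in range(session_tpl.shape[1])
    )
    return int(np.argmax(xcorr)) - (global_tpl.shape[0] - 1)


# Toy check: the session template is the global one delayed by 3 samples.
t = np.arange(41)[:, None]
global_tpl = np.exp(-0.5 * ((t - 20) / 3.0) ** 2) * np.array([1.0, 0.5])
session_tpl = np.exp(-0.5 * ((t - 23) / 3.0) ** 2) * np.array([1.0, 0.5])
lag = recentring_lag(session_tpl, global_tpl)
recentred = np.array([100, 200]) + lag  # timestamps in the global reference
```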

Gesture-triggered sEMG activations

To inspect the structure of sEMG activations across gestures and participants (Fig. 2b), we used EMG covariance features. Specifically, we concatenated the 0-, 1- and 2-diagonals of the sEMG covariance matrix over a 300 ms window centred on each gesture, yielding a 48 × 60-dimensional feature space. To produce the embeddings, we ran t-SNE in two dimensions with perplexity 35 on the flattened feature space.

Single-participant discrete-gesture modelling

Training details

To train the single-participant models for the discrete-gesture classification task, we selected 100 participants who had completed at least five sessions of data collection and selected five of those sessions. We then randomly picked four of these sessions for training and the remaining held-out session for testing. From these four sessions we randomly created nested subsets of two, three and all four sessions to train three different models for each participant. Given the limited amount of training data per model, we used the MPF features and a small neural network as described below.
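The session split for one participant can be sketched as below. The function name, the seed handling and the session identifiers are hypothetical; the sketch only illustrates that the two-, three- and four-session training sets are nested and that the test session is disjoint from all of them.

```python
import random


def nested_session_splits(session_ids, seed=0):
    """Split five sessions into nested training subsets and one test session."""
    rng = random.Random(seed)
    shuffled = list(session_ids)
    rng.shuffle(shuffled)
    train, test = shuffled[:4], shuffled[4]
    # Nested subsets: each larger subset contains the smaller ones.
    subsets = {n: train[:n] for n in (2, 3, 4)}
    return subsets, test


subsets, test_session = nested_session_splits(["s1", "s2", "s3", "s4", "s5"])
```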

Architecture

The single-participant discrete-gesture model took as input the MPF features. The network architecture consisted of (a) one fully connected (FC) layer with Leaky ReLU activation function followed by (b) cascaded time-depth separable (TDS) blocks72 across time scales and (c) three more FC layers to produce a logit value for each of the nine discrete gestures to be predicted. For (b), we used two TDS blocks per time scale: at each scale s, an AveragePool layer with kernel size 2^s was applied to the output of (a) and fed to a TDS block with dilation 2^s. The output was then added to the output of scale s − 1 (if it existed) and passed through another TDS block with dilation 2^s to give the output of scale s, which was used by the next scale s + 1 (if it existed) or by the subsequent layers. We used 6 scales (s = 0, …, 5), and the feature dimension was set to 256 for all TDS blocks and all but the very last FC layer.

Optimization

We used the standard Adam optimizer with the following learning rate schedule: the learning rate increased linearly from 0 to 1 × 10⁻³ over a five-epoch warm-up phase, then underwent a one-time decay to 5 × 10⁻⁴ after epoch 25, and remained constant thereafter. Each model was trained for 300 epochs to avoid under- or over-fitting for single-user models, based on previous empirical observations. A binary cross-entropy loss was used as with the generic model.
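The schedule can be written as a small function of the epoch index. The zero-based epoch indexing and the per-epoch (rather than per-step) granularity of the warm-up are assumptions of this sketch.

```python
def learning_rate(epoch, peak=1e-3, decayed=5e-4, warmup_epochs=5, decay_epoch=25):
    """Learning rate for a given zero-based epoch index."""
    if epoch < warmup_epochs:
        # Linear warm-up from 0 to the peak rate.
        return peak * epoch / warmup_epochs
    if epoch <= decay_epoch:
        return peak
    # One-time decay after decay_epoch, constant afterwards.
    return decayed


schedule = [learning_rate(e) for e in range(300)]
```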

Offline evaluation

To evaluate the performance of each model on the given held-out sessions, we followed the same procedure described under the ‘Discrete gestures’ part of the ‘Generic sEMG decoder modelling’ section. In brief, we triggered gesture detections on the corresponding model probability crossing a threshold of 0.35, filtered all detected gestures through debouncing and state machine filtering, and then used the Needleman–Wunsch algorithm to match each ground-truth label with a corresponding model prediction. We then quantified performance using the FNR, defined as the proportion of ground-truth labels for which either the matched model prediction is incorrect or there is no matched model prediction. We calculated the FNR independently for each gesture and then took the average over the nine gestures. We used FNR rather than CLER (the metric used for generic models) owing to the very small number of events detected for some poorly performing models, which led to a large number of labels without a matched model prediction; such labels are ignored by the CLER metric.
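The evaluation steps can be sketched in plain Python as below. This is a simplified illustration: debouncing and state machine filtering are omitted, the alignment uses labels only with assumed match, mismatch and gap scores, and all function names are hypothetical.

```python
def threshold_crossings(probs, threshold=0.35):
    """Indices at which a probability trace rises through the threshold."""
    return [i for i in range(1, len(probs)) if probs[i - 1] < threshold <= probs[i]]


def needleman_wunsch(truth, pred, match=1, mismatch=-1, gap=-1):
    """Globally align two label sequences; return (truth, prediction) pairs."""
    n, m = len(truth), len(pred)
    score = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        score[i][0] = i * gap
    for j in range(1, m + 1):
        score[0][j] = j * gap
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            s = match if truth[i - 1] == pred[j - 1] else mismatch
            score[i][j] = max(score[i - 1][j - 1] + s,
                              score[i - 1][j] + gap,
                              score[i][j - 1] + gap)
    pairs, i, j = [], n, m
    while i > 0 and j > 0:  # trace back from the bottom-right corner
        s = match if truth[i - 1] == pred[j - 1] else mismatch
        if score[i][j] == score[i - 1][j - 1] + s:
            pairs.append((truth[i - 1], pred[j - 1]))
            i, j = i - 1, j - 1
        elif score[i][j] == score[i - 1][j] + gap:
            pairs.append((truth[i - 1], None))  # label with no prediction
            i -= 1
        else:
            j -= 1  # spurious prediction, not counted by the FNR
    while i > 0:
        pairs.append((truth[i - 1], None))
        i -= 1
    return pairs[::-1]


def mean_fnr(truth, pred):
    """FNR per gesture (wrong or missing match), averaged over gestures."""
    pairs = needleman_wunsch(truth, pred)
    rates = []
    for gesture in sorted(set(truth)):
        matched = [p for t, p in pairs if t == gesture]
        rates.append(sum(p != gesture for p in matched) / len(matched))
    return sum(rates) / len(rates)


onsets = threshold_crossings([0.1, 0.4, 0.5, 0.2, 0.36])
fnr = mean_fnr(["pinch", "tap", "pinch", "tap"], ["pinch", "tap", "pinch"])
```

In the toy example the final "tap" has no matched prediction, so the per-gesture rates are 0 for "pinch" and 0.5 for "tap".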

Generic sEMG decoder modelling

Related deep learning architectures and approaches

The three HCI tasks described here—continuous wrist angle prediction, discrete action recognition and the transcription of handwriting into characters—represent related but distinct time-series modelling and recognition tasks. Machine learning and, specifically, deep learning approaches have become extremely popular solutions to these problems, including convolutional models73, recurrent neural networks74 and streaming transformers30.

As an example of the similarity between our tasks and established machine learning problems, consider the relationship between handwriting recognition from sEMG and automatic speech recognition (ASR) from audio waveforms. Both tasks map continuous waveform signals (with dimensionality equal to the number of microphones or sEMG channels) at a fixed sample rate, to a sequence of tokens (phonemes or words for ASR, characters for our sEMG-RD). Components of our modelling pipeline have analogues in ASR, including feature extraction, data augmentation, model architecture, loss function, decoding and language modelling. As noted below, each of these modelling pipeline components required substantial domain-specific modification for sEMG models.

For feature extraction, ASR typically uses log mel filterbanks; we used our analogous MPF features (see the section ‘MPF features’), as discussed below. For data augmentation, we used the ASR technique of SpecAugment75, which applies time- and frequency-aligned masks to these spectral features during training. A popular model architecture for ASR is the Conformer30, which provides the advantages of attention-based processing in a form that is compatible with causal time-series modelling. We found that this method worked well for sEMG-based handwriting recognition as well. A popular loss function for ASR is CTC76, which allows neural networks to be trained from waveforms and their textual transcriptions, without the need for a precise temporal alignment. As we similarly had pairs of sEMG recordings and transcriptions without precise temporal alignment, we also used CTC to train our models. When decoding models at test time, ASR typically uses a beam search77 to approximate the full forward-backward algorithm lattice78 while still incorporating predictions from a language model, biasing decoding towards more likely character and word sequences. Experimentation presented in this work used ‘greedy’ CTC decoding, although beam decoding with language modelling in our decoders would have been possible79.
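For reference, greedy CTC decoding takes the most likely symbol at each frame, collapses consecutive repeats and then drops the blank symbol. The sketch below assumes per-frame scores given as nested lists and a hypothetical alphabet in which index 0 is the blank.

```python
def greedy_ctc_decode(logits, alphabet, blank=0):
    """Greedy CTC decoding: per-frame argmax, collapse repeats, drop blanks."""
    best = [max(range(len(frame)), key=frame.__getitem__) for frame in logits]
    out, prev = [], blank
    for idx in best:
        if idx != blank and idx != prev:
            out.append(alphabet[idx])
        prev = idx
    return "".join(out)


alphabet = "-hi"  # index 0 is the blank symbol
frame_ids = [1, 1, 0, 2, 2, 0, 2]
logits = [[1.0 if j == i else 0.0 for j in range(3)] for i in frame_ids]
decoded = greedy_ctc_decode(logits, alphabet)
```

The blank between the two runs of "i" is what allows a repeated character to be emitted twice.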

In addition to ASR, we drew from an established literature of machine learning approaches for EEG and EMG analysis that explores different signal featurizations and both classical and deep learning architectures. In the case of EMG, more expressive raw sEMG or time-frequency decomposed features (for example, Fourier or wavelet features) have been shown to achieve stronger performance than coarser temporal statistics like RMS power80,81. In the case of EEG, MPF features68 have proven to be a simple and robust featurization achieving state-of-the-art, or near state-of-the-art, performance for many tasks10. In agreement with the literature, we find that MPF features offer clear advantages on the wrist classification task over RMS power (Extended Data Fig. 6). As MPF features are computed across a sliding window of 100 ms, which is comparable to the temporal extent of our discrete gestures, we chose to instead use raw EMG features for the discrete-gestures task.

Both EMG interfaces and BCIs have been approached with a variety of different learning architectures in the literature, including both classical machine learning approaches (for example, random forest, support vector machine) and deep-learning-based approaches81. While the choice of modelling approach is problem dependent, in general, for large datasets, deep learning approaches outperform more classical machine learning techniques82.

Wrist

To train wrist decoders, we trained a neural network to predict instantaneous flexion-extension wrist angle velocities measured by motion capture (see the ‘Wrist corpus’ section above). We consistently held out a fixed set of 10 participants for validation and 22 participants for testing, and varied the number of training participants from 20 to 130.

Architecture

The wrist decoder network architecture took as input our custom MPF features of the sEMG signal. These features were passed through a rotational-invariance module, which comprised a fully connected layer with 512 hidden units and LeakyReLU activation. This module was applied to sEMG channels that were discretely rotated by +1, 0 and −1 channels, and the resulting outputs were then averaged over the rotation process. This output was then passed through two LSTM layers of 512 hidden units each, a LeakyReLU activation, and a final linear layer producing a 1D output. For the smaller network architecture reported in Fig. 2e, we used only 16 hidden units in the initial MLP and LSTM, and only 1 rather than 2 LSTM layers. A forward pass of the larger architecture required 1.2 million floating point operations (FLOPs) per output sample.
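The rotational-invariance module can be illustrated in NumPy as below. The sketch assumes that the 384-dimensional feature vector is laid out as 6 frequency bins × 4 diagonals × 16 channels, so that a channel rotation is a roll along the last axis; the weights are random stand-ins and the function names are hypothetical.

```python
import numpy as np


def leaky_relu(x, slope=0.01):
    return np.where(x > 0, x, slope * x)


def rotation_invariant_layer(feats, weight, bias, n_bins=6, n_diag=4, n_ch=16):
    """Average a shared FC layer over channel rotations of -1, 0 and +1.

    feats: (n_frames, n_bins * n_diag * n_ch) MPF-style features.
    """
    n_frames = feats.shape[0]
    grid = feats.reshape(n_frames, n_bins, n_diag, n_ch)
    outputs = []
    for shift in (-1, 0, 1):
        # Rotate the channel axis, then flatten back to one vector per frame.
        rolled = np.roll(grid, shift, axis=-1).reshape(n_frames, -1)
        outputs.append(leaky_relu(rolled @ weight + bias))
    return np.mean(outputs, axis=0)


rng = np.random.default_rng(0)
feats = rng.standard_normal((50, 384))
weight = 0.05 * rng.standard_normal((384, 512))  # random stand-in weights
bias = np.zeros(512)
hidden = rotation_invariant_layer(feats, weight, bias)
```

Averaging over neighbouring rotations makes the output less sensitive to a one-channel shift in band placement, although it is not exactly invariant to it.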

Optimization

We trained each network with the Adam optimizer for a maximum of 300 epochs, with a learning rate of 1 × 10⁻³. We used an L1 loss function and a batch size of 1,024, with each sample in the batch consisting of 4 (contiguous) seconds of recordings. We evaluated the test performance of the training checkpoint with the lowest L1 loss on the validation data. Training the largest model on the largest training set took 36 s per epoch, for a total of 3 h on a single NVIDIA A10G Tensor Core GPU.

Discrete gestures