Introduction

Synthetic aperture imaging has revolutionized high-resolution observations across diverse fields, from radar and sonar to radio astronomy. By coherently combining signals from multiple small apertures, it can achieve resolution unattainable by single-aperture receivers. These achievements rely fundamentally on precise timing synchronization between received signals from separated apertures1. However, translating synthetic aperture techniques to the optical domain presents significant challenges, because the much shorter wavelength of light demands stringent sub-wavelength synchronization precision. Conventional optical implementations depend heavily on interferometric methods that establish phase synchronization between apertures2,3,4,5,6,7,8,9. These approaches often require intricate optical setups and precise alignment maintenance, limiting their practical scalability outside controlled laboratory settings. Alternative approaches such as Fourier ptychography synthesize larger apertures in reciprocal space without direct interferometric measurements10,11,12,13,14,15,16. However, this method struggles to reconstruct wavefields exhibiting substantial phase variations or 2π wraps17,18, making accurate reconstruction mathematically infeasible22,23. Wavefront sensing techniques offer another non-interferometric route to phase recovery19,20,21,22,23,24,25, yet conventional wavefront sensors measure phase gradients rather than absolute phase values, making them suitable mainly for smoothly varying phase aberrations20,22. Crucially, establishing coherent phase relationships between wavefields recorded by separate receivers remains a fundamental barrier to practical systems.

Here, we introduce multiscale aperture synthesis imager (MASI), an imaging architecture that transforms how synthetic aperture imaging can be implemented at optical wavelengths. MASI builds conceptually on the multiscale approach introduced in gigapixel imaging26,27,28,29, where complex imaging challenges are broken down into tractable sub-problems through parallelism. While previous multiscale approaches focused primarily on expanding the field of view, MASI applies the multiscale paradigm to enhance resolution by coherently synthesizing apertures in real space. To achieve this goal, MASI employs a distinctive measurement-processing scheme in which image data are captured at the diffraction plane, while computational synchronization and coherent fusion are performed at the object plane in real space. At the diffraction plane, MASI uses a distributed array of coded sensors to acquire lensless diffraction data without reference waves or interferometric measurements. The recovered wavefields from individual sensors are then digitally padded and propagated to the object plane for coherent synthesis. The key innovation enabling MASI is a computational phase synchronization method, which iteratively tunes the relative phase offsets between sensors to maximize the total energy in the reconstructed object. This process functions analogously to wavefront shaping techniques in adaptive optics30,31,32,33,34,35, where phase elements are optimized to deliver maximum light to target regions. Unlike current approaches that perform synthesis in reciprocal space and require overlapping measurement regions to establish phase coherence, MASI implements alignment, phase synchronization, and coherent fusion entirely in real space, eliminating the need for overlapping measurement regions between separated apertures. 
This fundamental shift allows distributed sensors to function independently while still contributing to a coherent synthetic aperture that overcomes the diffraction limit of a single receiver. By decoupling physical measurement from computational synthesis, MASI transforms what would be an intractable optical synchronization problem into a manageable computational one, enabling practical, scalable implementations of synthetic aperture imaging at optical wavelengths.

Results

Principle of MASI and computational synchronization

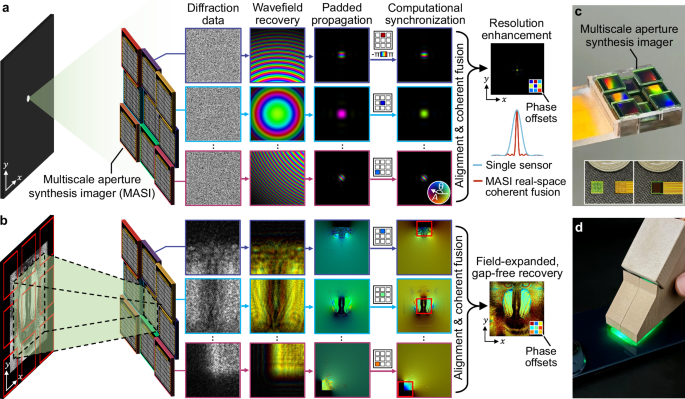

Figure 1 illustrates the operating principle and implementation of MASI. In Fig. 1a, we demonstrate how MASI surpasses the diffraction limit of a single sensor by coherently fusing wavefields in real space, without requiring reference waves or overlapping measurement regions between receivers. The process begins with capturing raw intensity patterns using an array of coded sensors positioned at different diffraction planes. Each sensor incorporates a pre-calibrated coded surface that enables robust recovery of complex wavefield information via ptychographic reconstruction. After recovering the wavefields, we computationally pad them and propagate them to the object plane in real space for alignment and synthesis.

a Resolution enhancement with MASI. Lensless diffraction patterns of a point source are captured by nine coded sensors (first column). These images are processed to recover the complex wavefields (second column), which are then padded and propagated to the object plane (third column). Through computational phase synchronization (fourth column), MASI synchronizes wavefields from different sensors by optimizing their relative phase offsets to maximize energy in the reconstructed object, without requiring any overlapping measurement regions between individual sensors. In the rightmost panel, the nine color blocks in the bottom right inset represent the recovered phase offsets of individual sensors, where the phase values are coded with color hues. b Field of view expansion with MASI. As the padded wavefields are propagated from the coded surface plane to the object plane, diffraction naturally expands the field of view beyond individual sensor dimensions, enabling reconstruction despite physical gaps between sensors. c MASI prototype with a compact array of coded sensors. The insets show the coded image sensor and its integration with a customized ribbon flexible cable. d Reflection-mode configuration, where a laser beam illuminates the object surface at ~45 degrees.

An important step enabling MASI’s performance is to properly synchronize the wavefields from individual sensors that operate independently without overlapping measurement regions. As shown in the fourth column panels of Fig. 1a, we address this challenge by implementing computational phase synchronization between individual sensors. We designate one of the sensors as a reference and computationally determine the phase offsets for all remaining sensors through an iterative optimization process (Methods). The color blocks in the fourth column panels of Fig. 1a represent these recovered phase offsets, with different color hues indicating different phase values. By maximizing the energy concentration in the reconstructed image, this approach ensures constructive interference between all sensor contributions despite their physical separation, eliminating the need for overlapping measurements or reference waves that constrain conventional techniques. With proper synchronization, the real-space coherent fusion in MASI significantly improves resolution beyond what a single sensor can achieve, as shown in the rightmost panel of Fig. 1a (also refer to Supplementary Figs. S1-S2 and Note 1). In contrast with conventional approaches that perform aperture synthesis in reciprocal space, MASI operates entirely through real-space alignment, synchronization, and coherent fusion, effectively transforming a distributed array of independent small-aperture sensors into a single large virtual aperture.

An enabling factor for MASI’s multiscale strategy is the ability to accurately recover wavefield information using individual sensors. As demonstrated in Supplementary Fig. S3, conventional phase retrieval methods like Fourier ptychography suffer from a non-uniform phase transfer function36 that drops to near-zero values at low spatial frequencies, making them blind to slowly varying phase components, such as linear phase ramps and step transitions18. In contrast, MASI’s individual coded sensor successfully recovers both the step phase transition and the linear phase gradient, differing from the ground truth only by a constant offset. This robust performance stems from the coded surface modulation, which converts phase variations, including low-frequency aberrations, into detectable intensity variations21,43. For example, a linear phase ramp is converted into a spatial shift of the modulated pattern, while other slowly varying phase variations manifest as distortions in the modulated pattern. Supplementary Fig. S4 illustrates how Fourier ptychography fails when attempting synthetic aperture imaging of complex objects with multiple phase wraps, showing that without proper phase recovery at the individual sensor level, conventional methods cannot achieve successful synthetic aperture imaging.
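The claim that the coded surface turns a phase ramp into a pattern shift can be checked with a short 1D simulation. This is a minimal sketch rather than the authors' code: the binary code, the 100 µm code-to-pixel distance d, the 1 µm grid, and the 532 nm wavelength are hypothetical values, free-space propagation is modeled with the angular-spectrum method, and `propagate_1d` and `circular_lag` are illustrative helper names. Without the code, a tilted plane wave produces uniform intensity; with the code, the same tilt shows up as a lateral shift of roughly d·tanθ.

```python
import numpy as np

def propagate_1d(field, dx, wavelength, z):
    """Angular-spectrum propagation of a periodic 1D field over distance z."""
    fx = np.fft.fftfreq(field.size, d=dx)
    arg = 1.0 / wavelength**2 - fx**2
    transfer = np.where(arg > 0, np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))), 0)
    return np.fft.ifft(np.fft.fft(field) * transfer)

def circular_lag(moved, reference):
    """Integer lag s such that moved(x) best matches reference(x - s) (circular correlation)."""
    a, b = moved - moved.mean(), reference - reference.mean()
    corr = np.fft.ifft(np.fft.fft(a) * np.conj(np.fft.fft(b))).real
    idx = int(np.argmax(corr))
    return idx if idx < a.size // 2 else idx - a.size

n, dx, wavelength, d = 1024, 1.0, 0.532, 100.0       # µm; hypothetical values
x = np.arange(n) * dx
rng = np.random.default_rng(0)
coded = np.repeat(rng.integers(0, 2, n // 4), 4).astype(float)  # binary code, 4 µm features

ramp_freq = 96 / (n * dx)                             # cycles/µm, exactly on the FFT grid
ramp = np.exp(2j * np.pi * ramp_freq * x)             # linear phase ramp (tilted wave)

plain = np.abs(propagate_1d(ramp, dx, wavelength, d)) ** 2           # no coded surface
ref = np.abs(propagate_1d(coded, dx, wavelength, d)) ** 2            # code, flat phase
tilted = np.abs(propagate_1d(coded * ramp, dx, wavelength, d)) ** 2  # code, phase ramp

sin_theta = wavelength * ramp_freq
expected_shift = d * sin_theta / np.sqrt(1 - sin_theta**2)           # d * tan(theta)
measured_shift = circular_lag(tilted, ref) * dx
print(f"intensity std without code: {plain.std():.1e}")
print(f"shift: expected {expected_shift:.2f} µm, measured {measured_shift:.0f} µm")
```

Because the shift scales linearly with the local phase gradient, a slowly varying phase becomes a measurable distortion of the coded pattern, whereas the uncoded intensity carries no trace of it.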

Figure 1b demonstrates MASI’s field expansion capability. As the recovered wavefields from individual sensors are digitally padded and propagated back to the object plane, diffraction naturally expands each sensor’s field of view beyond its physical dimensions, effectively eliminating gaps in the final reconstruction (rightmost panel of Fig. 1b; also refer to Supplementary Figs. S5-S6 and Note 1). Figure 1c shows the MASI sensor array, which consists of nine coded sensors arranged in a grid configuration. This multiscale architecture, which breaks the imaging challenge into parallel, independent sub-problems, enables each sensor to operate without overlapping with the others. During operation, piezoelectric stages introduce sub-pixel shifts (~1–2 μm) to individual sensors for ptychogram acquisition37, enabling complex wavefield recovery and pixel super-resolution reconstruction38,39 from intensity-only diffraction measurements. These shifts are orders of magnitude smaller than the millimeter-scale gaps between adjacent sensors, ensuring completely independent operation that could scale to long-baseline optical imaging, similar to distributed telescope arrays40 in radio astronomy. In MASI, sensors can be positioned on surfaces at different depths and spatial locations without requiring precise alignment. This tolerance dramatically simplifies system implementation while maintaining the ability to synthesize a larger virtual aperture. The physical prototype in Fig. 1d demonstrates the system’s compact form factor and practical deployment in a reflection configuration, where the sensor array lies in a plane tilted by 45 degrees.

The imaging model of MASI can be formulated as follows. We first denote \(O(x,y)\) as the object exit wavefield in real space. For a 3D object with a non-planar shape, \(O(x,y)\) refers to its 2D diffracted field on a plane above the object; from this 2D exit wavefield, one can digitally propagate to any axial plane and locate the best in-focus position to extract the 3D shape. The wavefield arriving at the sth coded sensor, located a distance \({h}_{s}\) from the object plane, can then be written as:

$$W_s(x,y)=O(x,y) * {psf}_{free}(h_s) \tag{1}$$

where \( * \) denotes convolution, and \({{psf}}_{{free}}({h}_{s})\) represents the free-space propagation kernel for a distance \({h}_{s}\). Because each sensor is placed at a laterally shifted position (\({x}_{s}\), \({y}_{s}\)) and has a finite size, we extract a portion of \({W}_{s}\left(x,y\right)\) that falls onto the sth coded sensor with m rows and n columns:

$$W_s^{crop}(1:m,\,1:n)=W_s\left(x_s-\frac{m}{2}:x_s+\frac{m}{2},\;y_s-\frac{n}{2}:y_s+\frac{n}{2}\right) \tag{2}$$

The intensity measurement acquired by the sth coded sensor can be written as:

$$I_{s,j}(x-x_j,\,y-y_j)=\left|\left\{W_s^{crop}(x,y)\cdot {CS}_s(x-x_j,\,y-y_j)\right\} * {psf}_{free}(d)\right|^2 \tag{3}$$

where \({{CS}}_{s}\left(x,y\right)\) represents the coded surface of the sth coded image sensor, and ‘\({ * {psf}}_{{free}}(d)\)’ models the free-space propagation over a distance d between the coded surface and the sensor’s pixel array. Here, the subscript j in \({I}_{s,j}\left(x,y\right)\) represents the jth measurement obtained by introducing a small sub-pixel shift (\({x}_{j}\), \({y}_{j}\)) of the coded sensor using an integrated piezo actuator (Methods). Physically, this process encompasses two main steps. The wavefield \({W}_{s}^{{crop}}\) is first modulated by the known coding pattern \({{CS}}_{s}\) upon arriving at the coded surface plane, and then the resulting wavefield propagates a short distance d before reaching the detector.
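Equations (1)–(3) translate directly into a forward simulator for one sensor. The sketch below is illustrative only and rests on assumptions not stated in the text: \({psf}_{free}\) is implemented with angular-spectrum propagation, the piezo shifts \((x_j, y_j)\) are modeled as integer steps on a fine simulation grid (the experiment uses sub-pixel shifts relative to the detector pixels), and the detector's pixel integration is omitted. The helper `simulate_measurement`, the object, the code, and all distances are hypothetical.

```python
import numpy as np

def propagate(field, dx, wavelength, z):
    """Angular-spectrum model of convolution with psf_free(z); evanescent waves are dropped."""
    ny, nx = field.shape
    fy = np.fft.fftfreq(ny, d=dx)[:, None]
    fx = np.fft.fftfreq(nx, d=dx)[None, :]
    arg = 1.0 / wavelength**2 - fx**2 - fy**2
    transfer = np.where(arg > 0, np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))), 0)
    return np.fft.ifft2(np.fft.fft2(field) * transfer)

def simulate_measurement(obj, dx, wavelength, h_s, center, shape, coded, shift, d):
    """Intensity I_{s,j} for sensor s (center, shape, h_s) and piezo step j (shift)."""
    w_s = propagate(obj, dx, wavelength, h_s)                             # Eq. (1)
    (r0, c0), (m, n) = center, shape
    w_crop = w_s[r0 - m // 2:r0 + m // 2, c0 - n // 2:c0 + n // 2]        # Eq. (2)
    cs_shifted = np.roll(coded, shift, axis=(0, 1))                       # CS_s shifted by step j
    return np.abs(propagate(w_crop * cs_shifted, dx, wavelength, d)) ** 2  # Eq. (3)

rng = np.random.default_rng(1)
dx, wavelength = 0.5, 0.532                          # µm (hypothetical)
yy, xx = np.mgrid[:256, :256] * dx
phase = 2.0 * np.exp(-((xx - 64) ** 2 + (yy - 64) ** 2) / (2 * 15.0**2))
obj = np.exp(1j * phase)                             # smooth phase object O(x, y)
coded = rng.integers(0, 2, (64, 64)).astype(float)   # hypothetical binary coded surface
frames = [simulate_measurement(obj, dx, wavelength, h_s=50.0, center=(128, 160),
                               shape=(64, 64), coded=coded, shift=(j, 0), d=10.0)
          for j in range(3)]
print(len(frames), frames[0].shape)                  # 3 (64, 64)
```

The set of frames obtained for different shifts j is the ptychogram from which the cropped wavefield is later recovered.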

With a set of acquired intensity diffraction patterns \(\{{I}_{s,j}\}\), the goal of MASI is to recover the object wavefield \(O(x,y)\) at a resolution beyond that achievable by any single detector. Reconstruction proceeds in two main stages. First, the cropped wavefield \({W}_{s}^{{crop}}(x,y)\) is recovered from measurements \(\{{I}_{s,j}\}\) using the ptychographic phase-retrieval algorithm41,42. Second, the recovered wavefield is padded to its original un-cropped size, forming \({W}_{s}^{{pad}}\left(x,y\right)\), and each padded wavefield is numerically propagated back to the object plane in real space. The accurate positioning of each coded sensor (\({x}_{s}\), \({y}_{s}\), \({h}_{s}\)) is critical for proper alignment and is determined through a one-time calibration experiment (Methods and Supplementary Note 2). Using these calibrated parameters, individual object-plane wavefields are aligned and coherently fused into a single high-resolution reconstruction through computational wavefield synchronization:

$$O_{recover}(x,y)=\sum_s\left[\left(e^{i\varphi_s}\cdot W_s^{pad}(x,y)\right) * {psf}_{free}(-h_s)\right] \tag{4}$$

where \({\varphi }_{s}\) is the phase offset for the sth coded sensor. Supplementary Note 3 details our iterative computational phase compensation method that adjusts the unknown \({\{{\varphi }_{s}}\}\) to maximize the integrated energy of the fused reconstruction \({O}_{{recover}}\). Supplementary Fig. S7 demonstrates the principle underlying this approach, showing that for objects with both brightfield and darkfield contrast, the total synthesized intensity consistently reaches its maximum when all sensors have their correct phase offsets. This behavior enables our coordinate descent algorithm in Supplementary Fig. S8, which sequentially optimizes each sensor’s phase while maintaining computational efficiency. The effectiveness of this approach is validated in a simulation study in Supplementary Fig. S9, which shows that our method successfully recovers high-fidelity reconstructions from severely distorted initial states, achieving near-zero errors compared to ground truth objects. The proposed computational phase synchronization approach parallels wavefront shaping techniques in adaptive optics30,31,32,33,34, ensuring constructive interference and maximum energy recovery. Alternative optimization metrics, such as darkfield minimization or contrast maximization, can also be employed depending on the imaging context. The real-space coherent synchronization in Eq. (4) yields a resolution enhancement unattainable by any single receiver alone, effectively synthesizing a larger virtual aperture. By decoupling the phase retrieval and sensor geometry requirements, MASI accommodates flexible sensor placements with minimal alignment constraints, realizing a practical platform for high-resolution, scalable optical synthetic aperture imaging.
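Supplementary Note 3 is not reproduced here, so the following is only a minimal sketch of energy-maximizing synchronization under stated assumptions. Because free-space propagation is linear, applying \(e^{i\varphi_s}\) before back-propagation in Eq. (4) is equivalent to applying it afterward, so the sketch works directly on back-propagated object-plane fields \(U_s\). With all other sensors fixed, the energy \(\sum|e^{i\varphi_k}U_k + R_k|^2\) depends on \(\varphi_k\) only through \(2\,\mathrm{Re}(e^{i\varphi_k}\sum U_k R_k^*)\), which is maximized at \(\varphi_k = \arg\sum U_k^* R_k\). Sweeping this closed-form update over sensors is a coordinate-ascent scheme of our own choosing, and `fuse` and `synchronize` are hypothetical names. The toy check deliberately assumes every sensor sees the same pattern up to a gain and an unknown phase.

```python
import numpy as np

def fuse(fields, phases):
    """Coherent sum over sensors of exp(i*phi_s) * U_s, i.e. Eq. (4) after back-propagation."""
    return np.tensordot(np.exp(1j * phases), fields, axes=1)

def synchronize(fields, ref=0, max_sweeps=500, tol=1e-12):
    """Coordinate ascent on the fused energy sum|O_recover|^2.

    fields: complex array (S, ny, nx) of object-plane wavefields U_s.
    The reference sensor keeps phi_ref = 0, which fixes the irrelevant global phase.
    """
    phases = np.zeros(fields.shape[0])
    for _ in range(max_sweeps):
        previous = phases.copy()
        for k in range(fields.shape[0]):
            if k == ref:
                continue
            rest = fuse(fields, phases) - np.exp(1j * phases[k]) * fields[k]
            # Energy = const + 2*Re(exp(i*phi_k) * conj(vdot(U_k, rest))), maximal at this phi_k
            phases[k] = np.angle(np.vdot(fields[k], rest))
        change = np.abs(np.angle(np.exp(1j * (phases - previous))))
        if change.max() < tol:
            break
    return phases

# Toy check: sensor s sees weight_s * exp(-i*theta_s) * pattern, with theta_s unknown.
rng = np.random.default_rng(2)
pattern = rng.normal(size=(32, 32)) + 1j * rng.normal(size=(32, 32))
true_offsets = np.linspace(0.3, 5.0, 9)
weights = 1.0 + 0.1 * np.arange(9)
fields = (weights * np.exp(-1j * true_offsets))[:, None, None] * pattern
phases = synchronize(fields)
error = np.angle(np.exp(1j * (phases - (true_offsets - true_offsets[0]))))
energy_before = np.sum(np.abs(fuse(fields, np.zeros(9))) ** 2)
energy_after = np.sum(np.abs(fuse(fields, phases)) ** 2)
print(np.abs(error).max() < 1e-6, energy_after > energy_before)  # True True
```

Each update can only increase the fused energy, so the sweep is monotone; the recovered offsets are relative to the reference sensor, matching the convention of designating one sensor as the reference.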

Experimental characterization

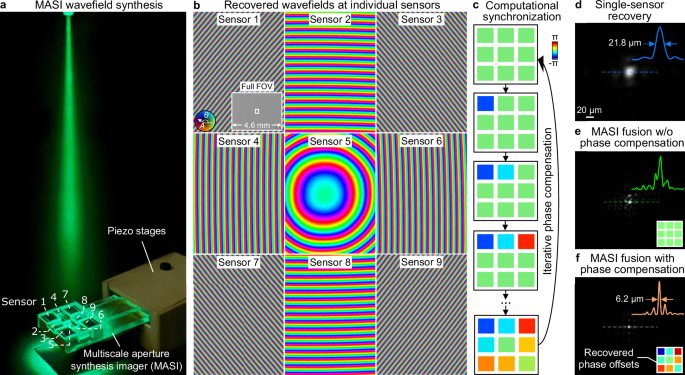

To evaluate and characterize the performance of MASI, we conducted experimental validation under both transmission and reflection configurations. In Fig. 2, we employed a transmission configuration using point-like emitters as test objects. As shown in Fig. 2a, the MASI prototype was positioned to capture diffraction patterns from a single-mode fiber-coupled laser, with piezo actuators introducing small lateral shifts of the sensor array (~1 µm per step) to provide the measurement diversity needed for ptychographic reconstruction. The point-source object served two purposes: its reconstruction directly reveals the point spread function, validating resolution improvements, and it enabled calibration of each sensor’s relative position and distance parameters (\({x}_{s}\), \({y}_{s}\), \({h}_{s}\)). Figure 2b presents zoomed-in views of the complex wavefields reconstructed by the individual MASI sensors; the insets of Fig. 2b (labeled ‘Full FOV’) show the full fields of view of these reconstructions. These recovered wavefields exhibit distinct fringe patterns corresponding to their respective positions relative to the point object.

a Schematic of using MASI in a transmission configuration. The numbered coded sensors (1–9) are positioned on an integrated piezo stage that introduces controlled sub-pixel shifts. b Complex wavefields recovered from individual sensors of MASI. The color map presents the phase information from -π to π. The wavefields shown in the main panels are zoomed-in views of the area indicated by the white box in the ‘Full FOV’ (full field of view) inset. c The iterative phase compensation process, where phase offsets of individual sensors are digitally tuned to maximize the integrated intensity of the object. The nine color blocks represent the recovered phase offsets of individual sensors. d The recovered point source using Sensor 5 alone. The limited aperture of a single sensor results in a broadened point source reconstruction. e Result of coherent synthesis without proper phase offset compensation. f MASI coherent synthesis with optimized phase offsets obtained from the computational phase compensation process. The synthesized aperture provides substantially improved resolution. In addition to validating resolution gains, this point-source experiment also provides calibration data (\({x}_{s}\), \({y}_{s}\), \({h}_{s}\)) for each sensor, enabling precise alignment in subsequent imaging tasks. Supplementary Movie 1 visualizes the iterative phase compensation process for computational wavefield synchronization.

To coherently synthesize independent wavefields from different sensors, we implemented the computational phase compensation procedure in Fig. 2c, which iteratively optimizes each sensor’s global phase offset to maximize the integrated intensity at the object plane (Supplementary Note 3). The nine color blocks here represent the recovered phase offsets for the nine coded sensors, with different color hues indicating different phase values. In the synchronization process, we also employed field-padded propagation to extend the computational window beyond each sensor’s physical dimensions for robust real-space alignment. Figures 2d–f demonstrate the effectiveness of MASI implementation. A single sensor’s reconstruction in Fig. 2d shows the point spread function broadening due to its aperture’s diffraction limit. Unsynchronized multi-sensor fusion in Fig. 2e yields limited improvement. In contrast, MASI’s computational synchronization in Fig. 2f substantially improves resolution. The nine color blocks in the insets of Figs. 2e, f show the phase offsets before and after optimization. These results confirm that MASI’s computational synchronization effectively extends imaging capabilities beyond individual sensor limitations. Supplementary Movie 1 further illuminates the iterative phase synchronization process shown in Fig. 2c.

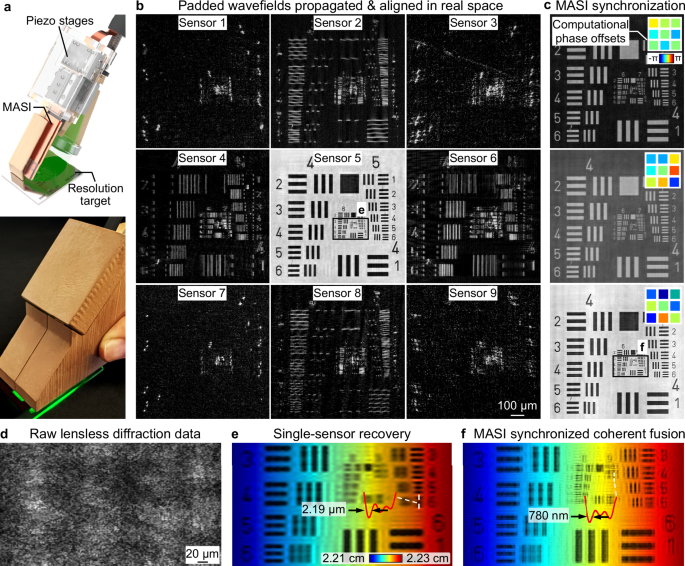

In Fig. 3, we validated MASI in a reflection-mode configuration using a standard resolution test chart positioned at ~45 degrees relative to the sensor array. The experimental setup is illustrated in Fig. 3a, where a laser beam illuminates the resolution target and MASI captures the diffracted wavefield. Figure 3b shows the padded, propagated wavefields from all nine sensors at the object plane, each capturing complementary spatial information of the resolution target. Figure 3c shows the computational phase synchronization process, visualized through different phase offset combinations in the top right insets. One can tune the phase offsets of different sensors to generate additional contrast, such as the darkfield image in the top panel of Fig. 3c. Comparing the raw diffraction data (Fig. 3d) with single-sensor recovery (Fig. 3e) and synchronized MASI coherent fusion (Fig. 3f) reveals the dramatic resolution enhancement achieved through computational wavefield synthesis. The color variation in Figs. 3e, f represents depth information ranging from 2.21 to 2.23 cm, resulting from MASI’s tilted configuration relative to the resolution target. To accurately handle the tilted configuration in this experiment, we implemented a tilt propagation method as demonstrated in Supplementary Fig. S10 (Methods).

a Schematic of the MASI prototype capturing reflected wavefields from a standard resolution test chart. b Padded wavefields from all nine sensors propagated to the object plane, revealing distinct information captured by each sensor. Each sensor contributes unique high-frequency details of the resolution target, demonstrating the distributed sensing capability of MASI. c Computational phase synchronization process showing different visualization contrasts achieved by varying phase offsets. The 3×3 color blocks in each inset represent the phase values applied to individual sensors, with different hues indicating different phase values. d Raw lensless diffraction data of a zoomed-in region of the resolution target (same region highlighted in the Sensor-5 panel of b). e Single-sensor reconstruction with the raw data in d, resolving linewidths of ~2.19 µm. The color map represents depth information (2.21–2.23 cm) resulting from MASI’s tilted configuration relative to the resolution target. f MASI coherent fusion after computational synchronization, resolving 780 nm linewidths at ~2 cm ultralong working distance.

As shown in Fig. 3e, f, quantitative analysis through line traces confirms that MASI resolves features down to 780 nm at a ~2 cm working distance, whereas single-sensor reconstruction is limited to approximately 2.19 µm resolution. This combination of sub-micron resolution and centimeter-scale working distance represents an advancement over conventional imaging approaches, which typically require working distances of one millimeter or less to resolve features at this scale. In Supplementary Fig. S11, we also show different sensor configurations and the corresponding wavefield reconstructions, demonstrating how different sensor combinations affect the resolution. Notably, coherently fusing sensors arranged in complementary positions improves resolution along the corresponding spatial directions, enabling resolution enhancement tailored to specific imaging applications.

Computational field expansion

Unlike conventional imaging systems, which are often constrained by lens apertures or sensor sizes, MASI leverages light diffraction to recover information from regions outside the nominal detector footprint. This field expansion can be understood as the reciprocal of wavefield sensing at the detector. When our coded sensor captures diffracted light, it recovers wavefronts arriving from a range of angles, each carrying information about different regions of the object. These angular components contain spatial information extending beyond the physical sensor boundaries. During computational reconstruction, we perform the conjugate operation by back-propagating these captured angular components to the object plane. By padding the recovered wavefield at the detector plane before back-propagation, we allow these angular components to retrace their propagation paths to regions outside the sensor’s direct field of view. This process is fundamentally governed by the properties of wave propagation: the same waves that carry information from extended object regions to our small detector can be computationally traced back to reveal those extended regions. The field expansion thus arises naturally as the padded recovered wavefields are numerically propagated from the diffraction plane to the object plane (Fig. 1b), reconstructing parts of the object not directly above the sensor.
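The padding-and-back-propagation step can be reproduced in a 1D toy model. This is a minimal sketch with hypothetical parameters: two Gaussian point-like emitters (σ = 1.5 µm), one above a 200 µm sensor and one 120 µm off-center, outside the footprint; angular-spectrum propagation over h = 1 mm at λ = 0.5 µm; and an ideally recovered complex wavefield on the sensor. Cropping to the sensor and zero-padding back to the full grid is implemented as a mask.

```python
import numpy as np

def propagate_1d(field, dx, wavelength, z):
    """Angular-spectrum propagation of a periodic 1D field over distance z (z < 0: back-propagation)."""
    fx = np.fft.fftfreq(field.size, d=dx)
    arg = 1.0 / wavelength**2 - fx**2
    transfer = np.where(arg > 0, np.exp(2j * np.pi * z * np.sqrt(np.maximum(arg, 0.0))), 0)
    return np.fft.ifft(np.fft.fft(field) * transfer)

n, dx, wavelength, h = 4096, 1.0, 0.5, 1000.0       # µm; hypothetical toy parameters
x = (np.arange(n) - n // 2) * dx
emitters = (0.0, 120.0)                              # one above the sensor, one outside it
obj = sum(np.exp(-((x - x0) ** 2) / (2 * 1.5**2)) for x0 in emitters).astype(complex)

sensor = np.abs(x) <= 100.0                          # 200 µm sensor footprint
w_padded = propagate_1d(obj, dx, wavelength, h) * sensor  # cropped field, zero-padded to full grid
recovered = np.abs(propagate_1d(w_padded, dx, wavelength, -h)) ** 2

outside = (x > 105) & (x < 200)                      # region not directly above the sensor
x_peak = x[outside][np.argmax(recovered[outside])]
print(f"brightest point outside the sensor footprint: x = {x_peak:.0f} µm")
```

Only the part of the off-axis emitter's diffraction cone that lands on the sensor is retained, so its reconstruction is broader than that of the on-axis emitter, but it is still localized at the correct position outside the physical detector.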

We also note that both the original and extended wavefield regions maintain the same spatial frequency bandwidth. For the wavefield directly above the coded sensor, the recovered spatial frequency spectrum is centered at baseband including the zero-order component. As we computationally extend to regions beyond the sensor through padding and propagation, the bandwidth remains constant but shifts to different spatial frequencies based on the angular relationship between the extended location and sensor position. This principle is demonstrated in Fig. 3, where Sensor 5 captures baseband frequencies for the resolution target directly above it, while peripheral sensors capture high-frequency bands of the same region through their extended fields. This distributed frequency sampling across multiple sensors is precisely what enables super-resolution in MASI.
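The shift of the recorded band can be estimated with simple ray geometry, under assumptions not stated in the text: a thin object, normal-incidence plane-wave illumination, and a 1D cut through a sensor of width D at distance h. Light leaving an object point that sits Δ away from the sensor center reaches sensor position s at \(\sin\theta = (s-\Delta)/\sqrt{(s-\Delta)^2+h^2}\), so the object spatial frequencies recorded for that point span \(\sin\theta/\lambda\) over the sensor. `local_band` is a hypothetical helper; the example borrows the sensor width, wavelength, and working distance of the fingerprint experiment (4.6 mm, 532 nm, 19.5 mm). A positive offset maps to negative frequencies in this sign convention.

```python
import math

def local_band(offset, sensor_width, distance, wavelength):
    """Band of object spatial frequencies (cycles per length unit) recorded by a sensor
    for an object point laterally offset from the sensor center (1D ray-geometry model)."""
    def freq(lateral):
        return lateral / math.hypot(lateral, distance) / wavelength  # sin(theta) / lambda
    return freq(-offset - sensor_width / 2), freq(-offset + sensor_width / 2)

D, h, lam = 4.6, 19.5, 0.532e-3   # mm: sensor width, working distance, 532 nm wavelength
paraxial_width = D / (lam * h)    # ~443 cycles/mm, independent of the offset
for offset in (0.0, 3.0, 6.0):    # mm from the sensor center
    lo, hi = local_band(offset, D, h, lam)
    print(f"offset {offset:.0f} mm: band {lo:7.1f} to {hi:6.1f} cycles/mm, "
          f"center {(lo + hi) / 2:7.1f}, width {hi - lo:.1f}")
```

In the paraxial limit the band width equals D/(λh) at every offset, consistent with the constant-bandwidth statement above; the exact ray geometry narrows the band somewhat at large offsets (about 386 versus 440 cycles/mm at a 6 mm offset here), while the band center moves steadily away from baseband.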

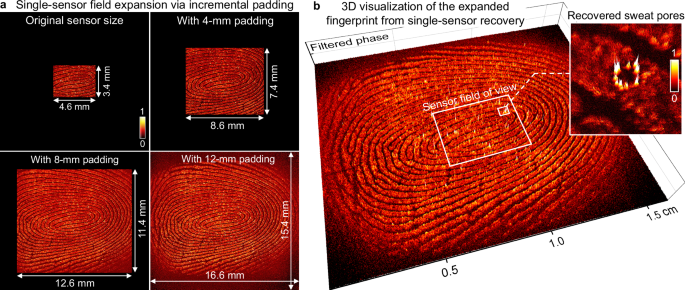

Figure 4a demonstrates the field expansion capability when imaging a fingerprint using a single sensor in MASI. Using 532 nm laser illumination at a 19.5 mm working distance, a single 4.6 × 3.4 mm sensor captures only a small portion of the fingerprint’s diffraction pattern. By padding the reconstructed complex wavefield and propagating it to the object plane, we expand the imaging area from the original 4.6 × 3.4 mm sensor size to 16.6 × 15.4 mm (with 12 mm of padding added along each dimension). Supplementary Movie 2 visualizes the field expansion process. To enhance visualization of surface features across this expanded field, the recovered phase maps undergo background subtraction, as illustrated in Supplementary Fig. S11a, which improves the contrast of the fine features. Figure 4b shows a 3D visualization of the expanded fingerprint phase map covering an area of 16.6 × 15.4 mm, with the inset highlighting resolved sweat pores. Supplementary Fig. S12 further demonstrates the versatility of this approach across different materials including plastic, wood, and polymer surfaces, each revealing distinct micro-textural details. This capability demonstrates how MASI’s computational approach transforms a small physical detector into a much larger virtual imaging system with enhanced phase contrast visualization.

a To expand the imaging field of view in MASI, we computationally pad the recovered wavefield at the diffraction plane and propagate it to the object plane. With different padding areas, the reconstruction region grows from the original 4.6 × 3.4 mm sensor size to 16.6 × 15.4 mm, allowing a much broader view of the fingerprint to be revealed without additional data acquisition. The color scale represents phase values from 0 to 1. b 3D visualization of the fingerprint across the expanded field, highlighting the detailed fingerprint ridges and the location of individual sweat pores. Supplementary Movie 2 visualizes the field expansion process via incremental padding.

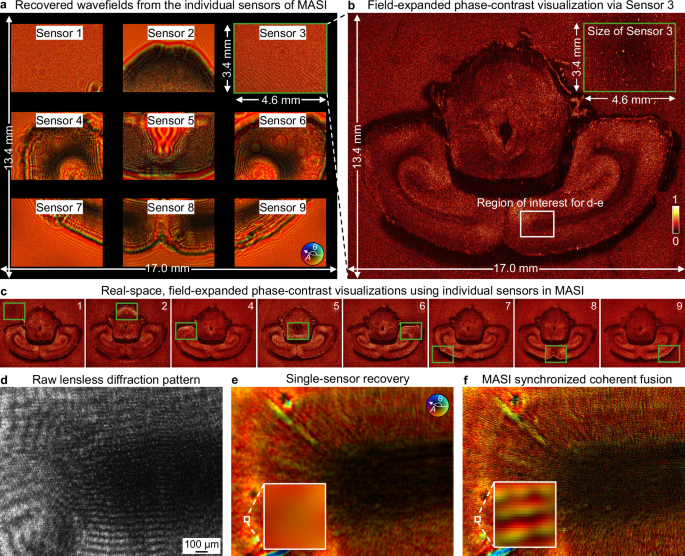

Figure 5 further validates MASI’s capability for high-resolution, large-area phase-contrast imaging of a mouse brain section. Figure 5a displays the recovered complex wavefields from all 9 individual sensors in the MASI device, each capturing a 4.6 × 3.4 mm region. Figure 5b demonstrates the field-expanded recovery using just a single sensor (Sensor 3), where computational padding and propagation transform a small 4.6 × 3.4 mm sensor area into a comprehensive 17.0 × 13.4 mm phase-contrast visualization of the entire brain section. The recovered field of view is much larger than the physical sensor area highlighted by the green box in Fig. 5b. The speckle-like features visible outside the brain tissue arise from air bubbles in the mounting medium, a sample preparation artifact common when mounting large tissue sections. Figure 5c shows similar field-expanded recoveries from each individual sensor, with green boxes indicating their physical dimensions and locations. Figures 5d–f present a detailed comparison between MASI lensless raw data (Fig. 5d), single-sensor recovery (Fig. 5e), and MASI coherent synchronization of all sensors (Fig. 5f) for a region of interest in the brain section. The MASI coherent synchronization resolves the myelinated axon structure radiating outward from the central ventricle. This computational field expansion, combined with MASI’s ability to operate without lenses at long working distances, enables a paradigm for wide-field, high-resolution imaging that overcomes traditional optical system limitations43.

a Complex wavefields recovered at each of the coded sensors in a lensless transmission setup. Each individual sensor captures only a fraction of the object’s diffracted field. The dark regions represent the gaps between individual sensors. b Real-space phase image of the brain section after propagation of Sensor 3’s recovered wavefield to the object plane, showing that even a single sensor image expands to cover the entire brain section. The color scale represents phase values from 0 to 1. c Field-expanded recoveries from individual sensors in MASI. Each recovered phase image highlights distinct aspects of the same sample. d–f Comparative analysis of the region of interest (white box in b): raw lensless diffraction pattern in gray scale (d), single-sensor recovery (e), and MASI coherent synchronization of all sensors (f). The brightness of the recovered wavefields indicates amplitude (A), and hue indicates phase (θ), as defined by the color wheel. The coherent synchronization clearly resolves radiating myelinated axon bundles extending from the ventricle, while the single-sensor recovery shows limited resolution of these neural pathways, as highlighted in the insets.

The reported field expansion capability presents intriguing applications in data security and steganography. Since features outside the physical sensor area become visible only after proper computational padding and back-propagation, MASI creates a natural encryption mechanism for information hiding44. For example, a document could be designed so that critical information, such as authentication markers, security codes, or confidential data, is positioned beyond the sensor’s direct field of view. In the captured raw intensity image, this information is present only as diffracted light spread across the recorded pattern and does not appear as any recognizable feature, creating an inherent security layer in which the very existence of hidden content is concealed. This is demonstrated in Fig. 5, where Sensor 3’s raw data and direct wavefield recovery give no indication that the computational reconstruction would reveal an entire brain section. Only an authorized party with knowledge of the correct wavefield recovery parameters, propagation distances, coded surface pattern, and padding specifications can computationally reconstruct and reveal this hidden content. This physics-based security approach offers advantages over conventional digital encryption by leaving no visible evidence that protected information exists in the first place.

Computational 3D measurement and view synthesis

Conventional lens-based 3D imaging typically relies on structured illumination techniques, such as fringe projection, speckle pattern analysis, or multiple-angle acquisitions45,46,47. Similarly, perspective view synthesis often requires light field cameras with microlens arrays or multi-camera setups to capture different viewpoints46,47,48. Here, we demonstrate that MASI enables lensless 3D imaging, shape measurement, and flexible perspective view synthesis through computational wavefield manipulation. Unlike the conventional lens-based approaches, MASI extracts three-dimensional information and generates multiple viewpoints through pure computational processing of the recovered complex wavefield.

MASI’s 3D imaging capability leverages the fact that a complex wavefield, which carries both amplitude and phase, contains the complete optical information of a 3D scene and can therefore be digitally refocused to any axial plane. For 3D shape measurements, we digitally propagate the recovered wavefield to multiple axial planes throughout the volume of interest. At each lateral position, we evaluate a focus metric that quantifies local sharpness at every axial plane49. By identifying, for each lateral point, the axial position at which this gradient-based focus metric peaks, we create a depth map in which each pixel’s value represents the axial coordinate of best focus. This approach transforms wavefield information into height measurements, because object features at different heights come into focus at different propagation distances. The resulting 3D map reveals microscale surface variations across the entire field of view.
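The digital refocusing step can be illustrated with the angular spectrum method, a standard free-space propagator. This is a minimal sketch under stated assumptions: the source does not specify which propagator MASI uses, and the function name `propagate_stack`, the square pixel pitch `dx`, and the example parameters are illustrative.

```python
import numpy as np

def propagate_stack(field, wavelength, dx, z_list):
    """Angular-spectrum propagation of a 2D complex field to each distance in z_list.

    Returns a complex array of shape (len(z_list), ny, nx).
    Evanescent components (k_x^2 + k_y^2 > k^2) are discarded.
    """
    ny, nx = field.shape
    k = 2 * np.pi / wavelength
    # Angular spatial frequencies in FFT order, assuming square pixels of pitch dx
    kx = 2 * np.pi * np.fft.fftfreq(nx, d=dx)
    ky = 2 * np.pi * np.fft.fftfreq(ny, d=dx)
    KX, KY = np.meshgrid(kx, ky)               # both shaped (ny, nx)
    kz2 = k**2 - KX**2 - KY**2
    prop = kz2 > 0                             # propagating (non-evanescent) components
    kz = np.sqrt(np.where(prop, kz2, 0.0))
    spectrum = np.fft.fft2(field)
    stack = np.empty((len(z_list), ny, nx), dtype=complex)
    for i, z in enumerate(z_list):
        # Free-space transfer function exp(i k_z z); zero for evanescent waves
        transfer = np.where(prop, np.exp(1j * kz * z), 0.0)
        stack[i] = np.fft.ifft2(spectrum * transfer)
    return stack
```

Taking `np.abs` of the returned stack gives the amplitude images on which a per-pixel focus metric is then evaluated.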

For perspective view synthesis, MASI takes a different approach from conventional light field methods. We first Fourier transform the reconstructed object-plane wavefield from real space into reciprocal space, where different angular components of light are spatially separated. By applying a filtering window to select specific angular components and inverse transforming back to real space, we synthesize images corresponding to different viewing angles. Shifting this filtering window changes the observer’s perspective, allowing virtual ‘tilting’ around the object for visualization.

Figure 6 demonstrates MASI’s 3D measurement capabilities. In Fig. 6a, we show the recovered wavefield of a bullet cartridge using MASI. The recovered complex wavefield contains rich phase information that encodes the object’s 3D topography. Figure 6b shows an all-in-focus image produced by our digital refocusing approach that combines information from multiple depth planes into a single visualization. This image provides both sharp features and depth information. Figure 6c shows different viewing angles generated by shifting the filtering window position in reciprocal space, revealing surface features that might be obscured from a single perspective. Figure 6d shows the recovered 3D height map revealing the firing pin impression and microscopic surface features, capturing critical ballistic evidence that could link a specific firearm to a cartridge casing. To demonstrate the versatility of MASI’s 3D measurement capabilities, Fig. 6e shows objects with varying feature heights and dimensions, including a LEGO brick with raised lettering, a coin with fine relief details, and a battery with subtle topography. The ability to generate 3D measurements and synthetic viewpoints through purely computational means represents an important advantage over conventional lens-based 3D imaging approaches.

a MASI recovered wavefield of a bullet cartridge, with zoomed region showing detailed wavefield information. The brightness of the recovered wavefields indicates amplitude (A), and hue indicates phase (θ), as defined by the color wheel. b All-in-focus image with depth color-coding from 0.4 mm (blue) to 4.7 mm (red), providing comprehensive visualization of the cartridge’s 3D structure. c Synthetic perspective views generated by computationally filtering the wavefield in reciprocal space, where different angular components of light are spatially separated. The color indicates the depth map as in (b). d MASI recovered 3D map of the bullet cartridge, clearly revealing the firing pin impression and surface details for ballistic forensics. The color scale represents height from 0 to 100 µm. e Demonstration of MASI’s 3D measurement capabilities across objects with varying features. Left: LEGO brick with color scale indicating height from 0 to 120 µm; center: a coin with color scale indicating height from 0 to 100 µm; right: a battery with color scale indicating height from 0 to 30 µm. In each case, the 3D topography is reconstructed without mechanical scanning, highlighting MASI’s potential for non-destructive testing and precision metrology. Supplementary Movies 3-4 visualize the 3D focusing process. Supplementary Movie 5 visualizes the different perspective views of the 3D object generated post-measurement.

Discussion

We have introduced MASI, a lensless and scalable imaging architecture that transforms optical synthetic aperture imaging by computationally addressing the longstanding synchronization challenge. Unlike traditional systems that rely on precise interferometric setups, MASI employs distributed arrays of independently functioning coded sensors whose complex wavefields are computationally synchronized and fused entirely in real space. This multiscale approach allows MASI to surpass the diffraction limit of individual sensors without the stringent physical synchronization and overlapping measurement constraints that have historically limited practical deployment.

A key concept enabling MASI’s capabilities is its computational phase synchronization strategy, which fundamentally changes how aperture synthesis can be implemented. While conventional synthetic aperture systems establish phase coherence through overlapping measurement regions10,37 or interferometry50, MASI optimizes only a single global phase offset per sensor. This design choice is critical: because each sensor’s ptychographic reconstruction recovers hundreds of megapixels of complex data, pixel-level phase optimization would create an intractably large parameter space riddled with local minima. By restricting the degrees of freedom to one parameter per sensor, MASI achieves a computationally efficient synchronization process that can readily scale to much larger arrays. Maximizing the integrated energy of the reconstructed wavefield ensures constructive interference among independently acquired wavefields, analogous to wavefront shaping techniques in adaptive optics but implemented entirely in software. Consequently, MASI eliminates the need for reference waves or overlapping measurement areas, significantly simplifying implementation and enabling flexible, distributed sensor placement, capabilities previously unattainable without complex interferometric configurations.

MASI’s scalability shares conceptual similarities with the Event Horizon Telescope (EHT), which synthesizes a large-scale aperture from separated radio receivers40. While the EHT maintains phase coherence through atomic clocks and precise timing synchronization, MASI replaces this hardware complexity with computational phase synchronization. In MASI, each sensor operates as an independent unit that requires no overlap or shared measurement region with neighboring sensors. This architectural independence enables flexible sensor placement at various separations and makes MASI particularly attractive for long-baseline optical imaging applications, where maintaining interferometric coherence would be prohibitively challenging.

Like the sparse apertures employed in the EHT, MASI faces the challenge of incomplete frequency coverage: the physical gaps between sensors leave certain spatial frequencies unrecorded, analogous to the missing baselines in interferometric telescope arrays. Drawing inspiration from black hole imaging with the EHT, MASI could potentially incorporate object-plane priors or constraints40, regularization techniques40, and neural field representations35 to partially compensate for missing frequency information while preserving high-fidelity data from the measured regions.

Beyond addressing frequency gaps, MASI demonstrates inherent robustness to noise through its measurement strategy. The multiple sub-pixel-shifted measurements acquired by each sensor provide overdetermined constraints that help suppress noise during iterative phase retrieval37. Our current implementation employs standard ptychographic reconstruction algorithms41 without explicit regularization. For low-light environments, future implementations could incorporate feature-domain optimization51,52 and advanced regularization strategies, such as total variation constraints that preserve edge features or sparsity priors in appropriate transform domains53,54. These enhancements would suppress noise and help compensate for the missing frequency content discussed above.

The real-space coherent fusion approach of MASI also differs from traditional reciprocal-space aperture synthesis methods4,10. By propagating the recovered wavefields back to the object plane and coherently fusing them, MASI naturally achieves two advantages. First, it eliminates the need for complicated reciprocal-space alignment or calibration, simplifying the overall imaging workflow. Second, this computational approach intrinsically encodes three-dimensional depth information, allowing MASI to achieve lensless 3D imaging and synthetic viewpoint generation without axial scanning.

MASI’s scalability depends primarily on the imaging configuration. For far-field applications, the array size has minimal physical constraints, potentially enabling long-baseline configurations with widely separated sensors. For near-field imaging, however, practical limits arise from the angular response of image sensor pixels. Standard image sensors maintain high sensitivity (>0.9 relative response) for incident angles below 25°, but their efficiency drops significantly beyond 45 degrees55. Taking 60° as a practical limit for light reaching the array edge, a lateral distance \(d/2\) from the array center, the minimum working distance \({wd}\) scales as \({wd}=d/(2\cdot \tan \left(60^\circ \right))\approx 0.3d\), where \(d\) is the array diameter. For example, a 10 cm array would require a minimum working distance of ~3 cm to maintain adequate signal at peripheral sensors. To mitigate this limitation, peripheral sensors can be tilted toward the object, similar to how LED arrays are tilted in Fourier ptychography to improve light delivery efficiency56. By redirecting each sensor’s angular acceptance cone toward the object plane, near-normal incidence can be maintained even for sensors at extreme array positions. Additionally, the vignetting caused by the angular response drop-off is predictable and can be computationally compensated during reconstruction: because pixel angular response characteristics can be well characterized55, the signal attenuation at each sensor position can be modeled and corrected based on the known incident angles.
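The working-distance estimate can be checked numerically. This short sketch assumes, as in the text, that the limiting ray runs from an on-axis object point to a sensor at the array edge, a lateral distance d/2 from the center; `min_working_distance` is a hypothetical helper name.

```python
import math

def min_working_distance(array_diameter, max_angle_deg=60.0):
    """Smallest object-to-array distance at which light from an on-axis point
    reaches the array edge (radius d/2) at no more than max_angle_deg."""
    # tan(angle) = (d / 2) / wd  =>  wd = d / (2 * tan(angle))
    return array_diameter / (2.0 * math.tan(math.radians(max_angle_deg)))

wd = min_working_distance(10.0)   # array diameter in cm
print(f"{wd:.2f} cm")             # 2.89 cm, i.e. ~0.29 d, quoted as ~3 cm in the text
```

Using the more conservative 45° threshold instead gives wd = d/2, i.e. 5 cm for the same 10 cm array.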

MASI’s demonstrated capability to resolve sub-micron features at ultralong working distances (~2 cm) can advance current optical imaging capabilities. Traditional optical microscopes typically require specialized objectives with short working distances (less than a millimeter) to achieve comparable resolution. In contrast, MASI’s lensless architecture maintains high spatial resolution at centimeter-scale working distances, expanding its practical utility in fields such as industrial inspection, biological imaging, and forensic analysis, where sample accessibility and large-area imaging are critical.

Another capability of MASI is computational field expansion, as demonstrated with fingerprint and brain section imaging. By padding the reconstructed wavefields and back-propagating them computationally, MASI transforms limited sensor areas into much larger virtual imaging systems. This approach leverages the intrinsic reciprocal relationship between angular sensing and spatial reconstruction: angular information captured at the sensor plane naturally encodes spatial features beyond the sensor’s immediate field of view. This computational field expansion capability also offers intriguing applications in data security and steganography. Because information outside the physical sensor area becomes visible only through computational reconstruction, MASI creates a natural physical-layer encryption mechanism. Hidden information embedded outside the direct sensor footprint remains entirely undetectable in the raw captured data, effectively concealing its existence. Only authorized parties possessing the correct computational parameters, such as coded surface patterns, padding extent, and propagation distances, can reveal this concealed information. This inherent security feature could form the basis of novel verification methods for secure documents, anti-counterfeiting measures, and covert communication channels.

In MASI, coherent illumination is essential for producing high-contrast diffraction patterns that enable robust ptychographic reconstruction. In the current implementation, a laser diode generates the coherent illumination. For narrowband illumination with partial coherence (such as LEDs), reconstruction remains feasible: LEDs have been successfully used as sources for both Fourier ptychography10 and spatial-domain lensless ptychography57. These implementations typically employ mixed-state ptychographic algorithms58 to account for partial coherence effects. For broadband illumination, direct imaging would suffer from wavelength-dependent propagation and chromatic blur, but narrowband filters can be incorporated in the detection path to select specific wavelength ranges.

Future developments of MASI include extending the operational wavelength into infrared, terahertz, or X-ray regimes, which could open entirely new domains of remote sensing, security scanning, and advanced materials characterization. Incorporating polarization detection offers another dimension that would enable polarimetric imaging of birefringent or dichroic samples59,60, adding diagnostic power for biological tissues and advanced composites. MASI’s architecture also extends to endoscopic applications, where multiple thin fiber bundles could function as distributed coded sensors61,62. By computationally synthesizing wavefields from independently positioned fiber bundle tips, MASI could achieve super-resolution imaging through narrow channels. Scalability remains one of MASI’s most compelling advantages: its computational phase synchronization readily extends to larger sensor arrays with only a linear increase in complexity, in contrast to the burdens faced by conventional interferometric systems. This feature could facilitate the construction of long-baseline optical arrays for remote sensing or aerial surveillance. Furthermore, the ability to place sensors at flexible separations and angles creates new possibilities for multi-perspective imaging, high-throughput industrial inspections, and planetary monitoring systems. The computational synchronization principle developed in MASI could also transform existing synthetic aperture radar and sonar systems, which currently rely on precise coherence maintenance over long baselines. By adopting MASI’s post-measurement phase synchronization approach, these systems could potentially operate with relaxed hardware synchronization requirements, enabling more flexible deployment scenarios while maintaining or even enhancing imaging performance through computational means.

Methods

MASI prototype

The MASI prototype integrates an array of image sensors (AR1335, ON Semiconductor), each with 13 megapixels and a 1.1 µm pixel size. To implement ptychography for wavefield recovery, we replace the coverglass of each image sensor with a coded surface that provides both intensity and phase modulation. These coded surfaces function as deterministic probe patterns analogous to those used in conventional ptychography, enabling complex wavefield recovery from intensity-only measurements17,37. Two compact piezoelectric stages (SLC-1720, SmarAct, measuring 22 × 17 × 8.5 mm) provide controlled lateral movement of the MASI device. The range of movement is about 30 × 30 μm, with randomly varying step sizes of 1–2 μm. During operation, the MASI system captures images at 20 frames per second while the piezo stages move continuously, enabling efficient data acquisition without stop-and-go positioning. Sample illumination is provided by a 10 mW laser diode, with exposure times in the millisecond range to minimize motion blur during continuous acquisition. In the current implementation, we typically acquire 300 frames as a ptychogram dataset in ~15 s. The compact form factor and straightforward optical design of MASI facilitate both laboratory measurements and field deployment.

Calibration experiment and real-space alignment

Coded sensor calibration is important for MASI’s coherent wavefield synthesis. Our calibration procedure consists of two major steps: coded surface characterization and sensor position determination. First, we characterized each sensor’s coded surface pattern using blood smear samples as test objects. By acquiring and reconstructing diffraction patterns from these test objects under controlled conditions, we precisely mapped the amplitude and phase modulation characteristics of each sensor’s coded surface42. With the coded surfaces fully characterized, we developed a robust one-time calibration procedure to determine the three-dimensional positioning parameters \(\left({x}_{s}{,y}_{s}{,h}_{s}\right)\) for each sensor in the array. This calibration employs point source objects that generate well-defined spherical wavefields. The calibration process follows three progressive refinement steps: 1) Initial axial distance estimation: we propagate each sensor’s recovered wavefield through multiple planes and identify the position of maximum intensity concentration, providing a first approximation despite potential truncation artifacts. 2) Lateral position determination: we cross-correlate the back-propagated point-source reconstructions between each peripheral sensor and a designated reference sensor (typically the central sensor), establishing relative lateral displacements with sub-pixel precision63. 3) Parameter refinement through phase optimization: leveraging the known properties of spherical wavefronts, we construct a phase-based optimization function that minimizes the difference between each recovered wavefield’s unwrapped phase and the theoretical spherical wavefront model. This optimization significantly improves calibration accuracy by incorporating phase information and accommodating the three-dimensional nature of the sensor array. Detailed calibration procedures are provided in Supplementary Note 2.
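Step 2 of the calibration can be illustrated with FFT-based cross-correlation. The source cites a sub-pixel registration method63 without giving details; this sketch substitutes a simple three-point parabolic fit around the correlation peak, and the function name `estimate_shift` and its sign convention are assumptions.

```python
import numpy as np

def estimate_shift(ref, mov):
    """Estimate (dy, dx) such that mov ≈ np.roll(ref, (dy, dx), axis=(0, 1))."""
    # Circular cross-correlation via FFT; its peak sits at the relative shift
    xc = np.abs(np.fft.ifft2(np.conj(np.fft.fft2(ref)) * np.fft.fft2(mov)))
    ny, nx = xc.shape
    iy, ix = np.unravel_index(np.argmax(xc), xc.shape)

    def parabolic(cm, c0, cp):
        # Vertex offset of the parabola through samples at -1, 0, +1
        denom = cm - 2 * c0 + cp
        return 0.0 if denom == 0 else 0.5 * (cm - cp) / denom

    # Sub-pixel refinement along each axis (neighbors taken with wrap-around)
    dy = iy + parabolic(xc[(iy - 1) % ny, ix], xc[iy, ix], xc[(iy + 1) % ny, ix])
    dx = ix + parabolic(xc[iy, (ix - 1) % nx], xc[iy, ix], xc[iy, (ix + 1) % nx])
    # Map shifts beyond half the grid to negative values
    if dy > ny / 2:
        dy -= ny
    if dx > nx / 2:
        dx -= nx
    return dy, dx
```

In the calibration, `ref` and `mov` would be the back-propagated point-source reconstructions of the reference sensor and a peripheral sensor sampled on a common grid.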

Computational phase synchronization

A key innovation of MASI lies in its ability to synchronize the phases of independently recovered wavefields without requiring overlapping measurement regions. Conventional synthetic aperture systems typically depend on shared fields of view or interferometric measurements to establish phase relationships, posing major scalability challenges. In MASI, each sensor recovers only a local wavefield with an arbitrary global phase offset. We designate one sensor (e.g., the central sensor) as a reference and assign it zero phase offset. For the remaining sensors, we iteratively determine optimal offsets by maximizing the total intensity of the fused wavefield at the object plane:

$$\left\{{\varphi }_{s}\right\}={{{{\rm{argmax}}}}}_{{\varphi }_{s}}{\sum }_{x,y}{\left|{O}_{{recover}}\left(x,y\right)\right|}^{2}$$

(5)

This procedure is implemented by adjusting each sensor’s phase offset sequentially while keeping the others fixed, and converges within a few iterations. By aligning wavefields to maximize constructive interference, MASI ensures robust phase synchronization purely through computation, circumventing the need for carefully matched optical paths. Supplementary Note 3 and Supplementary Movie 1 detail the algorithm and show its convergence in practical experiments.
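The sequential update can be sketched as coordinate ascent on Eq. (5). Because each step optimizes a single scalar phase while the others are held fixed, the one-dimensional maximum has a closed form, which this sketch uses in place of a numerical search; the source does not say how each one-dimensional update is performed. The function name `synchronize_phases`, the assumption that all wavefields are already aligned, padded, and propagated onto a common object-plane grid, and the default iteration count are illustrative.

```python
import numpy as np

def synchronize_phases(fields, ref=0, n_iter=20):
    """Coordinate-ascent estimate of one global phase offset per sensor.

    fields: list of complex arrays, each sensor's wavefield on a common
    object-plane grid (assumed already aligned, padded and propagated).
    Maximizes sum |sum_s exp(1j*phi_s) * fields[s]|^2 with phi_ref fixed at 0.
    """
    phis = np.zeros(len(fields))
    for _ in range(n_iter):
        for s in range(len(fields)):
            if s == ref:
                continue
            # Fused field from all other sensors at their current offsets
            rest = sum(np.exp(1j * phis[t]) * fields[t]
                       for t in range(len(fields)) if t != s)
            # sum |rest + e^{i phi} f_s|^2 peaks at phi = arg(sum conj(f_s) * rest)
            phis[s] = np.angle(np.vdot(fields[s], rest))
    fused = sum(np.exp(1j * p) * f for p, f in zip(phis, fields))
    return phis, fused
```

This sketch re-sums the other fields at every step for clarity; keeping a running total of the fused field would make each sweep linear in the number of sensors.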

Tilted-plane wavefield propagation

In reflection-mode imaging, a tilted sensor plane introduces additional complexity because the measured wavefield no longer aligns with the sample surface. MASI addresses this by applying a tilt-plane wavefield correction in the Fourier domain, which transforms the measured tilted wavefield into an equivalent horizontal-plane representation. Suppose the spectrum of the recovered wavefield on the tilted sensor plane is \({\hat{W}}_{{tilt}}\left({k}_{x}^{{\prime} },{k}_{y}\right)\), where \({k}_{x}^{{\prime} }\) is the frequency variable corresponding to the sensor’s tilted \(x\) axis. We can convert \({\hat{W}}_{{tilt}}\left({k}_{x}^{{\prime} },{k}_{y}\right)\) to the horizontal-plane spectrum \(\hat{W}\left({k}_{x},{k}_{y}\right)\) via

$$\hat{W}\left({k}_{x},{k}_{y}\right)={\hat{W}}_{{tilt}}\left({k}_{x}^{{\prime} }\cos \theta -{k}_{z}^{{\prime} }\sin \theta,{k}_{y}\right)$$

(6)

where \({k}_{z}^{{\prime} }={({k}^{2}-{k}_{x}^{{\prime} 2}-{k}_{y}^{2})}^{1/2}\) enforces the free-space dispersion relationship in the tilted coordinate system. Because this transformation changes the coordinate scaling, an amplitude correction factor (the Jacobian of the mapping from \({k}_{x}^{{\prime} }\) to \({k}_{x}\)) is required to compensate for the altered sampling density:

$$J\left({k}_{x}^{{\prime} },{k}_{y}\right)=\cos \theta+\frac{{k}_{x}^{{\prime} }}{{k}_{z}^{{\prime} }}\sin \theta$$

(7)

An inverse Fourier transform then yields the horizontal plane wavefield64:

$$W\left(x,y\right)={{{{\mathscr{F}}}}}^{-1}\left\{\hat{W}\left({k}_{x},{k}_{y}\right)\cdot \left|J\left({k}_{x}^{{\prime} },{k}_{y}\right)\right|\right\}$$

(8)

This approach systematically compensates for the sensor’s geometric tilt, reconstructing the reflection-mode wavefield as if captured by a non-tilted, horizontal sensor array. By incorporating these spectral transformations, MASI retains its lensless simplicity while supporting flexible sensor orientations in a wide range of reflection-based imaging scenarios. Supplementary Fig. S9 shows the tilted propagation method for the resolution target captured in reflection mode.
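Equations (6)-(8) can be sketched as a spectral remapping for a sensor tilted about its y axis. This is a minimal sketch under stated assumptions: each tilted-plane spectral sample at k_x' is relocated to k_x = k_x' cos θ - k_z' sin θ, weighted by |J|, and resampled onto a uniform k_x grid by linear interpolation. The resampling scheme, the handling of evanescent and non-monotonic components, and the function name `tilt_to_horizontal` are illustrative choices, not the authors' implementation.

```python
import numpy as np

def tilt_to_horizontal(w_tilt, theta, wavelength, dx):
    """Map a wavefield on a plane tilted by theta (about y) to a horizontal plane."""
    ny, nx = w_tilt.shape
    k = 2 * np.pi / wavelength
    # Centered (ascending) angular-frequency grids, assuming square pixels of pitch dx
    kx_grid = 2 * np.pi * np.fft.fftshift(np.fft.fftfreq(nx, d=dx))
    ky_grid = 2 * np.pi * np.fft.fftshift(np.fft.fftfreq(ny, d=dx))
    spec_tilt = np.fft.fftshift(np.fft.fft2(w_tilt))
    spec_out = np.zeros_like(spec_tilt)
    c, s = np.cos(theta), np.sin(theta)
    for row, ky in enumerate(ky_grid):
        kz2 = k**2 - kx_grid**2 - ky**2
        prop = kz2 > 0                          # drop evanescent components
        kz = np.sqrt(np.where(prop, kz2, 1.0))  # dummy value avoids division by zero
        jac = c + kx_grid / kz * s              # Eq. (7)
        valid = prop & (jac > 0)                # keep the monotonic part of the mapping
        if valid.sum() < 2:
            continue
        kx_mapped = kx_grid[valid] * c - kz[valid] * s        # Eq. (6) coordinate map
        vals = spec_tilt[row, valid] * np.abs(jac[valid])     # Eq. (8) amplitude factor
        # Resample from the non-uniform mapped frequencies onto the uniform k_x grid
        spec_out[row] = (np.interp(kx_grid, kx_mapped, vals.real, left=0.0, right=0.0)
                         + 1j * np.interp(kx_grid, kx_mapped, vals.imag, left=0.0, right=0.0))
    return np.fft.ifft2(np.fft.ifftshift(spec_out))
```

For θ = 0 the mapping reduces to the identity, which is a quick sanity check on any implementation.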

3D measurement

To generate accurate 3D maps from MASI measurements, we utilize the complex wavefield’s ability to be digitally refocused to different depths. After the wavefield is recovered and coherently fused, we numerically propagate it across a range of axial positions to form a stack of amplitude images. At each lateral position in the field of view, we identify the optimal focus plane by maximizing an amplitude gradient metric49, which peaks when local features are sharply focused. By recording the depth at which each pixel’s focus metric is maximized, we obtain a continuous 3D surface profile of the sample. Using a standard axial resolution characterization methodology65, we quantified MASI’s depth discrimination capability to be approximately 6.5 μm, as demonstrated in Supplementary Fig. S13. This axial resolution enables precise 3D topographic mapping across various sample types and geometries. Additional 3D reconstructions showcasing this capability for different objects are presented in Supplementary Figs. S14-S16, with dynamic visualizations of the 3D focusing process available in Supplementary Movies 3-4.
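The per-pixel focus search can be sketched as follows, given a stack of amplitude images at known propagation distances. The source cites an amplitude gradient metric49 without giving its exact form, so the squared gradient magnitude averaged over a small (2r+1) × (2r+1) window used here, the window radius `r`, and the helper names `box_mean` and `depth_from_focus` are assumptions.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, using edge padding and 2D cumulative sums."""
    p = np.pad(img, r, mode="edge")
    c = np.pad(np.cumsum(np.cumsum(p, axis=0), axis=1), ((1, 0), (1, 0)))
    w = 2 * r + 1
    s = c[w:, w:] - c[:-w, w:] - c[w:, :-w] + c[:-w, :-w]
    return s / w**2

def depth_from_focus(amp_stack, z_list, r=2):
    """Depth map: for each pixel, the z whose local gradient energy is largest."""
    scores = []
    for amp in amp_stack:
        gy, gx = np.gradient(amp)               # derivatives along rows and columns
        scores.append(box_mean(gx**2 + gy**2, r))
    best = np.argmax(np.stack(scores), axis=0)  # index of best-focus plane per pixel
    return np.asarray(z_list)[best]
```

A larger window suppresses noise in the depth map at the cost of lateral detail in the recovered height profile.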

MASI’s performance characteristics offer distinct advantages over conventional 3D profilometry methods. While standard techniques such as interferometry and confocal microscopy can achieve high axial resolution, they typically require short working distances and have a restricted field of view. MASI provides a compelling alternative by achieving micron-level axial resolution with centimeter-scale working distances and fields of view. Furthermore, the ability to computationally generate multiple viewing perspectives post-measurement, as shown in Fig. 6 and Supplementary Fig. S17, distinguishes MASI from conventional profilometry systems that typically provide only top-down height maps. This combination of long working distance, large field of view, and computational flexibility positions MASI as a versatile alternative for 3D metrology applications.

Post-measurement perspective synthesis

After reconstructing the high-resolution object wavefield \({O}_{{recover}}(x,y)\) by MASI, we synthesize different perspectives through numerical processing in the far-field domain. Specifically, the reconstructed complex wavefield is directly propagated to the far-field region by performing a Fourier transform operation. A rectangular pupil function \({pupil}({k}_{x},{k}_{y})\) is then applied at different lateral positions to selectively sample specific angular components, effectively shifting the viewing angle. Inverse Fourier transforming the pupil-filtered wavefield yields \({O}_{{view}}\left(x,y\right)\), corresponding to each chosen viewpoint:

$${O}_{{view}}\left(x,y\right)={{{{\mathscr{F}}}}}^{-1}\left\{{{{\mathscr{F}}}}\left[{O}_{{recover}}\left(x,y\right)\right]\cdot {pupil}\left({k}_{x},{k}_{y}\right)\right\}$$

(9)

By simply adjusting the pupil’s position, one can generate diverse perspectives without additional data acquisition or hardware modifications. Supplementary Fig. S17 and Supplementary Movie 5 illustrate these synthetic perspectives post-measurement.
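Equation (9) can be sketched in a few lines. The pupil here is a square window whose center offset (in frequency-grid pixels from zero frequency) and half-width are hypothetical parameters; the source specifies a rectangular pupil but not its size or the step between viewpoints.

```python
import numpy as np

def synthesize_view(o_recover, center, half_width):
    """Eq. (9): filter the spectrum of the recovered wavefield with a square pupil
    centred at `center` = (cy, cx), given as offsets from zero frequency, and
    inverse transform to obtain the image for that viewpoint."""
    ny, nx = o_recover.shape
    spec = np.fft.fftshift(np.fft.fft2(o_recover))   # zero frequency at (ny//2, nx//2)
    yy, xx = np.mgrid[0:ny, 0:nx]
    cy, cx = ny // 2 + center[0], nx // 2 + center[1]
    pupil = (np.abs(yy - cy) <= half_width) & (np.abs(xx - cx) <= half_width)
    return np.fft.ifft2(np.fft.ifftshift(spec * pupil))
```

Sweeping `center` across the frequency plane produces the sequence of views; a wider window keeps more spatial resolution per view but leaves less room to shift it, so the achievable range of viewing angles shrinks.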

Data availability

The data supporting the findings of this study are available from the corresponding author upon request.

Code availability

The computational phase compensation algorithm is described in detail in Methods and Supplementary Information. The source codes are available from the corresponding author upon request.

References

Moreira, A. et al. A tutorial on synthetic aperture radar. IEEE Geosci. Remote Sens. Mag. 1, 6–43 (2013).

Thurman, S. T. & Bratcher, A. Multiplexed synthetic-aperture digital holography. Appl. Opt. 54, 559–568 (2015).

Huang, Z. & Cao, L. Quantitative phase imaging based on holography: trends and new perspectives. Light Sci. Appl. 13, 145 (2024).

Alexandrov, S. A., Hillman, T. R., Gutzler, T. & Sampson, D. D. Synthetic aperture Fourier holographic optical microscopy. Phys. Rev. Lett. 97, 168102 (2006).

Hillman, T. R., Gutzler, T., Alexandrov, S. A. & Sampson, D. D. High-resolution, wide-field object reconstruction with synthetic aperture Fourier holographic optical microscopy. Opt. Express 17, 7873–7892 (2009).

Kim, M. et al. High-speed synthetic aperture microscopy for live cell imaging. Opt. Lett. 36, 148–150 (2011).

Di, J. et al. High resolution digital holographic microscopy with a wide field of view based on a synthetic aperture technique and use of linear CCD scanning. Appl. Opt. 47, 5654–5659 (2008).

Zheng, C. et al. High spatial and temporal resolution synthetic aperture phase microscopy. Adv. Photonics 2, 065002 (2020).

Lee, S. et al. High-resolution 3-D refractive index tomography and 2-D synthetic aperture imaging of live phytoplankton. J. Opt. Soc. Korea 18, 691–697 (2014).

Zheng, G., Horstmeyer, R. & Yang, C. Wide-field, high-resolution Fourier ptychographic microscopy. Nat. Photonics 7, 739 (2013).

Dong, S. et al. Aperture-scanning Fourier ptychography for 3D refocusing and super-resolution macroscopic imaging. Opt. Express 22, 13586–13599 (2014).

Holloway, J., Wu, Y., Sharma, M. K., Cossairt, O. & Veeraraghavan, A. SAVI: synthetic apertures for long-range, subdiffraction-limited visible imaging using Fourier ptychography. Sci. Adv. 3, e1602564 (2017).

Wang, C., Hu, M., Takashima, Y., Schulz, T. J. & Brady, D. J. Snapshot ptychography on array cameras. Opt. Express 30, 2585–2598 (2022).

Zhang, Q. et al. 200 mm optical synthetic aperture imaging over 120 meters distance via macroscopic Fourier ptychography. Opt. Express 32, 44252–44264 (2024).

Zuo, C., Sun, J., Li, J., Asundi, A. & Chen, Q. Wide-field high-resolution 3D microscopy with Fourier ptychographic diffraction tomography. Opt. Lasers Eng. 128, 106003 (2020).

Horstmeyer, R., Chung, J., Ou, X., Zheng, G. & Yang, C. Diffraction tomography with Fourier ptychography. Optica 3, 827–835 (2016).

Wang, T. et al. Optical ptychography for biomedical imaging: recent progress and future directions. Biomed. Opt. Express 14, 489–532 (2023).

Wang, R. et al. Spatially-coded Fourier ptychography: flexible and detachable coded thin films for quantitative phase imaging with uniform phase transfer characteristics. Adv. Opt. Mater. 12, 2303028 (2024).

Primot, J. Theoretical description of Shack–Hartmann wave-front sensor. Opt. Commun. 222, 81–92 (2003).

Go, G.-H. et al. Meta Shack–Hartmann wavefront sensor with large sampling density and large angular field of view: phase imaging of complex objects. Light Sci. Appl. 13, 187 (2024).

Soldevila, F., Durán, V., Clemente, P., Lancis, J. & Tajahuerce, E. Phase imaging by spatial wavefront sampling. Optica 5, 164–174 (2018).

Guo, Y. et al. Direct observation of atmospheric turbulence with a video-rate wide-field wavefront sensor. Nat. Photonics 18, 935–943 (2024).

Wu, Y., Sharma, M. K. & Veeraraghavan, A. WISH: wavefront imaging sensor with high resolution. Light Sci. Appl. 8, 44 (2019).

Wang, C., Dun, X., Fu, Q. & Heidrich, W. Ultra-high resolution coded wavefront sensor. Opt. Express 25, 13736–13746 (2017).

Wang, B.-Y., Han, L., Yang, Y., Yue, Q.-Y. & Guo, C.-S. Wavefront sensing based on a spatial light modulator and incremental binary random sampling. Opt. Lett. 42, 603–606 (2017).

Brady, D. J. et al. Multiscale gigapixel photography. Nature 486, 386–389 (2012).

Brady, D. J. & Hagen, N. Multiscale lens design. Opt. Express 17, 10659–10674 (2009).

Tremblay, E. J., Marks, D. L., Brady, D. J. & Ford, J. E. Design and scaling of monocentric multiscale imagers. Appl. Opt. 51, 4691–4702 (2012).

Fan, J. et al. Video-rate imaging of biological dynamics at centimetre scale and micrometre resolution. Nat. Photonics 13, 809–816 (2019).

Yu, Z. et al. Wavefront shaping: a versatile tool to conquer multiple scattering in multidisciplinary fields. Innovation 3, 100292 (2022).

Baek, Y., de Aguiar, H. B. & Gigan, S. Phase conjugation with spatially incoherent light in complex media. Nat. Photonics 17, 1114–1119 (2023).

Yaqoob, Z., Psaltis, D., Feld, M. S. & Yang, C. Optical phase conjugation for turbidity suppression in biological samples. Nat. Photonics 2, 110–115 (2008).

Vellekoop, I. M. & Mosk, A. P. Focusing coherent light through opaque strongly scattering media. Opt. Lett. 32, 2309–2311 (2007).

Haim, O., Boger-Lombard, J. & Katz, O. Image-guided computational holographic wavefront shaping. Nat. Photonics 19, 44–53 (2025).

Feng, B. Y. et al. NeuWS: neural wavefront shaping for guidestar-free imaging through static and dynamic scattering media. Sci. Adv. 9, eadg4671 (2023).

Zuo, C. et al. Transport of intensity equation: a tutorial. Opt. Lasers Eng. 135, 106187 (2020).

Rodenburg, J. & Maiden, A. Ptychography. In Springer Handbook of Microscopy (Springer, 2019).

Batey, D. et al. Reciprocal-space up-sampling from real-space oversampling in x-ray ptychography. Phys. Rev. A 89, 043812 (2014).

Zhang, H. et al. Field-portable quantitative lensless microscopy based on translated speckle illumination and sub-sampled ptychographic phase retrieval. Opt. Lett. 44, 1976–1979 (2019).

Akiyama, K. et al. First M87 Event Horizon Telescope results. IV. Imaging the central supermassive black hole. Astrophys. J. Lett. 875, L4 (2019).

Maiden, A., Johnson, D. & Li, P. Further improvements to the ptychographical iterative engine. Optica 4, 736–745 (2017).

Jiang, S. et al. Spatial- and Fourier-domain ptychography for high-throughput bio-imaging. Nat. Protoc. 18, 2051–2083 (2023).

Park, J., Brady, D. J., Zheng, G., Tian, L. & Gao, L. Review of bio-optical imaging systems with a high space-bandwidth product. Adv. Photonics 3, 044001 (2021).

Bian, L. et al. Large-scale scattering-augmented optical encryption. Nat. Commun. 15, 9807 (2024).

Zuo, C. et al. Deep learning in optical metrology: a review. Light Sci. Appl. 11, 1–54 (2022).

Park, J.-H., Hong, K. & Lee, B. Recent progress in three-dimensional information processing based on integral imaging. Appl. Opt. 48, H77–H94 (2009).

Wu, J. et al. An integrated imaging sensor for aberration-corrected 3D photography. Nature 612, 62–71 (2022).

Martínez-Corral, M. & Javidi, B. Fundamentals of 3D imaging and displays: a tutorial on integral imaging, light-field, and plenoptic systems. Adv. Opt. Photonics 10, 512–566 (2018).

Bian, Z. et al. Autofocusing technologies for whole slide imaging and automated microscopy. J. Biophotonics 13, e202000227 (2020).

Rosen, P. A. et al. Synthetic aperture radar interferometry. Proc. IEEE 88, 333–382 (2000).

Zhang, S. et al. FPM-WSI: Fourier ptychographic whole slide imaging via feature-domain backdiffraction. Optica 11, 634–646 (2024).

Zhao, Q. et al. Deep-ultraviolet Fourier ptychography (DUV-FP) for label-free biochemical imaging via feature-domain optimization. APL Photonics 9, 090801 (2024).

Liu, N., Zhao, Q. & Zheng, G. Sparsity-regularized coded ptychography for robust and efficient lensless microscopy on a chip. arXiv preprint arXiv:2309.13611 (2023).

Loetgering, L. et al. PtyLab.m/py/jl: a cross-platform, open-source inverse modeling toolbox for conventional and Fourier ptychography. Opt. Express 31, 13763–13797 (2023).

Jiang, S. et al. Resolution-enhanced parallel coded ptychography for high-throughput optical imaging. ACS Photonics 8, 3261–3271 (2021).

Song, P. et al. Freeform illuminator for computational microscopy. Intell. Comput. 2, 0015 (2023).

Li, P. & Maiden, A. Lensless LED matrix ptychographic microscope: problems and solutions. Appl. Opt. 57, 1800–1806 (2018).

Thibault, P. & Menzel, A. Reconstructing state mixtures from diffraction measurements. Nature 494, 68–71 (2013).

Song, S. et al. Polarization-sensitive intensity diffraction tomography. Light Sci. Appl. 12, 124 (2023).

Yang, L. et al. Lensless polarimetric coded ptychography for high-resolution, high-throughput gigapixel birefringence imaging on a chip. Photonics Res. 11, 2242–2255 (2023).

Song, P. et al. Ptycho-endoscopy on a lensless ultrathin fiber bundle tip. Light Sci. Appl. 13, 168 (2024).

Weinberg, G., Kang, M., Choi, W., Choi, W. & Katz, O. Ptychographic lensless coherent endomicroscopy through a flexible fiber bundle. Opt. Express 32, 20421–20431 (2024).

Guizar-Sicairos, M., Thurman, S. T. & Fienup, J. R. Efficient subpixel image registration algorithms. Opt. Lett. 33, 156–158 (2008).

Jiang, S. et al. High-throughput lensless whole slide imaging via continuous height-varying modulation of a tilted sensor. Opt. Lett. 46, 5212–5215 (2021).

Liao, Y. H. et al. Portable high-resolution automated 3D imaging for footwear and tire impression capture. J. Forensic Sci. 66, 112–128 (2021).

Acknowledgements

This work was partially supported by the National Institutes of Health R01-EB034744 (G. Z.), the UConn SPARK grant (G. Z.), National Science Foundation 2012140 (G. Z.), and Department of Energy SC0025582 (G. Z.). The content of the article does not necessarily reflect the position or policy of the US government, and no official endorsement should be inferred.

Ethics declarations

Competing interests

G. Z. is a named inventor of a related patent application. The other authors declare no competing interests.

Peer review

Peer review information

Nature Communications thanks Gordon Wetzstein and the other anonymous reviewer(s) for their contribution to the peer review of this work. A peer review file is available.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, R., Zhao, Q., Wang, T. et al. Multiscale aperture synthesis imager. Nat Commun 16, 10582 (2025). https://doi.org/10.1038/s41467-025-65661-8


DOI: https://doi.org/10.1038/s41467-025-65661-8