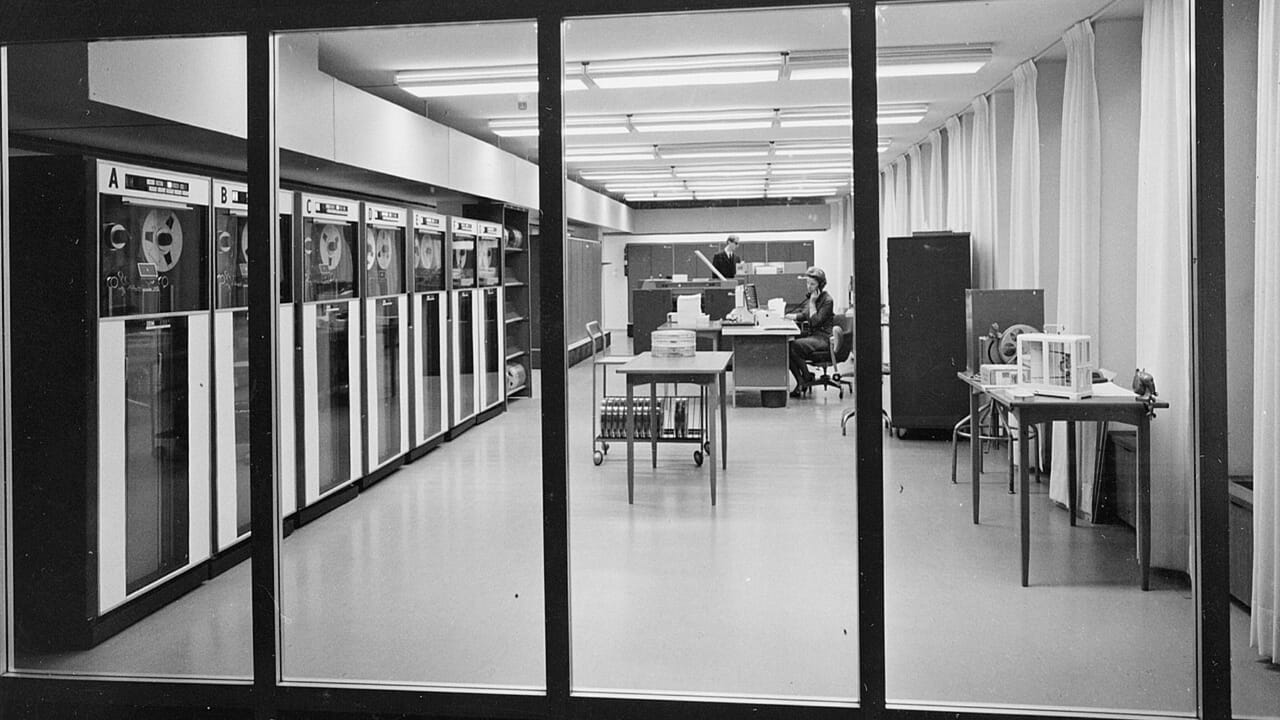

A 1963 photograph of Postbanken's third-floor data center in Stockholm. (Credit: DigitaltMuseum.org)

Many discussions of data centers overlook how the industry has evolved. That’s a shame, because without its history, it’s difficult to understand why data centers operate the way they do today and where they’re headed next.

Let’s look back at the data center industry by tracing key milestones in its development, from its origins in 1946 to the present.

1946: The First “Data Center” Emerges

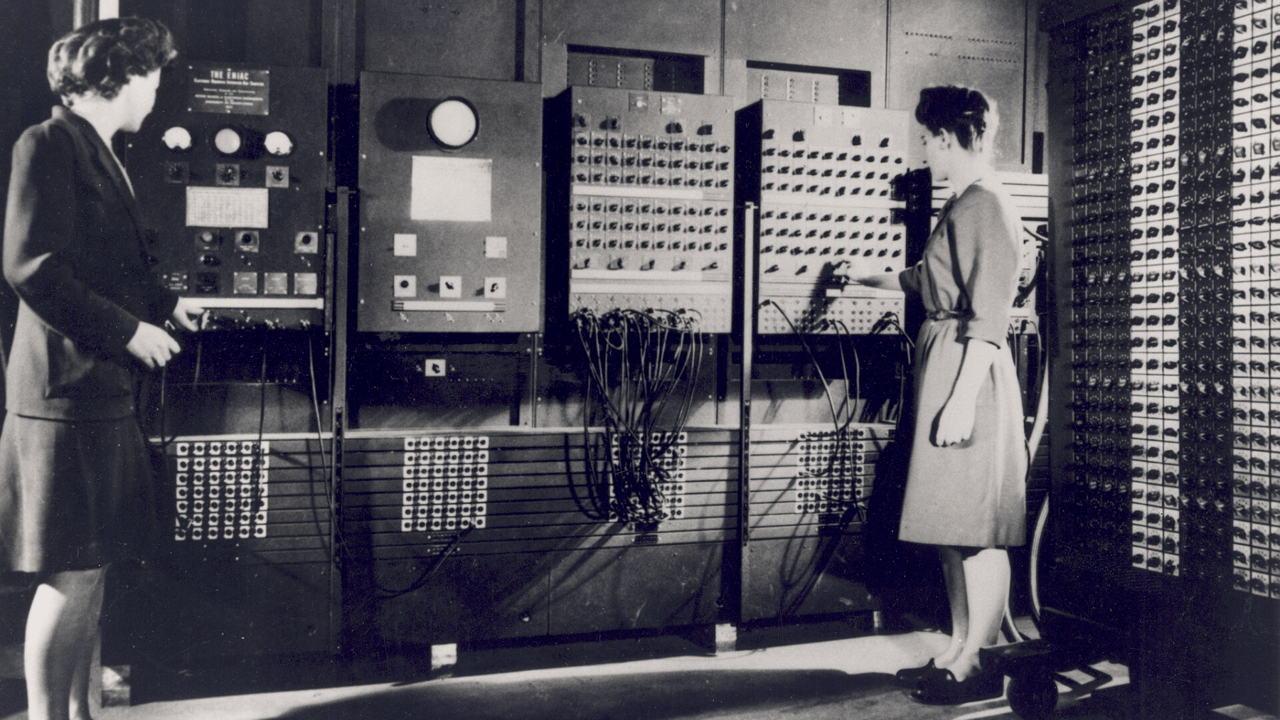

In 1946, ENIAC, widely regarded as the first general-purpose electronic digital computer, entered service. ENIAC was a computer, not a “data center” in the modern sense. However, its roughly 1,800-square-foot footprint and dedicated power and cooling familiarized the world with the notion that running a computer required a substantial, specialized environment. Its forced-air cooling was among the earliest dedicated cooling provisions for large-scale computing.

For decades afterward, until microcomputers matured, most computers were so large and resource-intensive that each one functioned as its own data center.

Two programmers operating ENIAC's main control panel. (Credit: Historic Computer Images)

1964: Mainframes Standardize (IBM System/360)

IBM’s System/360 revolutionized computing by introducing a family of compatible mainframe computers. This milestone marked the beginning of standardized computing platforms, which laid the groundwork for modern enterprise data centers.

Georgetown University Medical Center Professor Robert Ledley with an IBM 360. (Credit: Wikimedia Commons)

1971: Microprocessors Go Mainstream

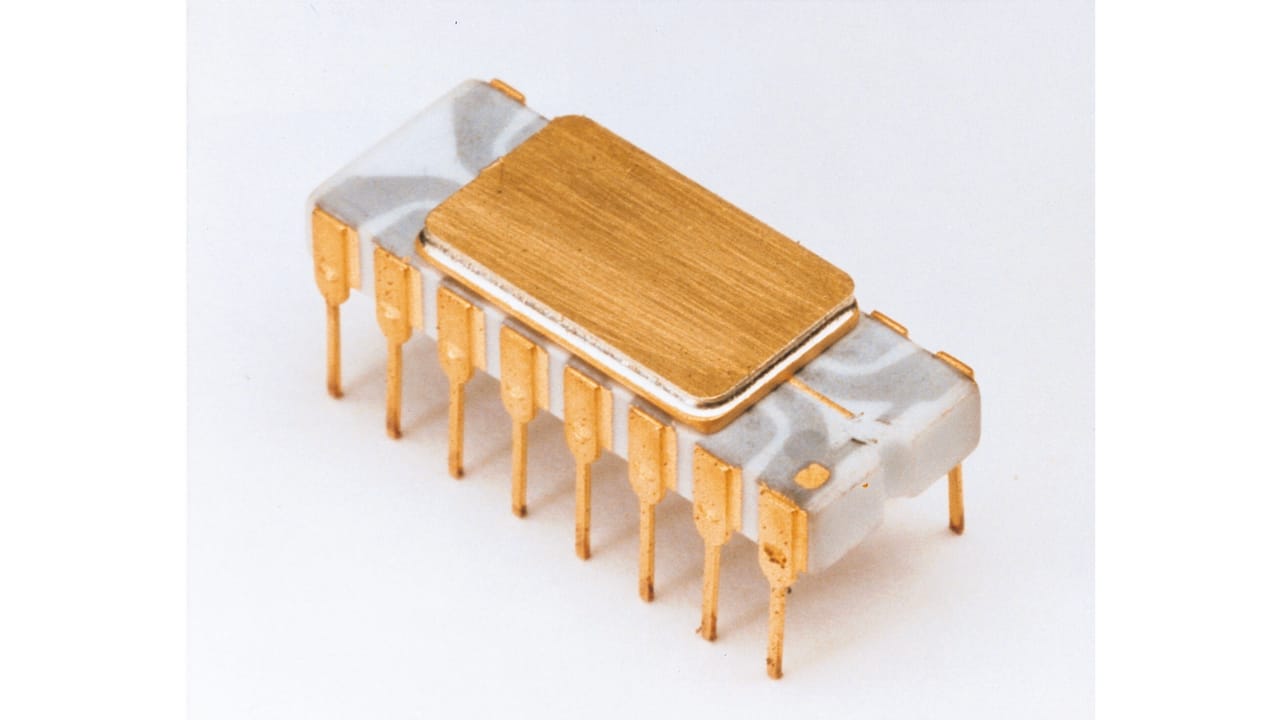

Intel’s 4004, released in 1971, was the first commercial microprocessor. Others followed later in the decade, including the first x86 processor, the Intel 8086, in 1978. The x86 architecture remains widespread in modern data centers.

Microprocessors mattered because they enabled smaller computers. As organizations deployed growing numbers of these mid-sized systems (larger than today’s servers or PCs but far smaller than machines like ENIAC), they needed dedicated spaces to house, power, and cool them. Those rooms became the earliest facilities to resemble modern data centers, shifting the model from single, monolithic systems to fleets of servers.

An Intel 4004 microprocessor. (Credit: Intel)

1981: The Personal Computer Revolution

The release of the IBM PC on August 12, 1981, democratized computing, leading to a surge in demand for networked systems and, eventually, the need for centralized data centers to manage and store data for these distributed devices.

IBM Personal Computer, 1981, with manuals; Museum of Applied Arts, Frankfurt am Main. (Credit: Wikimedia Commons)

1989: The Web Takes Shape

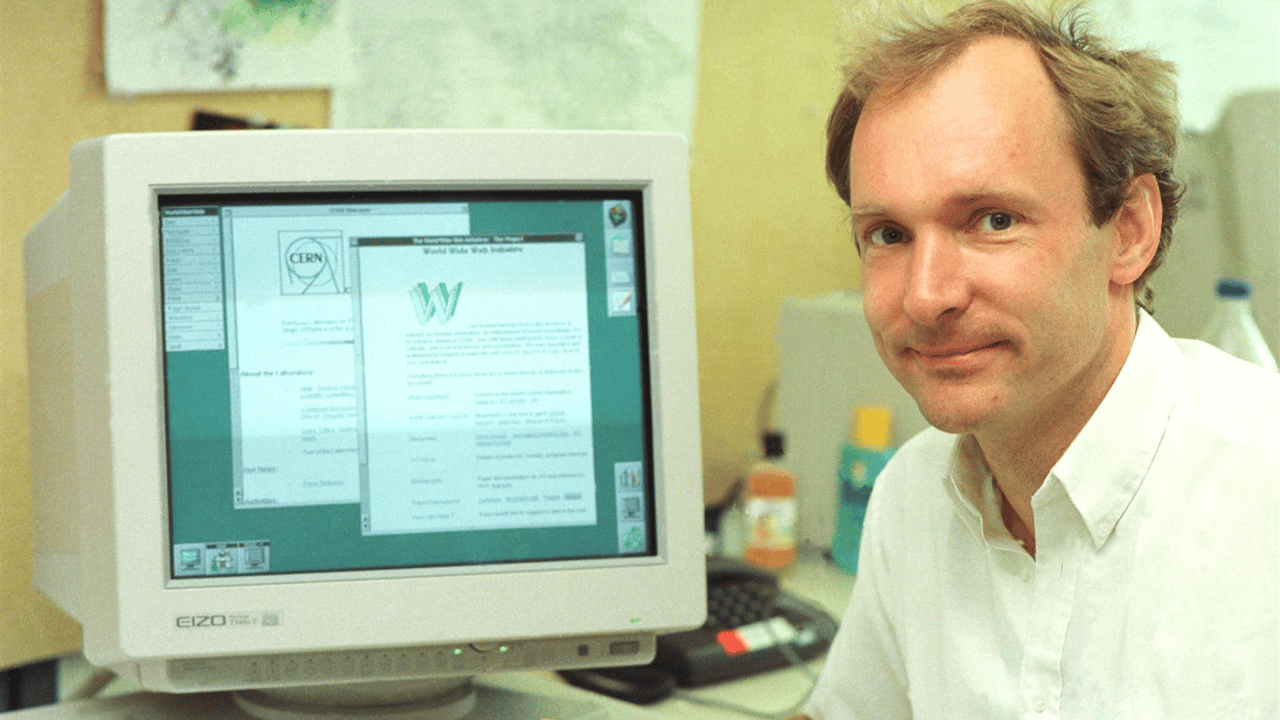

The World Wide Web was conceived in 1989. Its immediate impact on data centers was modest, but by the mid-1990s, the Web’s rapid growth drove surging demand for servers to host websites and, increasingly, online applications and services. That, in turn, expanded the need for data centers to serve as homes for those servers.

Had the Web never come into being, it’s hard to imagine data centers becoming the fixtures of digital life they are today, simply because the global need to deploy and interconnect millions of servers would have been far smaller.

Tim Berners-Lee, inventor of the World Wide Web. (Credit: CERN)

1998: VMware Catalyzes x86 Virtualization

Demand for data center capacity grew further with the arrival of enterprise-grade virtualization on x86 hardware, touched off by VMware, founded in 1998. VMware Workstation debuted in 1999, and server-class products (GSX/ESX) in the early 2000s brought virtualization to mainstream enterprise infrastructure.

By creating virtual servers on physical servers – using widely available, cost-effective x86-based servers – businesses could partition infrastructure into smaller, more flexible units. This dramatically improved server utilization, scalability, and agility, accelerating investment in data centers to support expanding virtualized fleets.

Early VMware product packaging featuring a photograph of company founders. (Credit: Computer History Museum)

Late 1990s: Colocation Goes Mainstream

Around the same time, colocation providers multiplied and rapidly expanded the market. Equinix, for example, launched in 1998, joining a wave of earlier and contemporaneous providers.

Colocation reshaped data center history by offering a new consumption model: Instead of building dedicated facilities, businesses could rent space, power, and cooling in shared data centers.

A screenshot of Telehouse America’s website from June 2004. (Credit: The Wayback Machine)

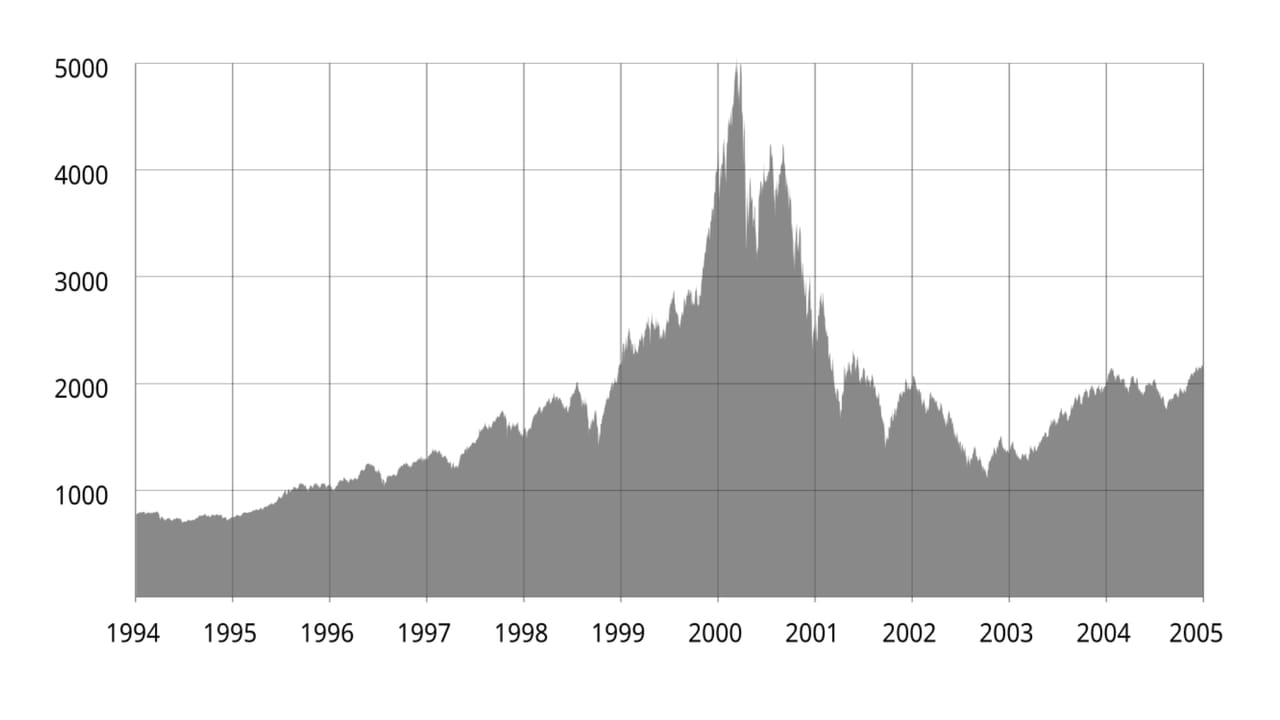

2000: The Dot-Com Boom and Bust

The dot-com era saw a massive expansion in data center construction to support the growing internet economy. Although the bubble burst in 2000, it left behind a foundation of infrastructure that would later be leveraged for the cloud and modern web services.

The NASDAQ Composite index peaked in March 2000 after a steep late-1990s climb, then fell sharply as the dot-com bubble burst. (Source: Wikimedia Commons)

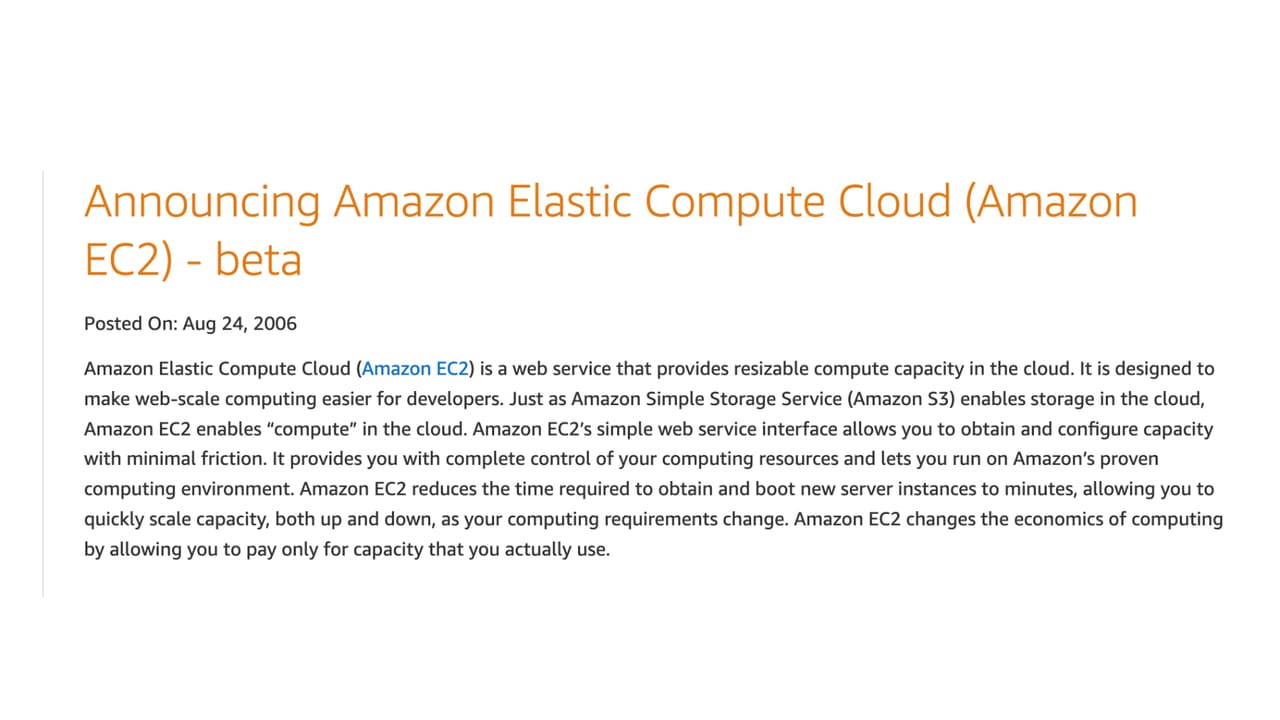

2006: The Public Cloud Enters the IaaS Era

Amazon Web Services (AWS) began releasing foundational services in the mid-2000s, starting with Simple Queue Service (SQS) in 2004 and Simple Storage Service (S3) in early 2006. The inflection point came later in 2006 with the launch of Amazon Elastic Compute Cloud (EC2), which introduced on-demand, elastic computing and effectively kicked off the IaaS era.

This new consumption model accelerated hyperscale data center buildouts, as providers invested in massive facilities to deliver elastic compute and storage at a global scale.

A 2006 press release announcing the launch of Amazon EC2. (Credit: AWS)

2010: The Rise of Hyperscale Data Centers

By around 2010, companies including Google, Facebook, and Microsoft were building hyperscale data centers to support their massive user bases. These facilities introduced innovations in energy efficiency, modular design, and scalability that continue to shape the industry.

Google Data Center in Council Bluffs, Iowa. (Credit: Google)

2013: Cloud Native Kicks Off

Docker’s 2013 debut popularized container-based application deployment. While far from the first container platform, Docker made containers mainstream.

For data centers, this accelerated the shift to cloud-native computing: applications designed to run across large, distributed environments. Operating large fleets of interconnected servers became more critical than ever, reinforcing the need for robust data center capacity.

Solomon Hykes presenting Docker in 2013.

2017: Edge Computing Gains Traction

The rise of IoT and latency-sensitive applications drove the adoption of edge computing. This milestone highlights a shift toward distributed architectures that place compute closer to end users, complementing centralized data centers.

An autonomous tractor at work in the field. (Credit: Alamy)

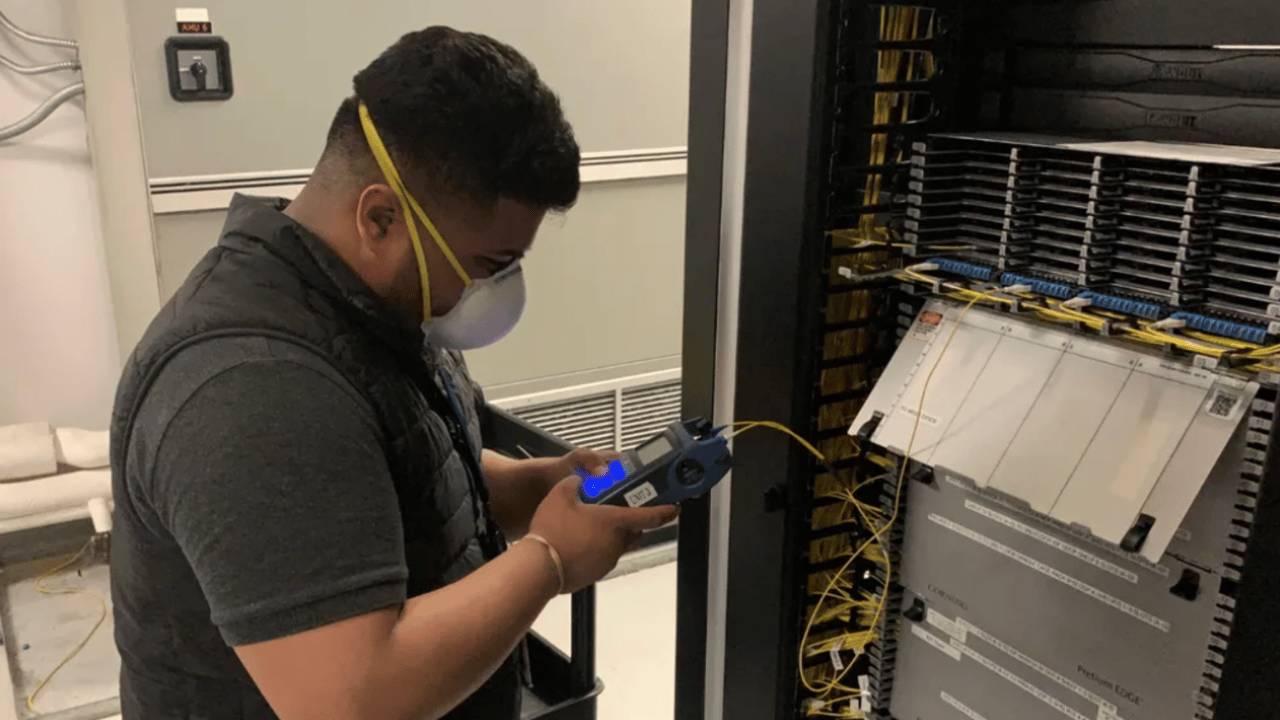

2020: The COVID-19 Pandemic and Remote Work

The pandemic accelerated digital transformation globally, leading to unprecedented demand for cloud services, video conferencing, and remote work solutions. This surge placed immense pressure on data centers to scale rapidly.

A CoreSite engineer testing a cross-connect for acceptable light loss at the company’s Chicago data center during the pandemic. (Credit: CoreSite)

2022: Generative AI Goes Mainstream

Arguably, the most consequential recent milestone in data center history came in late 2022, when OpenAI launched ChatGPT.

Among the first generative AI services to reach a mass audience, ChatGPT heralded the mainstream rise of a fundamentally new type of AI technology. Generative models are driving significant changes in data center design and operations while creating pressing new challenges in scalability and power management, as operators struggle to keep pace with AI’s staggering energy demands.

OpenAI CEO Sam Altman departs after the Senate AI Insight Forum, at the US Capitol, in Washington, DC, on September 13, 2023. (Credit: Alamy)

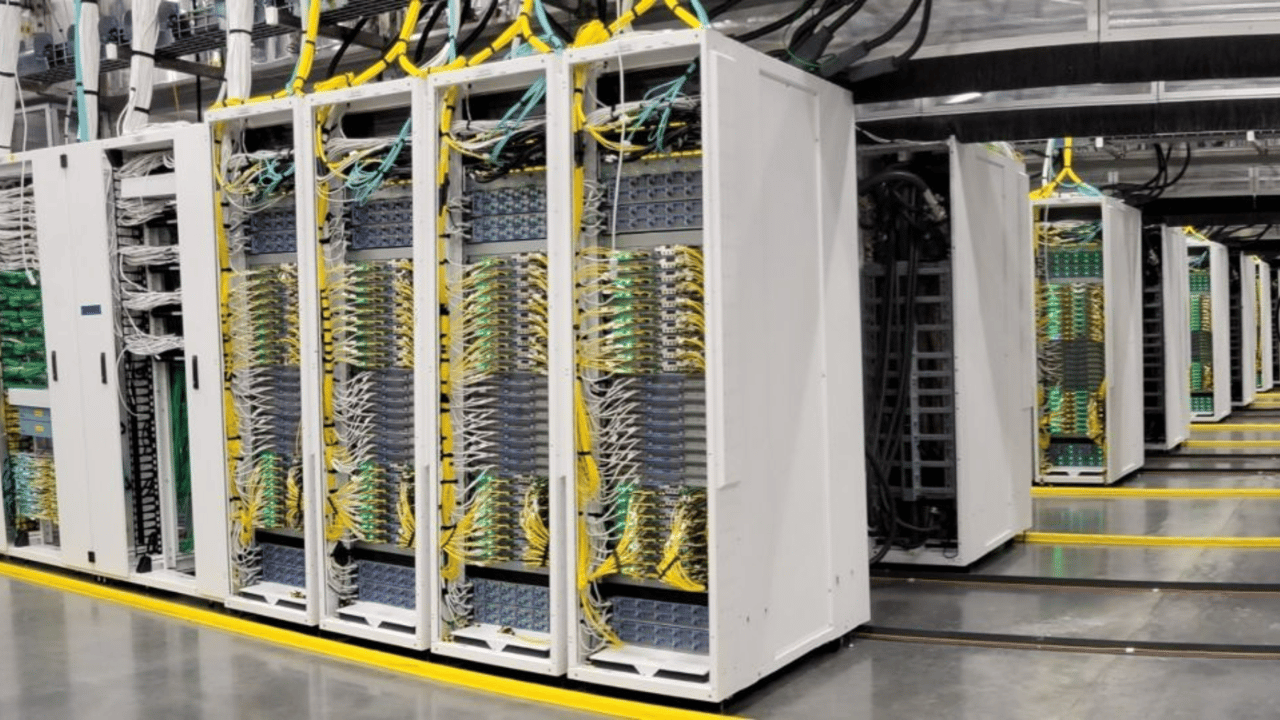

2024: AI Data Centers Become a Distinct Class

By 2024, “AI data centers” emerged as a distinct architectural class, purpose-built to host accelerated computing for training and inference at unprecedented scale. Hyperscalers, colocation providers, and new AI-focused clouds converged on designs optimized for large GPU clusters.

High-density AI clusters in a Microsoft data center. (Credit: Microsoft)

2025 and Beyond: Enter the Neoclouds

From 2025 onward, a new cohort of providers, often called “neoclouds,” is reshaping how organizations consume infrastructure. Rather than competing as general-purpose hyperscalers, neoclouds focus on specialized value: AI-first GPU fleets and HPC-as-a-service, sovereign and industry-regulated clouds with strict data residency, sustainable and locality-optimized capacity, and bare-metal platforms tuned for performance or cost transparency.

Neocloud provider Lambda collaborated with Prime Data Centers to deploy high-density NVIDIA AI infrastructure at Prime's flagship LAX01 AI-ready data center campus in Vernon, Calif. (Credit: Lambda)