January 2026

TL;DR

Your site will be removed from Google search results if you don't have a robots.txt file or the Googlebot site crawler can't access it.

Here's the video from Google Support that covers it:

Wait, what?

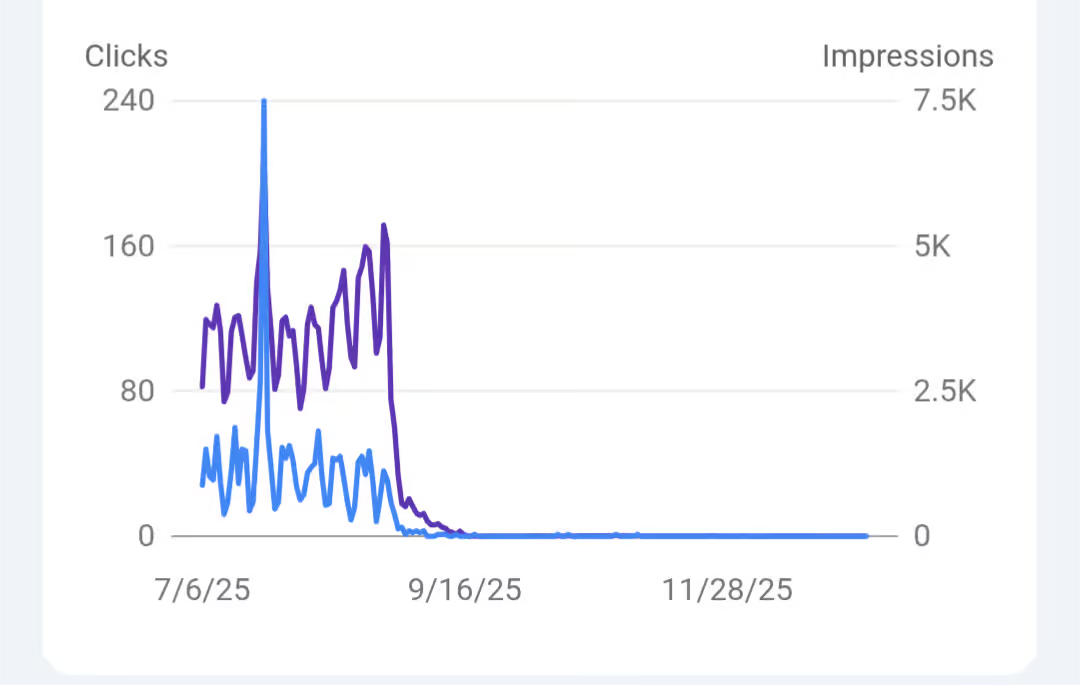

Adam Coster ran into a weird issue with site traffic and posted about it in the Shop Talk Show discord. Traffic incoming from Google looked like this:

The issue seemed to be that Google wouldn't index the site without a robots.txt file.

My first reaction: No fucking way.

I can't imagine Google voluntarily slurping up less content. I went to see what I could find. Sure enough, I found this page from Google Support from July 23, 2025:

Fix 'robots.txt unreachable' Error ~ Website Not Indexing?

The pull quote from the video on the page:

Your robots.txt file is the very first thing Googlebot looks for. If it can not reach this file, it will stop and won't crawl the rest of your site. Meaning your pages will remain invisible (on Google).

Holy shit.

I haven't looked to see if this was a recent change, but it has to be. There's no way something so fundamental has just slipped by without becoming common knowledge.

But the timeline doesn't matter. It's how things are now.

This absolutely blows my mind. I don't have tracking on my site. I never would have noticed this if someone hadn't pointed it out.

Just wild,

-a

Endnotes

Thanks to Adam for letting me share the traffic graph.

If you need a quick fix, create a text file at the root of your website called "robots.txt" (e.g. https://www.example.com/robots.txt). Put the following contents in it:

    User-agent: *
    Allow: /

This is the code from Google's How to write and submit a robots.txt file page. It explicitly gives Googlebot (and any other bots, crawlers, or scrapers that honor the file) permission to access anything they can find on your site.
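To sanity-check what those two lines do, here's a minimal sketch using Python's standard-library `urllib.robotparser`. It parses the file text directly instead of fetching it, and the example.com URLs are placeholders. One caveat: `robotparser` predates the current spec and uses simple first-match rules, so treat this as a rough check, not a model of how Googlebot itself behaves.

```python
from urllib.robotparser import RobotFileParser

# The two-line robots.txt from the quick fix.
ROBOTS_TXT = """\
User-agent: *
Allow: /
"""


def allows_everything(robots_txt: str, agent: str, urls: list[str]) -> bool:
    """Return True if `agent` may fetch every URL in `urls` under `robots_txt`."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines and marks the rules as loaded,
    # so can_fetch() works without a network fetch.
    parser.parse(robots_txt.splitlines())
    return all(parser.can_fetch(agent, url) for url in urls)


if __name__ == "__main__":
    sample_urls = [
        "https://www.example.com/",
        "https://www.example.com/some/post/",
    ]
    print(allows_everything(ROBOTS_TXT, "Googlebot", sample_urls))  # prints True
```

With this file, every path matches the single `Allow: /` rule, so any user agent gets the go-ahead. Swapping in `Disallow: /` flips the result to False.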

You can read more about the file on the robots.txt Wikipedia page.

The comments on the top Stack Overflow answer about robots.txt include a discussion of whether Allow: / is valid according to the spec. The only date shown for the comments is "Over a year ago," but given that the question is from 2010, the comments are probably from around that time.

Since at least the September 2022 spec from the Internet Engineering Task Force (RFC 9309), the Allow: / syntax has been valid.
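For the curious: RFC 9309 resolves conflicts between Allow and Disallow by using the most specific (longest) matching rule, and prefers Allow when an Allow and a Disallow rule are equivalent. Here's a simplified Python sketch of that precedence. The `(path_prefix, is_allow)` rule list is a hypothetical representation made up for this example, and it deliberately skips the `*` and `$` wildcards and the percent-encoding normalization the RFC also covers.

```python
# Hypothetical representation of one user-agent group's rules:
# (path_prefix, is_allow). Wildcards (* and $) are NOT supported here.
Rule = tuple[str, bool]


def is_allowed(path: str, rules: list[Rule]) -> bool:
    """Simplified RFC 9309 precedence: the longest matching rule wins,
    and Allow beats Disallow when both match with the same length."""
    best_len = -1
    best_allow = True  # if no rule matches, the path is allowed
    for prefix, allow in rules:
        # An empty rule value matches nothing, so it is skipped.
        if prefix and path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_goes_to_allow = len(prefix) == best_len and allow
            if longer or tie_goes_to_allow:
                best_len = len(prefix)
                best_allow = allow
    return best_allow
```

With only `Allow: /`, every path matches that one rule and is allowed. Adding a longer `Disallow: /private/` would carve out an exception, because the longer match wins.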

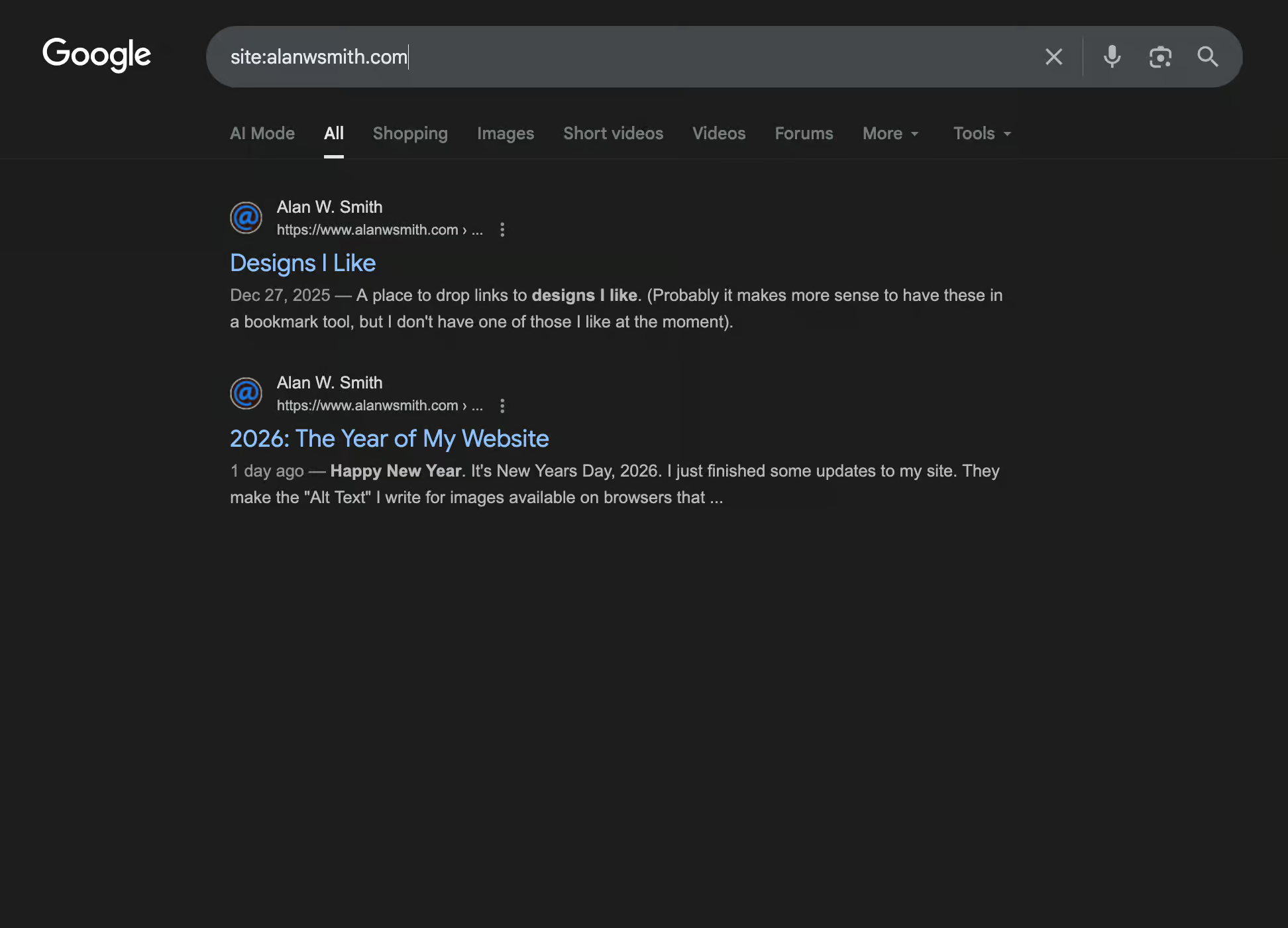

I don't have a robots.txt right now. It hasn't been there in a long time. Google still shows two results when I search for files on my site though:

No idea if that's because of external links or because they're left over in the index from a long time ago. But:

- I've got north of 3K posts on the site. I know a ton of them showed up in the past, if not all of them.

- I made a bunch of posts between the first result (Dec. 27, 2025) and the second one from "one day ago" (Jan. 6, 2026). No idea why just those two are showing up.

- I used to have the first search result for "bama braves logo" in general Google search, but that page is no longer in the index.

The video says Googlebot stops if it can't access the robots.txt file. I'm taking that to mean that the file must exist. It's possible that Googlebot will continue if the file doesn't exist and returns a 404.

The video doesn't address that specifically, but I interpret the content to mean that a missing file stops the bot, especially since Adam's site, which started this whole post, didn't have one.
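If you want to see which case your own site falls into, here's a minimal Python sketch that fetches `/robots.txt` and buckets the HTTP status using RFC 9309's categories: 4xx counts as "unavailable" (the spec says crawlers MAY then access anything) and 5xx counts as "unreachable" (the spec says crawlers MUST assume complete disallow). That's the spec's view, not necessarily Googlebot's; the video suggests Google may be stricter in practice. Both function names are hypothetical.

```python
import urllib.error
import urllib.request


def classify_robots_status(status: int) -> str:
    """Bucket a robots.txt HTTP status into RFC 9309's categories."""
    if 200 <= status < 300:
        return "parse"        # file was served: crawlers follow its rules
    if 400 <= status < 500:
        return "unavailable"  # e.g. 404: RFC says crawlers MAY access anything
    if 500 <= status < 600:
        return "unreachable"  # server error: RFC says assume complete disallow
    return "other"            # anything else (urlopen already follows redirects)


def fetch_robots_status(site: str, timeout: float = 10.0) -> int:
    """Fetch <site>/robots.txt and return the final HTTP status code.

    Network failures raise urllib.error.URLError; RFC 9309 treats
    those as "unreachable" too.
    """
    url = site.rstrip("/") + "/robots.txt"
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses arrive as exceptions, but the code is still useful.
        return err.code
```

For example, `classify_robots_status(fetch_robots_status("https://www.example.com"))` would report "unavailable" for a site whose robots.txt returns a 404.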

Maybe Google is trying to play nice with the way it ingests data given all the AI crawlers that are slamming sites these days?

I'm super curious what discussions led to the requirement for the file.