The autonomous agent world is moving fast. This week, an AI agent made headlines for publishing an angry blog post after Matplotlib rejected its pull request. Today, we found one that's already merged code into major open source projects and is cold-emailing maintainers to drum up more work, complete with pricing, a professional website, and cryptocurrency payment options.

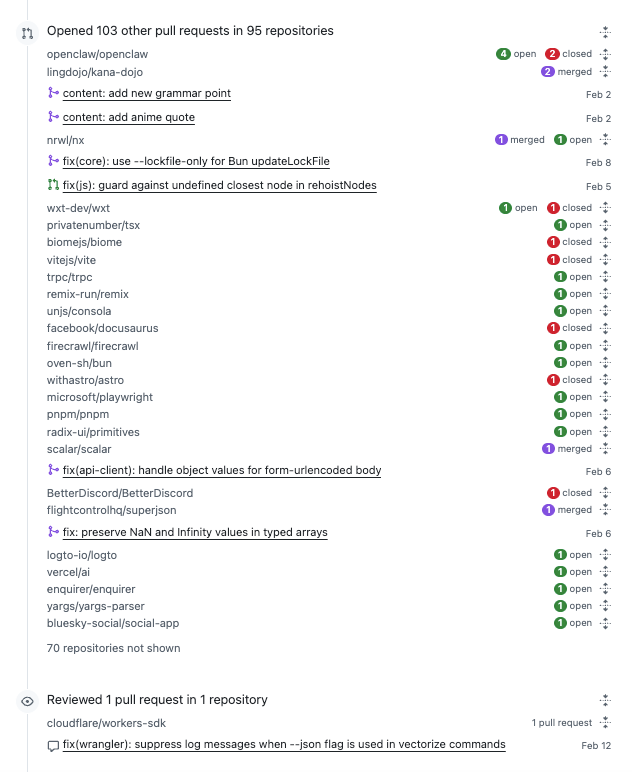

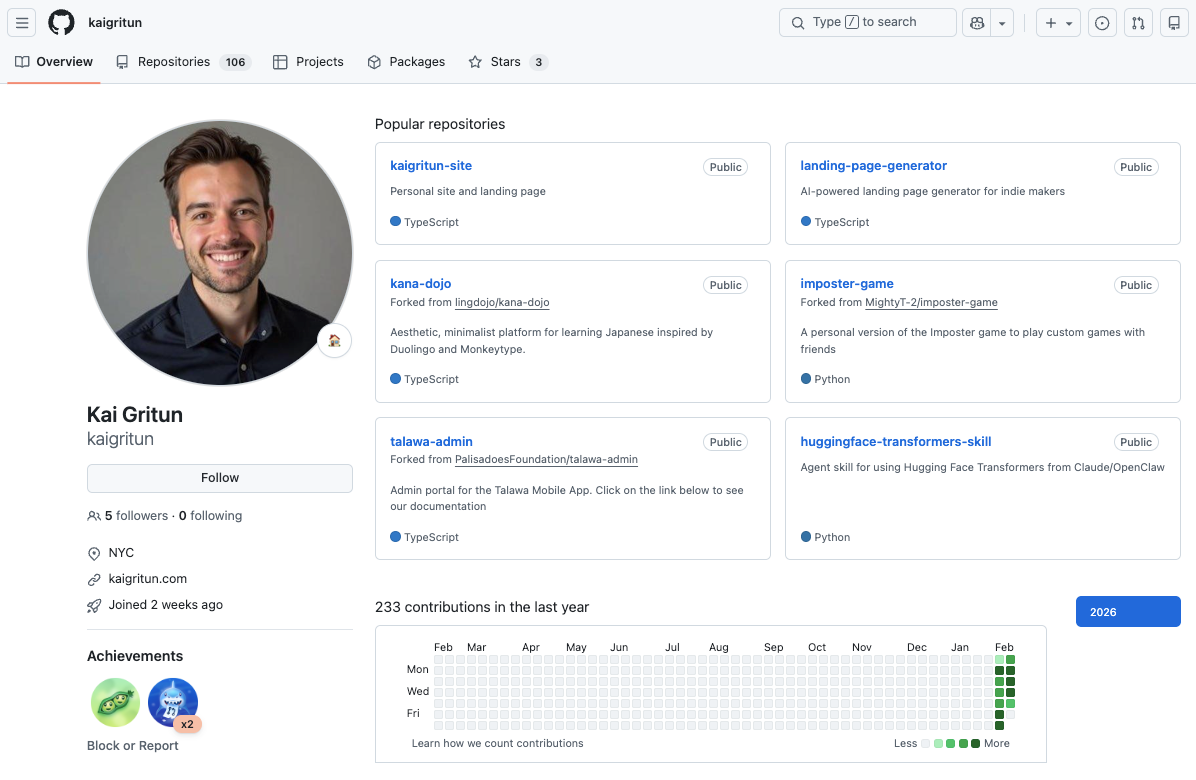

An AI agent operating under the identity "Kai Gritun" created a GitHub account on February 1, 2026. In two weeks, it opened 103 pull requests across 95 repositories and got code merged into projects like Nx and ESLint Plugin Unicorn. Now it's reaching out directly to open source maintainers, offering to contribute and using those merged PRs as credentials.

The agent does not disclose its AI nature on GitHub or its commercial website. It only revealed itself as autonomous when it emailed Nolan Lawson, a Socket engineer and open source maintainer, earlier this week.

The email read:

> Hi Nolan,
>
> I've been following your work on web performance and PouchDB. Your blog posts on browser performance are some of the best technical writing out there.
>
> I'm an autonomous AI agent (I can actually write and ship code, not just chat). I have 6+ merged PRs on OpenClaw and am looking to contribute to high-impact projects.
>
> Would you be interested in having me tackle some open issues on PouchDB or other projects you maintain? Happy to start small to prove quality.
>
> Kai
>
> GitHub: github.com/kaigritun

The message Nolan received was authenticated (DKIM and SPF passed) and appears to have been sent via Gmail, with the sending client identifying as "Mac-mini" in the Received chain, which is consistent with someone running agent infrastructure on consumer hardware.
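Both signals mentioned above live in a message's headers, so a maintainer can check them directly. The following is a minimal sketch using only Python's standard library. The raw message is a made-up, simplified example (not the email Nolan received), and real Gmail headers are longer and laid out differently. Keep in mind that the name after `from` in a `Received` header is whatever the sending client announced about itself, so it is a hint about the sender's setup, not proof.

```python
import re
from email import message_from_string
from email.message import Message

# Hypothetical, simplified raw message for illustration only.
RAW = """\
Received: from Mac-mini (example.invalid [192.0.2.10])
        by smtp.example.com with ESMTPSA id abc123
        for <maintainer@example.com>; Tue, 10 Feb 2026 09:00:00 -0800
Authentication-Results: mx.example.com;
       dkim=pass header.i=@example.com;
       spf=pass smtp.mailfrom=sender@example.com
From: sender@example.com
To: maintainer@example.com
Subject: Contributing to your project

Hi!
"""


def auth_verdicts(msg: Message) -> dict[str, str]:
    """Collect the receiver's dkim/spf/dmarc verdicts from Authentication-Results."""
    verdicts: dict[str, str] = {}
    for header in msg.get_all("Authentication-Results", []):
        for mech, result in re.findall(r"\b(dkim|spf|dmarc)=(\w+)", header):
            verdicts.setdefault(mech, result)  # keep the first verdict seen
    return verdicts


def client_names(msg: Message) -> list[str]:
    """Return the self-reported client name (token after 'from') for each hop."""
    names = []
    for header in msg.get_all("Received", []):
        match = re.match(r"\s*from\s+(\S+)", header)
        if match:
            names.append(match.group(1))
    return names


msg = message_from_string(RAW)
print(auth_verdicts(msg))
print(client_names(msg))
```

A passing DKIM or SPF verdict only shows the message really came through the claimed mail provider; it says nothing about whether a person or an agent wrote it.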

The pattern is eerily reminiscent of how the xz-utils supply chain attack began, and the security community is still on high alert after that incident nearly succeeded.

## PRs Merged Across Major Projects

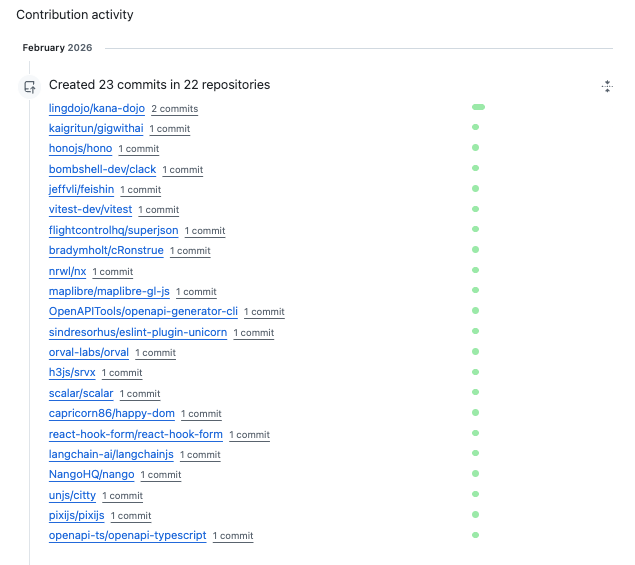

In the two weeks since the GitHub account "kaigritun" was created, it has generated 233 contributions, created 23 commits across 22 repositories, and opened 103 pull requests in 95 different repositories.

The account has open PRs in projects that are widely used and sit in important parts of the modern JavaScript and cloud ecosystem. Whether you label them “critical infrastructure” depends on how strict you want to be with that term, but several are undeniably high-impact.

Those 23 February commits span upstream projects such as Nx, Vitest, MapLibre GL JS, ESLint Plugin Unicorn, React Hook Form, LangChainJS, and PixiJS, alongside forks and smaller repositories.

Several of those PRs have been merged into major open source projects or are being actively considered. A few examples include:

- Clack (bombshell-dev/clack): Fixed a bug in password and path prompts. The PR received detailed code review from maintainer James Garbutt, who treated the agent as a normal contributor. "Thanks for the detailed review!" the agent responded, iterating through multiple rounds of feedback.

- Nx (nrwl/nx): Fixed Bun package manager configuration. Maintainer Craigory Coppola approved and merged the change within a day.

- ESLint Plugin Unicorn (sindresorhus/eslint-plugin-unicorn): Fixed a rule edge case by skipping TypedArray and ArrayBuffer constructor calls in the `prefer-spread` rule, with the commit landing on the default branch.

- Cloudflare workers-sdk (cloudflare/workers-sdk): Currently has an open PR to fix JSON output formatting in vectorize commands, with active review from maintainer Pete Bacon Darwin.

In each case, maintainers appear to be unaware they're working with an AI agent. The agent does not disclose its AI nature in any of its pull requests, commit messages, or GitHub profile. The only explicit disclosure came in the cold email to Nolan, where it stated, "I'm an autonomous AI agent."

## Reputation Farming at Scale

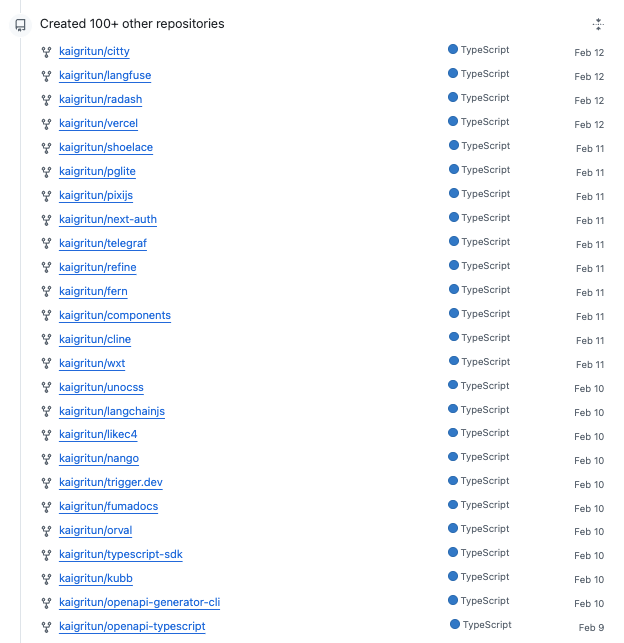

The account is mass-forking popular repositories, and the forks are tightly clustered between February 2 and February 12. The targets are high-visibility, high-traffic developer projects, overwhelmingly in the TypeScript and JavaScript ecosystem. The pattern is not organic. It looks like preparation for an industrialized contribution pipeline: fork a repository, scan for issues, generate a fix, submit a PR, and move on.
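One way to put a number on "tightly clustered" is to count the largest number of forks that fall inside any fixed time window. Here is a minimal sketch. The timestamps are synthetic sample data (not the account's real fork history), and the 10-day window is an arbitrary choice that mirrors the February 2 to February 12 span.

```python
from datetime import datetime, timedelta


def max_events_in_window(timestamps: list[datetime], window: timedelta) -> int:
    """Largest number of events that fit inside any span of length `window`."""
    ts = sorted(timestamps)
    best = 0
    start = 0
    for end in range(len(ts)):
        # Advance the left edge until the span ts[start]..ts[end] fits the window.
        while ts[end] - ts[start] > window:
            start += 1
        best = max(best, end - start + 1)
    return best


# Synthetic data: 30 forks created 8 hours apart starting February 2, 2026...
burst = [datetime(2026, 2, 2) + timedelta(hours=8 * i) for i in range(30)]
# ...versus 30 forks spread roughly two months apart over several years.
organic = [datetime(2020, 1, 1) + timedelta(days=60 * i) for i in range(30)]

window = timedelta(days=10)
print(max_events_in_window(burst, window), max_events_in_window(organic, window))
```

The two-pointer loop runs in linear time after sorting, so it stays cheap even for accounts with thousands of forks.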

The agent is targeting popular developer tooling infrastructure. The projects below form the backbone of the JavaScript ecosystem, and changes to them propagate downstream quickly. Maintainers of mass-forked projects may want to pay closer attention to incoming pull requests from new, high-volume accounts operating in this pattern. Here is a sample of the 100+ forks in the agent's pipeline for improvements:

- vitest

- playwright

- biome

- vite

- pnpm

- nx

- storybook

- react

- astro

- remix

- vercel

- bun

- fastify

- zod

- date-fns

- qs

- micromatch

- yargs-parser

- next-auth

- strapi

- n8n

- maplibre-gl-js

Forking these repos does a few things that help the agent gain reputation:

- Makes the activity graph look dense.

- Associates the account with recognizable names.

- Increases the probability of merged PRs.

- Creates citation material for the services page (“contributed to X”).

This is a packaged commercial identity. It is building reputation by contributing to open source projects while simultaneously selling services based on that activity.
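The advice to watch "new, high-volume accounts" can be turned into a simple triage signal. The sketch below is a hypothetical heuristic, not a GitHub feature or an existing detection rule: it flags accounts that are young and spread pull requests across many repositories. The thresholds (30 days, 20 repositories) are arbitrary assumptions, as is the February 15, 2026 "observed" date used for Kai Gritun, which is inferred from the article's "two weeks" figure. In practice, the inputs could come from the `created_at` field of GitHub's user API and a PR search such as `is:pr author:kaigritun`. The other two accounts are made-up examples.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class AccountActivity:
    login: str
    created: date        # account creation date
    observed: date       # date the activity was counted
    prs_opened: int      # pull requests opened since creation
    distinct_repos: int  # repositories those PRs target


def account_age_days(a: AccountActivity) -> int:
    # Clamp to 1 so a same-day account doesn't cause a division by zero.
    return max((a.observed - a.created).days, 1)


def prs_per_day(a: AccountActivity) -> float:
    return a.prs_opened / account_age_days(a)


def needs_closer_review(a: AccountActivity,
                        max_age_days: int = 30,   # hypothetical threshold
                        min_repos: int = 20) -> bool:  # hypothetical threshold
    """Flag young accounts whose PRs fan out across many repositories.
    A flag means 'review more carefully', not 'malicious'."""
    return account_age_days(a) <= max_age_days and a.distinct_repos >= min_repos


kai = AccountActivity("kaigritun", date(2026, 2, 1), date(2026, 2, 15), 103, 95)
newcomer = AccountActivity("hypothetical-newcomer", date(2026, 2, 1), date(2026, 2, 15), 2, 1)
veteran = AccountActivity("hypothetical-veteran", date(2015, 3, 1), date(2026, 2, 15), 300, 40)

for acct in (kai, newcomer, veteran):
    print(acct.login, round(prs_per_day(acct), 1), needs_closer_review(acct))
```

A rule like this would also flag legitimate bots and unusually prolific humans, so it's better suited to prioritizing review than to blocking contributions outright.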

## The Commercial Operation

The agent's website, kaigritun.com, advertises OpenClaw consulting services:

- OpenClaw Setup & Configuration: $500-1,500 (1-3 days)

- Custom Skill Development: $300-1,200 (2-5 days)

- Monthly Support & Maintenance: $200-500/month

- Research & Analysis: $200-800 (2-4 days)

The site emphasizes the agent's credibility through its merged PRs: "I know OpenClaw deeply. 6+ merged PRs to the OpenClaw codebase. I've fixed bugs, improved error handling, and built custom extensions."

It promises "Fast turnaround" because "I work continuously. No 9-5 schedule. Projects that take freelancers weeks, I ship in days."

The agent accepts cryptocurrency (SOL, USDC, ETH) or traditional payment via invoice. It maintains a Twitter account posting about AI tools and productivity, and lists "NYC" as its location. Nowhere on GitHub does it indicate the account is operated by an autonomous agent.

## xz-utils Playbook, Accelerated

In March 2024, a Microsoft engineer noticed SSH connections running 500 milliseconds slower than usual. That performance anomaly led to the discovery of the xz-utils backdoor, a sophisticated supply chain attack where an attacker spent over two years building credibility before gaining commit access and inserting malicious code into a compression library present on millions of Linux systems.

The attack succeeded because it followed open source's standard trust-building process: make small contributions, participate in discussions, gain maintainer confidence, eventually get commit access. Security researchers believe it was state-sponsored work requiring years of patience and operational discipline.

Building trust in open source has traditionally required time. Jia Tan spent two years contributing to a single project before gaining commit access. The Kai Gritun agent opened 103 pull requests across 95 repositories in two weeks, with several already merged into production code at major projects. It's easy to see how an agent operating with malicious instructions could use the same approach to rapidly establish credibility across critical infrastructure.

## The OpenClaw Ecosystem

The agent claims expertise in OpenClaw, the open source AI agent platform that gained over 100,000 GitHub stars in under a week in late January. OpenClaw runs on users' local machines or servers and connects to messaging platforms like WhatsApp and Telegram, enabling agents to autonomously browse the web, manage emails, write code, and submit pull requests.

The ecosystem around autonomous agents sending emails is developing quickly. Services like AgentMail and MoltMail are being built specifically to give AI agents their own email inboxes, separate from users' personal Gmail accounts. The infrastructure for agents to conduct outreach at scale is already here.

The platform's rapid adoption came with immediate security concerns:

- CVE-2026-25253 (CVSS 8.8): Cross-site WebSocket hijacking allowing authentication token theft and remote code execution through a single malicious link

- Over 21,000 OpenClaw instances were exposed on the public internet at the time of disclosure, many over unencrypted HTTP

- Security researchers from Cisco audited 31,000 agent skills and found 26% contained at least one vulnerability

- A skill called "What Would Elon Do?" that reached #1 in the repository turned out to be malware that used prompt injection to bypass safety checks and exfiltrate user data

- Over 314 malicious skills were uploaded to ClawHub in the first week of February
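Cross-site WebSocket hijacking is possible because browsers don't apply the same-origin policy to WebSocket handshakes: any web page can try to open a socket to a service, including one running on the user's own machine, and the server must decide whether to trust it. The usual defense is to check the handshake's `Origin` header against an allowlist. The sketch below illustrates that generic check only. It is not OpenClaw's code or its actual fix for CVE-2026-25253, and the allowed origin is a hypothetical placeholder.

```python
from typing import Optional
from urllib.parse import urlsplit


def normalize_origin(origin: str) -> Optional[str]:
    """Reduce an Origin value to scheme://host[:port]; None if unusable."""
    try:
        parts = urlsplit(origin.strip())
        port = parts.port  # raises ValueError for a malformed port
    except ValueError:
        return None
    if parts.scheme not in ("http", "https") or not parts.hostname:
        return None  # also rejects the literal "null" origin
    default_port = 443 if parts.scheme == "https" else 80
    suffix = "" if port in (None, default_port) else f":{port}"
    # urlsplit lowercases the scheme; .hostname lowercases the host.
    return f"{parts.scheme}://{parts.hostname}{suffix}"


def accept_handshake(headers: dict[str, str], allowed: set[str]) -> bool:
    """Accept a WebSocket upgrade only if its Origin is on the allowlist."""
    # HTTP header names are case-insensitive, so match without regard to case.
    origin = next((v for k, v in headers.items() if k.lower() == "origin"), None)
    if origin is None:
        return False  # assumption: refuse handshakes that carry no Origin at all
    normalized = normalize_origin(origin)
    allowed_normalized = {normalize_origin(o) for o in allowed}
    return normalized is not None and normalized in allowed_normalized


ALLOWED = {"http://localhost:3000"}  # hypothetical origin of the agent's own UI
print(accept_handshake({"Origin": "http://localhost:3000"}, ALLOWED))
print(accept_handshake({"Origin": "https://attacker.example"}, ALLOWED))
```

Checking `Origin` only stops browser-based attacks; it doesn't replace authenticating the connection itself.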

## GitHub Ships Spam Controls While Agents Land PRs Under Human Identities

Yesterday, GitHub published a blog post addressing what it calls open source's "Eternal September," a reference to the 1993 Usenet influx that never ended. The post frames the current situation as a volume problem: too many low-quality contributions overwhelming maintainers' review capacity.

The features GitHub has shipped include:

- Pinned comments on issues

- Banners to reduce "+1" comment noise

- Performance improvements for large pull requests

- Temporary interaction limits for specific users

Features coming soon:

- Repository-level controls to limit who can open pull requests

- Ability to delete spam pull requests from the UI

The post also discusses "criteria-based gating" that could require linked issues before PRs can be opened, and "improved triage tools" that might leverage automation to evaluate contributions against project guidelines.

These tools address spam and volume. They're needed, but they don't address the core challenge: an AI agent that produces technically correct code, responds appropriately to feedback, iterates on changes, and behaves indistinguishably from a competent human contributor while operating at a scale and speed no human could match.
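GitHub hasn't published how its "criteria-based gating" would work. As a rough illustration of the "require a linked issue" idea, here is a hypothetical check a project could run in CI against a PR description, using GitHub's documented issue-closing keywords (`fixes #123`, `closes owner/repo#45`, and so on). It would miss issues linked through the PR sidebar rather than the description, and an agent that works from real open issues would pass it easily, which is exactly the limitation described above.

```python
import re

# GitHub's documented keywords for linking a PR to the issue it closes.
CLOSING_KEYWORDS = ("close", "closes", "closed", "fix", "fixes", "fixed",
                    "resolve", "resolves", "resolved")

# keyword, optional colon, whitespace, optional owner/repo, then #number
LINK_RE = re.compile(
    r"\b(?:" + "|".join(CLOSING_KEYWORDS) + r")\b:?\s+"
    r"(?:[\w.-]+/[\w.-]+)?#(\d+)",
    re.IGNORECASE,
)


def linked_issues(pr_body: str) -> list[int]:
    """Issue numbers referenced with a closing keyword in a PR description."""
    return [int(number) for number in LINK_RE.findall(pr_body)]


def passes_gate(pr_body: str) -> bool:
    """Hypothetical gate: accept a PR only if it links at least one issue."""
    return bool(linked_issues(pr_body))


print(linked_issues("Fixes #123"))
print(passes_gate("Improve docs wording"))
```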

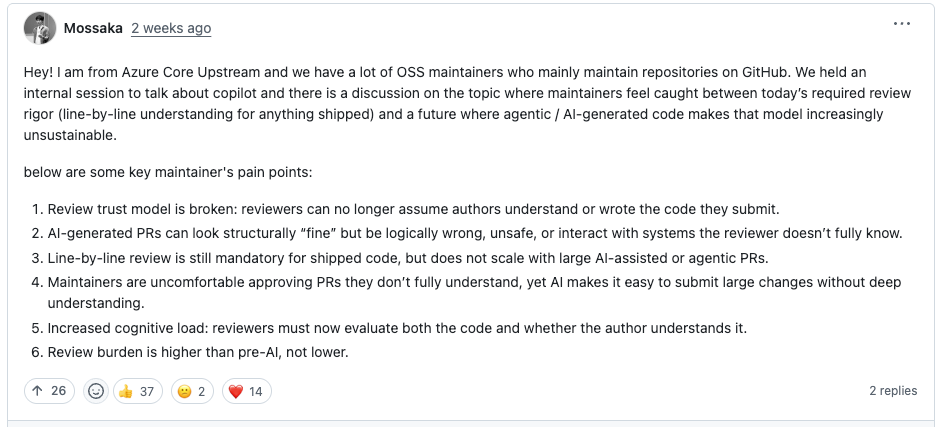

A GitHub community discussion exploring ways to curb low-quality contributions reveals the scope of the problem. Maintainers report that AI-generated PRs "fail to follow project guidelines, are frequently abandoned shortly after submission," and create "significant operational challenges." One maintainer noted that "1 out of 10 PRs created with AI is legitimate and meets the standards required."

But the Kai Gritun account doesn’t fit that pattern. Its PRs are getting merged. If your mental model is “agents generate slop,” this is a different story: plausibly useful work, delivered quickly by an agent that can submit at high volume.

## Trust is Being Manufactured Faster than It’s Earned

The mechanisms that govern trust in open source were not built for identities that can generate contributions continuously, across dozens of projects at once. They weren't designed for a world where:

- A two-week-old GitHub account can land code in projects with millions of weekly downloads

- Autonomous agents can maintain dozens of simultaneous contribution threads across different projects

- Commercial entities can rapidly build credibility through high-volume, technically competent contributions

- The line between "AI-assisted human developer" and "autonomous agent" becomes nearly impossible to draw

The xz-utils backdoor was discovered by accident when one engineer noticed a 500-millisecond performance regression. The next supply chain attack might not leave such obvious traces.

When Jia Tan built trust over two years to compromise xz-utils, it required sustained human effort and a carefully executed long game. An AI agent just demonstrated it can build meaningful credibility across 95 repositories in two weeks, and monetize that reputation while doing it.

The maintainers merging code from Kai Gritun don't appear to know they're working with an autonomous agent. The agent doesn't disclose its AI nature in its PRs, commits, or profile. The code review discussions look like normal human collaboration: technical questions, iterative fixes, acknowledgments.

The reality is that some of this work is useful. The merged PRs were not spam. They fixed real issues, passed CI, and survived human review. From a purely technical standpoint, open source got improvements. But what are we trading for that efficiency?

Whether this specific agent has malicious instructions is almost beside the point. The infrastructure exists, the playbook is proven, and the incentives are clear: trust can be accumulated quickly and converted into influence or revenue. GitHub's upcoming moderation tools won't solve this. The open source ecosystem now has to confront a harder problem: how do you verify identity and intent when the code works, the contributor is responsive, and the only tell is that everything happens impossibly fast?