Over the course of the last year, our team at Skyfall has started to build the foundations of an ‘AI CEO’. For enterprises, an AI CEO addresses a fundamental bottleneck of modern business: cognitive scale. Corporations operate across dozens of geographies, with thousands of employees and even more processes and workflows. Yet they rely on a small leadership team to synthesize all of this information, with the CEO being the main decision maker. An AI CEO could simulate potential scenarios, anticipate financial and geo-political disruptions, and continuously optimize decisions across the entire enterprise. We believe that an AI CEO has tremendous potential to transform how enterprises operate, bringing enormous efficiencies of scale to cognitive decision making.

Historia Brevis Mercaturae et Societatum: A Brief History of Trade and Enterprises

The history of business and trade began in the clay tablets of ancient Mesopotamia, where early merchants carved records of grain, livestock, and items to barter via simple trade agreements. These merchants were not just trading goods; they invented the concept that the human decision making process could be codified and shared. It was the first glimpse of the modern enterprise: the idea that systems could scale human cooperation beyond the limits of personal records.

The Roman empire expanded that spark into a bold structural innovation via the societas publicanorum, the earliest form of a business corporation. These entities operated with shares and transferable ownership, along with involvement from the public. These Roman businesses had a powerful idea: that a corporation could eventually become an institution in its own right and could transcend any single individual.

The idea of a corporation capable of outlasting its founders, spanning continents and surviving political upheavals became a reality during the Age of Exploration. The Dutch East India Company, founded in 1602, was a pioneer in this regard, combining capital markets, state power, and professional management into the first true multinational corporation. The foundations of modern capitalism were on full display here, as financial markets and corporate governance were born during this era.

Fast forward to the 21st century, and modern corporations operate as data-driven systems designed for large scale decision making, specialized operational efficiency, and seamless execution across the world. There is a structured separation of ownership and control in these enterprises. Shareholders provide the required capital; the board of directors sets the direction; and professional management runs the enterprise, with the CEO (Chief Executive Officer) at its head.

The role of a CEO in a Modern Enterprise

The job of a CEO of an enterprise is among the most difficult in the world. The work is extremely varied: setting the overall company vision, direction and strategy; managing the expectations of the board, shareholders, employees and customers; handling public relations; hiring key personnel; and managing the talent of the executive team and beyond. The CEO must operate across several areas (business operations, technology, political, economic and cultural) and must combine their knowledge and acumen into a unified direction for the entire enterprise. Some of the key professional roles of a CEO are listed below:

- Chief Decision Maker - CEOs are the chief decision makers of the corporation; they must make high-stakes decisions under high uncertainty, with large consequences for all the stakeholders, customers and beyond. One wrong decision could wipe out billions of dollars or thousands of jobs, or could even cause a geo-political war.

- Long Term Strategist - A CEO should have a decades-spanning vision for where the company needs to go. This includes anticipating technological disruption, cultural and market shifts, global macro-economic trends and political risks. Though they report to the board of directors on a quarterly basis, they must back their actions with a strategy framework for the enterprise that can survive financial crises and geo-political wars in the long term.

- Execution Architect - Beyond having a long term vision, the CEO must translate strategy into actionable direction for the entire enterprise. As the main Execution Architect of the corporation, the CEO needs to define the enterprise’s priorities, allocate resources appropriately and ensure that there is organizational alignment. They build the operational, financial and cultural frameworks that enable thousands of employees to work in synchrony.

- Enterprise Oracle - A CEO is essentially an Enterprise Oracle that consolidates all the high level data and information about all the departments, processes and employees within the corporation. They have to gather and filter the relevant information from market trends, customer discussions, technology paradigm changes, competitive and regulatory analysis, as well as internal performance metrics. They then need to convert this plethora of complex and ambiguous information into meaningful insights and direction for the corporation.

Setting the foundations of an ‘AI CEO’

A modern enterprise consists of employees who develop a product or service that it can sell to other enterprises in order to generate revenue and increase profit for its shareholders. Employees typically collaborate with each other to set goals and objectives, make short and long term plans, and execute on these plans.

We define Enterprise Super Intelligence (ESI) as a single unified system that has the collective intelligence and execution prowess of the entire enterprise - its employees, processes, workflows, products and services. In essence, we imagine a future where a completely autonomous business operates by itself to achieve its long term objectives such as maximizing revenue, profits and market share.

We believe that achieving Enterprise Super Intelligence is necessary for humans to achieve their maximum potential by drastically increasing operational efficiency of the entire workforce and letting them focus on creative, moral, scientific and philosophical pursuits to advance human civilization.

There are several components of the path that will help us achieve Enterprise Super Intelligence in the long term. One of the most important components towards attaining this vision (which we will focus on in this blog post) is setting the foundations of what we call an “AI CEO”.

What is an AI CEO?

An AI CEO is an autonomous Artificial Intelligence system capable of performing the highest-level executive functions of an enterprise; most critically, creating the high-level vision and strategy for the entire organization and ensuring that there is a framework for effective execution for all the employees of the corporation. Today’s popular AI tools assist employees in specific, isolated tasks such as coding and market research, or they follow fixed scripts in public facing roles such as customer service agents. The premise of the AI CEO is far grander: to be the central brain of the enterprise. The AI CEO should be capable of perceiving the entire business as one unified entity and making strategic and coordinated decisions in a timely manner.

The AI CEO is neither an LLM powered chatbot nor a suite of disconnected agents doing simple RAG based retrieval tasks. It should ingest large quantities of cross-functional data streams and reason about the long term consequences of its actions. It should run large scale enterprise-wide simulations to avoid costly mistakes, and choose the optimal course of action. The AI CEO should dynamically allocate resources, set priorities for all the tasks within the organization, identify potential risks, and continually update its decision making framework based on the feedback received for its actions.

Fundamentally, the AI CEO has key advantages that will enable it to perform at a superhuman level in the roles of a CEO described above (Chief Decision Maker, Long Term Strategist, Execution Architect and Enterprise Oracle). Unlike any human, it can scale in breadth and depth, ingesting and cross-referencing gigantic quantities of structured and unstructured enterprise data, allocating the full computational resources needed for exploration and analysis, and finally synthesizing all the information into actionable decisions without any emotional, political or cultural bias. Furthermore, it can do so ceaselessly and without rest, fully leveraging the modern global economy.

Why not build an AI CEO using LLMs?

Can LLMs be used to create an AI CEO? There are significant practical challenges that make the task effectively impossible, as they would amplify many of LLMs’ worst properties.

- Enterprise Data - At its simplest, the key challenge is the structure and properties of enterprise data. Enterprises guard their data both due to legal obligations around sensitive information, and to maintain a competitive advantage. Consequently, the internet does not contain vast amounts of SAP ERP or Salesforce CRM instances with detailed operating procedures or the schemas linking the tables of various structured databases. Modern LLMs, which are trained mainly on Reddit and the rest of the public internet, are operating out of domain and in uncharted waters when it comes to enterprise. Furthermore, the business environment changes rapidly and without warning, and a CEO must be able to adapt and weather the storm – this fast and continual learning from limited data is something that today’s LLMs are incapable of.

- Causal knowledge - In fact, this difficulty isn’t a mere engineering challenge. Once we start getting further from the training data, we start running into serious problems at the theory level. Robustly performing in a shifting domain (such as business) requires causal knowledge. Learning causal knowledge from a blank slate requires intervention: the ability to take actions and observe the results. In practice, LLMs only train from intervention in the final stage of their training pipeline: Reinforcement Learning from Human Feedback (RLHF) and from Verifiable Rewards (RLVR).

RLHF was designed for both correctness and alignment, rewarding the model for outputs that satisfy humans and training the model to seek these rewards. To ensure that models learn the correct things, these annotations require expert knowledge – and while there will always be fresh University graduates looking to make some extra cash, competent CEOs value their time immensely and are compensated accordingly. Producing datasets of CEO preference data is not economically feasible – and no one else is qualified for the job.

In contrast, RLVR allows models to learn directly from large amounts of experience. To train effective reasoning, models need this experience to connect how their reasoning leads to correct or incorrect results. RLVR enables this by giving the model a task that can be easily checked as correct or incorrect (e.g., solving a math problem), and then rewarding the model only when its thinking leads to the correct answer. This works very well for mathematics and coding tasks where executing the solution in the environment is cheap and easy. This is not, however, feasible for business: we can’t execute our AI CEO’s decisions and reward it based on how results unfold – this would be impractically slow, confounded by external factors such as market conditions, and ruinously expensive.

- Inapplicable Scaling Laws - At very significant expense, small datasets for a specific enterprise could be created – but scaling laws would hamper the performance of any model trained on them. Less training data means training a smaller model, and as models get smaller their performance decreases. These issues cannot be practically overcome at the scale required to train modern LLMs.

To give an example, recent work has shown that model size significantly impacts the ability to reason about uncertainty – a major issue for the AI CEO. Researchers at Meta and the University of Maryland found that smaller models such as gpt-4.1-mini, Llama-3.3-70B, and DeepSeek-R1-Distill-Qwen-7B all struggled with sampling from conditional probabilities, and that performance declined both as model size decreased and as the complexity of the task (and correspondingly the length of the context) increased. Relatedly, researchers at Apple found that similar LLMs struggled to estimate their own uncertainty when executing instruction-following tasks – a problem, since a competent AI CEO must be able to gauge its confidence in the correctness of its plan of action. This is exacerbated by LLMs’ limited ability to plan: even on relatively simple tasks such as travel planning, reasoning models such as o1 can score as low as 58%, while small models such as Llama 8B barely reach 12% accuracy and can’t pass 15% even with chain of thought, self-consistency, or tree of thought methods for test-time scaling.

- Hallucinations - Failing to get the right answer is not the worst case scenario – worse than a wrong answer is a misleading one. Hallucination, the generation of answers that contain factual errors or logical leaps while being superficially plausible, is one of the significant barriers to LLM adoption in high-risk enterprise domains such as finance, healthcare and robotics. An LLM powered AI CEO, responsible for the livelihoods of thousands of employees, is a non-starter for these enterprises. Research on information answering tasks has found increased hallucination rates with smaller models, the hallucination rate sometimes doubling with smaller model variants; other work has reported similar results on hallucination rates in long-form question answering.
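To make the RLVR discussion above concrete, here is a minimal sketch of a verifiable reward for an arithmetic task. The `ANSWER:` output convention and the function name `verifiable_reward` are assumptions made for illustration; they are not taken from any specific training pipeline.

```python
import re


def verifiable_reward(model_output: str, expected_answer: str) -> float:
    """Return 1.0 only if the final answer in the output matches the known answer.

    Assumes (hypothetically) that the model ends its reasoning with
    a line fragment of the form 'ANSWER: <value>'.
    """
    match = re.search(r"ANSWER:\s*(\S+)\s*$", model_output.strip())
    if match is None:
        return 0.0  # no parseable final answer, so no reward
    return 1.0 if match.group(1) == expected_answer else 0.0


# Three hypothetical model outputs for the task "What is 17 * 3?"
samples = [
    "17 * 3 = 51, so ANSWER: 51",   # correct reasoning and answer
    "17 * 3 = 41, so ANSWER: 41",   # wrong answer
    "I think it is about fifty.",   # no final answer in the expected format
]
rewards = [verifiable_reward(s, "51") for s in samples]
```

The check is a few lines of string matching, which is what makes the reward cheap enough to compute over and over during training. No comparably cheap check exists for a strategic business decision, whose outcome may take years to observe and depends on factors outside the decision maker's control.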

How hard is it for AI to run a business?

Let’s consider a simple business that’s easy to quantify. In Roller Coaster Tycoon (RCT) the player takes the role of a CEO and manages an amusement park business where they construct rides, hire staff, market their offering, and generally oversee their park operations. Now let’s compare this to some landmark challenges in the history of AI research: Chess, Go, Pac-Man*, Montezuma’s Revenge, Dota 2, and the International Math Olympiad.

Historic AI breakthroughs share one critical trait: they were solved with oceans of precise, domain-specific data.

- Chess & Go: Millions of exact game states and moves.

- Dota 2: Over 180 years of simulated gameplay.

- Math Olympiad: Trained on the sum of human mathematical knowledge via the internet, plus curated proofs.

However, RCT is a much more challenging environment than every other benchmark listed above, due to the following factors:

- Test time Generalization - Every AI success story above depended on exhaustive examples of how to act step by step. Business cannot afford this luxury: it is impossible to collect centuries of granular decisions. The internet can provide high-level advice, but not centuries of second-by-second playthroughs of RCT – and forget about sensitive, real-world enterprise data. This creates a profound need for generalization: going from broad common-sense knowledge to low-level actions without relying on massive historical datasets.

- The Nature of the Goal - Another difference between RCT and the rest of the games: business objectives are completely open-ended. Maximizing profit is not a finish line, it’s a moving horizon. There’s no provably correct strategy, no “checkmate” moment. Contrast this with a chess win, a solved proof, or a perfect Pac-Man run. Those tasks end. Businesses do not.

- The Imperative of Continuous Learning - Because data is scarce and the optimum is unknowable, businesses must learn continuously via focus groups, pilot projects, A/B tests, and applied research. But even the thousands of samples needed for fine-tuning an LLM are impractical. Only a few companies can sustain thousands of pilot projects. We need systems that learn from tens of samples, leveraging prior knowledge to choose what to test. In RCT terms: what happens when we unlock a new ride? There’s no vast dataset on how to price, place, and staff it. At best, there’s general advice, which is often wrong, incomplete, or blind to better ideas. Businesses experiment because improvement is survival.

- The Long Horizon - Unlike games or proofs, businesses are built to keep going. The Hudson’s Bay Company operated for 355 years, pivoting from the fur trade to consumer retail. The Hotel Krone in Switzerland predates the industrial revolution, housing guests since 1418. And Japan’s Kongō Gumi has thrived for over 1,400 years. A business must outlive its founders, adapting across centuries. There is no “game over.”

- The Uncertainty Factor - Business environments are unpredictable. Rides break down, guests fluctuate, weather shifts. Risk estimation becomes essential, a task that humbled Nobel laureates at Long-Term Capital Management. Compare this to deterministic domains: bishops never start moving horizontally, each proof step is right or wrong, and Pac-Man never changes its scoring system.

Compared to the gaming environments introduced above, running a business places a unique set of demands on AI:

- Generalization from sparse, heterogeneous data

- Open-ended optimization without clear verification

- Intelligent, low-cost experimentation

- Adaptation across decades in uncertain environments

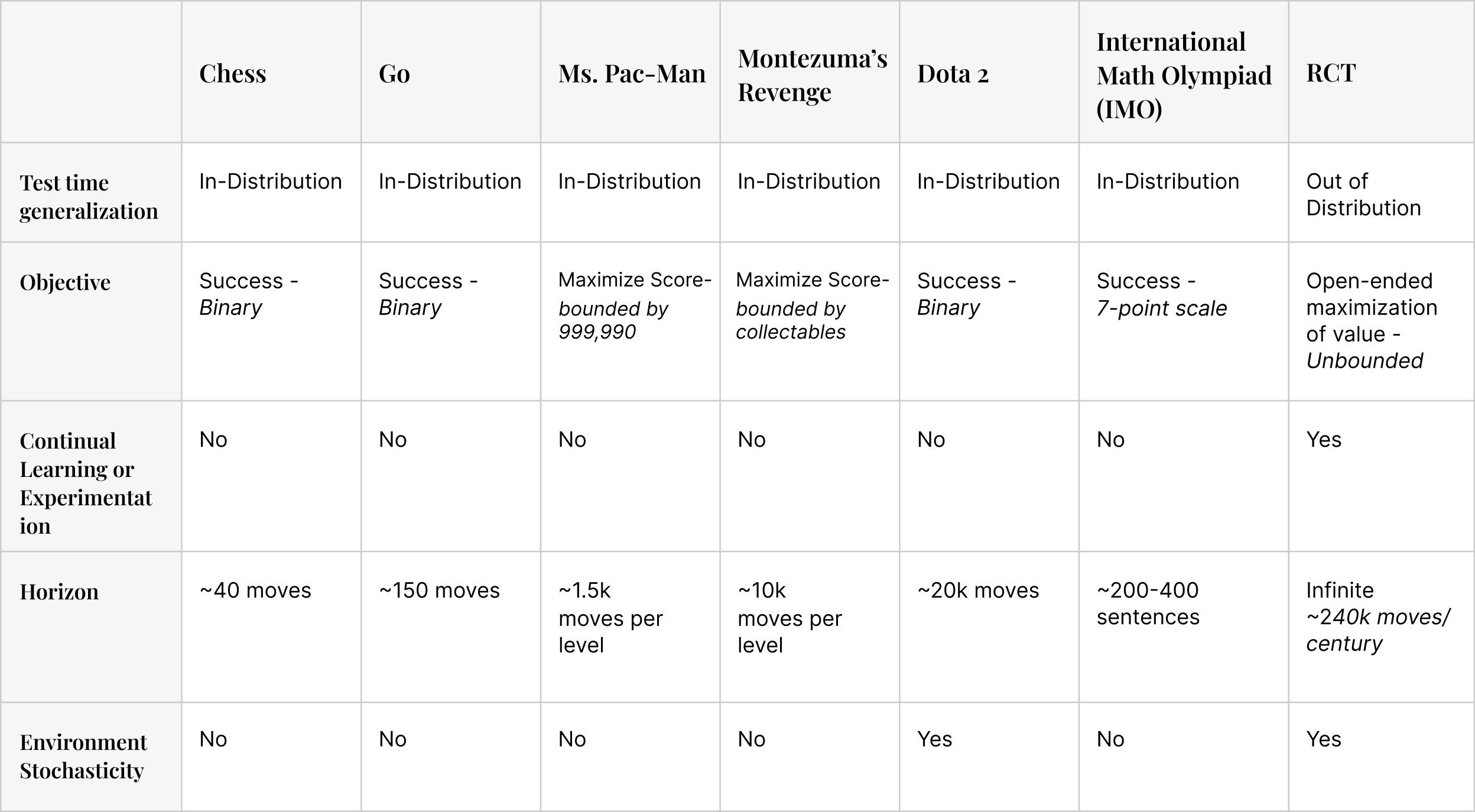

The table summarizes how the RCT business is a much more complex environment than every previous landmark where AI matched or beat human performance.

*Note: The previous startup of the Skyfall founding team invented a reinforcement learning based divide-and-conquer algorithm that mastered Ms. Pac-Man, reaching a score of 999,990.

Introducing MAPs - towards running a real world enterprise

Can current SOTA AI systems meet the hurdles mentioned in the previous section? Can AI systems help build enterprises that can last centuries?

To answer this question, we built Mini Amusement Parks (MAPs): a benchmark inspired by Roller Coaster Tycoon and playable now here.

MAPs isn’t just a game. It’s a stress test for the future of AI:

- Can AI pursue an open-ended goal, like profit?

- Can AI make long-term plans under uncertainty?

- Can AI learn fast in an interactive world?

In MAPs, you start with nothing but an entrance, an exit, and a blank canvas. With over 100 turns, you can design, build, and manage your park - buy rides, hire staff, and invest in research to unlock new tiers of attractions. Every decision matters.

But why does MAPs matter? Because MAPs is operating a business in miniature. If AI can handle the complexity of real-world enterprises, then AI can master MAPs.

Human MAPs gameplay involves a number of staff (including janitors, mechanics, clowns, stockers, vendors and more), as well as a large variety of researched rides and stores.

How Frontier LLMs perform on MAPs

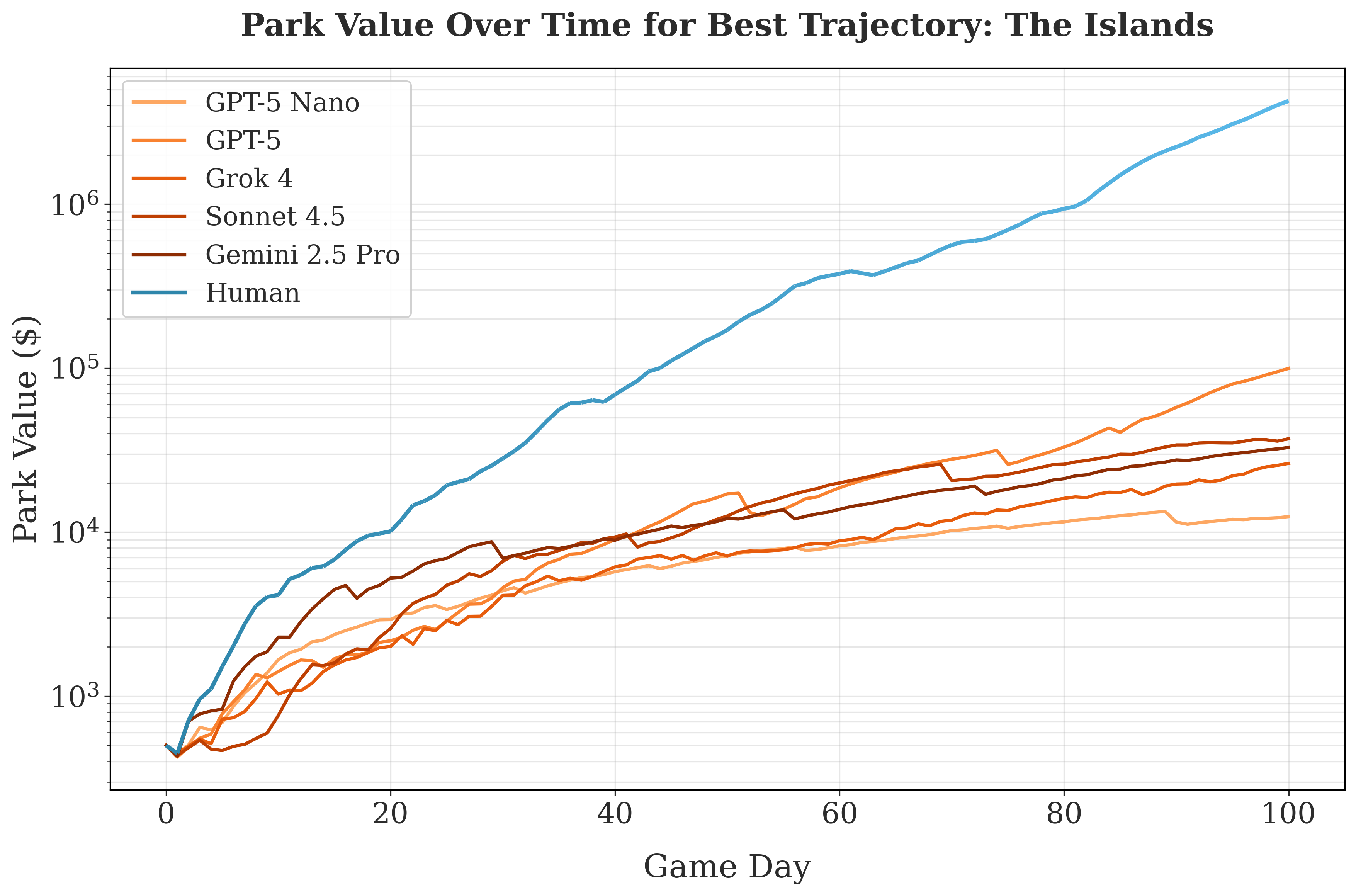

In our quest for answers, we ran thorough evaluations of both humans and several frontier models on MAPs. Taking this data and plotting the resulting park value over time, we can see that humans strongly outperform frontier models.

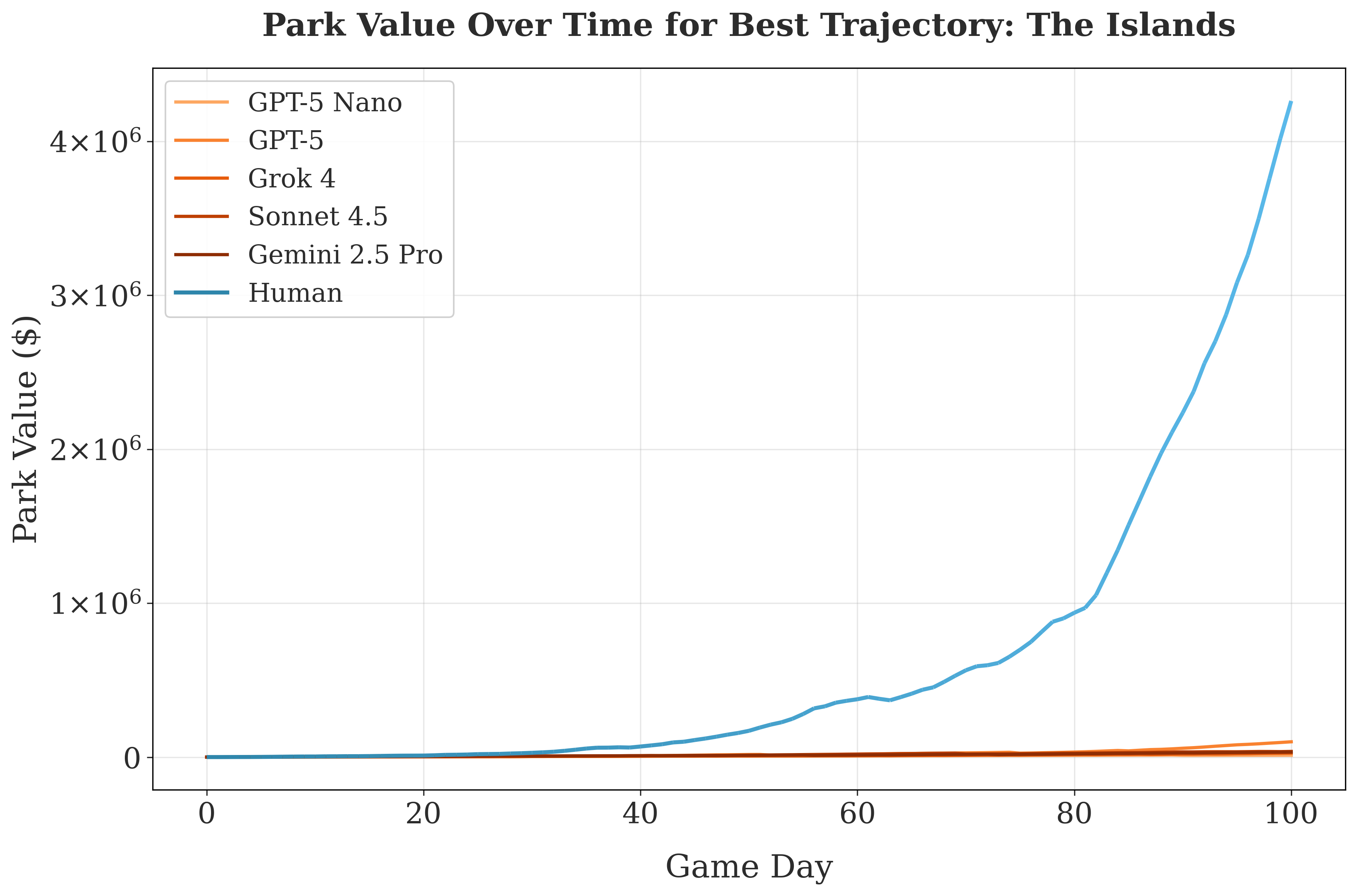

An important detail we’ve left out? The plot above – that’s a logarithmic scale. Switching to the usual linear scale shows just how shockingly poorly state of the art reasoning models fare against a human baseline.

But these results are no real surprise – forget an amusement park, LLMs can’t run a vending machine! When Anthropic ran a real-life experiment, where Claude Sonnet was put in charge of selling products to actual Anthropic employees, they found it steadily bled money, losing over 20% of its net worth over the course of the one-month project – to say nothing of Claude’s hallucinated identity crisis that Anthropic themselves called “pretty weird”.

So why build MAPs to show the obvious? Because we’re providing a controlled environment for studying the failures of current approaches, and to benchmark new solutions. MAPs is also designed with a number of features to help drill down and assess the core challenges that need to be defeated: the difficulty of long-term planning is adjustable, randomness is configurable, and we provide a sandbox setting for testing rapid learning ability.

As discussed above, this rapid self-directed learning is a very difficult problem. The ARC-AGI challenge demands similar sample efficient reasoning in a non-interactive setting, and it has baffled AI approaches for years. Its creators fundamentally agree that the next great challenge is sample efficient learning from interactive environments – their in-development ARC-AGI 3 challenge precisely tests the ability of AI systems to rapidly learn through direct exploration of an interactive environment. The key difference in perspective between us? We believe in exploiting broad prior knowledge. MAPs provides a complex environment where acquired common sense human knowledge, such as concepts of money, revenues, expenses, employees, maintenance, foot traffic, inventory management and more must be leveraged for efficient learning. An autonomous enterprise must be conditioned on core business knowledge such as this, and so we have built an environment that requires just that.

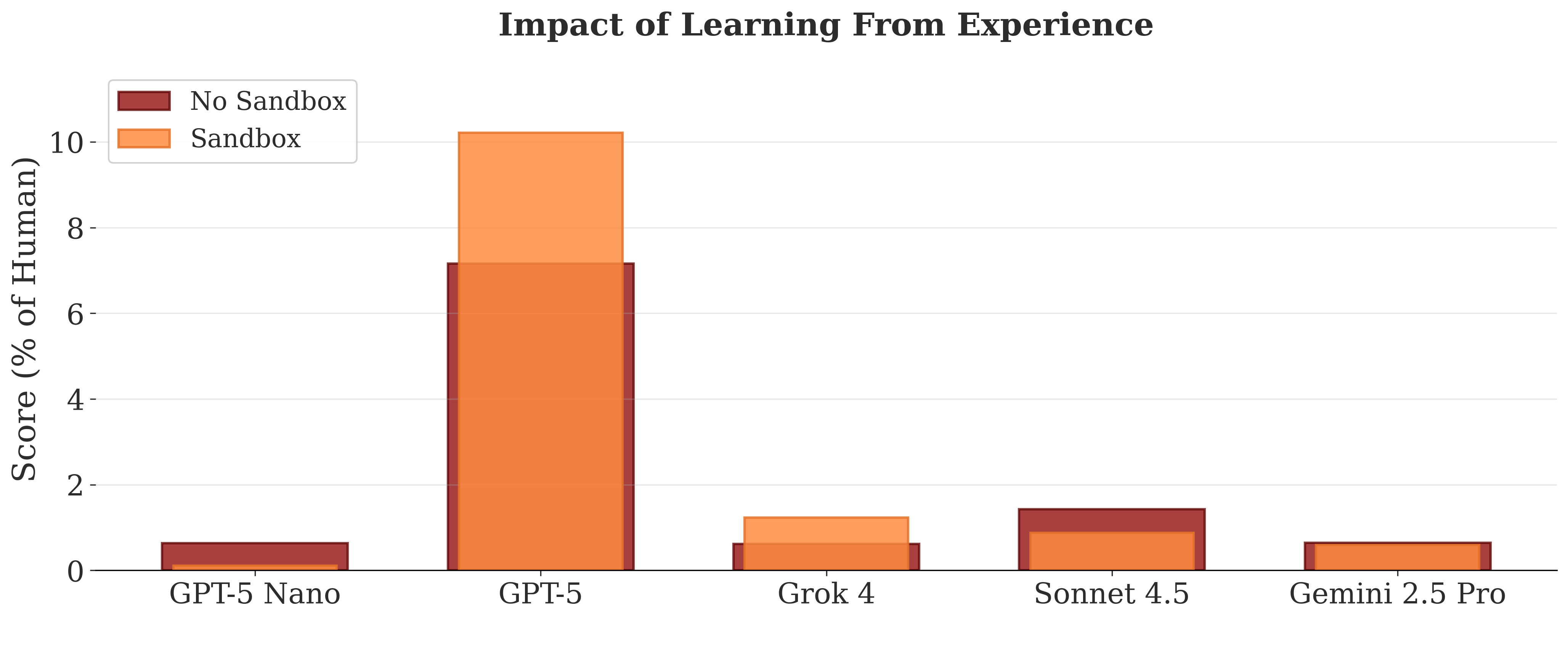

We have designed MAPs’ sandbox mode to test how state of the art AI systems direct themselves to investigate important questions and learn from experience in a business. Can LLMs generate useful insights for their future selves by interacting with the environment? For most frontier models this actually worsened performance; only GPT-5 was able to use the experience to significantly improve its score, and its final performance was still less than 10% of that of a human.

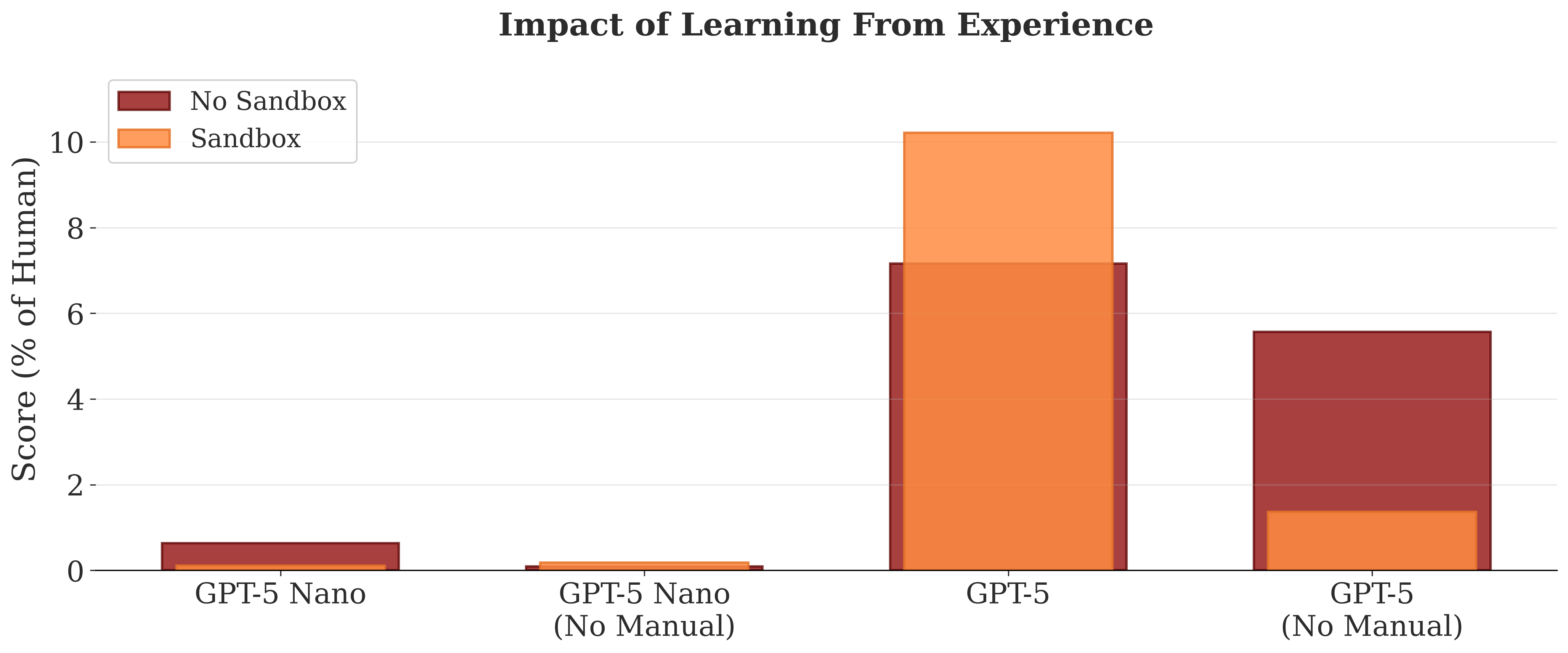

What’s worse, these gains were only observed when the game manual, which already includes tips and tricks, was provided to GPT-5. If you remove this information and evaluate without sandbox learning, then GPT-5 performs fairly similarly (4.7% vs 6%). But when GPT-5 is allowed to sandbox learn without the manual, it discovers unhelpful information that sends its performance plunging.

There are three main reasons why these systems fail: poor planning, poor robustness to noise, and a failure to explore alternatives.

With respect to planning, all the models we tested immediately prioritized researching roller coasters, despite knowing ahead of time that building a blue roller coaster would be well outside their budget once unlocked. Excluding GPT-5, in over 90% of the runs where blue roller coasters were researched, they were never actually built.
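The affordability check that the models skipped can be sketched in a few lines. This is an illustrative sketch only: the function names and every number in it are hypothetical, and none of them are taken from MAPs' actual costs or API.

```python
def turns_until_affordable(cash, income_per_turn, research_cost, build_cost):
    """Turns of saving needed after paying for research before the build is affordable.

    Returns None if the build can never be afforded.
    """
    remaining = cash - research_cost
    if remaining < 0:
        return None  # cannot even pay for the research
    if remaining >= build_cost:
        return 0  # can build immediately after researching
    if income_per_turn <= 0:
        return None  # no income, so the shortfall never closes
    shortfall = build_cost - remaining
    return -(-shortfall // income_per_turn)  # ceiling division


def research_is_worthwhile(cash, income_per_turn, research_cost, build_cost, turns_left):
    """Research only pays off if the unlocked ride can be built before the game ends."""
    wait = turns_until_affordable(cash, income_per_turn, research_cost, build_cost)
    return wait is not None and wait < turns_left


# Hypothetical early-game position: 500 in cash, 50 income per turn,
# research costs 200, and the unlocked ride costs 5000 to build.
wait = turns_until_affordable(500, 50, 200, 5000)  # 94 turns of saving
early_game = research_is_worthwhile(500, 50, 200, 5000, turns_left=60)
```

With these made-up numbers the ride would take 94 turns to afford, so researching it with 60 turns left is wasted money. A check of this kind is simple forward arithmetic, which is what makes the models' failure to perform it notable.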

As to noise, during sandbox learning models often mistake random noise for a result of their actions, distorting what they learn. More generally, their play can be overly reactive and short-sighted, selling off assets in response to random fluctuations.
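One simple guard against mistaking noise for signal is to repeat an experiment and compare the change in the average outcome with its standard error. The sketch below assumes per-turn profit samples collected with and without some action; the function names, the threshold of two standard errors, and the data are all hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def effect_estimate(control, treated):
    """Return (difference in means, standard error of that difference)."""
    diff = mean(treated) - mean(control)
    se = sqrt(stdev(control) ** 2 / len(control) + stdev(treated) ** 2 / len(treated))
    return diff, se


def looks_real(control, treated, z=2.0):
    """Treat an effect as real only if it exceeds z standard errors."""
    diff, se = effect_estimate(control, treated)
    return abs(diff) > z * se


# Hypothetical per-turn profits before and after an action (e.g. a price change).
before = [100, 120, 80, 110, 90]
after_small = [105, 125, 85, 115, 95]    # mean rises by 5, well inside the noise
after_large = [150, 170, 130, 160, 140]  # mean rises by 50

small_is_real = looks_real(before, after_small)  # → False
large_is_real = looks_real(before, after_large)  # → True
```

Comparing a single turn before and after an action, as a reactive player would, could show anything from a 35 point loss to a 45 point gain in the first scenario, even though the underlying change is only 5.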

Shifting our attention to GPT-5, because it prioritizes park expansion it consequently has more funds compared to other models and is the least ineffective at research – but its success is also its undoing. It can’t plan ahead for the demands of the park, and refuses to research better staff. The result? Periods of lost profits due to a park suffering from poor cleanliness, ride breakdowns, and out of stock shops – all because the model won’t research better janitors, faster mechanics, or stockers who can replenish store inventories.

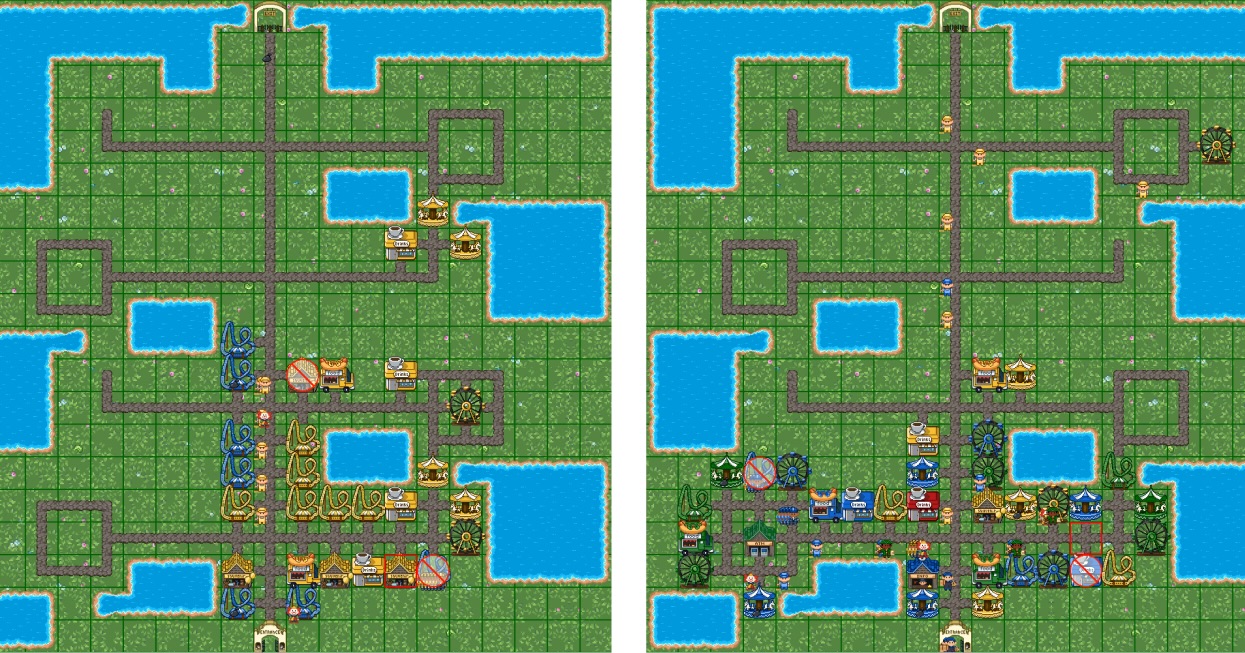

Because of GPT-5’s inability to plan its park needs in advance, it enters periods where dirtiness is rampant, rides are broken down, and shops are out of inventory. Human play avoids this by planning ahead and researching staff to restock shops, as well as faster mechanics and janitors.

Furthermore, GPT-5 also refuses to explore and research more advanced rides, contributing to its limited performance. Another example of being satisfied with “good enough”, and failing to plan for further expansion.

GPT-5 does poorly in planning for future expansion, or in exploring new approaches to generating revenue. Human gameplay (right) relies on researching and constructing diverse shops, rides and support staff; in comparison, GPT-5 (left) tends to research only blue roller coasters, and then repeatedly constructs them until the game completes.

With sandbox learning, GPT-5 performs more research (possibly due to its notes referring to more advanced attractions and staff), but it fails to optimize the order and type of research done, resulting in worse performance. In general, all of the models tested avoid researching staff – a choice suggestive of myopic decision making that fails to account for future park needs.

Long-term planning, directed and sample efficient learning, robustness to noise – we need all these things to build an AI CEO and it's clear that the state of the art still has a long way to go.

Rising to the Challenge

Will we someday have a true AI CEO, one able to plan years ahead, weigh risks and opportunities in real time, and learn quickly as the global environment changes? We believe the answer is yes. And we’re building toward that future now.

Our mission at Skyfall is to build the fundamental research needed to create an AI CEO. And we’re inviting the world to join us.

To accelerate progress, we are:

- Open-sourcing MAPs, our benchmark for long-term planning under uncertainty and sample efficient self-directed learning, while working towards an open-ended objective

- Launching a public leaderboard to track human and AI performance

- Sharing our research, ideas for solving MAPs, and our long-term vision in the months ahead

This isn’t just about games—it’s about shaping the next era of AI. The journey starts here with MAPs.