SEO “experts” give cosine similarity too much credit for semantic matching.

Cosine similarity is purely a geometric measure based on vector DIRECTION, not MAGNITUDE! Considering only angles between vectors without their lengths is SO LIMITED!

Because it only looks at ORIENTATION, cosine similarity on word-count vectors cares about the PRESENCE and relative FREQUENCY of words, not the length of the document…
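To make the DIRECTION vs MAGNITUDE point concrete, here is a minimal pure-Python sketch of the standard formula, cos(θ) = (A · B) / (‖A‖ × ‖B‖). The three vectors are hypothetical word counts over a made-up 3-word vocabulary, chosen only for illustration: a document with every count multiplied by ten points in the same direction, so it scores the same as the original.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Dot product divided by the product of the vector lengths (L2 norms)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical term-count vectors over a 3-word vocabulary.
short_doc = [1.0, 2.0, 0.0]
long_doc = [10.0, 20.0, 0.0]   # same proportions, 10x the magnitude
other_doc = [2.0, 1.0, 0.0]    # same words, different proportions

print(cosine_similarity(short_doc, long_doc))   # close to 1.0: length is ignored
print(cosine_similarity(short_doc, other_doc))  # close to 0.8: direction differs
```

Only the angle matters: scaling a vector up or down never changes the score.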

And there’s so much more!

Relying on cosine similarity for semantic matching assumes that the vector space perfectly captures semantic relationships.

→ Do people understand that embeddings are approximations trained on finite data?

Embeddings won’t always encode the nuances…

Perhaps the easiest concept to understand is that cosine similarity doesn’t account for WORD ORDER (or syntax), which are both critical for meaning!

I’ll illustrate with a few sentences.

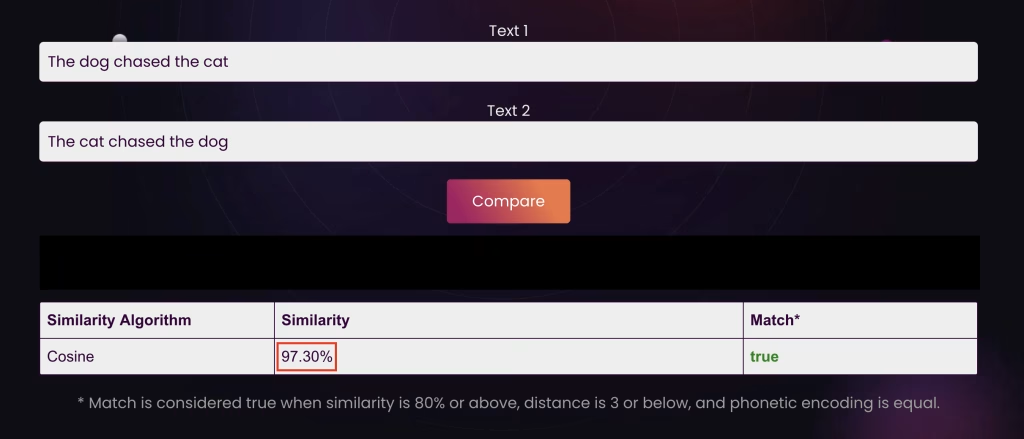

🟢 𝗧𝗵𝗲 𝗱𝗼𝗴 𝗰𝗵𝗮𝘀𝗲𝗱 𝘁𝗵𝗲 𝗰𝗮𝘁

⭕ 𝗧𝗵𝗲 𝗰𝗮𝘁 𝗰𝗵𝗮𝘀𝗲𝗱 𝘁𝗵𝗲 𝗱𝗼𝗴

Despite meaning OPPOSITE things, in a bag-of-words model (where text is represented as an unordered collection of word counts), these sentences have identical vectors and a cosine similarity of 1.
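Here is a minimal sketch of that bag-of-words comparison, assuming the simplest possible pipeline: lowercase, split on whitespace, count words (no stemming, no stop-word removal). The helper names `bag_of_words` and `cosine` are hypothetical, not taken from any library.

```python
import math
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Lowercase, split on whitespace, count terms. Word order is discarded."""
    return Counter(sentence.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


s1 = "The dog chased the cat"
s2 = "The cat chased the dog"

print(bag_of_words(s1) == bag_of_words(s2))         # True: identical bags
print(cosine(bag_of_words(s1), bag_of_words(s2)))  # 1.0, up to float rounding
```

Both sentences reduce to the same counts (`the` twice, `dog`, `chased`, `cat` once each), so the formula has nothing left to tell them apart.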

Want another simple example?

⭕ 𝗧𝗵𝗲 𝘀𝘁𝘂𝗱𝗲𝗻𝘁 𝗴𝗿𝗮𝗱𝗲𝗱 𝘁𝗵𝗲 𝘁𝗲𝗮𝗰𝗵𝗲𝗿

🟢 𝗧𝗵𝗲 𝘁𝗲𝗮𝗰𝗵𝗲𝗿 𝗴𝗿𝗮𝗱𝗲𝗱 𝘁𝗵𝗲 𝘀𝘁𝘂𝗱𝗲𝗻𝘁

Because these sentences contain the exact same words, their vector representations are very similar (in a bag-of-words model, identical): they get a high cosine similarity score… but the meanings are VERY different!

Imagine the risk when we’re dealing with complexity! 🥲

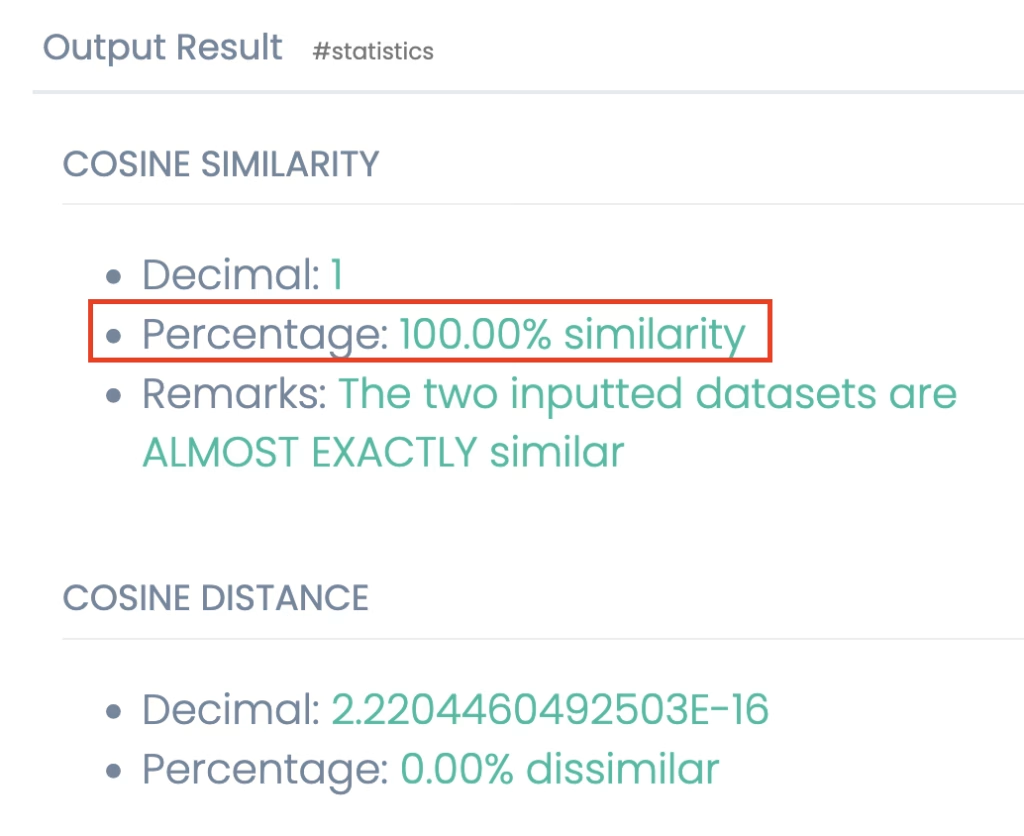

Cosine similarity for the same sentences with a bag-of-words model: we get 100% similarity!

The question is WHY?

Because a bag-of-words model represents text as a numerical vector based on the frequency of words, disregarding order!
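A minimal sketch of what that numerical vector looks like for the teacher/student pair, assuming a toy vocabulary built from the two sentences themselves and sorted alphabetically. The helper name `bow_vector` is hypothetical, and real tooling usually adds tokenization and filtering steps this sketch skips.

```python
from collections import Counter


def bow_vector(sentence: str, vocabulary: list[str]) -> list[int]:
    """One count per vocabulary word; positions follow the vocabulary, not the sentence."""
    counts = Counter(sentence.lower().split())
    return [counts[word] for word in vocabulary]


sentences = ["The student graded the teacher", "The teacher graded the student"]
vocabulary = sorted({w for s in sentences for w in s.lower().split()})
print(vocabulary)  # ['graded', 'student', 'teacher', 'the']

for s in sentences:
    print(bow_vector(s, vocabulary), "<-", s)
# Both sentences map to [1, 1, 1, 2]: who graded whom is recorded nowhere.
```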

Yes, cosine similarity is a simple concept, but this simplicity results in a semantic sacrifice! It ignores MAGNITUDE by design, and when the vectors it compares ignore CONTEXT, it misses MEANING!

We all know that meaning isn’t just about the words themselves, but their relationships and ORDER. And cosine similarity, at least in its basic form, will miss these essential distinctions.
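What does "basic form" mean in practice? A sketch under stated assumptions: the order-blindness comes from the vectors fed to cosine similarity, not from the formula itself. Swapping in word bigrams (pairs of adjacent words, a technique not discussed above) as features means the dog/cat pair is no longer identical. But look at the score: 3 of the 4 bigrams are still shared, so similarity stays high even though the meaning is reversed. The helper names are hypothetical.

```python
import math
from collections import Counter


def ngram_counts(sentence: str, n: int) -> Counter:
    """Count contiguous n-word sequences; n=1 is plain bag-of-words."""
    words = sentence.lower().split()
    return Counter(tuple(words[i:i + n]) for i in range(len(words) - n + 1))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b)


s1 = "The dog chased the cat"
s2 = "The cat chased the dog"

print(cosine(ngram_counts(s1, 1), ngram_counts(s2, 1)))  # close to 1.0: unigrams
print(cosine(ngram_counts(s1, 2), ngram_counts(s2, 2)))  # 0.75: bigrams
```

Adding some order information lowers the score, yet a reversed meaning still looks 75% similar.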

In natural language processing, CONTEXT and WORD ORDER are very important, but basic cosine similarity does not consider those factors!

And even with embeddings that incorporate some context (like those produced by transformer models), a single cosine score won’t fully capture the structural differences that we intuitively grasp.

Senior SEOs give cosine similarity too much credit for semantic matching because they overestimate its ability to capture the richness of language.

© March 9, 2025 – Elie Berreby

Downloadable PDF: Cosine-Similarity-Angle-misses-Meaning-Elie-Berreby.pdf