It's confusing to see Stack Exchange spend so much of their teams' time delivering this feature when it's clear to me that there is no desire for such a mechanism amongst the community; this is especially apparent given that the beta announcement from this summer is (as of this writing) the 23rd lowest-scoring non-deleted question on Meta Stack Exchange, out of more than 100k total questions here.

From my perspective, this is not solving an articulable problem or providing any sort of value-add for the average user. Speaking for me personally:

- If I'm looking to AI for assistance in answering a question I have, there is much more value for me in using my IDE's built-in tools to interface with something like GitHub Copilot, if for no other reason than that its responses can be contextualized using my project's existing codebase.

- The feature's placement in the homepage UI is extremely intrusive and pushes the actual content of the homepage further down, which feels very counterintuitive. It only adds to the unnecessary clutter that was introduced last year.

Like many others, I am not interested in using this feature or having it further clutter my homepage. How do I opt out or otherwise turn this feature off?


Why did I need to be pinged about this? I don't recall ever being pinged about new Stack Exchange features before. Was this announcement post not enough? Were you hoping to notify people about the AI Assist without them needing to come here and see just how overwhelmingly the community is against it? The fact that the notification takes me directly to the AI Assist, rather than this announcement, makes me feel like that's the case.

It really doesn't help with the feeling, which has been building over the past few years, that you're only pretending to listen to our feedback on new features and then forcing them onto us anyway, no matter how much we say we don't want or need them.


Attribution, where art thou?

A vast majority of Stack Overflow users want their posts to be read and to be useful to other users. If Stack Overflow itself has an assistant feature at the top that supposedly searches through posts but never shows where it got its answer, or just surfaces irrelevant posts that put off the searcher, Stack Overflow will lose both askers and answerers IMO.

If AI Assist on Stack Overflow is not grounded in Stack Overflow posts or does not surface Stack Overflow posts or has to rely on the underlying LLM (that used Stack Overflow posts in pre-training) to generate a response that rephrases Stack Overflow answers without attribution, what is the point?

I'll give an example.

Case 1: No reference

If it is truly searching through Stack Overflow posts, then it should include links to the actual Stack Overflow posts it used, but it doesn't. The question below: "how to make a function a member of an instance of a class in Python" was already asked and answered on Stack Overflow 14 years ago. In fact, if you look at AI Assist's answer, the code is identical to the accepted answer there. OpenAI's model has definitely seen this Q/A. So shouldn't AI Assist show this answer as a supporting document? Apparently not.
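For context, the technique that question asks about is commonly done in Python with the standard-library `types.MethodType`, which binds a plain function to a single instance. This is a minimal sketch, not taken from AI Assist's output or necessarily identical to the linked answer; `Greeter` and `greet` are hypothetical names.

```python
import types

class Greeter:
    """Hypothetical example class."""
    def __init__(self, name):
        self.name = name

def greet(self):
    # A plain function; `self` is supplied once it is bound to an instance
    return f"Hello, {self.name}"

g = Greeter("Ada")
# Bind `greet` to this one instance only (stored in the instance's __dict__)
g.greet = types.MethodType(greet, g)
print(g.greet())  # Hello, Ada
```

Because the bound method is stored on the instance rather than the class, other `Greeter` instances do not get a `greet` attribute.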

Case 2: Irrelevant reference

If an explicit reference is requested (note that the question is otherwise identical to the one above), a supporting post is given, but it doesn't answer the question. Also, AI Assist's generated answer doesn't seem to draw on the retrieved post either, because the generated solution differs from the one in the linked post.


Slowly, bit by bit, AI services are taking over StackOverflow. It's okay as a product: the usual LLM without much attribution, plus a bit of handpicked content from the past. But it's also pushing the human interactions on this site further and further to the side. Unfortunately, this will decrease, not increase, new questions and, even more so, new answers.

I want my contributions to be seen and read by other humans. I don't want my contributions to be used by a machine and maybe a little bit of attribution given somewhere. I especially don't want the AI service to be placed right on the site where I might contribute content, right above that content. At least I don't want to contribute for free to such an arrangement.

StackOverflow missed the chance in the last couple of years to be a strong voice for user-generated content, from users for users. Instead it looks increasingly like any LLM service, with a preference for a single data source and a database of questions from the past. I wish more energy had been invested in making asking and answering easier, e.g. duplicate detection or question improvement. The AI-generated content service has the top spot now.

To also give some specific feedback: I tried to search for the stopwatch function in Julia, i.e. this question with the same title. My input was: "How to implement a stopwatch function in Julia?". It didn't find anything and produced an AI generated answer without any attribution.

And it's still quite slow I would say. Something common like "How to format a string in Python?" takes 12s to start printing and 25s to finish (and links to a zero scored, rather unhelpful answer). Other LLM services need 2s, search engines <1s.


Why does it always end with "If this didn't help, you can ask your question directly to the community."?

The input placeholder shows "Ask me anything", so I asked "How can I bake a cake without eggs?"

It gave me some weird results but still ended with

Next Steps

- Try a simple wacky cake recipe (search "wacky cake eggless recipe") and compare texture.

- If you want, tell me what kind of cake (flavor/texture) you want and I can suggest a specific eggless recipe and measurements.

If this didn't help, you can ask your question directly to the community.

Even though baking cakes is not on-topic, it still blindly links the user to Stack Overflow's ask page.


A question for Stack Overflow (the company):

Do you believe that using GenAI is at all meaningful?

If your answer to that question is 'yes', then here's some free AI consulting for you, provided by AI Assist:

Q: If re-designing the Stack Overflow home page, would it be a good idea to put an AI prompt on top of the main page and move the "Ask Question" button out to the far right?

AI-generated answer

Short summary

No—not without careful testing. An AI prompt and moving Ask Question to the far right each have trade-offs that could harm clarity, discoverability, and community workflows.

Key trade-offs

- Discoverability vs. distraction

Putting an AI prompt at the top makes AI features obvious but can distract or steer newcomers away from asking canonical, well-formed questions.

- Primary action hierarchy

The Ask Question button is a core action. Moving it to the far right reduces visual prominence and likely lowers question quality/volume.

- Trust & transparency

Prominent AI entry points must clearly state scope, limitations, and provenance to avoid users trusting incorrect answers.

- Community norms

Emphasizing AI could change posting behavior and moderation load (more low-quality or AI-sourced posts).

Source: https://stackoverflow.com/ai-assist

Conclusions:

- Even your new feature itself thinks that it was badly designed.

- Even GenAI knows the site and products better than you do.

EDIT:

Illustration of the user interface given to the Ask Question button:

stackoverflow.com/specific_tag:


On my first visit:

On to more serious matters (coming from a non-SO, mostly non-technical user):

- "AI Assist isn't meant to compete with those tools [like ChatGPT], it's meant to improve search & discovery experience on SO via natural language, chat interface"

(Taken from a comment.) Then why does it ask me what I'd like to learn today, giving the impression it's capable of teaching me things? Can this be changed to something better suited, like "How can I help you find what you're looking for?"

- "By showcasing a trusted human intelligence layer in the age of AI, we believe we can serve technologists with our mission to power learning and affirm the value of human community and collaboration."

Yes, injecting what I believe is emphatically non-human 'intelligence' into things is a good way to affirm the value of human community and collaboration. Like tax forms in Christmas gift wrapping. Promotional, empty phrases like these make my eyes roll. You're introducing information to your communities, so please keep it honest and clear.

What is this "trusted human intelligence layer" exactly? What's "honest" about it?

Moving on from my answer to the former announcement, it seems it can now access the entire network. Thanks, that's a nice upgrade for all of us "technologists"!

However, putting this to the test, the answer to my first practical question is quite useless:

Q: How can I protect artwork on paper?

AI: Binder clips + a small protective sheet behind the clip are low-cost, reversible, and minimally invasive—good for rentals.

And some additional advice on how to hang paper stuff, which is not what I asked for. It found an answer on DIY, while I expected and hoped to trigger it to find useful information on Arts & Crafts.

Is this indicative of its capabilities, or is it just focused more on the "technologists" among us?

A new aspect of this AI assistant has to do with conversations, and I rarely use chat: what exactly are those conversations? Do they include comment sections beneath questions and answers? Is it this AI's strength to collect disparate comments from different sections on the site based on a query?


If AI Assist is linked to from non-SO sites, then it should be relevant for those sites and understand what votes are

I am a heavy user of CrossValidated, the statistics Q&A site under the SE banner, and that site now has a prominent "AI Assist" link in the left hand sidebar, underneath the (utterly site-specific) "Home", "Questions" and "Unanswered" links, and above the (again utterly site-specific) "Tags" and "Saves" links.

So when I saw that link in this specific place, it seemed obvious to me that by clicking on it, I would be sent to something like https://stats.stackexchange.com/ai-assist or something like that... at least somewhere that would be specific to statistics questions. Y'know, basic UI and such?

Well, no. The link goes to the general https://stackoverflow.com/ai-assist/ URL.

So, just for kicks, I asked it for "Any thoughts on the Mean Absolute Percentage Error?" Because we have a kinda well-voted question on this topic at CV: What are the shortcomings of the Mean Absolute Percentage Error (MAPE)?

Unfortunately, AI Assist apparently does not read CV, or badly so, because in its answer it gave one link to a question with zero upvotes on Math.SE (Mean Absolute Percentage Error), and to a different question on CV with, again, zero upvotes (Absolute Average deviation in percentage calculation) as of right now.

Might it be possible that AI Assist does not quite understand the concept of, y'know, votes in the SE system?


I won't add to comments on the usefulness or quality of AI Assist.

But I can say it's out of character with the network, and I believe SE is "quiet quitting" the community to become another ChatGPT frontend.

For a Q&A system, let's be honest about just how huge a change this is to the format. The impact is amplified by the fact that contributors are so strongly against it. Not only does it change how information is exchanged, it will also make it impossible to have constructive discussions with the community about its refinement over time. Think about what message that sends to the contributors who made SE what it is today. It's a hostile and alienating change.

This has always been a network of humans asking questions, and humans answering them. AI Assist now places human "answerers" in competition with another type of answerer: AI.

And it's not a fair competition when AI is pinned to the top of the page and offers plausible-sounding quantity over quality. If SE expects this to just happily co-exist with human answers... how? Where are the hero callouts explaining how the world's top experts are here at your fingertips in this community? Well, SE doesn't care about the community, unfortunately. This will drift one way or another, and given the trajectory, it will turn SE into humans asking questions and getting AI answers at maximum throughput. Bravo, a ChatGPT frontend.

So why is it out of character? (off the top of my head...)

- Most obvious: Official policy has banned generative AI. And that policy has been useful and well-received. AI Assist not only reverses that course completely, but promotes it to the top of the page. Make it make sense, considering the rigor that has gone into creating policies tuned for this Q&A format.

- Even if attribution works perfectly, it demotes SE from an often primary source of knowledge to a secondary one. What used to be the definitive source is now reduced to aggregate slop.

- Reputation is an important feature of the site; not a zero sum but at least it's comparable and thresholds mean something as we compare human against human. Adding a giga-answerer that is effectively a black hole of reputation feels more like a cancer than a feature.

- SE has spent years refining workflows and rules carefully tuned to interactions between askers and answerers. Introducing this basically clobbers much of that consideration. And will the AI participate in these meta discussions? Or is SE expecting the community to carefully advocate for AI Assist in shaping policy? I'm not saying that change is bad in general, but this is a flag worth noticing.

IMO SE is just "quiet quitting" the community.


A major blunder of 2025 is confusing a situation where an LLM is needed with a situation where search is needed.

This is such a blunder.


Attribution on copied code

What does this mean?


I've seen it said multiple times that the primary goal of this chat is to improve discoverability of existing answers, but if that's the case, why does the homepage now have two "search" boxes next to each other?

I'm not sure I'd want the original search replaced entirely, but surely there's a better place for this that avoids pushing the actual content even further down the homepage.


Asking the AI agent for sources both works and doesn't work:

Ideally, sources should be displayed automatically at the end of the response so that users can see the context of the information (which has value for validating any AI response); this would also give some measure of attribution. It would be really nice if there were a way for the user to say the information was useful, resulting in the answer(s) receiving an upvote. This way, the users providing the value will feel valued.


Why?

How is it better than, say, ChatGPT?

Honestly I doubt it. I am sure this thing is dumb, slow, made by rookies and will quickly fall behind in competition with OpenAI, xAI, etc.

As for the announcement, I don't really care how this version is better than the previous one, nor am I keen to see you re-implementing basic AI chat features (sharing conversations? omg, so cool!) here. What exactly is the selling point of this feature, which wastes precious dev time on this buggy and increasingly "experimental" website?

I visit this site mostly:

- to ask a question

- to entertain myself: answering easy questions, learning, checking hot meta.

I am rarely googling for answers myself and never ever searching for anything using search here.

I am happy with the answers provided by, say, Grok. It uses SO under the hood, but also Reddit, MSDN, any link I ask it to check, etc.

I am looking forward to using AI agents rather than chats.

Why should I use AI Assistant?

Or is this feature not for me, but... for whom?


feature-request status-completed

Lack of guidance on reporting problematic chats shared to them

I created a chat, made a sharing link, and opened that link in a private window (i.e., signed out). While the conversation was fine, if it was problematic in a T&S sense (e.g., CoC violations), I don't see an obvious way to report it.

Random folks may not know of the Contact form, which wasn't linked there. There's Provide Feedback > T&S, but it's not obvious what that does (adds a tag in a database? files a support ticket to T&S? something else?).

Can you add guidance for signed-out users who received a sharing link for what to do if it's offensive/problematic? One path is just add a link to the Contact form.


When listing retrieved posts, please use the icon of the SE site where the retrieved post comes from instead of the generic SE icon:


- What is "log in"? I am logged into SO.

- What is this white rectangle that constantly pops up for a moment with a busy indicator and then disappears?


Community best practices for removing the AI Assist chatbox

The AI Assistant box on the StackOverflow homepage is unwanted by many users. Many users want to remove the AI Assist chatbox. In the comments of this question, @AshZade (a staff member) said "We don't have plans to provide toggles turn off any AI Assist components." Therefore, we should have community best practices for removing the AI Assist chatbox.

Currently, I am using the uBlock Origin extension for Firefox with the following custom filters:

```
stackoverflow.com##.mb12.g8.d-flex
stackoverflow.com##.mb24.bg-white.w100.wmx7
```

This is fragile - a small change in the layout of the homepage would make the filter no longer work. Of course, it's better than letting the AI chatbox remain.

I'm marking this answer as community wiki - please edit in more reliable ways of removing the AI Assist chatbox.

Alternative options:

- Log out (@user400654) - the AI Assist box is only shown to logged-in users. Note that in the mobile view, the StackExchange icon with the log out option is not shown, so switch to desktop view to log out.


I compared the kimi.com results to the Stack Overflow results for the query "How do I concatenate strings in python?"

Response from Stack Overflow's AI Assist - I have to share the entire conversation, so click the link to see it.

Response from Kimi + its "Thinking" section is pasted below.

Observations

- Kimi is much faster - even opening the shared conversation link from AI Assist had a pause to it that is annoying.

- Kimi does a better job at laying out all the different options with some explanation of when it makes sense to use them, whereas the AI Assist result was hard to skim for a quick answer.

- AI Assist returns results that have recommendations from humans, but the direct quotes are addressing a specific question that is not my question, so it's more difficult to read and understand their relevance. For example "This explains your TypeError..." I didn't ask anything about a type error. The usefulness of the quoted answers suffers when they are presented without the context of the question.

- Stack Overflow's next steps are confusing. It's telling me "Replace commas with + or use f-strings for small concatenations." and nothing in my prompt has anything to do with commas.

Conclusions

I don't see any reason to use this flavor of AI for questions like "How do I concatenate strings in Python?" It's slow and the results have a lot of stuff in them irrelevant to my prompt. I would much rather use it as a search agent for Stack Exchange sites. The information in the AI Assist results may be better in some respects, but it is presented so poorly it is hard to absorb.

Thinking complete

The user is asking how to concatenate strings in Python. This is a straightforward programming question. I should provide clear, practical examples of different methods for string concatenation in Python.

Let me think about the main methods:

- Using the + operator

- Using the += operator

- Using the join() method (especially for lists of strings)

String Concatenation in Python

Here are the main methods to concatenate strings in Python, from most common to more specialized:

1. Using the `+` Operator (Most Common)

Works for joining a small number of strings.

```python
first = "Hello"
last = "World"
full = first + " " + last  # "Hello World"

# Can also use with literals
greeting = "Hi" + " " + "there"  # "Hi there"
```

Important: All operands must be strings. This will fail:

```python
# ❌ Error: age is an integer
message = "Age: " + 30  # TypeError
```

Fix it by converting to string:

```python
# ✅ Works
message = "Age: " + str(30)  # "Age: 30"
```

2. `+=` Operator (In-place Concatenation)

Adds to an existing string variable.

```python
text = "Hello"
text += " "
text += "World"  # "Hello World"
```

3. `join()` Method (Best for Lists/Tuples)

Most efficient for concatenating many strings or an iterable.

```python
words = ["Python", "is", "awesome"]
sentence = " ".join(words)  # "Python is awesome"

# With a separator
csv = ",".join(["a", "b", "c"])  # "a,b,c"

# No separator
combined = "".join(["a", "b", "c"])  # "abc"
```

Performance: Much faster than `+` in loops.

4. F-strings (Python 3.6+)

Cleanest for mixing strings and variables. Also does automatic conversion.

```python
name = "Kimi"
age = 30
message = f"Hello, {name}. You are {age} years old."
# "Hello, Kimi. You are 30 years old."
```

Can include expressions:

```python
result = f"2 + 2 = {2 + 2}"  # "2 + 2 = 4"
```

5. `format()` Method

Works in older Python versions.

```python
name = "Kimi"
age = 30
message = "Hello, {}. You are {} years old.".format(name, age)
```

6. `%` Formatting (Legacy)

Old-style formatting, still seen in some codebases.

```python
name = "Kimi"
age = 30
message = "Hello, %s. You are %d years old." % (name, age)
```

Best Practices

| Scenario | Recommended Method |
|---|---|
| Few strings (2-3) | `+` or f-strings |
| Many strings in a loop | `join()` |
| Mixing variables/literals | f-strings |
| Building a large string gradually | `join()` or list + `join()` |
| Python < 3.6 compatibility | `format()` |

Performance Tip: Never concatenate in a loop with `+`. Use a list and `join()`:

```python
# ❌ Bad - creates new string each iteration
result = ""
for s in large_list:
    result += s

# ✅ Good - much faster
result = "".join(large_list)
```
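The pasted response claims `join()` is much faster than `+` in loops. Below is a minimal sketch for checking that on your own machine with the standard-library `timeit` module; the input list and the `concat_plus`/`concat_join` names are hypothetical. Results vary by interpreter: CPython can sometimes extend a string in place for `s += t`, so the gap may be smaller than the response suggests, which is why PEP 8 still recommends `''.join()` rather than relying on that optimization.

```python
import timeit

# Hypothetical input: 10,000 short strings
parts = [str(i) for i in range(10_000)]

def concat_plus(items):
    # Repeated += may copy the growing string on each iteration
    result = ""
    for s in items:
        result += s
    return result

def concat_join(items):
    # In CPython, join sizes the result first and copies each piece once
    return "".join(items)

# Both approaches must produce the same string
assert concat_plus(parts) == concat_join(parts)

for fn in (concat_plus, concat_join):
    t = timeit.timeit(lambda: fn(parts), number=100)
    print(f"{fn.__name__}: {t:.4f}s for 100 runs")
```

No timings are shown here on purpose; they depend on the interpreter version and hardware.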


If developers are so predisposed to asking an LLM their questions nowadays rather than Stack Overflow, why do we need to present, on the platform, our own version of an LLM that performs worse than effectively all the existing LLMs devs use, and pretend it is a better search when it isn't even good at searching? (It just took 45 seconds to return an on-site result that included two old, outdated answers and then a third answer with alternatives from the LLM that was correct.)

What is this even solving? How are people putting up with how slow this is enough to use it?

The whole purpose of anything released for Stack Overflow, the site, should be that it is a better solution for the site than what you can get elsewhere. But all this seems to do is take forever to load and then show off just how outdated the network's content is.


Certain prompts take me to an unusable page:

In the above screenshot, the "up arrow" button is disabled and there's nothing I can do. I can type in the "Ask me anything" box, but I cannot submit my input.

Other prompts yield complete nonsense:


How long are chats stored?

I’m curious about both how long they remain viewable to users and how long they’re kept server-side. The UI only says “recent” chats, but I’m not sure whether “recent” means one day, one year, the last 50 chats, or something else.

IMHO keeping all chats while enabling the user to delete some or all of their chats is the best option.


The name of this "feature" (which users didn't ask for, as was pointed out elsewhere) is wrong.

It should be called LLM assist and not "AI assist".

There is no intelligence in the statistical prediction that LLMs are used for, so it is wrong and misleading to call it "artificial intelligence" or "AI assist".


Useful feature for some, surely. But to echo requests in other comments, some of us would like a way to turn this feature off or remove it from the top of our Q&A site pages.

But I mainly came here to point out this "clever" use of Terms and Conditions:

You retain full ownership of your AI Inputs, as applicable, but you grant to Stack Overflow a worldwide, perpetual, irrevocable, non-exclusive, sublicensable, right to use, copy, modify, distribute, and store the AI Inputs.

It really sounds like you own it and we only get to pretend we do. There should be a way to opt out of granting you full license to use our inputs.

At what point do you decide to tell it like it is, rather than hide behind clever manipulations of terms of service?

"You pay your mortgage and retain the deed to your home and full ownership, but you grant us a worldwide, perpetual, irrevocable, non-exclusive, right to use, cohabitate, modify, lease, and use for storage". Who really owns it?


Improve styling on small viewport (mobile)

Please spend some time making this work on small viewport and mobile devices.

Mobile screenshot 1

Button should be centered

AI Assist title wraps

The following message can't be seen on touch devices

No matching posts were found on the Stack Exchange Network, so this answer was generated entirely by AI

It should also have `cursor: pointer` to indicate there is some information behind it.

Mobile screenshot 2

- Buttons should be on a single line

- Please reduce the amount of useless white space

General mobile points

- After the LLM finishes the answer, the input box automatically gets focus, causing the keyboard to pop up and fill half your screen. Since the answer has just finished, there is no need to focus the input field.


A couple things.

First, can you share some information about how the LLM is being trained? Presumably some sort of RAG is being used to ensure that the output is as up-to-date as possible with questions that are being posted. Likewise, I would like to presume that a degree of tuning has taken place to ensure that the output is tailored to the audience and typical problem domain as opposed to most LLMs (i.e., "training on all of the things").

Second, my typical use case for Stack Overflow is trying to find answers to fairly edge case scenarios and the LLM does not appear to be very well tuned for that given the following scenario of testing for functionally zero in C++, with this question in mind (granted search also fails here as well). Which is to say, who exactly is this for? Given that it's framed as learning something I would have to presume it's biased towards the most commonly asked questions - which is fair - but doesn't do much to improve the discoverability of long tail programming questions.

I get the impression that Stack Overflow is trying to improve its long-standing reputation of not being for beginners (Reddit r/learnprogramming link). This is a commendable goal to be sure, but the way the LLM is being integrated into the homepage is likely serving to alienate long-time users (query how many of the 2497 beta badge holders are still active), as well as more experienced programmers looking for answers to long-tail questions.


About a year ago I asked this - "Is Stack Exchange planning to force users to ask their questions to an LLM before allowing posting on the site?":

Inspired by this question I was skipping through the recent blog post once again overhyping AI as The Answer To Life, The Universe And Everything Else.

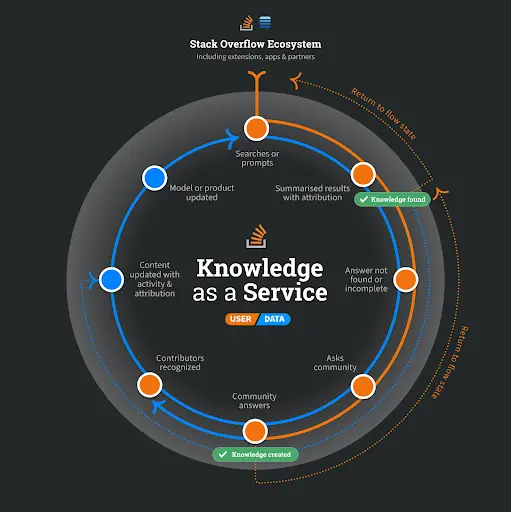

Leaving aside the robo-review scenarios, I noticed one more weird implication in this picture:

Here we can see what Stack Exchange describes as their "Ecosystem". In this scenario the company describes a quite specific flow.

- A question is asked to the LLM.

- If the LLM can answer (or can at least hallucinate an answer while tricking the user into thinking their problem is solved) then the process ends here.

- Only if the LLM isn't able to provide an adequate answer the question is then posted for the community to answer.

Now, this looks quite similar to "Requiring users to check ChatGPT before posting their question", something that someone already tried to propose and was already met with quite a bit of backlash by the Meta community.

Is this the future the company is planning? Or is the image only meant to illustrate how some future additional tool will integrate with the site?

And, predictably, I got employee after employee swearing you would never dare, telling me I was the bad one for assuming bad intent, etc., etc., etc.

Fast forward to today...

As Lundin pointed out, this new "layout" seems to purposely "hide" the Ask Question button, while giving plenty space to the new no-one-asked-for AI-Based feature.

I already know that some white knight will point out that "you can still ask questions" and "this is not the same" but... please, spare me the devil's advocacy here. You know perfectly well what that question meant, and you knew back then too.

So, I ask you again, more bluntly this time.

Do you still claim it is not your purpose to put your poor AI-based solution above human-provided answers? Do you claim that hiding the Ask Question button while giving your new AI toy the central focus was just a coincidence? Do you claim you would not prefer users to first use your AI parrot, and only move on to posting an actual question after that proves ineffective?

And more importantly... do you think we are blind to your actual goals?


I re-ran my test from the "rebuilt for attribution" post (i.e. will the tool find an answer I know exists on Space Exploration StackExchange). Overall, this version seems better. It did find what I expected it to. It then ad-libbed...badly. Judge for yourself.

Overall, much worse for my needs than typing "RPOP space" into the existing StackExchange search bar (or, even better, using Google search instead, even in these dark times).

Good

The attribution to the post is correct, the quote seems correct (I checked and did not immediately detect discrepancies), and it picked out the relevant part of the post (which was more expansive than just the question I asked "AI Assist").

Bad

The generated summary is reminiscent of things I used to write as a schoolchild when asked to summarize something I didn't understand in my own words. The phrasing is awkward (the parenthetical in the first sentence doesn't really follow from the words that precede it) and the second sentence is arguably incorrect, though I can see why it would be put that way.

I think it just looks really, really bad when sat right next to a summary written by the world's foremost expert on the topic (at the time, at least).

I'd expect there to be other topics throughout the network where exactly that would happen as well: you've got a direct quote from The Person, who graciously donated their time, inside a blockquote with slightly gray text, next to a higher-contrast (flat-black?) inaccurate restatement. It's almost like something out of a satirical documentary where the laugh in the scene is getting the expert to wonder why the hell they've allowed themselves to be put in this situation next to a buffoon.

The "key trade offs" section that follows is entirely bollocks. Honestly every time I look back at it I get angry. What weird text to invent. What an odd thing to put focus on.

Not that I honestly expect it from what is ultimately a glorified next-token machine, but the original post has (as it should!) a link back to the primary source. Those links are omitted in the blockquote, and though the primary source is correctly mentioned in "Further Learning", it's much worse attribution than I'd given in the sourced Q+A. Hyperlinks have existed about as long as I have. We should use them.

I understand that this experiment is deployed on StackOverflow right now, and maybe I'm misusing it by specifically asking it to look at other parts of the network (prompted by seeing a comment that said it did have training on that), but its digging up posts from Space Exploration SE and then inviting people to ask follow-up questions on StackOverflow in the last (boilerplate?) sentence seems emblematic of the State of the Thing.

If this didn't help, you can ask your question directly to the community.
