I was flipping through a Linear Algebra textbook today - spurred mostly by my growing efforts to pick up more AI/ML related topics.

Somehow - given the way the textbook presented these techniques, stripped of most of their historical context - I couldn’t resist doing some digging on my own.

Introduces the idea of a “counting board”, which behaves like an “augmented matrix”.

Input System of Equations:

\(\begin{aligned} 2x + 3y - z &= 5 \\ -x + 4y + 2z &= 6 \\ 3x - y + z &= 4 \end{aligned}\)

Augmented Matrix:

\( \left[ \begin{array}{ccc|c} 2 & 3 & -1 & 5 \\ -1 & 4 & 2 & 6 \\ 3 & -1 & 1 & 4 \end{array} \right] \)

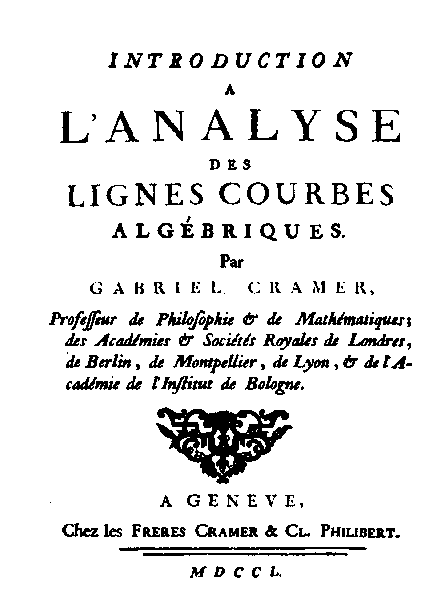
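To make the augmented-matrix idea concrete, here is a minimal Python sketch that solves the example system above by row reduction. The function name `solve_augmented` is my own, and the procedure is the modern textbook one (forward elimination with row swaps, then back substitution), not a reconstruction of how a counting board was actually operated. Exact fractions are used so the answer is not blurred by floating-point rounding.

```python
from fractions import Fraction


def solve_augmented(aug):
    """Solve a square linear system given as an augmented matrix [A | b].

    Illustrative helper (hypothetical name): forward elimination followed
    by back substitution, using exact rational arithmetic.
    """
    n = len(aug)
    m = [[Fraction(v) for v in row] for row in aug]

    for col in range(n):
        # Find a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            raise ValueError("system has no unique solution")
        m[col], m[pivot] = m[pivot], m[col]

        # Subtract multiples of the pivot row to clear the entries below it.
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            m[r] = [a - factor * p for a, p in zip(m[r], m[col])]

    # Back substitution, starting from the last row.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        known = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - known) / m[i][i]
    return x


# The augmented matrix from the example above.
augmented = [
    [2, 3, -1, 5],
    [-1, 4, 2, 6],
    [3, -1, 1, 4],
]
solution = solve_augmented(augmented)
print(solution)  # x = 29/22, y = 53/44, z = 5/4
```

Substituting these values back into the three equations gives 5, 6 and 4, as expected.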

He uses determinants to solve systems with unique solutions based on his system.

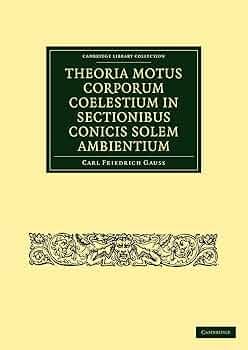
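As a rough illustration of solving a system with determinants, the sketch below applies the rule usually taught today as Cramer’s rule to the example system from earlier. This is an assumption on my part about the general technique: the notation in the page pictured above may differ, and the helper names `det3` and `cramer3` are made up for this post.

```python
from fractions import Fraction


def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


def cramer3(A, b):
    """Solve a 3x3 system A x = b with integer entries using determinants.

    Each unknown is det(A_k) / det(A), where A_k is A with column k
    replaced by the right-hand side b. Works only when det(A) != 0,
    i.e. when the system has a unique solution.
    """
    d = det3(A)
    if d == 0:
        raise ValueError("determinant is zero: no unique solution")
    result = []
    for k in range(3):
        # Build A_k by swapping column k for the right-hand side.
        A_k = [row[:k] + [b[r]] + row[k + 1:] for r, row in enumerate(A)]
        result.append(Fraction(det3(A_k), d))
    return result


A = [[2, 3, -1], [-1, 4, 2], [3, -1, 1]]
b = [5, 6, 4]
print(det3(A))        # 44
print(cramer3(A, b))  # x = 29/22, y = 53/44, z = 5/4
```

The determinant check is what “unique solution” means in practice here: if `det3(A)` were zero, the division would be undefined and the system would have either no solution or infinitely many.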

A detailed account of how Gauss’s method came to be is given in the article “How ordinary elimination became Gaussian elimination”. Interestingly, there is a connection to Newton’s notebook, and a lot of backstory behind how the method got published.

In the following page from Sylvester’s “Additions to the Articles on a New Class of Theorems” (link), we can find the first reference to the word “Matrix”. The word is Latin for “womb” and derives from “mater”, meaning mother. He visualized a matrix as a mother’s womb that gives birth to many determinants, each computed from a smaller square array obtained by removing rows and columns from the original.

His work directly influenced the development of the Ax = b notation we use today for representing systems of equations.
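For instance, the example system from earlier can be written in this form by collecting the coefficients into A, the unknowns into x, and the right-hand sides into b (the layout below is the modern textbook convention, not a quotation from the original work):

\( \underbrace{\begin{bmatrix} 2 & 3 & -1 \\ -1 & 4 & 2 \\ 3 & -1 & 1 \end{bmatrix}}_{A} \underbrace{\begin{bmatrix} x \\ y \\ z \end{bmatrix}}_{\mathbf{x}} = \underbrace{\begin{bmatrix} 5 \\ 6 \\ 4 \end{bmatrix}}_{\mathbf{b}} \)

Multiplying each row of A by the column of unknowns reproduces one equation of the system, so the first row gives 2x + 3y - z = 5.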

So there we have it: roughly 2,000 years of accumulated human thought culminating in linear algebra - powering modern GPUs, AI, and much of today’s computational world.

It’s hard not to pause at the scale of the intellectual structure we inherit, and how casually we now build on top of it.