Fifteen years ago, when I first started promoting Story Points at the company I worked for, they felt like a breath of fresh air. They were relatively simple, the estimation process was fun, and I even found myself explaining to the company president why we spent a whole day “playing cards”—it was our first-ever Planning Poker session. In other words, I was a Story Point neophyte.

I have since abandoned Story Points. Not because I stopped liking them for some arbitrary reason, but because I now have the data to prove they do not work as a planning tool.

I developed a “Story Points Validator” module in my OrgAI platform. Using it, I analyzed years of historical data from 43 teams across several departments. This gave me 1204 atomic, monthly observations to compare estimated Story Points against the actual time spent on tasks.

The conclusions were brutal and clear.

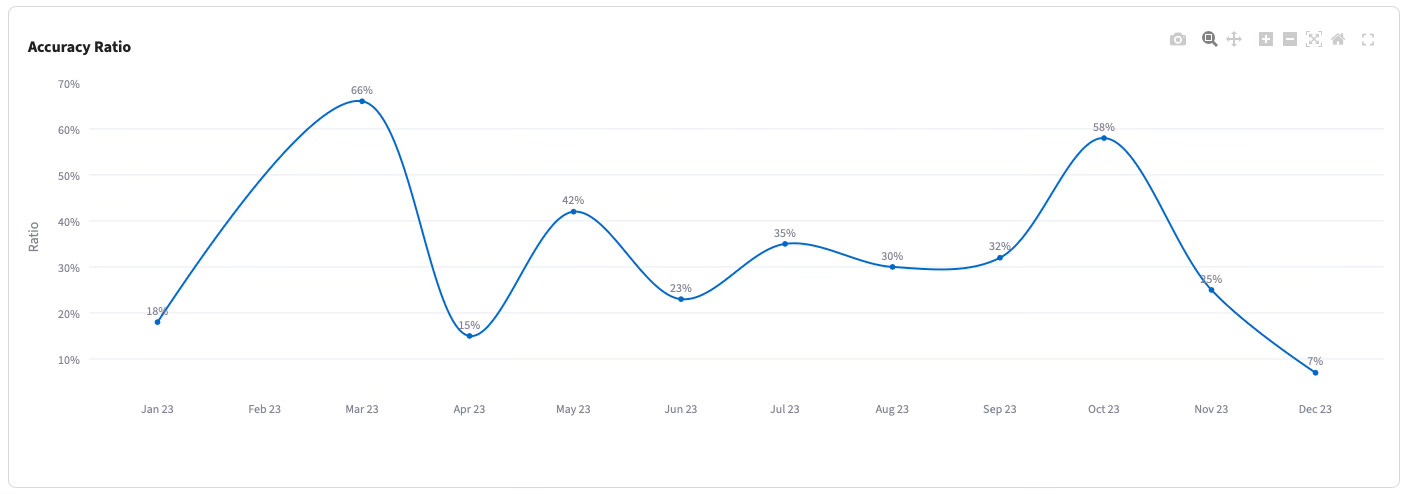

Low Accuracy: At their absolute best, teams correctly estimated the “size” of a task for about 70% of their work. However, this was a rare peak. Accuracy usually stayed in the 20-40% range.

High Instability: The accuracy was never stable. It fluctuated wildly month-over-month, with no visible trend, sometimes dropping or rising dramatically. I observed 100% accuracy only 7 times out of 1204 observations.

Underestimation is the Norm: Teams both overestimate and underestimate, but there is a clear and strong tendency to underestimate the size of tasks. This aligns perfectly with the well-documented optimism bias that affects all estimation activities.

More Effort Does Not Help: The amount of time teams spent in estimation ceremonies had no statistically significant correlation with the quality of their estimates. A 5-person team spending an hour a week on estimation burns roughly 20 person-hours a month (5 people × 1 hour × about 4 weeks) on an activity that has no real impact on planning accuracy.

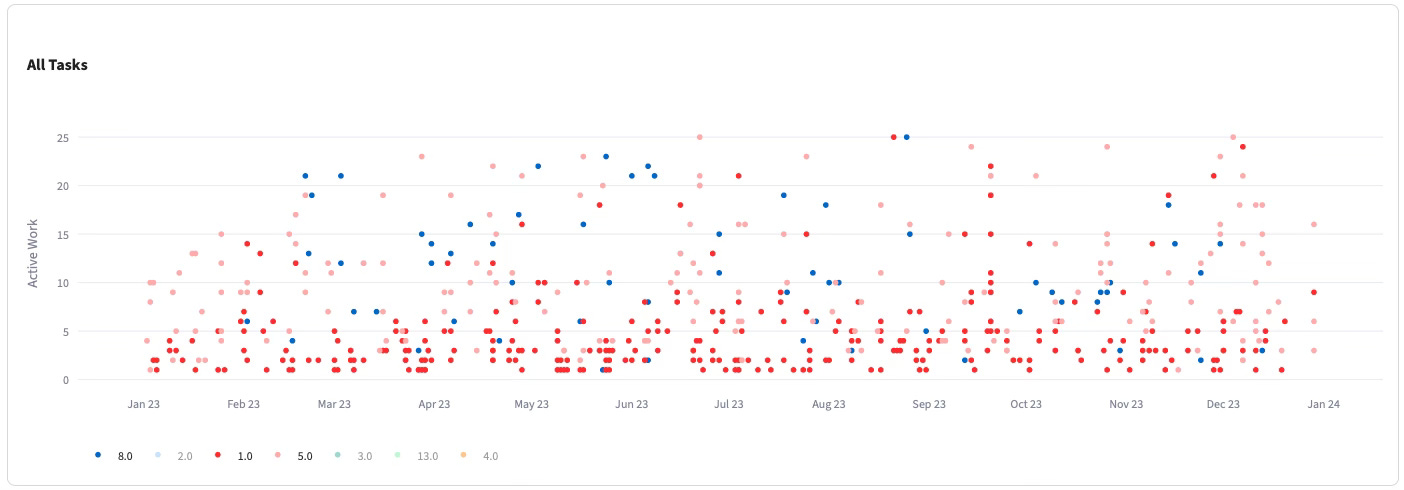

But the most damning evidence is this: the distribution of actual work time for tasks assigned the same Story Point value is completely random.

Tasks estimated as 1, 5, or 8 Story Points (as examples) could all take anywhere from 1 to dozens of working days. There were no distinct “layers” of effort that correlated with the Story Point buckets. The expectation I had 15 years ago, that SPs would neatly categorize work by size, was proven false by the data. The only observable trend was that tasks estimated as 1-3 SP had the highest chance of being genuinely small.
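If you want to run a similar check on your own tracker export, one simple approach is to group actual durations by Story Point value and see whether the ranges overlap. The sketch below is only an illustration and not the Validator module itself; the function names and the sample data are made up for the example.

```python
from collections import defaultdict
from statistics import median


def spread_by_story_points(tasks):
    """Group (story_points, actual_days) pairs and summarize each bucket.

    Returns {story_points: (min_days, median_days, max_days)}.
    """
    grouped = defaultdict(list)
    for story_points, actual_days in tasks:
        grouped[story_points].append(actual_days)
    return {
        sp: (min(days), median(days), max(days))
        for sp, days in sorted(grouped.items())
    }


def ranges_overlap(a, b):
    """True if the (min, median, max) summaries a and b share any duration."""
    return a[0] <= b[2] and b[0] <= a[2]


# Hypothetical sample: (estimated Story Points, actual working days).
sample_tasks = [
    (1, 1), (1, 2), (1, 14),
    (5, 1), (5, 6), (5, 30),
    (8, 2), (8, 9), (8, 25),
]

summary = spread_by_story_points(sample_tasks)
for sp, (low, mid, high) in summary.items():
    print(f"{sp} SP: min={low}d median={mid}d max={high}d")

print("1 SP and 8 SP overlap:", ranges_overlap(summary[1], summary[8]))
```

If the buckets meant anything as size categories, the ranges for 1 SP and 8 SP would barely touch. When they overlap almost entirely, the label tells you very little about how long the task will take.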

The purists say: “You are not supposed to correlate Story Points with time! Their value is in the discussion and for relative sizing!”

This is a naive argument that ignores business reality. The ultimate purpose of any sizing exercise is to answer the question “when can we have this?” or “how much can we fit into this timeframe?” A tool that, by definition, refuses to answer this question is a useless tool for planning. It is like a car with no speedometer: it might be moving, but no one knows how fast or when it will arrive.

If Story Points are a broken tool, what is the alternative? My research points to a few clear principles.

Use Fewer, Data-Driven Buckets. If you must size tasks, use very few buckets (e.g., Small, Medium, Large) and define them using your historical cycle time percentiles, not guesswork.
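As a rough sketch of what “defined by percentiles” could look like, the snippet below derives Small/Medium/Large cut-offs from a list of historical cycle times. The 50th and 85th percentile cut-offs, the function names, and the sample history are all assumptions chosen for illustration; pick percentiles that match your own data.

```python
from statistics import quantiles


def bucket_thresholds(cycle_times_days, small_pct=50, large_pct=85):
    """Return (small_max, medium_max) cut-offs in days.

    Tasks up to small_max count as Small, up to medium_max as Medium,
    and everything above that as Large.
    """
    # n=100 yields 99 cut points; index p - 1 is the p-th percentile.
    cuts = quantiles(cycle_times_days, n=100, method="inclusive")
    return cuts[small_pct - 1], cuts[large_pct - 1]


def classify(cycle_time_days, thresholds):
    """Map a cycle time in days to a bucket name."""
    small_max, medium_max = thresholds
    if cycle_time_days <= small_max:
        return "Small"
    if cycle_time_days <= medium_max:
        return "Medium"
    return "Large"


# Hypothetical cycle times (in working days) of recently finished tasks.
history = [1, 1, 2, 2, 3, 3, 4, 5, 6, 8, 9, 12, 15, 21, 30]

thresholds = bucket_thresholds(history)
print("Small up to", thresholds[0], "days; Medium up to", thresholds[1], "days")
print(classify(10, thresholds))
```

The point of the design is that the bucket boundaries come from what actually happened, so “Medium” has a concrete meaning in days that the team can check a new task against.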

Do Not Estimate Small Tasks. Focus your team’s precious time on breaking down large tasks into smaller, well-defined ones. A good rule of thumb: a valuable task should have as few acceptance criteria as possible, ideally 1, but up to 3 is OK.

Forecast, Do Not Estimate. To predict a delivery date for a batch of tasks, use probabilistic forecasting based on your team’s historical throughput and the number of tasks in the batch.
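One common way to do this is a Monte Carlo simulation: repeatedly replay randomly chosen weeks from your throughput history until the batch is done, then read delivery dates off the resulting distribution. The sketch below assumes weekly throughput counts and a fixed batch size; the function names and sample numbers are hypothetical, and a real setup would also need to handle scope growth.

```python
import math
import random


def simulate_weeks(weekly_throughput, backlog_size, simulations=10_000, seed=None):
    """Return a sorted list of simulated week counts to finish the backlog."""
    if not any(done > 0 for done in weekly_throughput):
        raise ValueError("history needs at least one week with completed tasks")
    rng = random.Random(seed)
    outcomes = []
    for _ in range(simulations):
        remaining, weeks = backlog_size, 0
        while remaining > 0:
            # Resample one past week and assume it repeats.
            remaining -= rng.choice(weekly_throughput)
            weeks += 1
        outcomes.append(weeks)
    return sorted(outcomes)


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted list."""
    rank = math.ceil(len(sorted_values) * pct / 100)
    return sorted_values[max(rank, 1) - 1]


# Hypothetical history: tasks completed in each of the last 8 weeks.
history = [3, 5, 2, 4, 6, 3, 0, 4]

outcomes = simulate_weeks(history, backlog_size=30, seed=42)
for pct in (50, 85, 95):
    print(f"{pct}% of simulations finished within {percentile(outcomes, pct)} weeks")
```

Instead of a single date, the answer becomes a statement such as “85% of simulations finished within N weeks”, which makes the uncertainty explicit and requires no per-task estimate at all.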

Stop “Improving” a Broken Method. Do not invest more time in trying to “unify” or “improve” your Story Point estimation process. The chances of it working are minimal. Invest that time in building a better forecasting system based on real data.

Abandon Derived Metrics. For clarity, do not use any metric derived from Story Points—committed vs. completed, velocity, or anything else. If the foundation (SPs) is this unreliable, using derived metrics is a waste of time for everyone involved and encourages teams to game a broken system.

In summary, Story Points are an inherently unstable tool. The problem is not how we practice them; the problem is the tool itself. Instead of investing in better Story Points, invest in a better way of forecasting, one based on throughput and probability.

Are you tired of endless Planning Poker sessions that lead to meaningless numbers and unreliable plans? It is time to switch from estimation theater to data-driven forecasting. I offer a focused engagement to help your teams implement a forecasting system based on their real, historical data, so you can finally get predictable answers to “when will it be done?”

Let’s schedule a no-commitment call to discuss how.