This week I learned something that finally made “transfer learning” click. I had always heard that you can hit strong accuracy fast by reusing a pretrained model, but I had never built it end-to-end myself. The interesting part is not just the accuracy. It is why it works: those early convolutional layers are basically generic visual feature extractors, and it is a waste to throw them away and start over.

I will walk through the exact steps I took to reach about 90% accuracy on a flowers dataset with VGG16, without training a huge CNN from scratch. If you are a CS person with limited DS background, this is a low-friction way to see deep learning behave like a power tool instead of a research project.

What surprised me

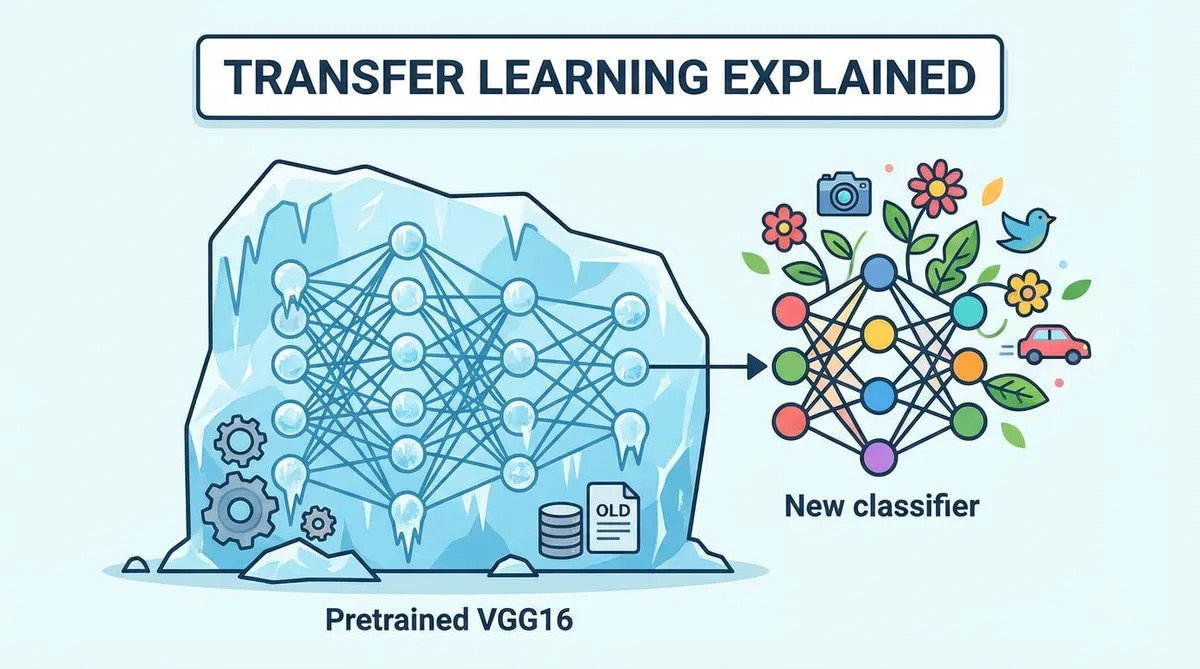

I expected transfer learning to be a small optimization. It is not. It is a totally different workflow. Instead of treating CNN training as a fresh blank slate, you treat it as adaptation: freeze a giant feature extractor, add a tiny classifier head, and let it learn just enough to map those features to your labels. That single change shifts the cost from “weeks of training” to “minutes on Colab.”

Goal: use a pretrained neural network (transfer learning) to classify three flower types with about 90% accuracy.

Google Colab setup

Colab gives you free GPUs and a convenient environment. Upload your challenge folder and open your notebook:

- Open Google Drive.

- Go into the Colab Notebooks folder.

- Drag and drop this challenge folder.

- Right-click the notebook and select Open with -> Google Colaboratory.

Enable GPU acceleration:

Runtime -> Change runtime type -> Hardware accelerator -> GPU

Mount your Drive and place Colab in the project context:

    import os

    from google.colab import drive

    drive.mount('/content/drive')

    # makedirs with exist_ok=True keeps this cell safe to re-run
    project_dir = '/content/drive/MyDrive/Colab Notebooks/data-transfer-learning'
    os.makedirs(project_dir, exist_ok=True)
    os.chdir(project_dir)

What is a pre-trained neural network?

Convolutions detect patterns in images: edges, textures, and shapes. The key idea is that these early features are useful across tasks. Whether you are classifying dogs, cars, or flowers, you still need edge detectors and texture filters. A pretrained model is basically a reusable visual feature engine.
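To make "edge detector" concrete, here is a minimal NumPy sketch of a single hand-written filter sliding over a toy image. The `conv2d_valid` helper and the 6x6 image are made up for illustration; a real CNN layer learns many such kernels instead of having them hard-coded.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Toy 6x6 grayscale image: dark left half, bright right half
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Sobel-style kernel that responds to left-to-right changes in brightness
vertical_edge_kernel = np.array([[-1, 0, 1],
                                 [-2, 0, 2],
                                 [-1, 0, 1]], dtype=float)

response = conv2d_valid(image, vertical_edge_kernel)
print(response)
```

Every row of the response is `[0, 4, 4, 0]`: the filter stays silent on flat regions and fires only where the dark-to-bright boundary falls inside its window. Nothing about that is specific to flowers, which is why such filters transfer.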

Two big advantages:

- Training is faster because most parameters are frozen.

- You get a mature architecture trained on massive datasets.

Meet VGG16

VGG16 is a classic CNN from Oxford’s Visual Geometry Group. It achieved 92.7% top-5 accuracy on the ImageNet ILSVRC benchmark (1000 classes, roughly 1.2 million training images drawn from the 14-million-image ImageNet collection). Instead of large kernels it stacks small 3x3 kernels, which made it a deep, stable feature extractor.
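The 3x3 trick is easy to check with arithmetic. The sketch below compares stacks of 3x3 convolutions with a single larger kernel that sees the same input window. The channel width of 256 is an assumption picked for illustration, and biases are ignored.

```python
def stacked_receptive_field(num_layers, kernel_size=3):
    """Receptive field of `num_layers` stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)

def conv_weights(kernel_size, channels, num_layers=1):
    """Weight count (biases ignored) for conv layers with `channels` in and out."""
    return num_layers * kernel_size * kernel_size * channels * channels

channels = 256  # illustrative channel width, an assumption for this comparison
for n in (2, 3):
    field = stacked_receptive_field(n)
    stacked = conv_weights(3, channels, num_layers=n)
    single = conv_weights(field, channels)
    print(f"{n} x (3x3): field {field}x{field}, "
          f"{stacked:,} weights vs {single:,} for one {field}x{field} layer")
```

Three stacked 3x3 layers cover a 7x7 window with 27 weights per channel pair instead of 49, and they get a nonlinearity between each layer as a bonus.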

We will:

- Load VGG16 with ImageNet weights.

- Remove the original fully connected layers.

- Add a small classifier head.

- Train only the head on flowers.

Data loading and preprocessing

The dataset is a small flowers collection. You can load it directly into Colab or mount it from Drive. For simplicity, here is the direct download path:

    !wget flowers-dataset.zip
    !unzip -q -n flowers-dataset.zip

Load and split the data:

    import os

    import numpy as np
    from keras.utils import to_categorical
    from PIL import Image
    from tqdm import tqdm

    def load_flowers_data(loading_method):
        # loading_method is kept so the call below still works; it is not used here
        data_path = 'flowers/'
        classes = {'daisy': 0, 'dandelion': 1, 'rose': 2}
        imgs = []
        labels = []
        for (cl, i) in classes.items():
            class_dir = os.path.join(data_path, cl)
            images_path = [elt for elt in os.listdir(class_dir) if elt.endswith('.jpg')]
            # Cap each class at 300 images to keep the dataset small and balanced
            for img in tqdm(images_path[:300]):
                path = os.path.join(class_dir, img)
                if os.path.exists(path):
                    # Force 3 channels so every array has shape (256, 256, 3)
                    image = Image.open(path).convert('RGB')
                    image = image.resize((256, 256))
                    imgs.append(np.array(image))
                    labels.append(i)

        X = np.array(imgs)
        num_classes = len(set(labels))
        y = to_categorical(labels, num_classes)

        # Shuffle images and labels with the same permutation
        p = np.random.permutation(len(X))
        X, y = X[p], y[p]

        # About 1/6 for test, 20% for validation, the rest for training
        first_split = int(len(imgs) / 6.)
        second_split = first_split + int(len(imgs) * 0.2)
        X_test, X_val, X_train = X[:first_split], X[first_split:second_split], X[second_split:]
        y_test, y_val, y_train = y[:first_split], y[first_split:second_split], y[second_split:]
        return X_train, y_train, X_val, y_val, X_test, y_test, num_classes

    X_train, y_train, X_val, y_val, X_test, y_test, num_classes = load_flowers_data('direct')

A quick sanity check:
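One way to do that check, as a sketch: confirm the split sizes match the arithmetic in `load_flowers_data`, and that images and one-hot labels line up. The helpers `split_sizes` and `check_split` are hypothetical names, and the stand-in arrays exist only so the snippet runs on its own. In the notebook you would pass `X_train, y_train` and friends instead.

```python
import numpy as np

def split_sizes(n_images):
    """Test / validation / train sizes, using the same arithmetic as load_flowers_data."""
    first_split = int(n_images / 6.)
    second_split = first_split + int(n_images * 0.2)
    return first_split, second_split - first_split, n_images - second_split

def check_split(X, y, num_classes):
    """Basic checks on one split; returns the number of images per class."""
    assert X.shape[0] == y.shape[0], "images and labels are misaligned"
    assert X.shape[1:] == (256, 256, 3), "unexpected image shape"
    assert y.shape[1] == num_classes, "labels are not one-hot over num_classes"
    assert np.all(y.sum(axis=1) == 1), "each label row should contain a single 1"
    return y.sum(axis=0).astype(int)

# Stand-in data (an assumption, not the real flowers): 12 blank images, random labels
rng = np.random.default_rng(0)
fake_labels = rng.integers(0, 3, size=12)
X_fake = np.zeros((12, 256, 256, 3), dtype=np.uint8)
y_fake = np.eye(3)[fake_labels]

print(split_sizes(900))                 # sizes if all 3 x 300 images load
print(check_split(X_fake, y_fake, 3))   # images per class in this split
```

With 900 images the split works out to 150 test, 180 validation, and 570 training images. If the per-class counts in a split look very lopsided, reshuffle before trusting the accuracy numbers.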

Baseline CNN (from scratch)

Before transfer learning, build a small CNN to understand the baseline. It usually struggles on this dataset unless you tune heavily.

    from keras import Sequential, Input, layers
    from keras.optimizers import Adam

    def load_own_model():
        model = Sequential()
        model.add(Input((256, 256, 3)))
        # Scale pixel values from [0, 255] to [0, 1]
        model.add(layers.Rescaling(1./255))
        # Three conv + pooling stages with shrinking kernel sizes
        model.add(layers.Conv2D(32, kernel_size=10, activation='relu'))
        model.add(layers.MaxPooling2D(4))
        model.add(layers.Conv2D(32, kernel_size=8, activation='relu'))
        model.add(layers.MaxPooling2D(4))
        model.add(layers.Conv2D(32, kernel_size=6, activation='relu'))
        model.add(layers.MaxPooling2D(4))
        # Classifier: flatten, one hidden layer, 3-way softmax
        model.add(layers.Flatten())
        model.add(layers.Dense(100, activation='relu'))
        model.add(layers.Dense(3, activation='softmax'))
        return model

    model = load_own_model()
    model.compile(optimizer=Adam(), loss='categorical_crossentropy', metrics=['accuracy'])
    history = model.fit(X_train, y_train, epochs=10, validation_data=(X_val, y_val), verbose=1)

    # evaluate returns [loss, accuracy]; format as a percentage to avoid float noise
    res = model.evaluate(X_test, y_test)
    print(f"test_accuracy = {res[-1]:.1%}")

This typically lands around the 60-75% range. Good enough for learning, not great for production.
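It helps to trace how fast this baseline shrinks the image. The sketch below follows the spatial size through the three Conv2D + MaxPooling2D(4) stages, assuming the Keras defaults of no padding and a pooling stride equal to the pool size. The helper names are mine.

```python
def conv_out(size, kernel_size):
    """Output width of a stride-1 convolution with no padding (the Keras default)."""
    return size - kernel_size + 1

def pool_out(size, pool_size):
    """Output width of max pooling with stride equal to the pool size."""
    return size // pool_size

size = 256
trace = [size]
for kernel_size in (10, 8, 6):        # the three Conv2D layers
    size = conv_out(size, kernel_size)
    trace.append(size)
    size = pool_out(size, 4)          # each is followed by MaxPooling2D(4)
    trace.append(size)

flattened = size * size * 32          # 32 filters in the last Conv2D
print(trace)       # [256, 247, 61, 54, 13, 8, 2]
print(flattened)   # 128
```

By the time the Flatten layer runs, each image is summarized by only 128 numbers computed by filters trained on a few hundred photos. That may be part of why the baseline plateaus, though I have not tested that directly.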

Transfer learning with VGG16

1) Load the base model

    from keras.applications.vgg16 import VGG16

    def load_model():
        model = VGG16(weights='imagenet', include_top=False, input_shape=(256, 256, 3))
        return model

    model = load_model()
    model.summary()

2) Freeze the convolutional layers

    def set_nontrainable_layers(model):
        model.trainable = False
        return model

3) Add a new classifier head

    from keras import layers, models

    def add_last_layers(model):
        # Stack a small trainable head on top of the frozen VGG16 base
        trained_model = model
        flattening_layer = layers.Flatten()
        first_dense = layers.Dense(100, activation='relu')
        output_layer = layers.Dense(3, activation='softmax')
        result = models.Sequential([
            trained_model,
            flattening_layer,
            first_dense,
            output_layer
        ])
        return result

4) Build and compile the full model

    from keras import optimizers

    def build_model():
        model = load_model()
        model = set_nontrainable_layers(model)
        model = add_last_layers(model)
        model.compile(optimizer=optimizers.Adam(learning_rate=1e-4),
                      loss='categorical_crossentropy',
                      metrics=['accuracy'])
        return model
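To see how much of this model is actually being trained, the counts can be worked out by hand. This is a back-of-the-envelope sketch that assumes the standard VGG16 layout (13 conv layers with 3x3 kernels, five 2x2 poolings) and the 256x256 input used here. `model.summary()` on the built model should report matching totals.

```python
# (in_channels, out_channels) for the 13 conv layers of VGG16, all with 3x3 kernels
VGG16_CONVS = [
    (3, 64), (64, 64),
    (64, 128), (128, 128),
    (128, 256), (256, 256), (256, 256),
    (256, 512), (512, 512), (512, 512),
    (512, 512), (512, 512), (512, 512),
]

def conv_params(c_in, c_out, kernel_size=3):
    return kernel_size * kernel_size * c_in * c_out + c_out   # weights + biases

def dense_params(n_in, n_out):
    return n_in * n_out + n_out                               # weights + biases

frozen = sum(conv_params(c_in, c_out) for c_in, c_out in VGG16_CONVS)

# Five 2x2 max-pooling stages shrink 256x256 to 8x8, with 512 channels at the end
flat_features = (256 // 2 ** 5) ** 2 * 512
trainable = dense_params(flat_features, 100) + dense_params(100, 3)

print(f"frozen:    {frozen:,}")
print(f"trainable: {trainable:,}")
```

That comes to 14,714,688 frozen parameters against 3,277,203 trainable ones, and nearly all of the trainable ones sit in the first Dense layer right after the Flatten.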

5) Preprocess with VGG16’s expected input

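VGG16 expects its inputs preprocessed the same way they were during ImageNet training: channels reordered from RGB to BGR and each channel zero-centered with the ImageNet mean, with no rescaling to [0, 1]. So do not normalize with 1/255 as in the custom CNN. Use the model's own preprocess_input instead: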

    from keras.applications.vgg16 import preprocess_input

    X_train_preproc = preprocess_input(X_train)
    X_val_preproc = preprocess_input(X_val)
    X_test_preproc = preprocess_input(X_test)
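For intuition about what that call does, here is a rough NumPy equivalent of the VGG16 preprocessing as I understand it: flip RGB to BGR, then subtract the per-channel ImageNet means. `vgg16_style_preprocess` is a hypothetical stand-in for illustration only. Keep using the real `preprocess_input` in the notebook.

```python
import numpy as np

# Per-channel ImageNet means in BGR order, as used by VGG16-style preprocessing
IMAGENET_BGR_MEANS = np.array([103.939, 116.779, 123.68], dtype=np.float32)

def vgg16_style_preprocess(rgb_images):
    """Approximate VGG16 preprocessing: RGB -> BGR, then subtract channel means."""
    x = rgb_images.astype(np.float32)   # astype copies, so the input is left untouched
    x = x[..., ::-1]                    # reverse the channel axis: RGB -> BGR
    return x - IMAGENET_BGR_MEANS       # zero-center each channel; no division by 255

batch = np.full((2, 4, 4, 3), 255, dtype=np.uint8)   # two tiny all-white "images"
batch[..., 0] = 0                                     # zero out the red channel
out = vgg16_style_preprocess(batch)
print(out[0, 0, 0])   # BGR values after centering
```

The output stays on the 0-255 scale, just shifted to be roughly centered on zero. That is why feeding the network 1/255-scaled inputs would put it far from what the pretrained filters expect.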

6) Train with early stopping

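    from keras.callbacks import EarlyStopping

    # Monitor validation accuracy, not training accuracy: training accuracy tends to
    # keep rising even once the model starts to overfit. Keep the best weights seen.
    early_stopping = EarlyStopping(monitor='val_accuracy', patience=5, restore_best_weights=True)

    model = build_model()
    history = model.fit(
        X_train_preproc, y_train,
        epochs=10,
        validation_data=(X_val_preproc, y_val),
        verbose=1,
        callbacks=[early_stopping]
    )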
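Early stopping with patience boils down to a counter. This plain-Python sketch mimics that logic for a higher-is-better metric. The function name and the accuracy values are invented for illustration, and it is a simplification of what the Keras callback does internally.

```python
def early_stopping_epoch(val_scores, patience):
    """Return (stop_epoch, best_epoch), 0-indexed, for a metric where higher is better."""
    best_score = float("-inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            best_score, best_epoch = score, epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch, best_epoch
    return len(val_scores) - 1, best_epoch   # ran out of epochs before patience did

# Made-up validation accuracies, purely for illustration
val_accuracy = [0.70, 0.82, 0.88, 0.87, 0.88, 0.86, 0.87]
print(early_stopping_epoch(val_accuracy, patience=3))   # (5, 2)
print(early_stopping_epoch(val_accuracy, patience=5))   # (6, 2)
```

With a patience of 3, training stops at epoch 5 while the best epoch was 2. With a patience of 5 and so few epochs, the run simply finishes, so patience is worth setting relative to the epoch budget.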

7) Evaluate

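    # evaluate returns [loss, accuracy] because the model was compiled with metrics=['accuracy']
    loss, accuracy = model.evaluate(X_test_preproc, y_test)
    print(f"test_accuracy = {accuracy:.1%}")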

With a bit of luck and stable splits, you should see accuracy near 90%. That was the coolest part for me: the base model already contains a huge amount of visual knowledge, and you are just adapting it.
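A single accuracy number hides which flowers get confused with which. As a sketch, the helper below turns one-hot labels and softmax outputs into an accuracy and a confusion matrix. The function name and the five example predictions are made up. In the notebook, `y_prob` would come from `model.predict(X_test_preproc)`.

```python
import numpy as np

def accuracy_and_confusion(y_true_onehot, y_prob):
    """Accuracy plus a confusion matrix (rows: true class, columns: predicted class)."""
    true_idx = y_true_onehot.argmax(axis=1)
    pred_idx = y_prob.argmax(axis=1)
    num_classes = y_true_onehot.shape[1]
    confusion = np.zeros((num_classes, num_classes), dtype=int)
    np.add.at(confusion, (true_idx, pred_idx), 1)   # count each (true, predicted) pair
    accuracy = float((true_idx == pred_idx).mean())
    return accuracy, confusion

# Made-up labels and softmax outputs for 5 images (0 = daisy, 1 = dandelion, 2 = rose)
y_true = np.eye(3)[[0, 1, 2, 2, 1]]
y_prob = np.array([
    [0.8, 0.1, 0.1],
    [0.2, 0.7, 0.1],
    [0.1, 0.2, 0.7],
    [0.6, 0.1, 0.3],   # a rose mistaken for a daisy
    [0.1, 0.8, 0.1],
])
accuracy, confusion = accuracy_and_confusion(y_true, y_prob)
print(accuracy)
print(confusion)
```

Off-diagonal entries show the mistakes. Here the single error lands in row 2, column 0: a rose predicted as a daisy.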

Why this works (intuition)

The pretrained model acts like a universal feature extractor. The flowers dataset is too small to learn robust filters from scratch, but it is enough to learn a small mapping from “generic features” to “our labels.” Freezing the base layers keeps those features intact, and training only the small head limits how much the model can overfit. This is why transfer learning is such a standard recipe in applied ML.

What I would try next

If I had more time this week, here is how I would push it further:

- Unfreeze and fine-tune: after convergence, unfreeze the last few convolutional blocks and train with a very low learning rate.

- Data augmentation: random flips, rotations, and color jitter can reduce overfitting.

- Modern backbone: replace VGG16 with ResNet, EfficientNet, or MobileNet for better tradeoffs.

- Hyperparameter search: tune learning rate and batch size.
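Of these, data augmentation is the easiest to prototype without touching the model. Below is a minimal NumPy sketch of random horizontal flips. The function name and the stand-in batch are assumptions for illustration. In the notebook it would be applied to `X_train` only, before `preprocess_input`, and Keras also ships a `layers.RandomFlip` layer that could do the same job inside the model.

```python
import numpy as np

def random_horizontal_flip(images, rng, flip_probability=0.5):
    """Return a copy of `images` (N, H, W, C) with a random subset mirrored left-right."""
    flip_mask = rng.random(len(images)) < flip_probability
    augmented = images.copy()
    augmented[flip_mask] = images[flip_mask][:, :, ::-1, :]   # reverse the width axis
    return augmented, flip_mask

rng = np.random.default_rng(42)
# Tiny stand-in batch: pixel values increase from left to right within each row
batch = np.tile(np.arange(4, dtype=np.uint8).reshape(1, 1, 4, 1), (6, 2, 1, 3))
augmented, flip_mask = random_horizontal_flip(batch, rng)

for flipped, image in zip(flip_mask, augmented):
    print("flipped " if flipped else "original", image[0, :, 0])
```

Labels do not change under a flip, so `y_train` can be reused as-is. Calling the function again each epoch gives the head a slightly different view of the same flowers.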

Wrap-up

The new thing I learned is that transfer learning is not a small hack. It is a strategy shift: reuse a trained visual system, then teach it your specific labels. For small datasets, it is the difference between “not great” and “surprisingly strong.” If you want a fast, practical win in deep learning, this is the shortest path I have found.