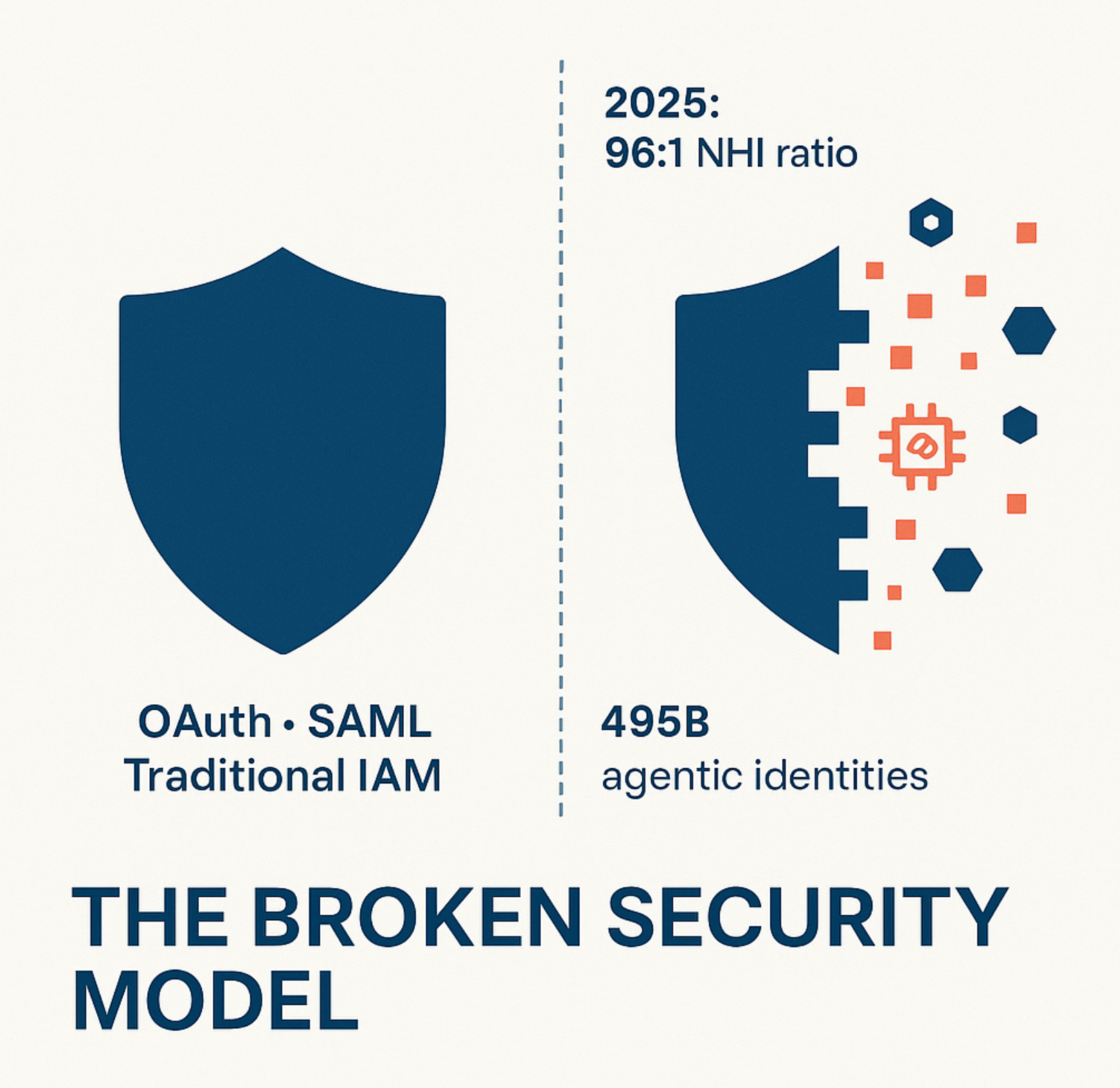

Remember when managing digital identities was straightforward? You had employees, contractors, maybe some service accounts, and that was it. Your IAM team knew exactly who (or what) needed access to what, and traditional tools like OAuth and SAML handled authentication and authorization just fine.

Those days are over.

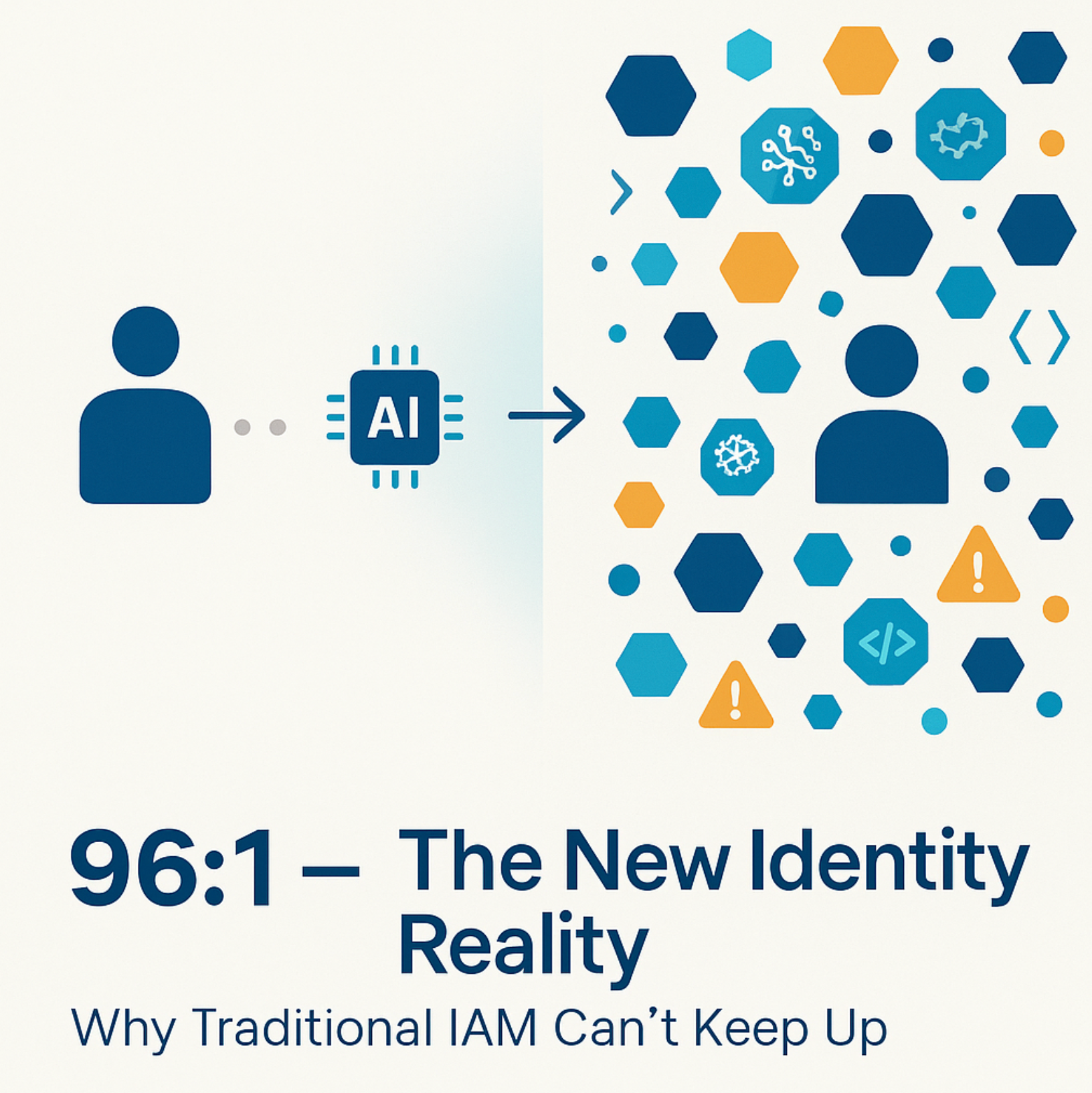

We're living through a fundamental shift in how digital identity works, and most organizations aren't ready for it. Right now, three forces are converging to create what I call the "perfect storm" of identity chaos: exploding numbers of non-human identities, the rise of AI agents making autonomous decisions, and developers using AI to write code at unprecedented speeds through "vibe coding."

Here's the reality check: in financial services, companies now have about 96 non-human identities for every human employee, and some analysts predict the ratio will hit 80:1 across all industries within two years. Meanwhile, 25% of Y Combinator's latest batch of startups had codebases that were 95% AI-generated. And Gartner predicts that by 2028, one-third of enterprise applications will include autonomous AI agents.

This isn't just about scale. It's about a fundamental mismatch between how we've traditionally managed identities and the reality of what's happening in our systems right now.

Understanding the New Players in Your Digital Ecosystem

Non-Human Identities: The Invisible Army

Do you know how many non-human identities (NHIs) exist in your organization today?

If you're like most security leaders, the honest answer is no. And that's a problem.

Non-human identities—service accounts, API keys, OAuth tokens, certificates, bot accounts, and secrets—have always existed. But their numbers have exploded. In many organizations, these machine identities now outnumber humans by a factor of 50 to 1, with some environments showing ratios as high as 96:1 in financial services.

What makes this especially dangerous is that these identities operate continuously, without breaks, without oversight, and often with more privileges than they need. Unlike human identities that go through HR systems and have clear lifecycles (onboarding, role changes, offboarding), non-human identities are often created on the fly during deployments, forgotten about, and never properly managed.

Here's what keeps me up at night: in a recent survey, 40% of organizations couldn't identify who owns their non-human identities. Think about that. Two in five companies have identities with access to critical systems, and nobody knows who's responsible for them.

The numbers tell a sobering story. Over 23.7 million secrets were found exposed on public GitHub repositories in 2024 alone. And here's an ironic twist: repositories with GitHub Copilot enabled leaked secrets 40% more often than those without it.

AI Agents: Autonomy at Scale

If non-human identities are the infrastructure layer, AI agents are the autonomous workforce that's being built on top of them.

AI agents aren't just fancy chatbots. They're autonomous software entities designed to perceive their environment, make decisions, take action, and learn from outcomes—often without human intervention. They can schedule meetings, approve expenses, analyze data, manage infrastructure, and even make financial decisions.

According to recent research, 79% of organizations have already adopted AI agents to some extent. The volume of agentic identities is expected to exceed 45 billion by the end of 2025—that's more than 12 times the number of humans in the global workforce.

But here's where it gets complicated: AI agents need identities to function. They need to authenticate themselves, access data, call APIs, and interact with other systems. The problem is that the identity frameworks we've built for humans—and even for traditional machine identities—weren't designed for entities that can reason, learn, and make autonomous decisions.

Traditional IAM systems assume intent, context, and ownership. But AI agents often operate without clear ownership. They spin up dynamically, exist temporarily, and can delegate authority to other agents. They might start with one set of permissions in the morning and need completely different access by afternoon based on the tasks they're handling.

And the scary part? In a recent survey, 80% of IT professionals reported witnessing AI agents act unexpectedly or perform unauthorized actions. Despite this, only 10% of organizations report having a well-developed strategy for managing these agentic identities.

Vibe Coding: Speed Without Security

Now let's talk about how a lot of these AI agents and applications are being built in the first place.

"Vibe coding" has become one of the hottest trends in software development. The concept is simple: use AI tools like ChatGPT, Claude, GitHub Copilot, Cursor, or specialized platforms like Lovable to generate code through natural language prompts—without deeply understanding or reviewing what's being created.

For non-technical founders and experienced developers alike, it's revolutionary. One developer built a multiplayer game in 10 days that earned $38,000 and scaled to 89,000 players. The promise is clear: faster iteration, more experimentation, democratized development.

But there's a hidden cost that's only now becoming apparent.

Research from Veracode analyzing over 100 large language models across 80 coding tasks revealed a shocking finding: 45% of all AI-generated code introduces security vulnerabilities. These aren't obscure edge cases, either. LLMs failed to protect against common attacks like Cross-Site Scripting in 86% of cases and Log Injection in 88% of cases.

The problem isn't that AI is inherently bad at coding. It's that AI generates what you ask for, not what you forget to ask for. Unless security is explicitly in the prompt, it's usually ignored. LLMs are trained on vast repositories of human-generated code from places like GitHub—which means they learn to reproduce both secure practices and widespread vulnerabilities.
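To make the two vulnerability classes above concrete, here is a minimal Python sketch of the defenses that tend to go missing unless someone asks for them. It uses only the standard library. The function names `render_comment`, `sanitize_for_log`, and `log_login_attempt` are hypothetical, chosen purely for illustration.

```python
import html
import logging

logger = logging.getLogger("app")


def render_comment(user_text: str) -> str:
    """Escape user input before placing it in HTML (mitigates XSS)."""
    return f"<p>{html.escape(user_text)}</p>"


def sanitize_for_log(value: str) -> str:
    """Neutralize CR/LF so user input cannot forge extra log lines."""
    return value.replace("\r", "\\r").replace("\n", "\\n")


def log_login_attempt(username: str) -> None:
    """Log a login attempt without letting the username inject new lines."""
    logger.info("login attempt user=%s", sanitize_for_log(username))
```

Neither function is complicated. The point is that a prompt like "build me a comment box" rarely produces them unless the prompt, or the reviewer, asks.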

I've seen this firsthand in our work at GrackerAI. Developers using AI to rapidly prototype solutions often inadvertently create what security researchers call "silent killer" vulnerabilities—code that functions perfectly in testing but contains exploitable flaws that bypass traditional security tools.

For example, a developer recently used AI to build a SaaS app and proudly shared their progress on social media. Within days, they were reporting security vulnerabilities. The day after that, they were under active attack.

Why Traditional IAM Is Failing

The identity and access management tools we've relied on for years—OAuth, SAML, Active Directory, traditional IGA systems—were built for a different era. They assumed:

- Identities are relatively static: Employees join, stay for years, and eventually leave. Service accounts might last even longer.

- Access patterns are predictable: A finance employee needs access to financial systems. A developer needs access to development tools.

- Authentication happens occasionally: Users log in at the start of their day and stay authenticated until they log out.

- Humans are in the loop: Every important decision involves a person who can be held accountable.

None of these assumptions hold true anymore.

AI agents might exist for minutes or hours, not months or years. Their access needs change dynamically based on the tasks they're performing. They don't "log in" once a day—AI workloads initiate 148 times more authentication requests per hour than human users. And many agents are designed specifically to make decisions and take actions without humans in the loop.

OAuth and SAML provide coarse-grained access control that can't adapt to the ephemeral, evolving nature of AI-driven automation. Traditional IAM tools focus on the identity lifecycle of human identities and often completely overlook machine identities, let alone autonomous agents.

This creates persistent blind spots:

- Limited visibility: Most security teams lack centralized visibility into NHIs across cloud and on-premises systems, making it nearly impossible to assess exposure or enforce policy.

- Weak credential hygiene: Static credentials, long-lived tokens, and hard-coded secrets remain common. They're often reused across environments without rotation.

- Excessive access: NHIs frequently receive broad, persistent privileges because their roles aren't clearly defined, massively expanding the blast radius if they're compromised.

- No lifecycle management: Unlike human identities managed through HR systems, NHIs are created ad hoc and rarely reviewed or decommissioned when no longer needed.

The Convergence: Where Three Crises Meet

Here's where things get really concerning: these three trends aren't happening in isolation. They're reinforcing each other, creating a security challenge that's greater than the sum of its parts.

Consider this scenario, which is playing out in organizations right now:

A developer uses vibe coding to rapidly build a new customer service agent. The AI tool generates the code, which includes several API integrations and database connections. The code works beautifully in testing, so it gets deployed to production quickly—because speed to market matters.

But the AI-generated code has some issues:

- It uses overly permissive service accounts (because that was the easiest path).

- It hardcodes some credentials (because the AI saw that pattern in its training data).

- It doesn't implement proper input validation (because the developer didn't explicitly ask for it).

Now this customer service agent is live. It's an autonomous AI agent that can access customer data, process refunds, and integrate with multiple backend systems. It makes decisions on its own, operates 24/7, and handles thousands of interactions daily.

Nobody on the security team knows it exists. It's not in any identity registry. Its credentials have never been rotated. It has more access than it needs. And because it was vibe-coded, it has multiple security vulnerabilities that traditional scanning tools didn't catch.

This isn't a hypothetical. This is happening right now in enterprises around the world.

The Real-World Impact

Let's ground this in some concrete examples of what's actually happening:

The Cloudflare Incident: Attackers misused compromised non-human credentials to gain persistent access to critical systems. The breach involved under-protected API keys and misconfigured workloads.

The U.S. Treasury Attack: Poorly managed service accounts gave attackers quiet, sustained access to sensitive government systems—all because machine identities lacked proper oversight.

The Lovable Vulnerability: On the Swedish vibe coding platform Lovable, 170 out of 1,645 applications had security vulnerabilities that allowed anyone to access personal information. That is roughly a 10% vulnerability rate, directly tied to AI-generated code.

The GitHub Copilot Paradox: Despite being designed to help developers write better code, repositories using Copilot leaked secrets 40% more often than those without it—because the tool makes it easier to write more code faster, including code that inadvertently exposes sensitive information.

The Shadow AI Problem: 45% of financial services organizations admit that unsanctioned AI agents are already creating identity silos outside formal governance programs. These "shadow AI" deployments are the new shadow IT, except they have autonomous decision-making capabilities.

What Organizations Must Do Now

I've spent my career building and scaling tech companies, and I can tell you that the solution isn't to stop using AI or to slow down innovation. That ship has sailed, and honestly, it shouldn't come back. The productivity gains and competitive advantages are too significant.

Instead, we need to fundamentally rethink how we approach identity management for this new reality. Here's my roadmap, based on what I've learned:

Phase 1: Discovery and Visibility (Start Now)

You can't secure what you can't see. The first step is getting complete visibility into your identity landscape:

Conduct a comprehensive NHI inventory: Map every service account, API key, token, certificate, and secret across your entire environment—cloud, on-premises, SaaS applications, and development tools. Tools from vendors like Oasis Security, Astrix, and CyberArk can help automate this discovery.

Identify vibe-coded applications: Look for applications that were built rapidly using AI tools. These need extra security scrutiny. Check your repositories for signs of AI-generated code (certain patterns, comments, or structures are telltale signs).

Map your AI agent deployments: Know every AI agent operating in your environment, what data it can access, what actions it can take, and who owns it. If you're using Microsoft's ecosystem, leverage Microsoft Entra Agent ID to start building this registry.

Establish ownership: For every identity you discover, assign a clear owner. This might be harder than it sounds, because many NHIs have been orphaned by team changes or project completions. But without ownership, you have no accountability.
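As a rough illustration of what an inventory with ownership looks like in practice, here is a minimal Python sketch. The `NonHumanIdentity` record, its fields, and the sample entries are all assumptions for the example. A real inventory would be populated from your cloud providers, secrets managers, and discovery tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class NonHumanIdentity:
    name: str
    kind: str             # e.g. "service_account", "api_key", "certificate"
    environment: str      # e.g. "aws-prod", "on-prem", "saas"
    owner: Optional[str]  # None or "" means nobody is accountable for it


def find_orphans(inventory: list[NonHumanIdentity]) -> list[NonHumanIdentity]:
    """Return identities with no assigned owner."""
    return [nhi for nhi in inventory if not nhi.owner]


# Hypothetical sample data for illustration only.
inventory = [
    NonHumanIdentity("billing-sync", "service_account", "aws-prod", "payments-team"),
    NonHumanIdentity("legacy-etl-key", "api_key", "on-prem", None),
    NonHumanIdentity("ci-deploy-token", "oauth_token", "saas", ""),
]

orphans = find_orphans(inventory)
```

The list of orphans is your first work queue: each entry either gets an owner or gets scheduled for decommissioning.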

Phase 2: Immediate Risk Mitigation

While you're building your long-term strategy, there are tactical steps you can take right now to reduce risk:

Rotate all long-lived credentials: Move from static, permanent credentials to short-lived tokens wherever possible. Implement automated rotation for credentials that must persist. If you can't rotate them yet, at least audit who has access to them.
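If you're wondering where to start on rotation, a simple age check over your credential inventory goes a long way. The sketch below is one way to do it in Python. The 90-day threshold, the credential names, and the issue dates are assumptions for illustration, not a recommended standard.

```python
from datetime import datetime, timedelta, timezone

MAX_CREDENTIAL_AGE = timedelta(days=90)  # assumed policy threshold


def stale_credentials(
    issued: dict[str, datetime],
    now: datetime,
    max_age: timedelta = MAX_CREDENTIAL_AGE,
) -> list[str]:
    """Return names of credentials older than max_age, oldest first."""
    stale = [(ts, name) for name, ts in issued.items() if now - ts > max_age]
    return [name for ts, name in sorted(stale)]


# Hypothetical inventory; "now" is fixed so the example is reproducible.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
issued = {
    "ci-deploy-token": datetime(2025, 5, 20, tzinfo=timezone.utc),
    "legacy-db-password": datetime(2022, 1, 15, tzinfo=timezone.utc),
    "reporting-api-key": datetime(2024, 11, 1, tzinfo=timezone.utc),
}

overdue = stale_credentials(issued, now)
```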

Implement just-in-time access: Instead of granting persistent privileges, use just-in-time access models where permissions are granted only when needed and automatically revoked afterward.
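The core idea of just-in-time access fits in a few lines: every grant carries its own expiry, so revocation is the default rather than a cleanup task. Here is a minimal Python sketch. The `Grant` type, the `grant_just_in_time` helper, and the 15-minute lifetime are hypothetical choices for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    identity: str
    permission: str
    expires_at: datetime

    def is_active(self, now: datetime) -> bool:
        """A grant is valid only strictly before its expiry time."""
        return now < self.expires_at


def grant_just_in_time(
    identity: str,
    permission: str,
    now: datetime,
    ttl: timedelta = timedelta(minutes=15),  # assumed lifetime
) -> Grant:
    """Issue a permission that expires on its own after ttl."""
    return Grant(identity, permission, now + ttl)


start = datetime(2025, 6, 1, 9, 0, tzinfo=timezone.utc)
grant = grant_just_in_time("refund-agent-01", "refunds:create", start)
```

Every access check then calls `grant.is_active(now)`, which means a forgotten grant simply stops working instead of lingering for years.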

Enable continuous monitoring: Set up real-time monitoring of NHI activity. Look for anomalies like unusual access patterns, attempts to access new resources, or authentication from unexpected locations. Tools like CrowdStrike, Microsoft Defender, or Wiz can help with this.
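One of the simplest anomaly signals is an identity touching a resource it has never touched before. The Python sketch below shows that single check, assuming access events are available as `(identity, resource)` pairs. The event shape and sample names are illustrative, and commercial tools look at far more signals than this.

```python
from collections import defaultdict

Event = tuple[str, str]  # (identity, resource)


def build_baseline(history: list[Event]) -> dict[str, set[str]]:
    """Record which resources each identity has accessed in the past."""
    baseline: dict[str, set[str]] = defaultdict(set)
    for identity, resource in history:
        baseline[identity].add(resource)
    return dict(baseline)


def new_resource_access(
    baseline: dict[str, set[str]], events: list[Event]
) -> list[Event]:
    """Flag events where an identity touches a resource outside its baseline."""
    return [(i, r) for i, r in events if r not in baseline.get(i, set())]


# Hypothetical history and new events for illustration.
history = [("billing-sync", "invoices-db"), ("billing-sync", "payments-api")]
events = [
    ("billing-sync", "invoices-db"),
    ("billing-sync", "hr-records"),
    ("unknown-bot", "payments-api"),
]

alerts = new_resource_access(build_baseline(history), events)
```

Note that an identity with no baseline at all, like `unknown-bot` here, is flagged on every access, which is usually what you want for something nobody registered.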

Establish emergency revocation protocols: Have a clear process for immediately revoking access when an identity is compromised. This needs to work across all systems and be executable in minutes, not hours.

Review and reduce permissions: Apply the principle of least privilege ruthlessly. Every identity should have only the minimum access required to perform its function. Start with your most critical systems and work outward.
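In practice, least privilege often starts as a set difference: what an identity has been granted minus what it has actually used. Here is a minimal Python sketch. The permission strings and the idea that you can pull "used" permissions from access logs are assumptions for the example.

```python
def unused_permissions(granted: set[str], used: set[str]) -> set[str]:
    """Permissions that were granted but never exercised."""
    return granted - used


# Hypothetical data: grants from your IAM policy, usage from access logs.
granted = {"s3:GetObject", "s3:PutObject", "s3:DeleteObject", "iam:PassRole"}
used = {"s3:GetObject", "s3:PutObject"}

removal_candidates = sorted(unused_permissions(granted, used))
```

Treat the result as candidates for review rather than automatic removal, since some permissions are legitimately used only in rare situations such as disaster recovery.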

Phase 3: Strategic Transformation

Now you're ready to build a truly agentic-ready identity infrastructure:

Adopt dynamic, context-aware authentication: Move beyond static permissions to dynamic trust models that evaluate context in real-time. This means considering factors like:

- What is the identity trying to access?

- When are they accessing it?

- From where?

- What's the risk profile of the current task?

- What's the identity's behavior history?
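To show how those factors can feed a decision, here is a deliberately simple Python sketch of a context-based policy. Everything in it is an assumption for illustration: the `AccessContext` fields, the weights, and the thresholds are made up, and a production system would tune them against real data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AccessContext:
    resource_sensitivity: int  # 0 (public) to 3 (restricted)
    known_location: bool       # request comes from an expected network
    within_usual_hours: bool   # matches the identity's normal schedule
    recent_anomalies: int      # count of recent flagged behaviors


def risk_score(ctx: AccessContext) -> int:
    """Combine context signals into a single score (weights are illustrative)."""
    score = ctx.resource_sensitivity * 2
    if not ctx.known_location:
        score += 3
    if not ctx.within_usual_hours:
        score += 1
    score += min(ctx.recent_anomalies, 3)  # cap so history cannot dominate
    return score


def decide(ctx: AccessContext) -> str:
    """Map the score to allow, extra verification, or deny."""
    score = risk_score(ctx)
    if score >= 8:
        return "deny"
    if score >= 5:
        return "step_up"  # e.g. require fresh attestation or human approval
    return "allow"
```

The same identity can get three different answers in one day, which is exactly the point: the decision follows the context, not a static role.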

Implement Zero Trust for machine identities: Extend Zero Trust principles to non-human identities and AI agents. This means:

- Never trust, always verify, even for internal identities.

- Verify explicitly using multiple factors.

- Use least-privilege access with just-in-time provisioning.

- Assume breach and segment access accordingly.

Build for agent lifecycle management: Create processes specifically designed for the ephemeral nature of AI agents:

- Automated provisioning when agents are created.

- Dynamic permission management based on agent tasks.

- Automated deprovisioning when agents complete their work.

- Clear audit trails showing what each agent did and why.
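Here is what that lifecycle can look like as a minimal Python sketch: provision, act, deprovision, with every step landing in an audit trail. The `AgentRegistry` class and its method names are hypothetical, and the in-memory storage stands in for whatever identity provider you actually use.

```python
from dataclasses import dataclass, field


@dataclass
class AgentRegistry:
    """In-memory stand-in for a real agent identity registry."""
    permissions: dict[str, set[str]] = field(default_factory=dict)
    audit_log: list[tuple[str, str, str]] = field(default_factory=list)

    def provision(self, agent_id: str, owner: str, permissions: set[str]) -> None:
        """Register an agent with an owner and an explicit permission set."""
        self.permissions[agent_id] = set(permissions)
        self.audit_log.append((agent_id, "provisioned", f"owner={owner}"))

    def act(self, agent_id: str, permission: str, reason: str) -> bool:
        """Record every attempted action and why; return whether it was allowed."""
        allowed = permission in self.permissions.get(agent_id, set())
        outcome = "allowed" if allowed else "denied"
        self.audit_log.append((agent_id, f"{permission}:{outcome}", reason))
        return allowed

    def deprovision(self, agent_id: str, reason: str) -> None:
        """Remove all access once the agent's work is done."""
        self.permissions.pop(agent_id, None)
        self.audit_log.append((agent_id, "deprovisioned", reason))


# Hypothetical agent and actions for illustration.
registry = AgentRegistry()
registry.provision("refund-agent-01", "support-team", {"refunds:create"})
first = registry.act("refund-agent-01", "refunds:create", "customer refund request")
registry.deprovision("refund-agent-01", "task complete")
late = registry.act("refund-agent-01", "refunds:create", "retry after shutdown")
```

Denied attempts are logged too, so an agent that keeps acting after deprovisioning shows up in the trail instead of failing silently.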

Prepare for quantum threats: While quantum computers capable of breaking current encryption are still years away, the time to prepare is now. Start planning your transition to quantum-resistant cryptography, especially for credentials and secrets that need long-term protection.

Integrate security into the development workflow: For vibe-coded applications, security can't be an afterthought. Implement:

- Automated security scanning in CI/CD pipelines.

- Mandatory code reviews specifically focused on AI-generated code.

- Security-focused prompting techniques when using AI coding tools.

- Training for developers on common vulnerabilities in AI-generated code.
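As a taste of what automated scanning does, here is a small Python sketch of a hardcoded-secret check that could run as a pipeline step. The two patterns are illustrative assumptions and far from complete, so a dedicated secret scanner is the better choice for real pipelines. The `scan_text` function name is hypothetical.

```python
import re

# Illustrative patterns only; real scanners ship hundreds of tuned rules.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_secret": re.compile(
        r"""(?i)(password|secret|api_key|token)\s*=\s*["'][^"']{8,}["']"""
    ),
}


def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, pattern_name) for each suspicious line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings
```

A CI job could call `scan_text` on each changed file and fail the build when the result is non-empty, which catches the "AI saw that pattern in its training data" problem before it reaches production.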

The Emerging Solutions Landscape

The good news is that the industry is responding. New approaches and technologies are emerging specifically designed for this new reality:

Purpose-Built NHI Management Platforms: Companies like Oasis Security, Astrix, and others have built platforms specifically for discovering, managing, and securing non-human identities. These tools go beyond traditional IAM to handle the unique requirements of machine identities.

Agentic Identity Frameworks: Microsoft's Entra Agent ID automatically assigns unique identities to agents created in Copilot Studio or Azure AI Foundry, treating them as first-class citizens alongside human identities. Workday's Agent System of Record (ASOR) integrates with Microsoft Entra to provide business context and governance for AI agents.

Model Context Protocol (MCP): While not secure by default, MCP provides a standardized framework for agent communications. Microsoft is delivering broad first-party support for MCP across its agent platform, making it easier to build interoperable, governed agent systems.

Unified Identity Security Fabrics: Platforms from Okta, CyberArk, and others are evolving to provide unified visibility and control across human, non-human, and agentic identities under a single control plane.

AI-Powered Security Scanning: New tools specifically designed to scan AI-generated code for vulnerabilities are emerging. These complement traditional application security testing tools by understanding the unique patterns and risks in LLM-generated code.

Standards Development: OpenID Foundation and other standards bodies are actively working on specifications for agentic AI identity. The industry recognizes that we need common frameworks to ensure interoperability and security.

The Skills and Cultural Shift Required

Technology alone won't solve this problem. Organizations need to evolve their teams and culture:

New Roles Are Emerging: "AI Identity Architects" and "NHI Security Engineers" are becoming distinct specializations. These roles require expertise in both traditional IAM and the unique challenges of autonomous systems.

Security Teams Need Upskilling: Your IAM team needs to understand AI agents, their behavior patterns, and their unique security requirements. They also need to grasp the vulnerabilities commonly introduced by AI-generated code.

Developers Need Security Training: As vibe coding becomes more common, every developer needs baseline security knowledge. They need to know how to prompt AI tools for secure code, how to review AI-generated code for vulnerabilities, and when to call in security expertise.

Executive Ownership Is Critical: This isn't just an IT problem. Managing agentic identities requires executive-level attention, with clear ownership at the CISO level and regular reporting to the board.

Culture of Accountability: You need to establish a culture where every AI agent, every vibe-coded application, and every non-human identity has a clear owner who's accountable for its security and behavior.

Looking Ahead: 2026 and Beyond

This is just the beginning. As I look ahead, I see several trends that will shape the future:

Continued Exponential Growth: Non-human identities will continue to multiply. Some projections show ratios reaching 100:1 or even higher as AI adoption accelerates and microservices architectures proliferate.

Increasing Autonomy: AI agents will become more sophisticated and autonomous. The agents of 2026 will be able to do things we can't even imagine today, which means their identity and access requirements will be even more complex.

Regulatory Pressure: Governments are starting to pay attention. The EU's AI Act already classifies some vibe coding implementations as "high-risk AI systems" requiring conformity assessments. Expect more regulation around AI governance, identity management, and accountability.

Convergence of Physical and Digital Identity: As AI agents interact with physical systems (IoT devices, robots, autonomous vehicles), the line between digital and physical identity will blur. Your identity framework will need to span both domains.

AI-Driven Identity Attacks: Just as we're using AI to build systems, attackers are using AI to compromise them. We'll see increasingly sophisticated identity-based attacks that leverage AI to evade detection and move laterally through systems at machine speed.

Consolidation and Integration: The NHI security market is hot right now, with major acquisitions like CyberArk buying Venafi for $1.54 billion. Expect continued consolidation as identity platforms expand to encompass human, machine, and agentic identities under unified platforms.

The Choice We Face

Here's the reality: we're at a crossroads.

One path leads to continuing with our current approaches—treating non-human identities as an afterthought, letting AI agents proliferate without proper governance, and hoping that our existing security tools will somehow catch up. That path leads to breaches, compliance failures, and potentially catastrophic security incidents.

The other path requires us to acknowledge that identity management has fundamentally changed. It requires investment in new tools, new skills, and new processes. It requires treating machine and agentic identities with the same rigor we apply to human identities—maybe even more, given their scale and autonomy.

I've spent over a decade building identity and access management solutions. I've seen firsthand how the industry evolves, often in response to crises rather than proactively. We have an opportunity right now to get ahead of this wave rather than being swept up by it.

The organizations that succeed in the agentic era won't be the ones with the most AI agents or the fastest vibe-coded applications. They'll be the ones that figure out how to harness these powerful capabilities while maintaining robust security, clear governance, and accountable operations.

Your Next Steps

If you're a CISO, CTO, or security leader, here's what I recommend you do this week:

- Assess your current visibility: Do you know how many non-human identities exist in your environment? Can you identify all your AI agents? Do you know which applications were vibe-coded? If you can't answer these questions with confidence, that's your starting point.

- Start the conversation: Bring together your IAM team, your security team, your development team, and your business leaders. Make sure everyone understands that this isn't just a technical problem—it's a business risk that requires coordinated action.

- Identify your highest-risk identities: Which non-human identities or AI agents have access to your most sensitive data or critical systems? These should be your first priority for improved security and governance.

- Plan your roadmap: Use the three-phase approach I outlined earlier as a starting framework. Adapt it to your organization's specific needs and risk profile.

- Invest in capability: Whether that means new tools, new team members, or training for existing staff, you're going to need to build capability specifically for managing this new identity landscape.

The identity crisis is here. It's not coming—it's already impacting organizations around the world. But crises also create opportunities. The organizations that figure this out first will have a significant competitive advantage. They'll be able to leverage AI more safely and effectively than their competitors. They'll be able to innovate faster without taking on unacceptable risk.

The question is: will your organization lead this transformation or struggle to catch up?

Key Takeaways

- The scale is staggering: Organizations now manage 50 to 96 non-human identities for every human employee, with projections showing this ratio will continue to grow dramatically.

- AI agents are fundamentally different: Unlike traditional machine identities, AI agents make autonomous decisions, have dynamic access needs, and operate at machine speed—breaking our traditional identity management models.

- Vibe coding introduces new vulnerabilities: 45% of AI-generated code contains security vulnerabilities, creating a "productivity paradox" where speed gains are offset by security debt.

- Traditional IAM is failing: OAuth, SAML, and legacy IAM tools weren't designed for ephemeral, autonomous, decision-making identities that exist for hours instead of years.

- The convergence multiplies risk: Vibe-coded applications with embedded security flaws, powered by over-permissioned AI agents and unmanaged non-human identities, create a perfect storm of security exposure.

- Solutions exist but require action: New platforms, frameworks, and approaches are emerging specifically for agentic identity management, but they require proactive adoption and organizational change.

- This is a leadership issue: Successfully navigating the agentic era requires executive attention, cross-functional collaboration, and a fundamental rethinking of identity governance—not just new tools.

The time to act is now. The organizations that address this identity crisis proactively will be the ones that thrive in the age of AI agents and autonomous systems.