Wibbley wobbley pictures with neural nets

I was looking at renormalization group stuff for Mertonon in preparation for implementations like... one of these days... when I decided I would poke at things while being weird about the latent space.

Peeps poke at neural nets for turbulence modeling, but that's not what we're dealing with here. There is of course also a chaotician's point of view on nets, diffusion in latent space, etc etc. But this way is way dumber and funner, even compared to just the ol' linear interpolation of data points through latent space, which only really has meaning in a fitted net.

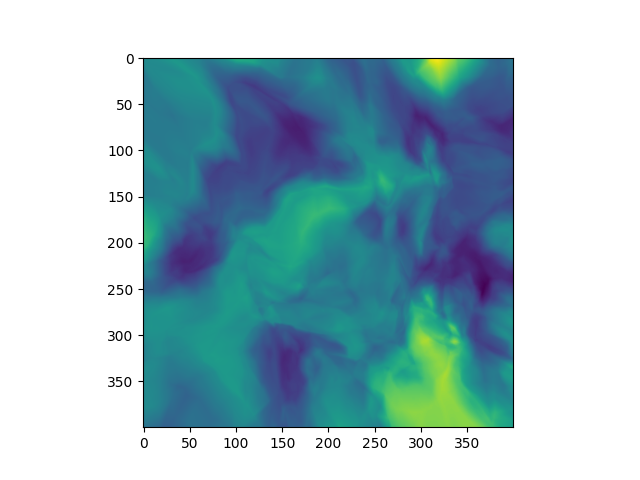

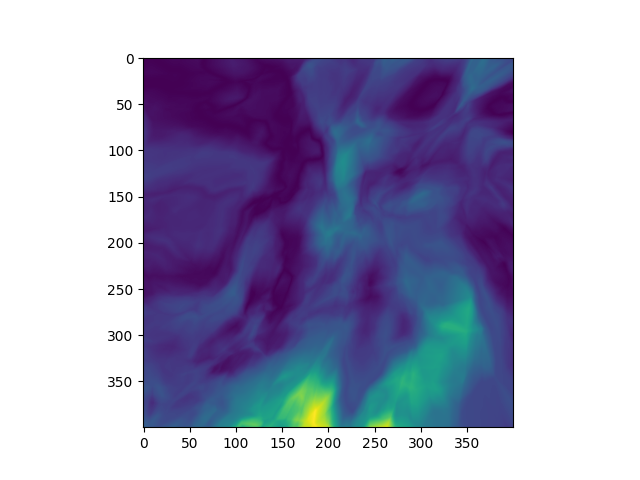

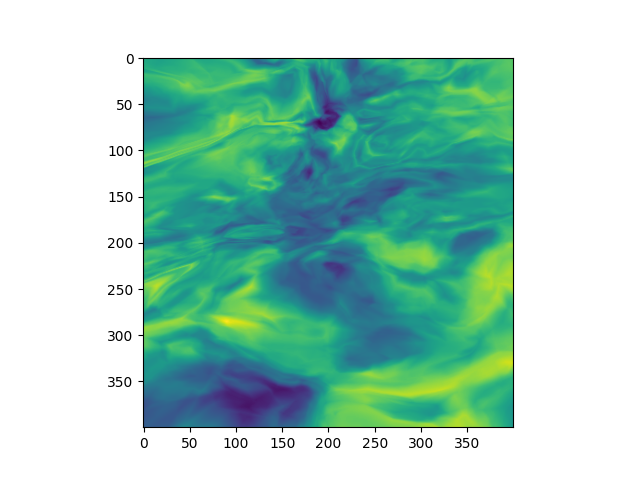

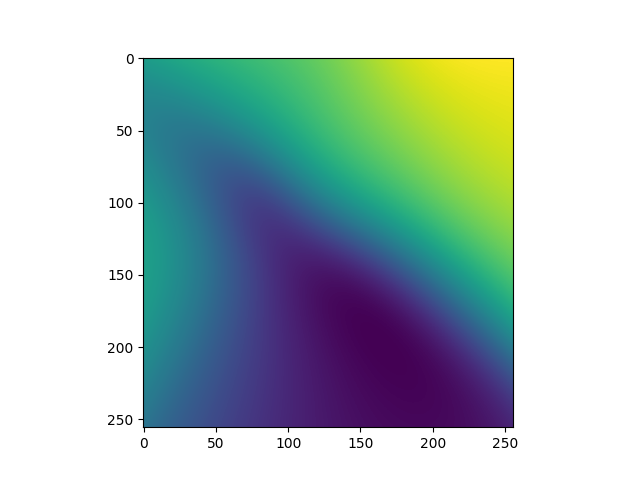

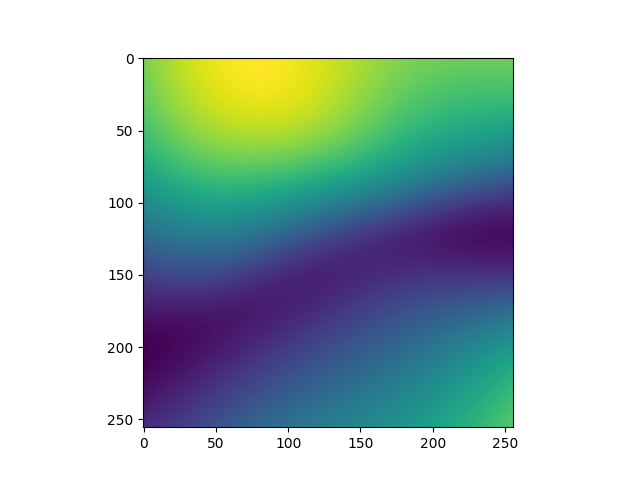

If you 'sweep' an unfitted neural net over 2 dimensions of its high-dimensional input space and read out from an output neuron, you get something that looks sometimes turbulent, sometimes laminar. I haven't tested a fitted net because I am lazy and all I wanted to do was look at the thing.
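For a rough idea of what such a sweep looks like in code, here is a minimal NumPy sketch. It is not the included `grid_sweep.py`; the names (`make_random_net`, `forward`, `grid_sweep`), the layer sizes, the weight scale, the choice to hold the other input dimensions at a fixed random point, and the use of tanh are all assumptions made for illustration.

```python
import numpy as np

def make_random_net(rng, input_dim=16, hidden_dim=64, n_hidden=4, weight_scale=2.0):
    """Build random (unfitted) MLP layers as (weights, bias) pairs.

    All sizes and the weight scale are illustrative guesses.
    """
    dims = [input_dim] + [hidden_dim] * n_hidden + [1]
    return [
        (rng.normal(0.0, weight_scale / np.sqrt(d_in), size=(d_in, d_out)),
         rng.normal(0.0, 1.0, size=d_out))
        for d_in, d_out in zip(dims[:-1], dims[1:])
    ]

def forward(layers, x, nonlinearity=np.tanh):
    """Run a batch of inputs through the net and return the single output neuron."""
    h = x
    for w, b in layers[:-1]:
        h = nonlinearity(h @ w + b)
    w, b = layers[-1]
    return (h @ w + b)[:, 0]

def grid_sweep(layers, base_point, dim_a=0, dim_b=1, extent=3.0,
               resolution=64, nonlinearity=np.tanh):
    """Vary two input dimensions over a square grid, keeping the rest at base_point."""
    axis = np.linspace(-extent, extent, resolution)
    aa, bb = np.meshgrid(axis, axis)
    points = np.tile(base_point, (resolution * resolution, 1))
    points[:, dim_a] = aa.ravel()
    points[:, dim_b] = bb.ravel()
    return forward(layers, points, nonlinearity).reshape(resolution, resolution)

rng = np.random.default_rng(0)
layers = make_random_net(rng)
base_point = rng.normal(size=16)
image = grid_sweep(layers, base_point)  # 2D array, one output value per grid cell
```

The resulting `image` array can be handed to something like matplotlib's `imshow` to get a picture. Swapping the `nonlinearity` argument for another elementwise function is the knob to play with.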

But who cares, look at the pretty pictures. I do understand that the nonlinearity in the images given here (from arbitrary runs of the included Python file `grid_sweep.py`) is this deranged norm thing. Switch it out for the included vaguely normal one if you're feeling it and see what you get.

I don't have, like, a Kolmogorov power spectrum yadda yadda 5/3 yadda yadda something something that poem about the fleas, but it sure looks like turbulence snapshots to me, going by the mark 1 eyeball. And here's some more boring laminar-looking ones, from a tanh nonlinearity.
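If anyone does want to go past the eyeball, a radially averaged power spectrum of one of these images is the usual first step. The sketch below is an assumption-laden illustration, not something from this repo: `radial_power_spectrum` and `fit_log_slope` are made-up names, and it only handles square images. Also keep in mind the 5/3 law is about velocity-field energy spectra in the inertial range, so comparing a fitted slope on these pictures to -5/3 is a loose analogy at best.

```python
import numpy as np

def radial_power_spectrum(image):
    """Radially averaged 2D power spectrum of a square image.

    Returns integer wavenumbers 1..n/2-1 and the mean power in each ring.
    """
    n = image.shape[0]
    centered = image - image.mean()  # drop the DC component
    power = np.abs(np.fft.fftshift(np.fft.fft2(centered))) ** 2
    freqs = np.fft.fftshift(np.fft.fftfreq(n)) * n  # integer wavenumbers
    kx, ky = np.meshgrid(freqs, freqs)
    k = np.rint(np.hypot(kx, ky)).astype(int)  # ring index per pixel
    sums = np.bincount(k.ravel(), weights=power.ravel())
    counts = np.bincount(k.ravel())
    k_max = n // 2
    return np.arange(1, k_max), sums[1:k_max] / counts[1:k_max]

def fit_log_slope(wavenumbers, spectrum):
    """Least-squares slope of log(spectrum) against log(wavenumber)."""
    slope, _ = np.polyfit(np.log(wavenumbers), np.log(spectrum), 1)
    return slope

# Sanity check on a synthetic image: a pure wave with 5 cycles across the grid.
n = 64
x = np.arange(n)
demo = np.tile(np.cos(2 * np.pi * 5 * x / n), (n, 1))
wavenumbers, spectrum = radial_power_spectrum(demo)
peak_wavenumber = wavenumbers[np.argmax(spectrum)]
```

On a sweep image, a roughly straight line in a log-log plot of `spectrum` against `wavenumbers` would be the thing to look for before bothering with `fit_log_slope`.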

Besicovitch dimension analysis and/or Fourier analysis coming probably never, I don't really care enough.