
Feature flags, sometimes called feature toggles or feature switches, are a powerful software development technique that allows engineering teams to decouple the release of new functionality from software deployments.

With feature flags, developers can turn specific features or code segments on or off at runtime without needing a code deployment or rollback. Organizations that adopt feature flags see improvements in key DevOps metrics like lead time for changes, mean time to recovery, deployment frequency, and change failure rate.

At Unleash, we've defined 11 principles for building a large-scale feature flag system. These principles have their roots in distributed systems design and focus on security, privacy, and scalability—critical needs for enterprise systems. By following these principles, you can create a feature flag system that's reliable, easy to maintain, and capable of handling heavy loads.

These principles are:

Let's dive deeper into each principle.

A scalable feature management system evaluates flags at runtime. Flags are dynamic, not static. If you need to restart your application to turn on a flag, that's configuration, not a feature flag.
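To make the distinction concrete, here is a minimal sketch of runtime control. The `FlagStore` class, its methods, and the `new-checkout` flag name are hypothetical and only illustrate the idea: flag state lives in memory, an update mechanism can replace it while the process runs, and application code reads it on every call.

```python
import threading


class FlagStore:
    """In-memory flag state that an update mechanism can replace at runtime."""

    def __init__(self, initial=None):
        self._lock = threading.Lock()
        self._flags = dict(initial or {})

    def replace(self, new_flags):
        # Called by a (hypothetical) update mechanism, such as a polling thread.
        with self._lock:
            self._flags = dict(new_flags)

    def is_enabled(self, name, default=False):
        with self._lock:
            return self._flags.get(name, default)


store = FlagStore({"new-checkout": False})


def checkout():
    # The flag is read on every call, so a change takes effect without a restart.
    return "new flow" if store.is_enabled("new-checkout") else "old flow"


before = checkout()
store.replace({"new-checkout": True})  # simulates an update arriving at runtime
after = checkout()
```

The same process serves the old flow before the update and the new flow after it, with no restart in between.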

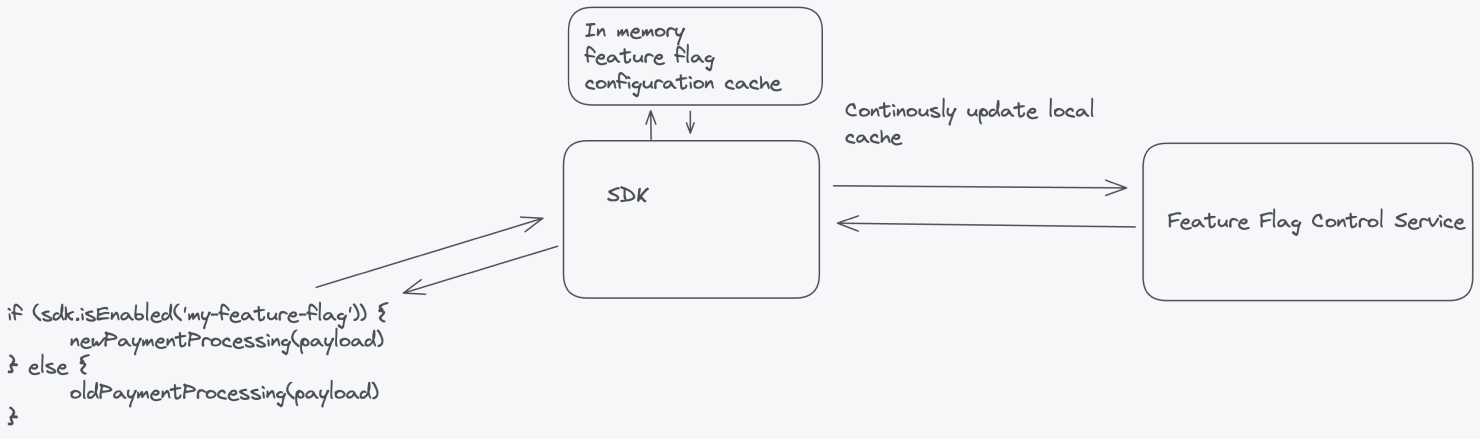

A large-scale feature flag system that enables runtime control should have, at minimum, the following components: a service to manage feature flags, a database or data store, an API layer, a feature flag SDK, and a continuous update mechanism.

Let's break down these components.

The most common use case for feature flags is to manage the rollout of new functionality. Once a rollout is complete, you should remove the feature flag from your code and archive it. Remove any old code paths that the new functionality replaces.

Avoid using feature flags for static application configuration. Application configuration should be consistent, long-lived, and loaded during application startup. In contrast, feature flags should be short-lived, dynamic, and updated at runtime. They prioritize availability over consistency and can be modified frequently.

| | Configuration system | Feature flag system |
|---|---|---|
| Lifetime and runtime behavior | Long-lived, static during runtime | Short-lived, changes during runtime |
| Example use cases | | |

To succeed with feature flags in a large organization, follow these strategies:

While most feature flags should be short-lived, there are valid exceptions for long-lived flags, including:

Your application shouldn't depend on the availability of your feature flag system. Robust feature flag systems avoid relying on real-time flag evaluations, because if the feature flag system becomes unavailable, the application can suffer degraded performance, outages, or even complete failure.

If the feature flag system fails, your application should continue running smoothly. Feature flagging should degrade gracefully, preventing any unexpected behavior or disruptions for users.
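As a rough illustration of graceful degradation, the sketch below keeps serving the last known configuration when a refresh fails and falls back to a caller-supplied default for unknown flags. `FlagClient`, `fetch_config`, and `flaky_fetch` are hypothetical names used only for this example, not part of any real SDK.

```python
class FlagClient:
    """Evaluates from a local cache and never raises into application code."""

    def __init__(self, fetch_config):
        # fetch_config is a hypothetical callable that contacts the flag service.
        self._fetch_config = fetch_config
        self._cache = {}

    def refresh(self):
        try:
            self._cache = dict(self._fetch_config())
            return True
        except Exception:
            # The service is unavailable: keep the last known configuration.
            return False

    def is_enabled(self, name, default=False):
        return self._cache.get(name, default)


responses = iter([{"dark-mode": True}])


def flaky_fetch():
    # The first call returns a configuration; later calls simulate an outage.
    try:
        return next(responses)
    except StopIteration:
        raise ConnectionError("flag service unreachable") from None


client = FlagClient(flaky_fetch)
first_refresh = client.refresh()   # succeeds and fills the cache
second_refresh = client.refresh()  # fails, the cache is left untouched
```

After the failed refresh, `client.is_enabled("dark-mode")` still answers from the cached configuration, so the application keeps running with the last state it saw.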

You can implement the following strategies to achieve a resilient architecture:

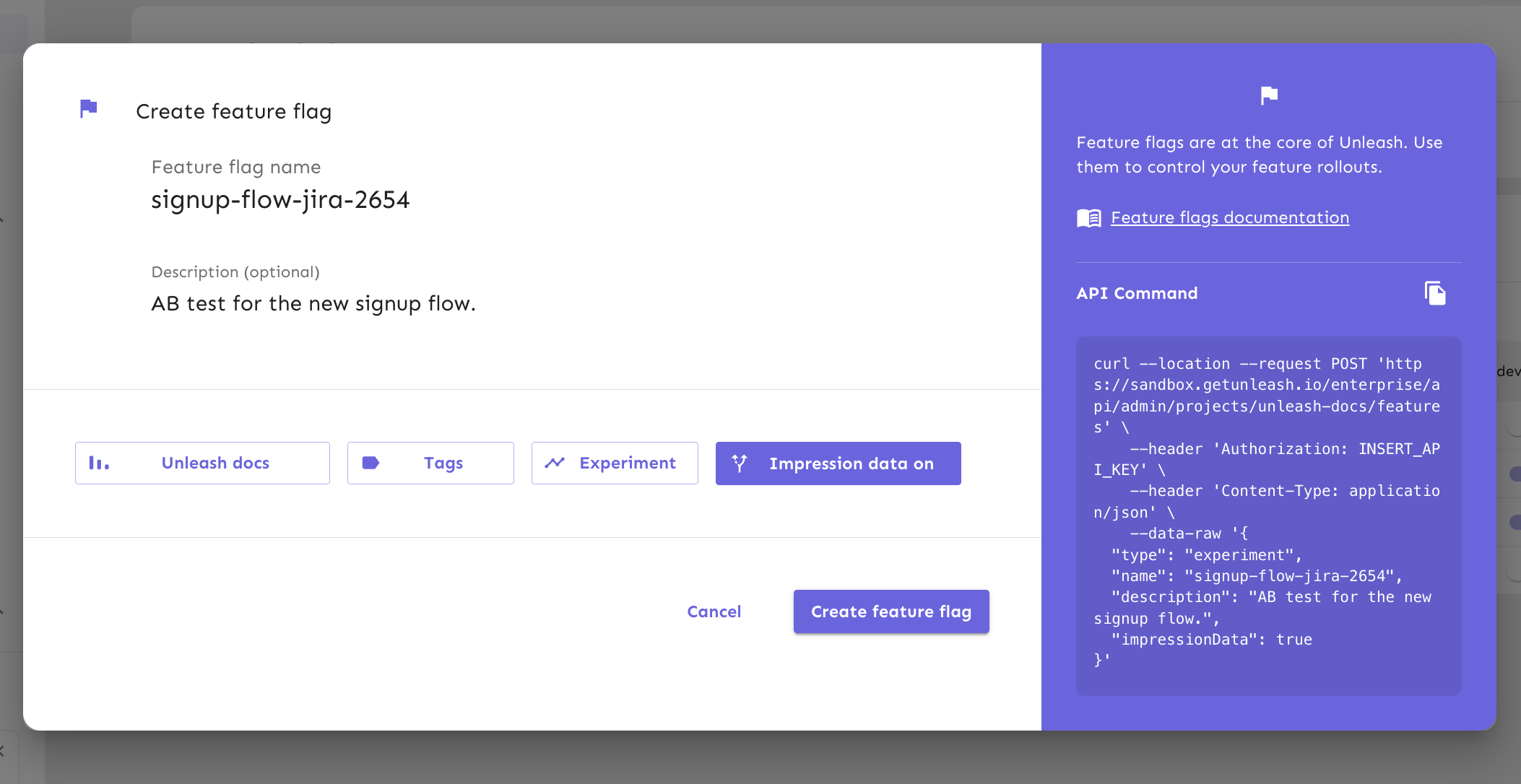

Ensure that all flags within the same Feature Flag Control Service have unique names across your entire system. Unique naming prevents the reuse of old flag names, reducing the risk of accidentally re-enabling outdated features with the same name.
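One way to enforce this is to check new names against both active and archived flags. The sketch below is a simplified in-memory illustration. `FlagRegistry` and its methods are hypothetical, and a real control service would enforce the same rule in its data store.

```python
class FlagRegistry:
    """Tracks every flag name ever used so that names are never recycled."""

    def __init__(self):
        self._active = set()
        self._archived = set()

    def create(self, name):
        # Archived names stay reserved, so stale code can't be re-enabled by accident.
        if name in self._active or name in self._archived:
            raise ValueError(f"flag name already used: {name}")
        self._active.add(name)

    def archive(self, name):
        self._active.remove(name)
        self._archived.add(name)


registry = FlagRegistry()
registry.create("new-checkout")
registry.archive("new-checkout")

try:
    registry.create("new-checkout")  # attempt to reuse an archived name
    reused = True
except ValueError:
    reused = False
```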

Unique naming has the following advantages:

Making feature flag systems open by default enables engineers, product owners, and support teams to collaborate effectively and make informed decisions. Open access encourages productive discussions about feature releases, experiments, and their impact on the user experience.

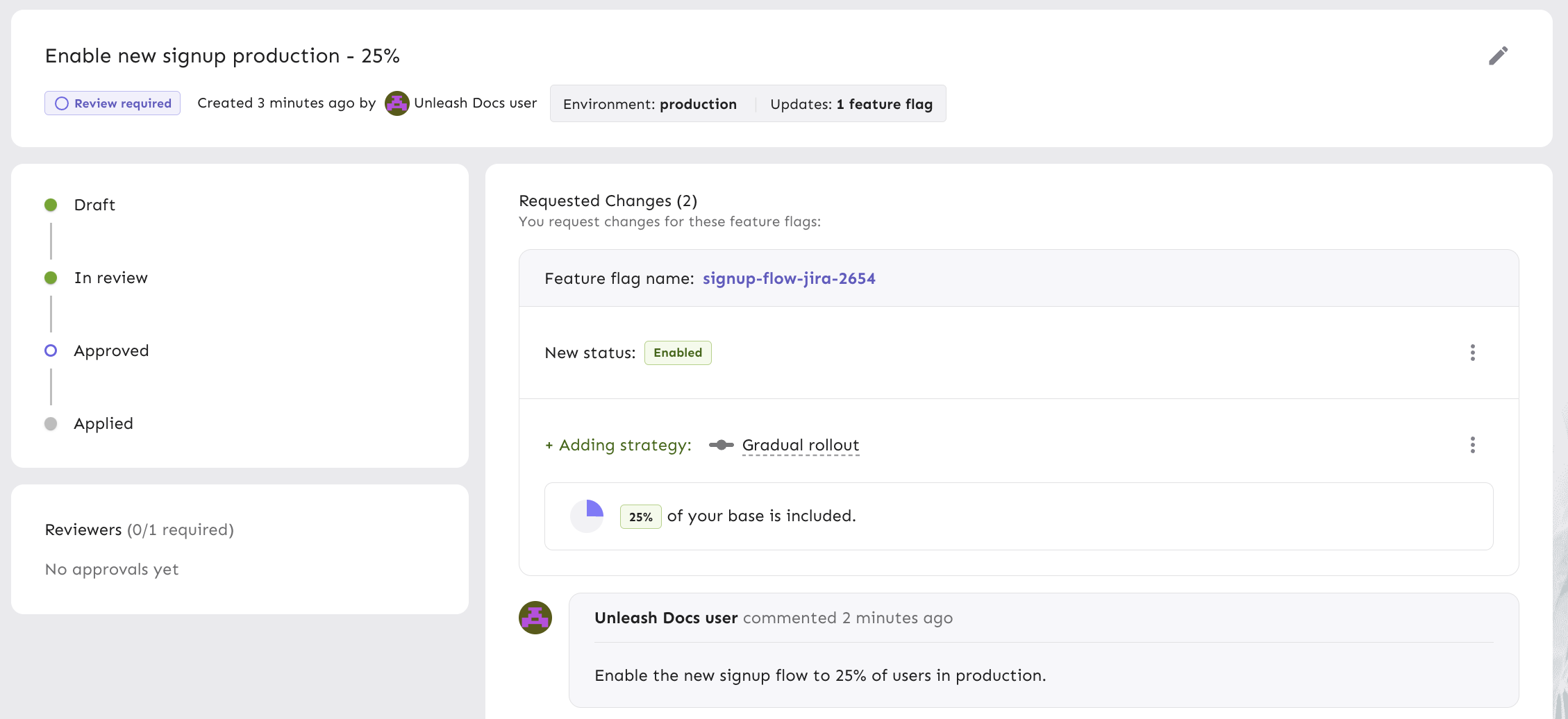

Access control and visibility are also key considerations for security and compliance. Tracking and auditing feature flag changes help maintain data integrity and meet regulatory requirements. While open access is key, it's equally important to integrate with corporate access controls, such as SSO, to ensure security. In some cases, additional controls like feature flag approvals using the four-eyes principle are necessary for critical changes.

For open collaboration, consider providing the following:

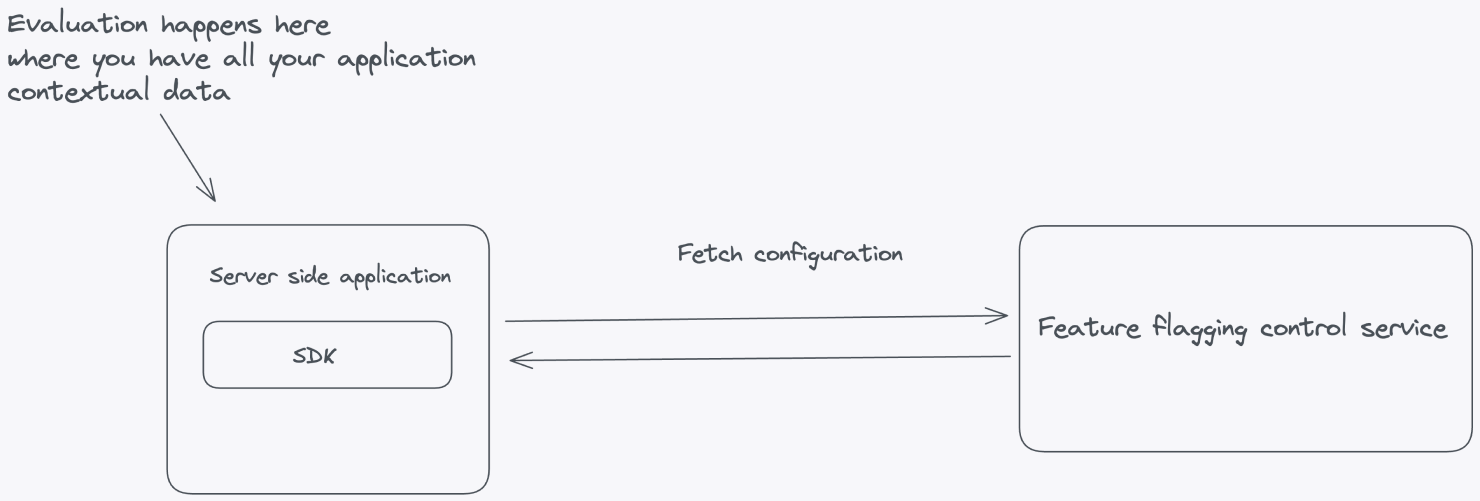

Feature flags often require contextual data for accurate evaluation, which could include sensitive information such as user IDs, email addresses, or geographical locations. To safeguard this data, follow the data security principle of least privilege (PoLP), ensuring that all Personally Identifiable Information (PII) remains confined to your application.

To implement the principle of least privilege, ensure that your Feature Flag Control Service only handles the configuration for your feature flags and passes this configuration down to the SDKs connecting from your applications.

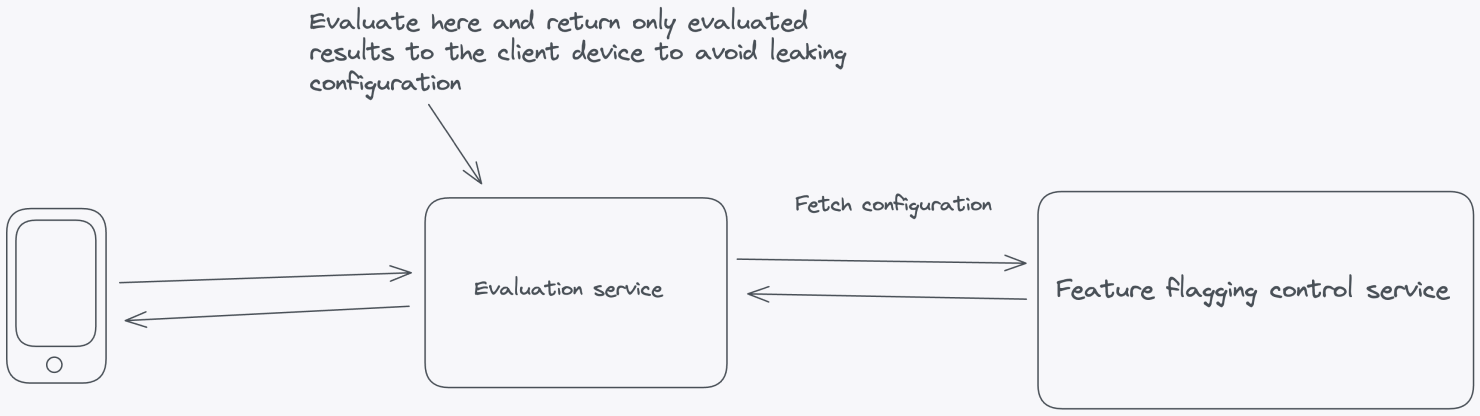

Let's look at an example where feature flag evaluation happens inside the server-side application. This is where all the contextual application data lives. The flag configuration—all the information needed to evaluate the flags—is fetched from the Feature Flag Control Service.
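A minimal sketch of this flow is shown below. The configuration shape (`enabled`, `rules`, `field`, `values`) and the `evaluate` function are assumptions made for illustration, not the format of any particular SDK. The point is that only rules travel from the control service, while the context, including PII such as the email address, never leaves the application.

```python
def evaluate(flag_config, context):
    """Return True if the flag is enabled and any rule matches the local context."""
    if not flag_config.get("enabled", False):
        return False
    rules = flag_config.get("rules", [])
    if not rules:
        return True
    return any(context.get(rule["field"]) in rule["values"] for rule in rules)


# Configuration as it might arrive from the control service: rules only, no user data.
config = {
    "beta-dashboard": {
        "enabled": True,
        "rules": [{"field": "country", "values": ["NO", "SE"]}],
    }
}

# The context is built and used only inside the application process.
context = {"user_id": "u-123", "email": "ada@example.com", "country": "NO"}

result = evaluate(config["beta-dashboard"], context)
```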

Client-side applications, where the code resides on the user's machine in browsers or mobile devices, require a different approach. Evaluating flags on the client side raises significant security concerns because it exposes potentially sensitive information such as API keys, flag data, and flag configurations. Placing these critical elements on the client side increases the risk of unauthorized access, tampering, or data breaches.

Instead of performing client-side evaluation, a more secure and maintainable approach is to evaluate feature flags within a self-hosted environment. Doing so can safeguard sensitive elements like API keys and flag configurations from potential client-side exposure. This strategy involves a server-side evaluation of feature flags, where the server makes decisions based on user and application parameters and then securely passes down the evaluated results to the frontend without any configuration leaking.

In Principle 1, we proposed a set of architectural components for building a feature flag system. The same principles apply here, with additional suggestions for achieving local evaluation. For client-side setups, use a dedicated evaluation server that can evaluate feature flags and pass evaluated results to the frontend SDK.

SDKs make it more convenient to work with feature flags. Depending on the context of your infrastructure, you need different types of SDKs to talk to your feature flagging service. Backend SDKs should fetch configurations from the Feature Flag Control Service and evaluate flags locally using the application's context, reducing the need for frequent network calls.

For frontend applications, SDKs should send the context to an evaluation server and receive the evaluated results. The evaluated results are then cached in memory in the client-side application, allowing quick lookups without additional network overhead. This provides the performance benefits of local evaluation while minimizing the exposure of sensitive data.
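The frontend pattern can be sketched as follows. Python is used here only to stay consistent with the other examples in this guide, and `FrontendFlagCache` and `fake_evaluation_server` are hypothetical stand-ins for a frontend SDK and an evaluation server.

```python
class FrontendFlagCache:
    """Holds evaluated results in memory so that lookups need no network call."""

    def __init__(self, fetch_evaluated):
        # fetch_evaluated is a hypothetical call to the evaluation server.
        self._fetch_evaluated = fetch_evaluated
        self._results = {}

    def update(self, context):
        # One round trip: send the context, receive evaluated results only.
        self._results = dict(self._fetch_evaluated(context))

    def is_enabled(self, name):
        return self._results.get(name, False)


calls = []


def fake_evaluation_server(context):
    # Stands in for the evaluation server; it returns results, never rules.
    calls.append(context)
    return {"new-navbar": context.get("plan") == "pro"}


cache = FrontendFlagCache(fake_evaluation_server)
cache.update({"plan": "pro"})
lookups = [cache.is_enabled("new-navbar") for _ in range(3)]
```

Three lookups are served from memory after a single call to the evaluation server, and the client only ever sees boolean results rather than the flag configuration.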


For optimal performance, you should evaluate feature flags as close to your users as possible. Building on the server-side evaluation approach from Principle 2, let's look at how evaluating flags locally can bring key benefits in terms of performance, cost, and reliability:

In summary, this principle emphasizes optimizing performance while protecting end-user privacy by evaluating feature flags as close to the end user as possible. This also leads to a highly available feature flag system that scales with your applications.

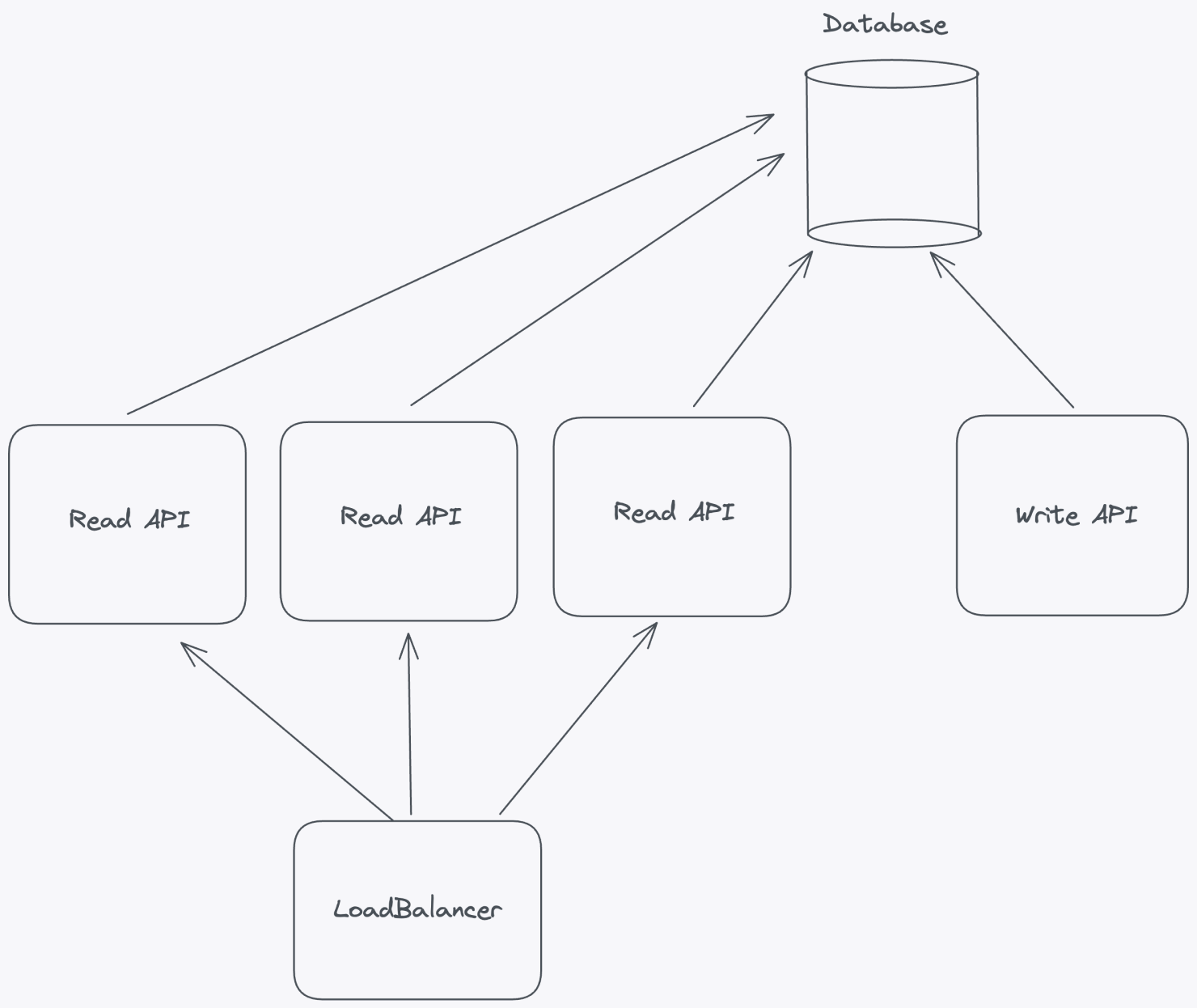

When designing a scalable feature flag system, one of the most effective strategies is to separate read and write operations into distinct APIs. This architectural decision not only allows you to scale each component independently but also provides better performance, reliability, and control.

By decoupling read and write operations, you gain the flexibility to scale horizontally based on the unique demands of your application. For example, if read traffic increases, you can add more servers or containers to handle the load without needing to scale the write operations.

The benefits of decoupling read and write operations extend beyond just scalability; let's look at a few others:

Minimizing the size of feature flag payloads is a critical aspect of maintaining the efficiency and performance of a feature flag system. Payload size can vary based on targeting rule complexity. For example, targeting based on individual user IDs may work with small user bases but becomes inefficient as the user base grows.

Avoid storing large user lists directly in the feature flag configuration, which can lead to scaling issues. Instead, categorize users into logical groups at a higher layer (for example, by subscription plan or location) and use group identifiers for targeting within the feature flag system.
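The following sketch shows the idea under simplified assumptions. The segment names, the `resolve_segments` function, and the flag shape are hypothetical. The application resolves which groups a user belongs to, and the flag configuration only lists group identifiers, so its size does not grow with the user base.

```python
def resolve_segments(user):
    """Map a user to logical groups in the application layer."""
    segments = set()
    if user["plan"] == "enterprise":
        segments.add("enterprise-customers")
    if user["country"] in {"NO", "SE", "DK"}:
        segments.add("nordics")
    return segments


# The flag payload stays small: it references groups, not individual user IDs.
flag = {"name": "bulk-export", "segments": ["enterprise-customers"]}


def is_enabled(flag, user):
    return bool(resolve_segments(user) & set(flag["segments"]))


enterprise_user = {"id": "u-1", "plan": "enterprise", "country": "US"}
free_user = {"id": "u-2", "plan": "free", "country": "NO"}

enterprise_result = is_enabled(flag, enterprise_user)
free_result = is_enabled(flag, free_user)
```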

Keeping the feature flag payload small results in:

For more insights into reducing payload size, visit our Best practices for using feature flags at scale guide.

Feature flagging solutions are indispensable tools in modern software development, enabling teams to manage feature releases and experiment with new functionality. However, one requirement is non-negotiable in any feature flag solution: a consistent user experience. Feature flagging solutions must guarantee that the same user gets the same experience every time, especially during percentage-based gradual rollouts.
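A common way to get this stickiness is to derive a stable bucket from the flag name and a user identifier, then compare the bucket with the rollout percentage. The sketch below uses SHA-256 from the Python standard library as a stand-in hash. The `bucket` and `in_rollout` functions are hypothetical, and production SDKs may use a different hash function and normalization.

```python
import hashlib


def bucket(flag_name, user_id):
    """Map a flag and user pair to a stable bucket between 1 and 100."""
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100 + 1


def in_rollout(flag_name, user_id, percentage):
    # The same user always lands in the same bucket, so the answer is stable.
    return bucket(flag_name, user_id) <= percentage


first = in_rollout("new-search", "user-42", 30)
second = in_rollout("new-search", "user-42", 30)
```

Because the bucket is fixed per user and flag, raising the percentage only adds users to the rollout: anyone who had the feature at 30% still has it at 60%. Including the flag name in the hash input also means that different flags do not always target the same users.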

Developer experience is a critical factor to consider when implementing a feature flag solution. A positive developer experience enhances the efficiency of the development process and contributes to the overall success and effectiveness of feature flagging. One key aspect of developer experience is ensuring the testability of the SDK and providing tools for developers to understand how and why feature flags are evaluated.
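For testability, it helps if application code depends on a small flag interface that a test can replace with fixed values. The sketch below assumes a hypothetical `StaticFlags` test double and a `greeting` function invented for this example. It is not the testing API of any specific SDK.

```python
class StaticFlags:
    """Test double that returns fixed flag values without any network access."""

    def __init__(self, values):
        self._values = dict(values)

    def is_enabled(self, name, default=False):
        return self._values.get(name, default)


def greeting(flags):
    # Application code depends only on the is_enabled interface.
    if flags.is_enabled("new-dashboard"):
        return "Welcome to the new dashboard"
    return "Welcome"


# Exercise both code paths deterministically.
with_flag = greeting(StaticFlags({"new-dashboard": True}))
without_flag = greeting(StaticFlags({"new-dashboard": False}))
```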

To ensure a good developer experience, you should provide the following:

Thank you for reading. Our motivation for writing these principles is to share what we've learned building a large-scale feature flag solution with other architects and engineers solving similar challenges. Unleash is open-source, and so are these principles. Have something to contribute? Open a PR or discussion on our GitHub.

To learn more about Unleash architecture and implementation basics, check out this webinar:

Click to load video

An introduction to the Unleash Edge product and its scaling capabilities:

Click to load video

This FAQ section addresses common questions about using feature flags effectively and managing them at scale.

Keep flags short-lived and localize the logic. Evaluate a flag once per request and pass the result down instead of re-checking it in multiple places. Use a small wrapper or abstraction layer around your SDK calls, and delete the flag and old code path as soon as the rollout finishes. See Managing feature flags in code for more recommendations.
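As a rough sketch of this advice, the example below evaluates a flag once at the top of a request and passes an immutable result to the code that needs it. `Flags`, `RequestFlags`, `fake_sdk_is_enabled`, and the render functions are hypothetical names for illustration, and the SDK lookup is replaced by a fake so that the example is self-contained.

```python
from dataclasses import dataclass


class Flags:
    """Thin wrapper: the only place in the codebase that talks to the SDK."""

    def __init__(self, sdk_is_enabled):
        # sdk_is_enabled is a hypothetical SDK lookup: (name, context) -> bool.
        self._sdk_is_enabled = sdk_is_enabled

    def new_pricing(self, context):
        return self._sdk_is_enabled("new-pricing", context)


@dataclass(frozen=True)
class RequestFlags:
    """Flag decisions for one request, evaluated once and then passed down."""

    new_pricing: bool


def render_cart(decided):
    return "cart:v2" if decided.new_pricing else "cart:v1"


def render_summary(decided):
    return "summary:v2" if decided.new_pricing else "summary:v1"


def handle_request(flags, context):
    # Evaluate once at the top of the request, then pass the result down.
    decided = RequestFlags(new_pricing=flags.new_pricing(context))
    return render_cart(decided) + " | " + render_summary(decided)


sdk_calls = []


def fake_sdk_is_enabled(name, context):
    sdk_calls.append(name)
    return context.get("plan") == "pro"


page = handle_request(Flags(fake_sdk_is_enabled), {"plan": "pro"})
```

Both render functions see the same decision, and the SDK is consulted only once per request. When the rollout finishes, the wrapper method and the `RequestFlags` field are the only flag-specific code left to delete.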

Teams sometimes think in terms of assigning numeric IDs or indices to feature flags. In practice, this isn't necessary. What really matters is centralized management and unique, descriptive names. A central feature flag service, like Unleash, ensures consistent definitions across systems, makes flags easy to find, and prevents accidental reuse. Unique names provide the same benefits an index would, but in a more human-readable and scalable way.

You can store on/off values in a config file, and your app will read them at startup. But that's closer to static configuration than true feature flagging. Real feature flags are evaluated at runtime with SDKs that fetch updates dynamically. Tools like Unleash provide runtime control, instant rollbacks, and gradual rollouts that config files can't.

Evaluate feature flags once per request and pass the results down, rather than checking the same flag everywhere. Keep the if/else branching simple, use wrappers or helpers to hide SDK details, and remove flags as soon as they're no longer needed. See Managing feature flags in code for more recommendations.

Treat feature flags like technical debt. Give them owners, set expiration dates, and add cleanup tasks to your backlog. Archive old flags after removal so you keep an audit trail. Lifecycle states and other automation in Unleash make the cleanup process much easier.

Use feature branches to isolate development work before it's ready for production. Use feature flags to control exposure after code is deployed: for progressive rollouts, experiments, or kill switches. Many teams combine trunk-based development with flags to reduce merge conflicts while still having control in production.

Set an expiration date and assign an owner when you create the flag. Track cleanup like any other piece of technical debt, and use automation and tooling to surface and clean up stale flags.

Organize flags into projects and environments, enforce unique names, and keep payloads small by using segments instead of large targeting lists. Apply access control and change requests for your production environments and use audit logs for visibility. A management platform like Unleash provides these features out of the box, making it easier to scale.

The key is to keep flags short-lived. Test both code paths while the flag is active, and remove the flag and old path once the rollout finishes. Archive stale flags for audit purposes. In Unleash, lifecycle stages help you identify and clean up unused flags.

Unleash provides extensive SDK support across diverse tech stacks, including server-side languages and runtimes like Node.js, Java, Go, PHP, Python, Ruby, Rust, and .NET, as well as frontend and mobile platforms such as React, JavaScript, Vue, iOS, and Android.

In addition, the community maintains SDKs for other languages and frameworks, such as Elixir, Clojure, Dart/Flutter, Haskell, Kotlin, Scala, ColdFusion, and more.

This broad support means you can use the same feature flagging system across backend services, frontend apps, and mobile clients. Other tools exist, but Unleash comes with open-source flexibility and helps you scale from a small team to thousands of developers for any use case.

Evaluate feature flags at the highest level and once per request. Keep the branching or conditional logic simple. Use logging for visibility and remove the flag quickly once it's served its purpose. With Unleash SDKs, you also get local caching and server-side evaluation for performance and security.

Unleash provides a robust Go SDK for implementing feature flags. Other notable open-source options include Go Feature Flag, which is written in Go, and Flagr, also a Go-based microservice for feature flagging.

Complexity comes from keeping too many flags active at once and spreading evaluations throughout the code. The best way to manage this is to keep flags short-lived with clear ownership and a planned end date, then remove them once the rollout is complete. Evaluate each flag once at the highest practical level and pass the result down, so behavior stays consistent. In a microservices setup, perform the evaluation at the edge and propagate the result through the request. Centralize flag names and use a wrapper service around the SDK to handle logging, defaults, and consistency. Finally, archive flags after removal to maintain history without adding clutter.

See Managing feature flags in code for more recommendations.