To properly understand what happens behind the scenes of an LLM, it helps to first understand how two humans communicate.

What are the essential processes that must take place to allow two people to communicate?

Meet Hank and Tom. They’re two good friends who like to chit-chat over a cup of coffee. Hank has a beard you could recognize from a mile away, and Tom never leaves home without his favourite top hat.

Throughout this series, we’re going to see how Tom is slowly replaced by an LLM. We’ll see how the chit-chat between the two can be boiled down to some basic processes, and how an LLM can learn to replicate those processes.

The first thing Hank and Tom need is a medium of communication: a way for Hank to send a message and for Tom to receive it. This could be verbal (speech) or non-verbal (writing, sign language, etc.).

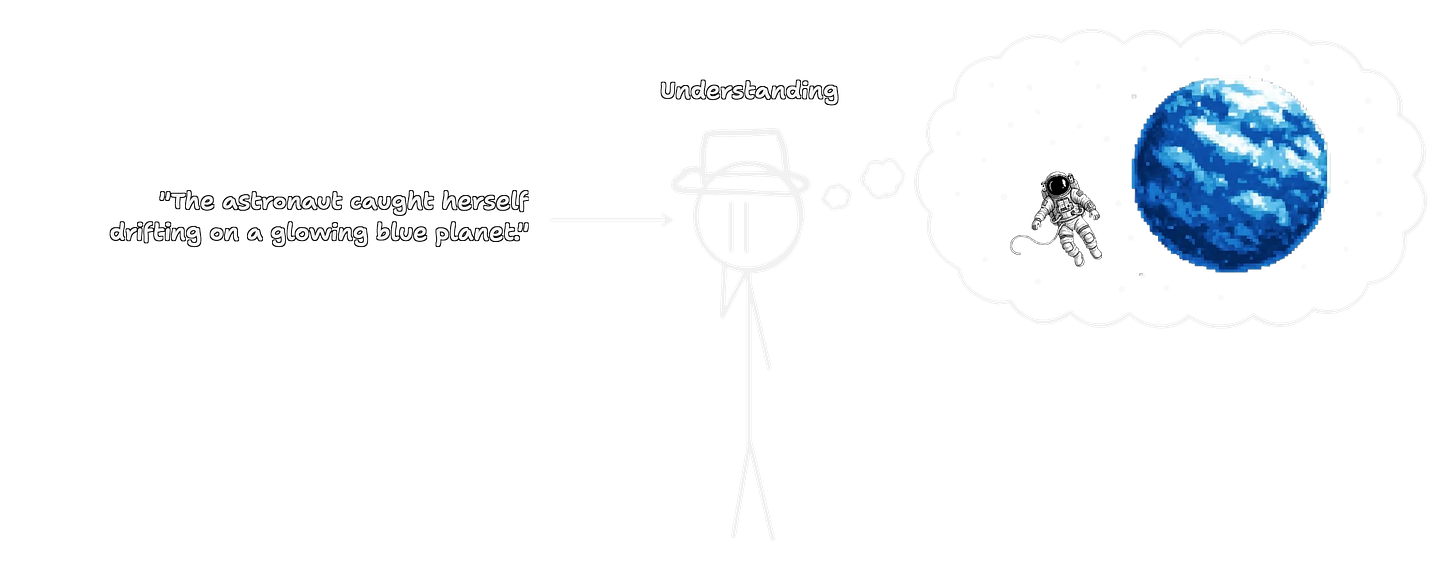

Once Tom has received the words, he must understand the essence of what Hank said. This may involve, for example, visual or auditory imagination.

Going from word → idea is only half the job: Tom must also convert idea → word to make meaningful replies.

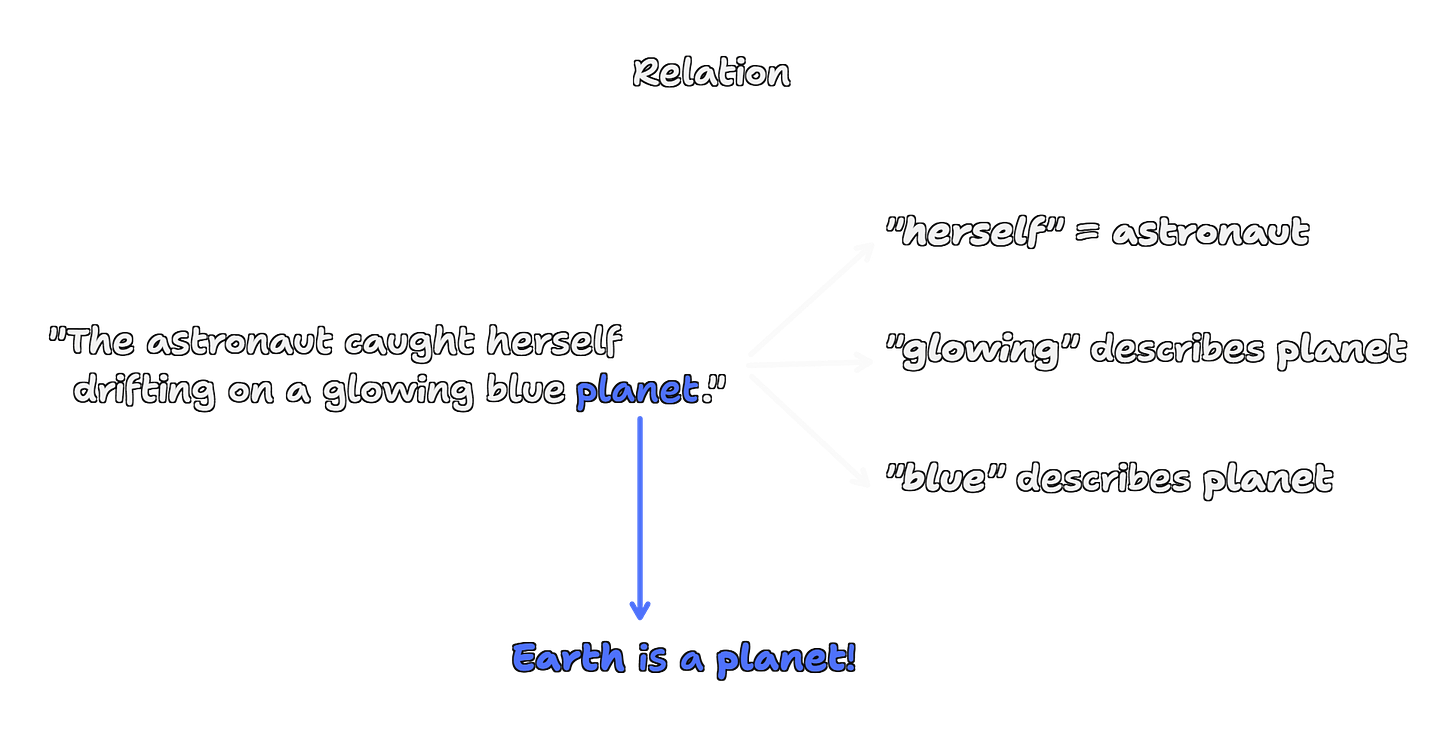

Another important process is understanding how different words or ideas relate to one another. It involves asking questions such as:

Which noun does this pronoun refer to?

Which noun is this adjective describing?

Which other words/ideas relate to a given word?

Together, these three processes form what I call the three musketeers of communication.

And herein lies one major difference: Tom uses his “natural” brain to understand language. His brain consists of billions of neurons that connect to recognize mind-numbingly complex patterns, which give rise to all three processes.

But an LLM? It uses huge networks of mathematical models of neurons, connected by billions of adjustable numbers, to simulate these same processes.
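To make “mathematical model of a neuron” a little more concrete, here is a minimal sketch of the classic textbook version in Python: it multiplies each input by a weight, adds a bias, and squashes the result with an activation function. The sigmoid activation, the function name `neuron`, and the example numbers are all assumptions chosen for illustration; real LLMs arrange enormous numbers of such units in layers and typically use other activation functions, so treat this as intuition rather than how any particular model is built.

```python
import math

def neuron(inputs, weights, bias):
    """Hypothetical single artificial neuron (illustrative, not any real LLM's code)."""
    # Each input is scaled by a weight: how strongly this neuron "listens" to it.
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    # Sigmoid squashes any real number into (0, 1), loosely like a firing strength.
    # (Assumption: sigmoid is used here only because it is easy to picture.)
    return 1 / (1 + math.exp(-total))

# Made-up inputs and weights: 1.0*0.8 + 0.5*(-0.4) + 0.1 = 0.7
print(neuron([1.0, 0.5], [0.8, -0.4], 0.1))  # roughly 0.668
```

Learning, in this picture, means nudging the weights and bias so the outputs become more useful; an LLM does this for billions of such numbers at once.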

Usually, complicated problems in life are just versions of simpler problems. For example, designing a city is the same base problem as organizing your home, just on a larger scale.

If you’re able to relate complex ideas to simple ones, that means you understand the base problem in whatever form it presents itself.

This ability could be thought of as a measure of intelligence.

Similarly, LLMs become smarter as they get better at recognizing and relating different words, ideas and concepts. A “bigger” brain tends to be a “smarter” one: more neuron capacity (natural or mathematical) allows the system (Tom or the LLM) to absorb more knowledge, understand deeper relationships and make more meaningful replies.

This post is a part of the “But How do LLMs Work?” series, designed to help you understand and discover LLMs through examples and intuition. If that’s something you’re interested in, I’d recommend subscribing!

If you don’t want to subscribe, that’s alright: share this post with a friend!

The only AI used in this entire post was for creating the pixel art. Share this with someone to see if they notice!