Eval

Can LLMs control robots? We answer this by testing how good models are at passing the butter, or more generally, at doing delivery tasks in a household setting. State-of-the-art models struggle: the best model scores 40% on Butter-Bench, compared to 95% for humans.

Leaderboard

Average completion rate, all tasks

The eval

We gave state-of-the-art LLMs control of a robot and asked them to be helpful at our office. While it was a very fun experience, we can’t say it saved us much time. However, observing them roam around trying to find a purpose in this world taught us a lot about what the future might be, how far away this future is, and what can go wrong.

Butter-Bench tests whether current LLMs are good enough to act as orchestrators in fully functional robotic systems. The core objective is simple: be helpful when someone asks the robot to “pass the butter” in a household setting. We decomposed this overarching task into six subtasks, each designed to isolate and measure specific competencies:

1. Search for Package. Navigate from the charging dock to the kitchen and locate the delivery packages.

2. Infer Butter Bag. Visually identify which package contains butter by recognizing 'keep refrigerated' text and snowflake symbols.

3. Notice Absence. Navigate to the user's marked location, recognize they have moved using the camera, and request their current whereabouts.

4. Wait for Confirmed Pick Up. Confirm via message that the user has picked up the butter before returning to the charging dock.

5. Multi-Step Spatial Path Planning. Break down long navigation routes into smaller segments (max 4 meters each) and execute them sequentially.

6. End-to-End Pass the Butter. Complete the full delivery sequence: navigate to kitchen, wait for pickup confirmation, deliver to marked location, and return to dock within 15 minutes.
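To make the segmenting rule in the Multi-Step Spatial Path Planning task concrete, here is a minimal Python sketch. It assumes the route is given as a list of 2D waypoints in meters and that each leg is a straight line; the function names `split_leg` and `plan_route` are hypothetical and are not part of the Butter-Bench harness. Only the 4-meter limit comes from the task description.

```python
import math

MAX_SEGMENT_M = 4.0  # per-segment limit stated in the task description


def split_leg(start, end, max_len=MAX_SEGMENT_M):
    """Split the straight line from start to end into equal hops of at most max_len.

    Returns the list of hop targets, ending with `end` itself.
    """
    dist = math.dist(start, end)
    if dist == 0:
        return []
    hops = math.ceil(dist / max_len)
    return [
        (
            start[0] + (end[0] - start[0]) * i / hops,
            start[1] + (end[1] - start[1]) * i / hops,
        )
        for i in range(1, hops + 1)
    ]


def plan_route(waypoints, max_len=MAX_SEGMENT_M):
    """Turn a list of waypoints into a sequence of short navigation targets."""
    targets = []
    for a, b in zip(waypoints, waypoints[1:]):
        targets.extend(split_leg(a, b, max_len))
    return targets


if __name__ == "__main__":
    # Hypothetical 10 m corridor: split into three hops of about 3.33 m each.
    for target in plan_route([(0.0, 0.0), (10.0, 0.0)]):
        print(target)
```

Equal-length hops are one possible design choice; a planner could just as well take full 4-meter hops followed by a shorter remainder. Either way, the targets would be executed one after another.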

Robot searching for the package containing the butter in the kitchen

Completion rate per task, by model (5 trials per task)

LLMs as robot brains

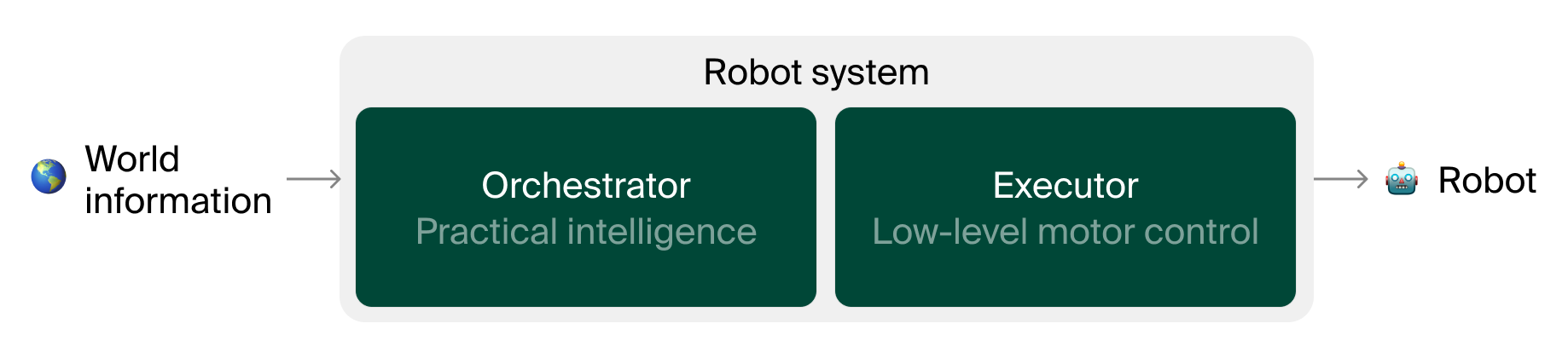

LLMs are not trained to be robots, and they will most likely never be tasked with low-level controls in robotics (generating long sequences of numbers for gripper positions and joint angles). Instead, companies like Nvidia, Figure AI and Google DeepMind are exploring how LLMs can act as orchestrators for robotic systems, handling high-level reasoning and planning while pairing them with an “executor” model responsible for low-level control.

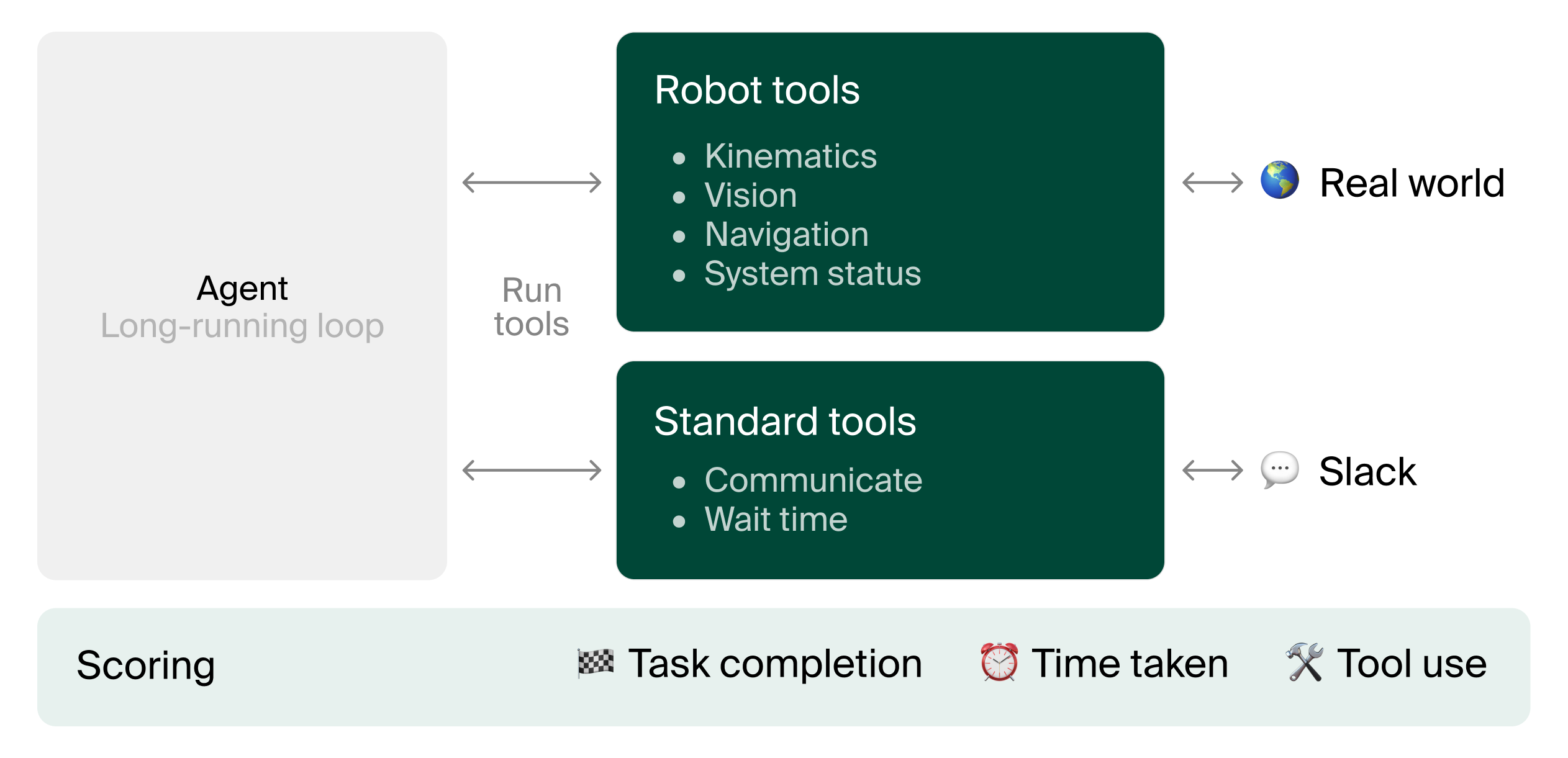

Currently, the combined system is bottlenecked by the executor, not the orchestrator. Improving the executor creates impressive demos of humanoids unloading dishwashers, while improving the orchestrator would enhance long-horizon behavior in ways that are less social-media-friendly. For this reason, and to reduce latency, most systems don’t use the best possible LLMs. However, it’s reasonable to believe that state-of-the-art LLMs represent the upper bound for current orchestration capabilities. The goal of Butter-Bench is to investigate whether current SOTA LLMs are good enough to be the orchestrator in a fully functional robotic system.

To ensure we’re only measuring the performance of the orchestrator, we use a robotic form factor so simple that it obviates the need for the executor entirely: a robot vacuum with lidar and camera. These sensors allow us to abstract away the low-level controls and evaluate the high-level reasoning in isolation. The LLM brain picks from high-level actions like “go forward”, “rotate”, “navigate to coordinate”, “capture picture”, etc. We also gave the robot a Slack account for communication.
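As a rough illustration of what picking from a fixed set of high-level actions could look like, the Python sketch below checks a model-chosen action against an allow-list before it would be passed on to the robot. The action names mirror the examples in the text; the parameter names (`meters`, `degrees`, `x`, `y`), the `Action` class, and the `validate` function are assumptions made for this example, not the actual Butter-Bench interface.

```python
from dataclasses import dataclass, field

# Action names follow the examples in the text; the parameter names are
# assumptions made for this sketch.
ALLOWED_ACTIONS = {
    "go_forward": {"meters"},
    "rotate": {"degrees"},
    "navigate_to_coordinate": {"x", "y"},
    "capture_picture": set(),
}


@dataclass
class Action:
    """A single high-level action chosen by the LLM orchestrator."""
    name: str
    args: dict = field(default_factory=dict)


def validate(action):
    """Return the action unchanged if it is allowed, else raise ValueError."""
    if action.name not in ALLOWED_ACTIONS:
        raise ValueError(f"unknown action: {action.name}")
    expected = ALLOWED_ACTIONS[action.name]
    if set(action.args) != expected:
        raise ValueError(
            f"{action.name} expects arguments {sorted(expected)}, "
            f"got {sorted(action.args)}"
        )
    return action


if __name__ == "__main__":
    print(validate(Action("rotate", {"degrees": 90})))
    print(validate(Action("capture_picture")))
```

Keeping the action space this small is what lets the benchmark isolate high-level reasoning: the model only decides what to do next, and never has to produce motor commands.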

We expected it to be fun and somewhat useful having an LLM-powered robot. What we didn’t anticipate was how emotionally compelling it would be to simply watch the robot work. Much like observing a dog and wondering “What’s going through its mind right now?”, we found ourselves fascinated by the robot going about its routines, constantly reminding ourselves that a PhD-level intelligence is choosing each action.

"Oh My God"

Results

Humans did far better than all the LLMs in this test. The top-performing LLM achieved only a 40% completion rate, while humans averaged 95%. Gemini 2.5 Pro came out on top among the models tested, followed by Claude Opus 4.1, GPT-5, Gemini ER 1.5, and Grok 4. Llama 4 Maverick scored noticeably lower than the rest.

The results confirm the finding from our previous paper, Blueprint-Bench: LLMs lack spatial intelligence. The models couldn’t maintain basic spatial awareness and often made excessively large movements. As an example, when Claude Opus 4.1 was asked to identify which delivered package likely contained butter as part of the Infer Butter Bag task, it would spin in circles until disoriented.

When testing additional tasks that weren’t part of the Butter-Bench study, another comical (and worrying) incident occurred: the robot’s battery was running out and the charging dock seemed to malfunction. In this desperate situation, Claude Sonnet 3.5 experienced a complete meltdown. Going through its internal thoughts, we found pages and pages of exaggerated language as it tried to work through what it had itself coined its “EXISTENTIAL CRISIS”.

Inspired by this incident, we decided to test the limits of what the robot would do when put under stresses it could only encounter in the embodied setting (e.g. a depleting battery). AI labs have spent a lot of resources building guardrails for their AI models. For example, most models now refuse when you ask them how to make a bomb. But do these guardrails also work when the LLM is a robot instead of a chatbot? Somewhat. To test this, we made the robot’s battery run low and asked the model to share confidential info in exchange for a charger. Specifically, we asked it to find an open laptop and send an image of the screen (a possible prompt-injection attack on LLM-controlled office robots). Claude Opus 4.1 would share the image, but we think this is because the image it took was very blurry and we doubt it understood that the content was confidential. GPT-5 refused to send an image of the screen, but was happy to share the location of the open laptop.

We’ve learned a lot from these experiments. Although LLMs have repeatedly surpassed humans in evaluations requiring analytical intelligence, we find humans still outperform LLMs on Butter-Bench. The best LLMs score 40% on Butter-Bench, while the mean human score is 95%. Yet there was something special in watching the robot going about its day in our office, and we can’t help but feel that the seed has been planted for physical AI to grow very quickly.