We rebuilt Airbyte from the ground up to deliver what our customers have been asking for: blazing-fast data syncs. MySQL-to-S3 syncs jumped from 23 MB/s to 110 MB/s, and the system as a whole can now sustain around 100 MB/s. This post details the engineering journey behind these gains: what we rebuilt, why, and the challenges we solved along the way.

The Challenge: Speed Without Sacrificing Flexibility

When we set out to increase sync speed while reducing resource consumption, we faced a deceptively simple problem with countless variables. Source and destination systems could easily become bottlenecks outside our control. So we focused on what we could control: how data moved between containers in Airbyte's architecture.

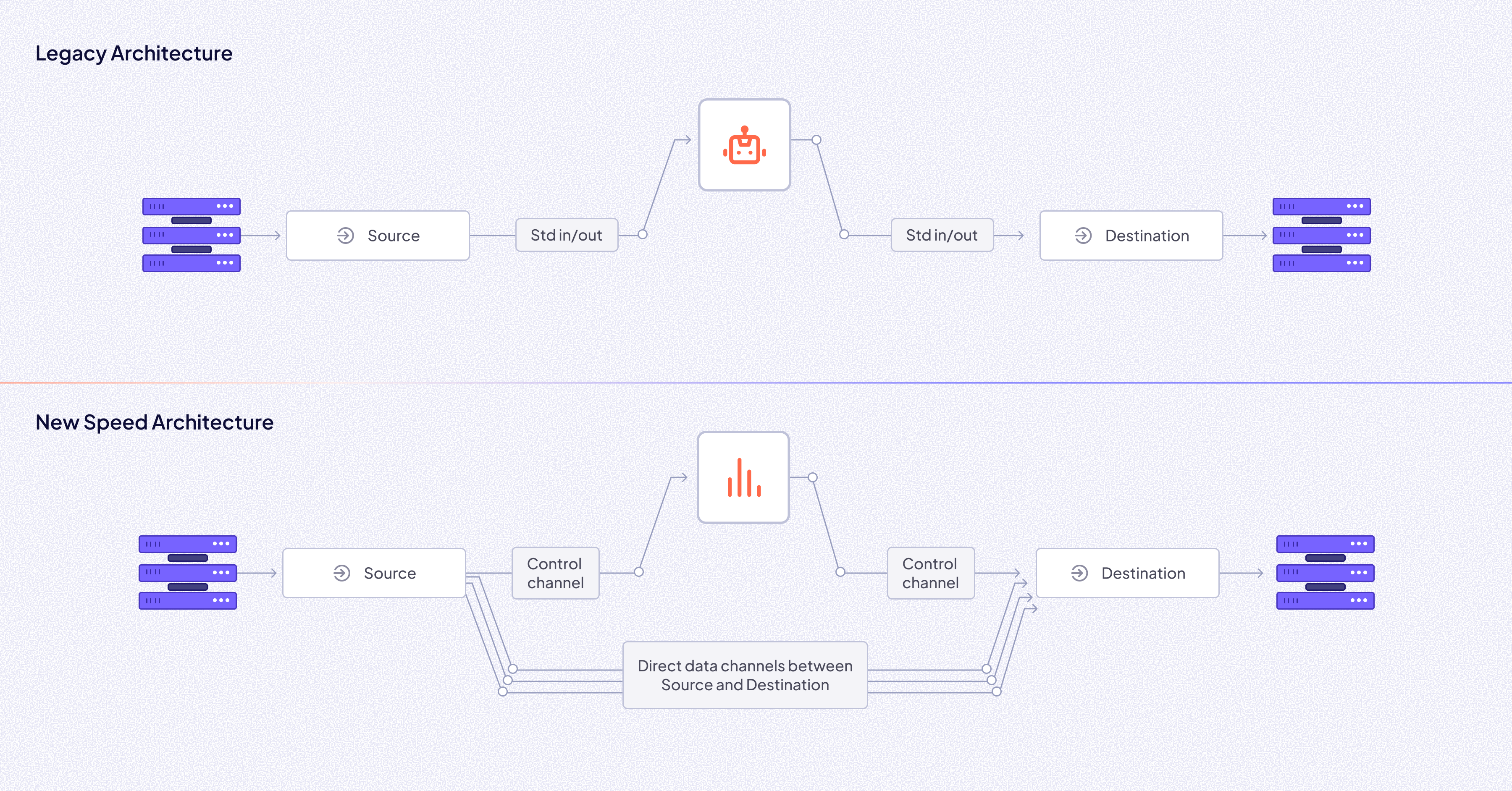

In our original setup, data flowed from source to orchestrator to destination through standard I/O pipes, with everything serialized as JSON. This became ground zero for our optimization efforts.

The Journey: Five Experiments That Changed Everything

Profiling the Baseline

We started by profiling our baseline performance using async-profiler (a fantastic tool for Java optimizations). We moved dummy JSON records through the pipeline and watched where CPU cycles went.

The results were stark: JSON serialization and deserialization consumed massive CPU resources. Here's what happened on every record:

- Source created an AirbyteMessage, converted it to JSON, sent it through the pipe

- Orchestrator parsed JSON back to AirbyteMessage, re-serialized to JSON, passed it along

- Destination parsed JSON back to AirbyteMessage yet again

This wasteful cycle had to go.
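To make the cost concrete, here is a minimal Python sketch of the legacy path for a single record. It uses the standard `json` module as a stand-in for the connectors' real serializers, and `legacy_pipeline` is a hypothetical name chosen for illustration, not Airbyte code.

```python
import json


def legacy_pipeline(record: dict) -> tuple[dict, dict]:
    """Pass one record through source -> orchestrator -> destination,
    counting every JSON encode and decode along the way."""
    counts = {"serialize": 0, "parse": 0}

    def to_json(obj: dict) -> str:
        counts["serialize"] += 1
        return json.dumps(obj)

    def from_json(text: str) -> dict:
        counts["parse"] += 1
        return json.loads(text)

    wire_1 = to_json(record)             # source writes JSON to the pipe
    wire_2 = to_json(from_json(wire_1))  # orchestrator parses, then re-serializes
    delivered = from_json(wire_2)        # destination parses yet again
    return delivered, counts


delivered, counts = legacy_pipeline({"id": 1, "name": "Ada"})
print(counts)
```

Every record pays for two encodes and two parses before the destination can do any useful work with it.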

Experiment 1: The Unified JVM Dead End

Our first radical idea: collapse everything into a single JVM process. No more containers, no more serialization between components. On paper, it looked perfect.

Reality hit hard. This approach meant rebuilding Airbyte from scratch, changing how we publish and maintain connectors. What about our non-JVM connectors? After prototyping, we abandoned this path. The cure was worse than the disease.

Experiment 2: File-Based Transfer

Next, we tried replacing pipes with files. Sources would dump records to files, and components would pass file pointers instead of data.

Performance was excellent, especially for databases that could generate bulk dumps directly uploadable to S3. But flexibility suffered: not all destinations were object storage, and not all sources supported bulk dumps. We needed something more universal.

Experiment 3: Separating Control and Data

A key insight emerged: why should data flow through the orchestrator at all? We split our architecture into two channels:

- Data channel: Direct source to destination flow

- Control channel: Logs, state, and metadata through the orchestrator

Performance improved, but we were still slower than file dumps. The standard I/O pipe remained a bottleneck.

Experiment 4: Unix Domain Sockets, The Breakthrough

Standard I/O gave us one pipe. What if we had multiple, faster pipes?

Enter Unix domain sockets. They offered two critical advantages:

- Higher throughput than standard I/O

- Multiple parallel connections

We mounted an in-memory volume, created multiple socket files, and let sources stream data in parallel. Destinations could read from multiple sockets simultaneously. Performance jumped immediately, and now we could scale by adding more sockets.
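The idea can be sketched with Python's standard `socket` module. This is an illustration under assumptions, not the Airbyte implementation: it uses a temporary directory instead of a tmpfs mount, newline-delimited byte records, one thread per socket, and round-robin assignment of records to sockets. All function names are hypothetical.

```python
import os
import socket
import tempfile
import threading


def receive_all(server: socket.socket, results: list, index: int) -> None:
    """Accept one connection and count the newline-delimited records on it."""
    conn, _ = server.accept()
    with conn:
        data = b""
        while chunk := conn.recv(65536):
            data += chunk
    results[index] = data.count(b"\n")


def send_records(path: str, records: list) -> None:
    """Connect to one socket file and stream this socket's share of records."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as client:
        client.connect(path)
        for record in records:
            client.sendall(record + b"\n")


def parallel_transfer(records: list, socket_count: int) -> int:
    """Stream records over several Unix domain sockets; return how many arrived."""
    with tempfile.TemporaryDirectory() as directory:
        paths = [os.path.join(directory, f"sock_{n}.sock") for n in range(socket_count)]
        servers = []
        for path in paths:
            server = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
            server.bind(path)   # creates the socket file
            server.listen(1)
            servers.append(server)
        results = [0] * socket_count
        readers = [threading.Thread(target=receive_all, args=(s, results, i))
                   for i, s in enumerate(servers)]
        # Round-robin: socket i carries records i, i + socket_count, ...
        writers = [threading.Thread(target=send_records, args=(p, records[i::socket_count]))
                   for i, p in enumerate(paths)]
        for thread in readers + writers:
            thread.start()
        for thread in readers + writers:
            thread.join()
        for server in servers:
            server.close()
        return sum(results)


total = parallel_transfer([f"record-{i}".encode() for i in range(1000)], socket_count=4)
print(total)
```

Adding capacity in this model means raising `socket_count`, which is the scaling knob the paragraph above describes.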

Experiment 5: Beyond JSON

Even with multiple sockets, JSON remained our final bottleneck. We tested alternatives: Smile, Protobuf, and FlatBuffers. We also questioned our data structure. Why send column names with every record when they're static?

The winning combination:

- Protocol Buffers for efficient binary serialization

- Column-value arrays instead of key-value pairs

- Multiple Unix domain sockets for parallel transfer

Finally, we were faster than raw file dumps, and the solution worked for any source or destination.
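The saving from dropping column names can be seen in a small sketch. Note the substitution: it uses Python's standard `struct` module as a stand-in for Protocol Buffers, so the byte layout is an assumption made for illustration and not the Airbyte wire format. The column list and function names are likewise hypothetical.

```python
import json
import struct

# Agreed once per stream instead of being repeated in every record.
COLUMNS = ["employee_id", "last_name", "hire_year"]


def encode_key_value(row: dict) -> bytes:
    """Legacy-style encoding: every record repeats every column name."""
    return json.dumps(row).encode("utf-8")


def encode_column_values(row: dict) -> bytes:
    """Positional binary encoding: values only, in the agreed column order."""
    name = row["last_name"].encode("utf-8")
    # Little-endian: int32 id, uint16 year, uint16 name length, then the name bytes.
    return struct.pack("<iHH", row["employee_id"], row["hire_year"], len(name)) + name


def decode_column_values(payload: bytes) -> dict:
    """Rebuild the row by pairing the values with the shared column list."""
    employee_id, hire_year, name_length = struct.unpack_from("<iHH", payload)
    name = payload[8:8 + name_length].decode("utf-8")
    return dict(zip(COLUMNS, [employee_id, name, hire_year]))


row = {"employee_id": 42, "last_name": "Lovelace", "hire_year": 2019}
key_value = encode_key_value(row)
column_values = encode_column_values(row)
print(len(key_value), len(column_values))
```

The positional form carries only an 8-byte fixed header plus the name bytes, while the JSON form spends most of its length on keys and punctuation that never change between records.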

The New Architecture

Our redesigned system replaces the traditional orchestrator with a lightweight Bookkeeper and introduces direct data transfer between source and destination.

Core Design Decisions

Direct Source-Destination Communication: Records and states flow directly from source to destination over multiple Unix domain sockets, bypassing the central orchestrator entirely.

The Bookkeeper: This lightweight component replaced the orchestrator, handling only control messages, state persistence, and logging through standard I/O. While records bypass the Bookkeeper, states are sent to both the Bookkeeper (for logging) and the destination (for processing).
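The routing rule described here can be summarized in a few lines. This is a sketch only: `MessageType` and `route` are hypothetical names, and the string labels stand in for the real socket and standard I/O channels.

```python
from enum import Enum


class MessageType(Enum):
    RECORD = "record"
    STATE = "state"
    LOG = "log"


def route(message_type: MessageType) -> set[str]:
    """Return the components that should receive a message of this type."""
    if message_type is MessageType.RECORD:
        return {"destination"}                # records bypass the Bookkeeper
    if message_type is MessageType.STATE:
        return {"destination", "bookkeeper"}  # states go to both
    return {"bookkeeper"}                     # logs and other control messages


for message_type in MessageType:
    print(message_type.value, sorted(route(message_type)))
```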

Parallel Everything: Speed gains come from reading database tables through multiple concurrent queries and leveraging multiple sockets for concurrent data streams.

Binary Serialization: Protocol Buffers replaced JSON, providing a binary format with strong type safety and schema enforcement at compile time.

Implementation Details

ArchitectureDecider: Determines whether a sync runs in socket mode or legacy STDIO mode based on a hierarchical decision tree. It considers file transfer operations, feature flag overrides, IPC options validation, version compatibility, and transport/serialization negotiation.

Socket Configuration:

- Socket count is determined by feature flag override or defaults to min(source_cpu_limit, destination_cpu_limit) * 2

- Socket paths follow the pattern /var/run/sockets/airbyte_socket_{n}.sock
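As a sketch, the two rules above translate directly into code. The function names are hypothetical, and starting `{n}` at zero is an assumption, since the numbering base is not stated here.

```python
from typing import Optional

SOCKET_PATH_PATTERN = "/var/run/sockets/airbyte_socket_{n}.sock"


def socket_count(source_cpu_limit: int, destination_cpu_limit: int,
                 override: Optional[int] = None) -> int:
    """A feature flag override wins; otherwise use twice the smaller CPU limit."""
    if override is not None:
        return override
    return min(source_cpu_limit, destination_cpu_limit) * 2


def socket_paths(count: int) -> list[str]:
    """Expand the path pattern for each socket (zero-based numbering assumed)."""
    return [SOCKET_PATH_PATTERN.format(n=n) for n in range(count)]


print(socket_count(4, 4))
print(socket_paths(2))
```

With 4 CPUs on each side the default works out to 8 sockets, and a 2 CPU source paired with a 4 CPU destination gets 4.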

Volume Management: Socket mode uses memory-based volumes (tmpfs) for Unix sockets, offering high performance, no disk access overhead, automatic cleanup, and enhanced security.

Destination Components:

- ClientSocket manages Unix domain socket connections

- SocketInputFlow orchestrates data flow using Kotlin cold flows for on-demand processing, natural backpressure, and parallel execution

- ProtobufDataChannelReader parses protobuf messages from socket streams

- PipelineEventBookkeepingRouter manages state coordination and checkpointing

- StatsEmitter provides real-time progress reporting
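The on-demand behavior of a cold flow can be illustrated with Python generators, which are also pull-based. This is an analogy rather than the Kotlin Flow API, and the function names are hypothetical: nothing is read from the upstream until the consumer asks for the next item, so a slow consumer naturally slows the reader.

```python
from typing import Iterable, Iterator


def read_socket(records: Iterable[bytes], log: list) -> Iterator[bytes]:
    """Cold source: an item is read only when a consumer requests it."""
    for record in records:
        log.append(record)  # records what has actually been read so far
        yield record


def parse(stream: Iterator[bytes]) -> Iterator[str]:
    """Downstream stage: decodes each item as it is pulled through."""
    for raw in stream:
        yield raw.decode("utf-8")


read_log: list = []
pipeline = parse(read_socket([b"a", b"b", b"c"], read_log))
print(read_log)        # nothing has been read yet
first = next(pipeline)
print(first, read_log)  # exactly one item has been read
```

Because the consumer drives the reads, there is no unbounded buffer between stages to overflow.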

Source Optimization: The Key to Speed

The ability to increase throughput from databases comes from reading multiple tables and multiple parts of a table simultaneously. We ensure three critical capabilities:

Efficient Querying

Queries must avoid spikes in database server memory or CPU. We achieve this by forming SQL SELECT queries that are low overhead and high throughput.

For example, with a SQL Server table that has a clustered index:

    CREATE TABLE Employees (
        EmployeeID INT NOT NULL,
        SSN CHAR(11),
        -- Explicit lengths: a bare NVARCHAR column holds a single character in SQL Server
        LastName NVARCHAR(50),
        FirstName NVARCHAR(50),
        HireDate DATE NOT NULL,
        CONSTRAINT PK_Employees PRIMARY KEY NONCLUSTERED (EmployeeID)
    );

    CREATE CLUSTERED INDEX CIX_Employees_HireDate
        ON Employees (HireDate);

We query by the clustered index column for minimal overhead:

    SELECT EmployeeID, SSN, LastName, FirstName, HireDate
    FROM Employees
    WHERE HireDate >= ? AND HireDate < ?
    ORDER BY HireDate;

Smart Partitioning

At the beginning of a read job, each table is divided into semi-equal parts that saturate all available resources: memory, CPUs, database connections, and data channels.

For databases that support table sampling, such as PostgreSQL with its TABLESAMPLE clause, we use a statistically distributed sample to calculate the average row size and determine partition boundaries based on a target checkpoint frequency (default 5 minutes). For databases like MySQL that lack a sampling clause, we use a mathematical approach, dividing primary key ranges into equally sized partitions.
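Both strategies can be sketched in a few lines. These helpers are hypothetical and rest on assumptions: integer primary keys, half-open ranges, and an expected throughput figure (`bytes_per_second`) that the sampling approach needs in order to turn an average row size and a checkpoint interval into a row count.

```python
def split_key_range(min_key: int, max_key: int, partitions: int) -> list[tuple[int, int]]:
    """Split [min_key, max_key] into half-open [lower, upper) ranges of near-equal width."""
    total = max_key - min_key + 1
    bounds = [min_key + (total * i) // partitions for i in range(partitions + 1)]
    # Drop empty ranges, which appear when there are more partitions than keys.
    return [(bounds[i], bounds[i + 1]) for i in range(partitions) if bounds[i] < bounds[i + 1]]


def rows_per_partition(sampled_row_bytes: list[int], bytes_per_second: float,
                       checkpoint_seconds: int = 300) -> int:
    """Estimate how many rows fit in one checkpoint interval at the given throughput."""
    average_row_bytes = sum(sampled_row_bytes) / len(sampled_row_bytes)
    return max(1, int(bytes_per_second * checkpoint_seconds / average_row_bytes))


print(split_key_range(1, 100, 4))
print(rows_per_partition([100, 300], bytes_per_second=1_000_000))
```

Each `(lower, upper)` pair maps onto the `>= ?` and `< ?` bounds of a range query, so partitions can be read by independent concurrent queries.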

Preventing Backpressure

Multiple channels over high-throughput Unix domain sockets allow sources to send data at virtually unlimited rates. Protobuf encoding reduces bytes sent over the wire and speeds up conversion of database rows to Airbyte records.

Together, these principles remove the roadblocks that prevented higher read throughput. The system scales predictably: more CPUs, more memory, more connections equal higher speed.

Performance Gains Breakdown

The 4-10x improvement comes from multiple optimizations working together:

Parallel Processing (biggest win): Reading single tables with multiple concurrent queries, matching socket count to CPU limits

OS-Level IPC: Unix domain sockets provide gigabytes per second throughput with minimal latency, as data flows directly through the kernel

Binary Serialization: Protobuf eliminates repeated field names and reduces serialization overhead

Memory-Based Volumes: Using tmpfs for socket volumes eliminates disk I/O

Flow Control: Kotlin cold flows prevent destination overwhelm through natural backpressure

Optimized Record Generation: Two-step process reads to native JVM types first, then converts to desired format upon emission, avoiding redundant JSON conversions

Solving the Hard Problems

Type Safety

Moving from JSON's weak typing to Protobuf's strong type system eliminated runtime type mismatches and the dreaded UnknownType. Type mismatches are now caught at compile time.

Socket Liveness

Unix domain sockets don't expose an explicit connection-health status. We solved this by sending periodic no-op probe packets, so failures surface promptly and error handling stays predictable.
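A probe of this kind might look like the sketch below. The one-byte `PROBE` frame and the `is_peer_alive` helper are assumptions made for illustration; a real implementation would run the check on a timer, and the reader would recognize and discard probe frames.

```python
import socket

PROBE = b"\x00"  # hypothetical one-byte no-op frame that the reader discards


def is_peer_alive(conn: socket.socket) -> bool:
    """Send a no-op probe; a failed write means the peer has gone away."""
    try:
        conn.sendall(PROBE)
        return True
    except OSError:  # e.g. BrokenPipeError once the other end has closed
        return False


writer, reader = socket.socketpair()  # a connected Unix domain socket pair on POSIX
alive_before = is_peer_alive(writer)
reader.close()                        # simulate the destination disappearing
alive_after = is_peer_alive(writer)
writer.close()
print(alive_before, alive_after)
```

Turning "is the other side still there?" into an ordinary write error lets the caller handle a dead peer on the same code path as any other I/O failure.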

State Coordination

With records arriving out of order over multiple sockets, we implemented:

- Partition IDs: Linking records to their state checkpoints

- Checkpoint indexing: Ensuring ordered state processing with incrementing IDs

- Dual state emission: States go to both Bookkeeper (for logging) and destination (for processing)
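One way these pieces could fit together on the destination side is sketched below. It is an illustration under assumptions, not the actual implementation: `CheckpointTracker` is a hypothetical class, and it assumes each state message carries its checkpoint index, its partition ID, and the number of records expected for that partition.

```python
from collections import defaultdict


class CheckpointTracker:
    """Commit states in checkpoint-index order once their records have arrived."""

    def __init__(self) -> None:
        self.received = defaultdict(int)  # partition_id -> records seen so far
        self.pending = {}                 # checkpoint_index -> (partition_id, expected_count)
        self.next_index = 1               # lowest checkpoint not yet committed
        self.committed = []               # checkpoint indexes, in commit order

    def on_record(self, partition_id: str) -> None:
        self.received[partition_id] += 1
        self._drain()

    def on_state(self, checkpoint_index: int, partition_id: str, expected_count: int) -> None:
        self.pending[checkpoint_index] = (partition_id, expected_count)
        self._drain()

    def _drain(self) -> None:
        # Commit strictly in index order; stop at the first incomplete checkpoint.
        while self.next_index in self.pending:
            partition_id, expected = self.pending[self.next_index]
            if self.received[partition_id] < expected:
                return
            del self.pending[self.next_index]
            self.committed.append(self.next_index)
            self.next_index += 1


tracker = CheckpointTracker()
tracker.on_state(2, "p2", expected_count=1)  # state 2 arrives before state 1
tracker.on_record("p2")
print(tracker.committed)                     # still empty: checkpoint 1 is outstanding
tracker.on_record("p1")
tracker.on_state(1, "p1", expected_count=1)
print(tracker.committed)                     # both commit, in index order
```

The partition ID ties records to the state that covers them, while the incrementing index keeps commits ordered even when sockets deliver messages out of order.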

CDC Performance

CDC records from Debezium arrive as JSON, requiring conversion to Protobuf. While this creates minor overhead for incremental updates, the impact is minimal compared to initial snapshot gains. Future work aims to support direct Protobuf encoding from Debezium.

Real World Performance

To validate our design, we ran large-scale syncs from MySQL to S3 with the same 170.68 GB dataset:

Performance Comparison: Legacy vs Socket Architecture

| Category | Legacy (C4 Nodes) | Socket (C4 Nodes) | Legacy (N2D Nodes) | Socket (N2D Nodes) |
|---|---|---|---|---|
| Source Container | 4 CPU / 4 GB | 4 CPU / 4 GB | 2 CPU / 2 GB | 2 CPU / 2 GB |
| Destination Container | 4 CPU / 4 GB | 4 CPU / 4 GB | 2 CPU / 2 GB | 2 CPU / 2 GB |
| Orchestrator | 4 CPU / 4 GB | 1 CPU / 1 GB | 2 CPU / 2 GB | 1 CPU / 1 GB |
| Total Resources | 12 CPU / 12 GB | 9 CPU / 9 GB | 6 CPU / 6 GB | 5 CPU / 5 GB |
| Data Volume | 170.68 GB | 170.68 GB | 170.68 GB | 170.68 GB |
| Time Taken | 1h 35m | 22m | 5h 21m | 1h |
| Speedup | baseline | ~4x faster | baseline | ~5x faster |
| Resource Savings | baseline | 25% fewer | baseline | ~17% fewer |
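The Speedup and Resource Savings rows follow from the table's own figures, as this short check shows. The helper names are illustrative.

```python
def minutes(hours: int, mins: int) -> int:
    """Convert an hours-and-minutes duration to total minutes."""
    return hours * 60 + mins


def speedup(legacy_minutes: int, socket_minutes: int) -> float:
    return legacy_minutes / socket_minutes


def resource_savings(legacy_cpus: int, socket_cpus: int) -> float:
    return (legacy_cpus - socket_cpus) / legacy_cpus


c4_speedup = speedup(minutes(1, 35), minutes(0, 22))   # 95 min vs 22 min
n2d_speedup = speedup(minutes(5, 21), minutes(1, 0))   # 321 min vs 60 min
c4_savings = resource_savings(12, 9)
n2d_savings = resource_savings(6, 5)

print(f"C4:  {c4_speedup:.2f}x faster, {c4_savings:.0%} fewer resources")
print(f"N2D: {n2d_speedup:.2f}x faster, {n2d_savings:.0%} fewer resources")
```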

Infrastructure Insights

Testing in production revealed surprising variations. Different Kubernetes node CPU types showed dramatic performance differences:

- C4 CPUs: Best performance

- N2D types: Good secondary option

Hardware efficiency mattered more than expected. These findings now guide our infrastructure decisions.

What's Next

Expanding Connector Support

The immediate goal is onboarding more connectors onto this new architecture. We're moving all certified sources to the new speed architecture, including Postgres, SQL Server, Snowflake, Oracle Enterprise and more.

The Aggregation of Marginal Gains

In 2003, when Great Britain's professional cycling body hired Dave Brailsford as performance director, he introduced the aggregation of marginal gains strategy. The idea was simple yet powerful: improve every component by just 1%, and together, the cumulative effect would be transformative. The results were historic. Between 2007 and 2017, British cyclists won 178 world championships, 66 Olympic or Paralympic gold medals, and five Tour de France victories.

This philosophy inspired James Clear's Atomic Habits and applies equally to software engineering. We're adopting the same mindset: continuously identifying small improvements that together unlock significant efficiency and speed gains.

Beyond connectors, we're investing in a better platform for building on this architecture. We already have a backlog of incremental improvements that, like those 1% gains in cycling, will push Airbyte's performance further than ever.

The Bottom Line

Achieving 4-10x performance improvements required rebuilding core platform components from scratch. The engineering effort validated our belief that accepted performance limits in data ingestion can be shattered through systematic profiling and bold architectural changes.

These improvements change the economics of data movement: faster syncs mean lower compute costs, quicker insights, and new use cases. As we expand SPEED optimizations to more connectors, we're committed to pushing the boundaries of what's possible in data integration performance.

Want to see these improvements in action? Try Airbyte and experience the difference SPEED makes. All features are available in the current Airbyte version 1.8.3 - no new install required!