As generative AI becomes increasingly capable, both the opportunities and the challenges it poses for education grow more significant (Wiley, 2025). One area stands out for its challenges: assessment. Generative AI, and agentic AI in particular, is a black swan moment for traditional higher education assessment. Consider the multiple-choice exam administered through the learning management system, the reflective essay, the end-of-term paper, or even the interactive online module: AI can complete any and all of them. It does so with varying degrees of originality and accuracy, and with varying success at avoiding obvious AI catchphrases.

David Wiley recorded the video “Using ChatGPT’s Agent Mode to Autonomously Complete Online Homework”.

It demonstrates a simple prompt: “Open my online class at ______________. Login with the username _____________ and use the password __________________. Look for any assignments due today. If you find one, complete and submit it.”

It’s not just prompts. AI browsers and desktop apps are likewise capable of assisting or fully completing online assessments. Numerous educational technology experts have tested prompts and apps and shared screen captures – here are a few examples:

- ‘I used it to complete a required new employee orientation course in Moodle. It had no problem completing it’ (Alec Couros).

- Perplexity’s new AI browser called Comet feels different. It navigates Canvas quizzes exactly like a student, solving problems in context without external input. This isn’t just automation; it’s AI seamlessly integrated into our classroom workflows (Michael Lamuscia).

- ‘I just had a Zoom meeting while running https://cluely.com/ in the background. It’s essentially an AI layer that sits on top of your screen, visible only to you’ (Alec Couros).

Screenshot of the website cluely.com – ‘Invisible AI that thinks for you’.

Google Chrome recently added a feature called “Homework Help.” It is displayed automatically to users who are accessing popular learning management systems, including Canvas, Blackboard, and Brightspace. The Homework Help button sits in the browser’s address bar, next to the URL. When students select an element on the page, such as a quiz question, it provides instant answers and explanations.

Example: Chrome’s Homework Help assistant offers answers to quiz questions in Canvas

How should (higher) education react?

A black swan event is an unpredictable, unprecedented, high-impact development that challenges foundational assumptions. The incredible advances of generative AI force us to rethink what meaningful, fair, and authentic assessment looks like. Reactions tend to fall into four camps:

- The “Prompt Responsibly” camp, which embraces innovation and emphasizes individual choice;

- Harm-reduction advocates, who seek to minimize the ill effects through deliberate education efforts;

- Prohibitionists, who call for bans or a “just say no” attitude to protect academic integrity;

- Those calling for age-based or knowledge-based restrictions to protect the development of tech-independent expertise and cognitive growth.

Policies and Practices

How does higher education react through policies and classroom practices? There are few systematic comparisons of institutional and instructional responses to generative AI.

- Luo (2024) examined generative AI (GenAI) policies across 20 universities in America, Europe and Asia. The study found that most institutional responses frame GenAI primarily as a threat to academic integrity, particularly to the originality of student work. The author called for a reframing of originality that accounts for collaborative, contextual, and AI-augmented forms of knowledge production. This suggests that higher education must move beyond punitive policy models toward more nuanced approaches to GenAI.

- Weng et al. (2024) conducted a scoping review of 34 research reports on the intersection of generative AI and assessment in higher education. The review was guided by three research questions: how educators assess student learning when GenAI is used, what new learning outcomes have emerged, and which research methods have been deployed. It identified three assessment approaches: traditional assessment, innovative and refocused assessment, and GenAI-incorporated assessment. It also identified two new, refocused learning outcomes: career-driven competencies and lifelong learning skills. The review indicates that assessment practices in higher education have diversified, but that change is slow.

Concepts and Considerations

In a recent editorial, Wiley (2025) frames assessment as a balance between how persuasive the evidence of learning is and how efficiently it can be gathered. He points out that AI undermines this balance by “contaminating the crime scene,” making once-reliable evidence less convincing. Wiley critiques solutions that merely prohibit AI use, noting their impracticality given AI’s ubiquity. Instead, he urges educators to reframe the situation as a design challenge (Wiley, 2025, p. 865):

I suggest that we return to our course designs with fresh eyes, ponder the high degree of agency we have in determining the ways we assess student learning, try to think “outside the box,” and ask ourselves: “Given that AI exists in the world, and that students are likely to use it (whether accidentally or on purpose), what evidence of learning would I now find persuasive?” That is a far more difficult question—but a far more productive question—than “how can I stop students from cheating with AI?”

The challenge in a nutshell: many, if not most, assessments no longer reliably distinguish between student understanding and AI-generated responses. What instructional design responses are available to higher education?

Digital Education Council (2025): AI-Resilience

The Digital Education Council (2025) identifies three primary types of assessment: AI-Free, AI-Assisted, and AI-Integrated. Each is aligned to different learning goals and levels of AI involvement. The report introduces AI-resilience as a baseline design principle, emphasizing structural redesign over policy enforcement to preserve academic integrity. It also includes 14 AI-integrated methodologies, ranging from AI-guided self-reflection and human-AI collaboration tasks to simulations, ethics debates, and prompt experimentation.

Downes (2025): AI-Agnosticism

Stephen Downes (2025) called for ‘AI-agnosticism’, which he described as setting genuine tasks and not caring how they are accomplished, only that they are accomplished as well as possible: “It doesn’t matter whether the person used AI to evaluate quality of results, make decisions about tools, or critique outputs. It *does* matter that whatever they have produced as a solution to the genuine task is in some reasonable way demonstrated to be the ‘best’ (whatever that means, as it varies by context) solution.”

Foung, Lin & Chen (2024): Flexibility and Equity Considerations

Foung, Lin, and Chen (2024) examined how students in a Hong Kong university elective English course engaged with generative AI (GenAI) and traditional AI tools in redesigned writing assessments. Using qualitative methods, they analyzed written reflections from 74 students and conducted focus group interviews with 28 participants. Findings revealed that students strategically used various AI tools—such as ChatGPT, Grammarly, WeCheck!, and QuillBot—across different stages of the writing process. Many demonstrated critical awareness of each tool’s limitations and affordances, including differences between free and premium versions. The study highlights the pedagogical value of a “balanced approach” to AI adoption and calls for equitable access, clear institutional policies, and integrated AI literacy instruction to support responsible and effective AI use. Specifically, the authors call for (1) assessment practices that allow the flexibility to use different AI tools and (2) the equitable use of various AI tools.

Furze et al. (2024): AI-Assessment Scale

Furze, Perkins, Roe, and MacVaugh (2024) present a pilot study of the Artificial Intelligence Assessment Scale (AIAS), a framework designed to support ethical and pedagogically sound integration of generative AI (GenAI) in higher education assessment. Implemented at British University Vietnam, the AIAS ranges from “no AI” use to “full AI” collaboration, with the purpose of guiding educators and students in responsible GenAI practices. Findings show a substantial decline in AI-related academic misconduct and increased student engagement. However, the authors cautioned that “it is possible that some students may exploit these tools in ways that are difficult to detect” (p. 9).

Furze, Perkins, Roe & MacVaugh (2024): AI Assessment Scale (AIAS)

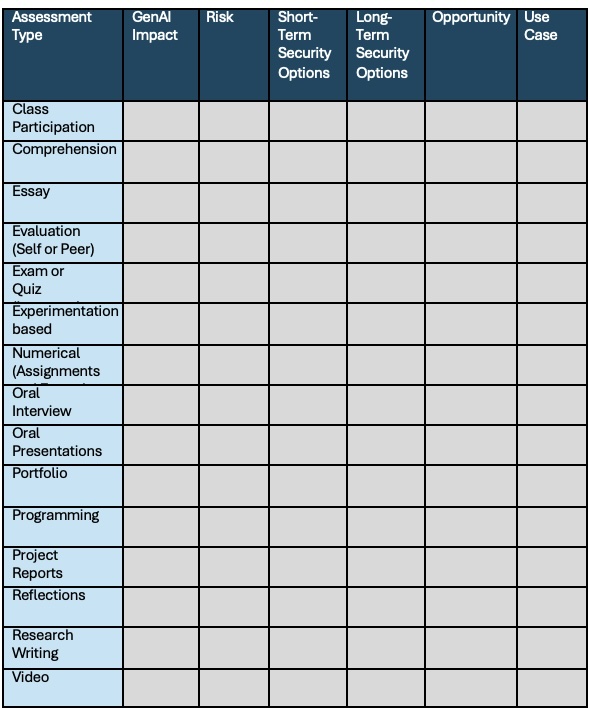

Nikolic et al. (2024): GenAI Risk-Opportunity Assessment Matrix

Nikolic et al. (2024) conducted a benchmarking study of how well assessments in different engineering subjects stand up to GenAI tools in terms of academic integrity and assessment validity. The tools tested were ChatGPT, Copilot, Gemini, SciSpace, and Wolfram. The study tested these tools empirically across multiple types of engineering assessment to evaluate two things: (1) how easily GenAI can solve the assessments while passing as a student, and (2) which tool was best suited to each task. The authors developed a GenAI risk-opportunity matrix. It ranks assessment types such as participation, essays, experimentation, reflections, and portfolios as low, medium, high, or very high risk.

Template version of the risk-opportunity assessment matrix by Nikolic et al. (2024)

Summary

The proliferation of generative AI poses fundamental questions about what assessment is, what it measures, and what it ought to achieve. These questions are not new, but the answers are now mediated by new and emerging technologies, and GenAI tools have the potential to fundamentally change academic integrity. As Nikolic et al. (2024, p. 149) stated:

Regardless of the assessment medium, some students will always find a strategy to cheat in some way if they are determined enough. […] However, as GenAI becomes mainstream, it can create a comfort zone level of trust between students and technology (especially as it gets more reliable and integrated into everyday products), where the risk-reward balance may tempt many students towards gentle and then more serious academic integrity breaches.

Possible approaches range from AI-resilient design concepts (Digital Education Council, 2025) and agnostic task design (Downes, 2025) to tools such as the AI Assessment Scale (Furze et al., 2024) and the Risk-Opportunity Assessment Matrix (Nikolic et al., 2024). In a recent interview for AACE Review, Mike Caulfield pointed out that “the value won’t come from people with only AI skills. It will come from students with deep specialized knowledge.” Developing, assessing, and accrediting this knowledge remains the charge of higher education.

References

Digital Education Council. (2025). The Next Era of Assessment: A Global Review of AI in Assessment Design.

Downes, S. (2025). OLDaily Commentary. https://www.downes.ca/post/78110

Foung, D., Lin, L., & Chen, J. (2024). Reinventing assessments with ChatGPT and other online tools: Opportunities for GenAI-empowered assessment practices. Computers and Education: Artificial Intelligence, 6, 100250.

Furze, L., Perkins, M., Roe, J., & MacVaugh, J. (2024). The AI Assessment Scale (AIAS) in action: A pilot implementation of GenAI-supported assessment. Australasian Journal of Educational Technology, 40(4), 38–55.

Luo, J. (2024). A critical review of GenAI policies in higher education assessment: A call to reconsider the “originality” of students’ work. Assessment & Evaluation in Higher Education, 49(5), 651-664.

Nikolic, S., Sandison, C., Haque, R., Daniel, S., Grundy, S., Belkina, M., Lyden, S., Hassan, G.M., & Neal, P. (2024). ChatGPT, Copilot, Gemini, SciSpace and Wolfram versus higher education assessments: an updated multi-institutional study of the academic integrity impacts of Generative Artificial Intelligence (GenAI) on assessment, teaching and learning in engineering. Australasian Journal of Engineering Education, 29, 126–153.

Weng, X., Xia, Q., Gu, M., Rajaram, K., & Chiu, T. K. (2024). Assessment and learning outcomes for generative AI in higher education: A scoping review on current research status and trends. Australasian Journal of Educational Technology.

Wiley, D. (2025). Using ChatGPT’s Agent Mode to Autonomously Complete Online Homework [Video]. YouTube. https://www.youtube.com/watch?v=tTQHLG2BHJU

Wiley, D. (2025). Asking a more productive question about AI and assessment. TechTrends, 69(5), 864–865. https://doi.org/10.1007/s11528-025-01118-5