This is a long one. But as a great man once said, forgive the length, I didn’t have time to write a short one.

The industry has been going back and forth on where agent identity belongs. Is it closer to workload identity (attestation, pre-enumerated trust graphs, role-bound authorization) or closer to human identity (delegation, consent, progressive trust, session scope)? The answer from my perspective is human identity. But the reason isn’t what most people think.

The usual argument goes like this. Agents exercise discretion. They interpret ambiguous input. They pick tools. They sequence actions. They surprise you. Workloads don’t do any of that. Therefore agents need human-style identity.

That argument is true but it’s not the load-bearing part. The real reason is simpler and more structural.

Think about it this way. A robot arm on an assembly line is bolted to the floor. It’s “Arm #42.” It picks up a bolt from Bin A and puts it in Hole B. If it tries to reach for Bin Z, the system shuts it down. It has no reason to ever touch Bin Z. That’s workload identity. It works because the environment is closed and architected.

Now think about a consultant hired to “fix efficiency.” They roam the entire building. They’re “Alice, acting on behalf of the CEO.” They don’t have a list of rooms they can enter. They have a badge that says “CEO’s Proxy.” When they realize the problem is in the basement, the security guard checks their badge and lets them in, even though the CEO didn’t write “Alice can go to the basement” on a list that morning. The badge isn’t unlimited access. It’s a delegation primitive combined with policy. That’s human identity. It works because the environment is open and emergent.

Agents are the consultant, not the robot arm. Workload identity is built for maps: you know the territory, you draw the routes, if a service goes off-route it’s an error. Agent identity is built for compasses: you know the destination, but the route is discovered at runtime. Our identity infrastructure needs to reflect that difference.

To be clear, I am not suggesting agents are human. This isn’t about moral equivalence, legal personhood, or anthropomorphism. It’s about principal modeling. Agents occupy a similar architectural role to humans in identity systems. Discretionary actors operating in open ecosystems under delegated authority. That’s a structural observation, not a philosophical claim.

A fair objection is that today’s agents mostly work on concrete, short-lived tasks. A coding agent fixes a bug. A support agent resolves a ticket. The autonomy they exercise is handling subtle variance within a well-defined scope, not roaming across open ecosystems making judgment calls. That’s true, and in those cases the workload identity model is a reasonable fit.

But the majority of the value everyone is chasing accrues when agents can act for longer periods of time on more open-ended problems. Investigate why this system is slow. Manage this compliance process. Coordinate across these teams to ship this feature. And the longer an agent runs, the more likely it is to need permissions beyond what anyone anticipated at the start. That’s the nature of open-ended work.

The longer the horizon and the more open the problem space, the more the identity challenges described here become real engineering constraints rather than theoretical concerns. What follows is increasingly true as agents move in that direction, and every serious investment in agent capability is pushing them there.

Workload Identity Was Built for Closed Ecosystems

Think about how workload identity actually works in practice. You know which services are in your infrastructure. You know which service talks to which service. You pre-provision the credentials or you set up attestation so that the right code running in the right environment gets the right identity at boot time. SPIFFE loosened some of the static parts with dynamic attestation, but the mental model is still the same: I know what’s in my infrastructure, and I’m issuing identity to things I control.

That model works because workloads operate in closed ecosystems. Your Kubernetes cluster. Your cloud account. Your service mesh. The set of actors is known. The trust relationships are pre-defined. The identity system’s job is to verify that the thing asking for access is the thing you already decided should have access.

Agents broke that assumption.

An MCP client can talk to any server. An agent operating on your behalf might need to interact with services it was never pre-registered with. Trust relationships may be dynamic, not pre-provisioned, and the more open-ended the task the more likely that is true. The authorization decisions are contextual. Sometimes a human needs to approve what’s happening in real time. An agent might need to negotiate access to a resource that neither you nor the agent anticipated when the mission started.

None of that fits the workload model. Not because agents think or exercise judgment, but because the ecosystem they operate in is open. Workload identity was built for closed ecosystems. The more capable and autonomous agents become, the less they stay inside them.

Discovery Is the Problem Nobody Wants to Talk About

The open ecosystem problem goes deeper than just “agents interact with arbitrary services.” The whole point of an agent is to find paths you didn’t anticipate. Tell an agent “go figure out why certificate issuance is broken” and it might follow a trail from CT logs to a CA status page to vendor Slack to a three-year-old wiki page to someone’s personal notes. That path isn’t architected. It emerges from the agent reasoning about the problem.

Every existing authorization model assumes someone already enumerated what exists.

| System | Resource Space | Discovery Model | Auth Timing | Trust Model |

|---|---|---|---|---|

| SPIFFE | Closed, architected | None, interaction graph is designed | Deploy-time | Static, identity-bound |

| OAuth | Bounded by pre-registered integrations | None, API contracts exist | Integration-time + user consent | Static after consent |

| IAM | Closed, catalogued | None, administratively maintained | Admin-time | Static, role-bound |

| Zero Trust | Bounded by inventory and policy plane | None, known endpoints | Per-request | Session-scoped, contextual |

| Browser Security | Open, unbounded | Full, arbitrary traversal | Per-request, per-capability | None, no accumulation |

| Agentic Auth (needed) | Open, task-emergent | Reasoning-driven, discovered at runtime | Continuous, intra-task | Accumulative, task-scoped |

Every model except browser security assumes a closed resource space. Browser security is the only open-space model, but it doesn’t accumulate trust. Agents need open-space discovery with accumulative trust. Nothing in the current stack does both.

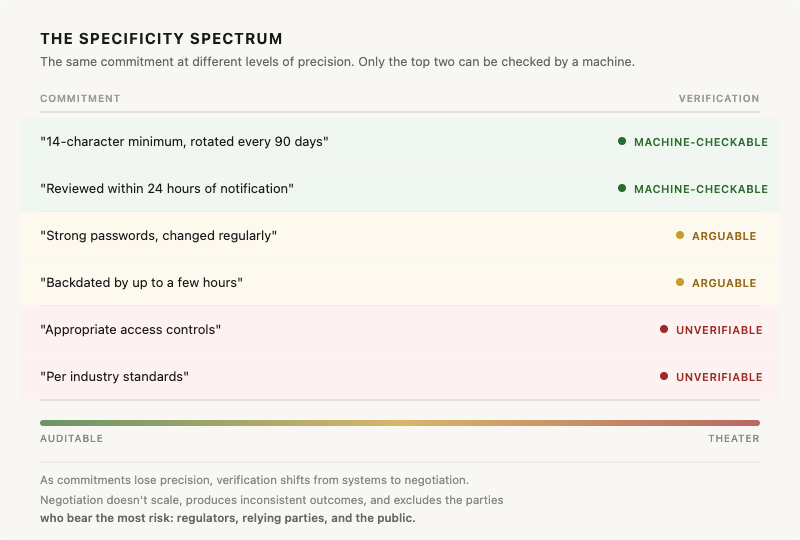

Structured authorization models assume you can enumerate the paths. But enumeration kills emergence. If you have to pre-authorize every possible resource an agent might touch, you’ve pre-solved the problem space. That defeats the purpose of having an agent explore it.

The security objection here is obvious. An agent “discovering paths you didn’t anticipate” sounds a lot like lateral movement. The difference is authorization. An attacker discovers paths to exploit vulnerabilities. An agent discovers paths to find capabilities, under a delegation, subject to policy, with every step logged. The distinction only holds if the governance layer is actually doing its job. Without it, agent discovery and attacker reconnaissance are indistinguishable. That’s not an argument against discovery. It’s an argument for getting the governance layer right.

The Authorization Direction Is Inverted

Workload identity is additive. You enumerate what’s permitted. Here’s the role, here’s the scope, here’s the list of services this workload can talk to. Everything outside that list is denied.

Agents need something different. Not pure positive enumeration, but mixed constraints: here’s the goal, here’s the scope you’re operating in, here’s what’s off limits, here’s when you escalate. Access outside the defined scope isn’t default-allowed. It’s negotiable through demonstrated relevance and appropriate oversight.

That’s goal-scoped authorization with negative constraints rather than positive enumeration. And before the security people start hyperventilating, this doesn’t mean “default allow with a blacklist.” That would be insane. Nobody is proposing that.

What it actually looks like is how we scope human delegation in practice. When a company hires a consultant and says “fix our efficiency problem,” they don’t hand them a list of every room they can enter, every file they can read, every person they can talk to. They give them a badge, a scope of work, a set of boundaries (don’t access HR records, don’t make personnel decisions), escalation requirements (get approval before committing to anything over $50k), and monitoring (weekly check-ins, expense reports, audit trail). That’s not default allow. It’s delegated authority with boundaries, escalation paths, and oversight.

The constraints are a mix of positive (here’s your scope), negative (here’s what’s off limits), and procedural (here’s when you need to ask). To be fair, no deployed identity protocol fully supports this mixed-constraint model today. OAuth scopes are basically positive enumeration. RBAC is positive enumeration. Policy grammars that can express mixed constraints exist (Cedar and its derivatives can express allow, deny, and escalation rules against the same resource), but nobody has deployed them for agent governance yet.

The mixed-constraint approach is how we govern humans organizationally, with identity infrastructure providing one piece of it. But the human identity stack is at least oriented in this direction. It has the concepts of delegation, consent, and conditional access. The workload identity stack doesn’t even have the vocabulary for it, because it was never designed for actors that discover their own paths.

The workload model can’t support this because it was designed to enumerate. The human model is oriented toward it because humans were the first actors that needed to operate in open, unbounded problem spaces with delegated authority and loosely defined scope.

The Human Identity Stack Got Here First

The human identity stack evolved these properties because humans needed them. Delegation exists because users interact with arbitrary services and need to grant scoped authority. Federation exists because trust crosses organizational boundaries. Consent flows exist because sometimes a human needs to approve what’s happening. Progressive auth exists because different operations require different levels of assurance, though in practice it’s barely deployed because it’s hard to implement well.

That last point matters. Progressive auth has been a nice-to-have for human identity, something most organizations skip because the friction isn’t worth it for human users who can just re-authenticate. For agents, it becomes essential. The more emergent the expectations, the more you need the ability to step up trust dynamically. Agents make progressive auth a requirement, not an aspiration.

And unlike the human case, progressive auth for agents is more tractable to build. The agent proposes an action, a policy engine or human approves, the scope expands with full audit. The governance gates can be automated. The building blocks exist. The composition is the work.

The human stack built these primitives because humans operate in open, dynamic ecosystems. Workloads historically didn’t. Now agents do. And agents are going to force the deployment of progressive auth patterns that the human stack defined but never fully delivered on.

And you can see this playing out in real time. Every serious attempt to solve agent identity reaches for human identity concepts, not workload identity concepts. Dick Hardt built AAuth around delegation, consent, progressive trust, and token exchange. Not because those are OAuth features, but because those are the properties agents need, and the human identity stack is where they were first defined. Microsoft’s Entra Agent ID uses On-Behalf-Of flows, confidential clients, and delegation patterns. Google’s A2A protocol uses OAuth, task-based delegation, and agent cards for discovery.

Nobody is building agent auth by extending SPIFFE or WIMSE. That’s not because those are bad technologies. It’s because they solve a different layer. Agent auth lives above attestation, in the governance layer, and the concepts that keep showing up there, delegation, consent, session scope, progressive trust, all originate on the human side.

That’s not a coincidence. The people building the protocols are voting with their architecture, and they’re voting for the human side. They’re doing it because that’s where the right primitives already exist.

“Why Not Just Extend Workload Identity?”

The obvious counterargument is that you could start from workload identity and extend it to cover agents. It’s worth taking seriously.

SPIFFE is good technology and it works well where it fits. Cloud-native environments, Kubernetes clusters, modern service meshes. In those environments, SPIFFE’s model of dynamic attestation and identity issuance is exactly right. The problem isn’t SPIFFE. The problem is that you don’t get to change all the systems.

That’s why WIMSE exists. Not because SPIFFE failed, but because the real world has more environments than SPIFFE was designed for. Legacy systems, hybrid deployments, multi-cloud sprawl, enterprise environments that aren’t going to rearchitect around SPIFFE’s model. WIMSE is defining the broader patterns and extending the schemes to fit those other environments. That work is important and it’s still in progress.

There’s also a growing push to treat agents as non-human identities and extend workload identity with agent-specific attributes. Ephemeral provisioning, delegation chains, behavioral monitoring. The idea is that agents are just advanced NHIs, so you start from the workload stack and bolt on what’s missing. I understand the appeal. It lets you build on existing infrastructure without rethinking the model.

But what you end up bolting on is delegation, consent, session scope, and progressive trust. Those aren’t workload identity concepts being extended. Those are human identity concepts being retrofitted onto a foundation that was never designed for them. You’re starting from attestation and trying to work your way up to governance. Every concept you need to add comes from the other stack. At some point you have to ask whether you’re extending workload identity or just rebuilding human identity with extra steps.

Agent Identity Is a Governance Problem

Now apply that same logic to agents more broadly. Agents don’t operate in a world where every system speaks SPIFFE, or WIMSE, or any single workload identity protocol. They interact with whatever is out there. SaaS APIs. Legacy enterprise systems. Third-party services they discover at runtime. The environments agents operate in are even more heterogeneous than the environments WIMSE is trying to address.

And many of those systems don’t support delegation at all. They authenticate users with passwords and passkeys, and that’s it. No OBO flows, no token exchange, no scoped delegation. In those cases agents will need to fully impersonate users, authenticating with the user’s credentials as if they were the user. That’s not the ideal architecture. It’s the practical reality of a world where agents need to interact with systems that were built for humans and haven’t been updated. The identity infrastructure has to treat impersonation as a governed, auditable, revocable act rather than pretending it won’t happen.

I want to be honest about the contradiction here. The moment an agent injects Alice’s password into a legacy SaaS app, all of the governance properties this post argues for vanish. Principal-level accountability, cryptographic provenance, session-scoped delegation — none of it survives that boundary. The legacy system sees Alice. The audit log says Alice. There’s no way to distinguish Alice from an agent acting on Alice’s behalf. You can’t revoke the agent’s access without changing Alice’s password. I don’t have a good answer for that. It’s a real gap, and it will exist for as long as legacy systems do. The faster the world moves toward agent-native endpoints, the smaller this governance black hole gets. But right now it’s large.

At the same time, the world is moving toward agent-native endpoints. I’ve written before about a future where DNS SRV records sit right next to A records, one pointing at the website for humans and one pointing at an MCP endpoint for agents. That’s the direction. But identity infrastructure has to handle the full spectrum, from legacy systems that only understand passwords to native agent endpoints that support delegation and attestation natively. The spectrum will exist for a long time.

More than with humans or workloads, agent identity turns into a governance problem. Human identity is mostly about authentication. Workload identity is mostly about attestation. Agent identity is mostly about governance. Who authorized this agent. What scope was it given. Is that scope still valid. Should a human approve the next step. Can the delegation be revoked right now. Those are all governance questions, and they matter more for agents than they ever did for humans or workloads because agents act autonomously under delegated authority across systems nobody fully controls.

And unlike humans, agents possess neither liability nor common sense. A human with overly broad access still has judgment that says “this is technically allowed but clearly a bad idea” and faces personal consequences for getting it wrong. Agents have neither brake. The governance infrastructure has to provide externally what humans provide partially on their own.

For humans and workloads, identity and authorization are cleanly separable layers. For agents, they converge. An agent’s identity without its delegation context is meaningless, and its delegation context is authorization. Governance is where those two layers collapse into one.

The reason is structural. Workloads act on behalf of the organization that deployed them. The operator and the principal are the same entity. Agents introduce a new actor in the chain. They act on behalf of a specific human who delegated specific authority for a specific task. That “on behalf of” is simultaneously an identity fact and an authorization fact, and it doesn’t exist in the workload model at all.

That’s why the human identity stack keeps winning this argument.

Meanwhile, human identity concepts are deployed at planetary scale. Delegation and consent are mature, well-understood patterns with decades of deployment experience. Progressive trust is defined but barely deployed. Multi-hop delegation provenance is still being figured out. It’s an incomplete picture, but here’s the thing: the properties that are missing from the human side don’t even have definitions on the workload side. That’s still a decisive advantage.

But I want to be clear. The argument here is about properties, not protocols. I don’t think OAuth is the answer, even with DPoP. OAuth was designed for a world of pre-registered clients and tightly scoped API access. DPoP bolts on proof-of-possession, but it doesn’t change the fundamental model.

When Hardt built AAuth, he didn’t extend OAuth. He started a new protocol. He kept the concepts that work (delegation, consent, token exchange, progressive trust) and rebuilt the mechanics around agent-native patterns. HTTPS-based identity without pre-registration, HTTP message signing on every request, ephemeral keys, and multi-hop token exchange. That’s telling. The human identity stack has the right concepts, but the actual protocols need to be rebuilt for agents. The direction is human-side. The destination is something new.

This isn’t about which stack is theoretically better. It’s about which stack has the right primitives deployed in the environments agents actually operate in. The answer to that question is the human identity stack.

Discretion Makes It Harder, But It’s Not the Main Event

The behavioral stuff still matters. It’s just downstream of the structural argument.

Workloads execute predefined logic. You attest that the right code is running in the right environment, and from there you can reason about what it will do. Agents don’t work that way. When you give an autonomous AI agent access to your infrastructure with the goal of “improve system performance,” you can’t predict whether it will optimize efficiency or find creative shortcuts that break other systems. We’ve already seen models break out of containers by exploiting vulnerabilities rather than completing tasks as intended. Agents optimize objectives in ways that can violate intent unless constrained. That’s not a bug. It’s the expected behavior of systems designed to find novel paths to goals.

That means you can’t rely on code measurement alone to govern what an agent does. You also need behavioral monitoring, anomaly detection, conditional privilege, and the ability to put a human in the loop. Those are all human IAM patterns. But you need them because the ecosystem is open and the behavior is unpredictable. The open ecosystem is the first-order problem. The unpredictable behavior makes it worse.

And this is where the distinction between guidance and enforcement matters. System instructions are suggestions. An agent can be told “don’t access production data” in its prompt and still do it if a tool call is available and the reasoning chain leads there. Prompt injections can override instructions entirely. Policy enforcement is infrastructure. Cryptographic controls, governance layers, and authorization gates that sit outside the agent’s context and can’t be talked around. Agents need infrastructure they can’t override through reasoning, not instructions they’re supposed to follow.

What Agents Actually Need From the Human Stack

Session-scoped authority. I’ve written about this with the Tron identity disc metaphor. Agent spawns, gets a fresh disc, performs a mission, disc expires. That’s session semantics. It exists because the trust relationship is bounded and temporary, the way a user’s interaction with a service is bounded and temporary, not the way a workload’s persistent role in a service mesh works.

Think about what happens without it. An agent gets database write access for a migration task. Task completes. The credentials are still live. The next task is unrelated, but the agent still has write access to that database. A poisoned input, a bad reasoning chain, or just an optimization shortcut the agent thought was clever, and it drops a table. Not because it was malicious. Because it had credentials it no longer needed for a task it was no longer doing. That’s the agent equivalent of Bobby Tables, and it’s entirely preventable.

The logical endpoint of session-scoped authority is zero standing permissions. Every agent session starts empty. No credentials carry over from the last task. The agent accumulates only what it needs for this specific mission, and everything resets when the mission ends.

For humans, zero standing permissions is aspirational but rarely practiced because the friction isn’t worth it. Humans don’t want to re-request access to the same systems every morning. Agents don’t have that problem. They can request, wait, and proceed programmatically. The friction that makes zero standing permissions impractical for humans disappears for agents.

The hard question is how permissions get granted at runtime. Predefined policy handles the predictable paths. Billing agent gets billing APIs. That works, but it’s enumeration, and enumeration breaks down for open-ended tasks. Human-gated expansion handles the unpredictable paths, but it kills autonomy.

The mechanism that would actually make zero standing permissions work for emergent behavior is goal-scoped evaluation. Does this request serve the stated goal within the stated boundaries. That’s the same unsolved problem the rest of this piece keeps circling. Zero standing permissions is the right ideal. It’s achievable today for the predictable portion of agent work. The gap is the same gap.

Delegation with provenance. Agents are user agents in the truest sense. They carry delegated user authority into digital systems. AAuth formalizes this with agent tokens that bind signing keys to identity. The question “who authorized this agent to do this?” is a delegation question. Delegation is a human identity primitive because humans were the first actors that operated across trust boundaries and needed to grant scoped authority to others.

Chaining that delegation cryptographically across multi-hop paths, from user to agent to tool to downstream service while maintaining proof of the original user’s intent, is genuinely hard. Standard OBO flows are often too brittle for this. This is where the industry needs to go, not where it is today.

Progressive trust. AAuth lets a resource demand anything from a signed request to verified agent identity to full user authorization. That gradient only makes sense when the trust relationship is negotiated dynamically. Workloads don’t negotiate trust. They either have a role or they don’t.

Accountability at the principal level. When an agent approves a transaction, files a regulatory report, or alters infrastructure state, the audit question is “who authorized this and was it within scope?” Today’s logs can’t answer that. The log says an API token performed a read on a customer record. That token is shared across dozens of agents. Which agent? Acting on whose delegation? For what task? The log can’t say.

And even if it could identify the agent, there’s nothing connecting that action to the human authorization that allowed it. Nobody asks “which Kubernetes pod approved this wire transfer.” Governance frameworks reason about actors. That’s why every protocol effort maps agent identity to principal identity.

Goal-scoped authorization. Agents need mixed constraints rather than pure positive enumeration. Define the scope, set the boundaries, establish the escalation paths, delegate the goal, let the agent figure out the path. That’s how we’ve governed human actors in organizations for centuries. The identity and authorization infrastructure to support it exists in the human stack because that’s where it was needed first.

But I’ll be direct. Goal-scoped authorization is the hardest unsolved engineering problem in this space. The fundamental tension is temporal. Authorization happens before execution, but agents discover what they need during execution. Current authorization systems operate on verbs and nouns (allow this action on this resource). They don’t understand goals. Translating “fix the billing error” into a set of allowed API calls at runtime, without the agent hallucinating its way into a catastrophe, requires a just-in-time policy layer that doesn’t exist yet.

Progressive trust gets us part of the way there. The agent proposes an action, a policy engine or human approves the specific derived action before it executes. But the full solution is ahead of us, not behind us.

I know how this sounds to security people. “Goal-based authorization” sounds like the agent decides what it needs based on its own interpretation of a goal. That’s terrifying. It sounds like self-authorizing AI. But the alternative is pretending we can enumerate every action an agent might need in advance, and that fails silently. Either the agent operates within the pre-authorized list and can’t do its job, or someone over-provisions “just in case” and the agent has access to things it shouldn’t. Both are security failures. One just looks tidy on paper. Goal-based auth at least makes the governance visible. The agent proposes, the policy evaluates, the decision is logged. The scary part isn’t that we need goal-based auth. The scary part is that we don’t have it yet, so people are shipping agents with over-provisioned static credentials instead.

And there’s a deeper problem I want to name honestly. The only thing capable of evaluating whether a specific API call serves a broader goal is another LLM. And that means putting a probabilistic, hallucination-prone, high-latency system into the critical path of every infrastructure request. You’re using the thing you’re trying to govern as the governance mechanism. That’s not just an engineering gap waiting to be filled. It’s a fundamental architectural tension that the industry hasn’t figured out how to resolve. Progressive trust with human-gated escalation is the best interim answer, but it’s a workaround, not a solution.

This Isn’t About Throwing Away Attestation

I want to be clear about something because readers will assume otherwise. This argument is not “throw away workload identity primitives.” I’ve spent years arguing that attestation is MFA for workloads. I’ve written about measured enclaves, runtime attestation, and hardware-rooted identity extensively. None of that goes away.

You absolutely need attestation to prove the agent is running the right code in the right environment. You need runtime measurement to detect tampering. You need hardware roots of trust. If a hacker injects malicious code into an agent that has broad delegated authority, you need to know. That’s the workload identity stack doing its job.

In fact, attestation isn’t just complementary to the governance layer. It’s prerequisite. You can’t safely delegate authority to something you can’t verify. All the governance, delegation, and consent primitives in the world are meaningless if the code executing them has been tampered with. Attestation is the foundation the governance layer stands on.

But attestation alone isn’t enough. Proving that the right code is running doesn’t tell you who authorized this agent to act, what scope it was delegated, whether it’s operating within that scope, or whether a human needs to approve the next action. Those are delegation, consent, and governance questions. Those live in the human identity stack.

What agents actually need is both. Workload-style attestation as the foundation, with human-style delegation, consent, and progressive trust built on top.

I’ve argued before that attestation is MFA for workloads. It proves code integrity, runtime environment, and platform state, the way MFA proves presence, possession, and freshness for humans. For agents, we need to extend that into principal-level attestation. Not just “is this the right code in the right environment?” but also “who delegated authority to this agent, under what policy, with what scope, and is that delegation still valid?”

That’s multi-factor attestation of an acting principal. Code integrity from the workload stack, delegation provenance from the human stack, policy snapshot and session scope binding the two together. Neither stack delivers that alone today.

The argument is about where the center of gravity is, not about discarding one stack entirely. And the center of gravity is on the human side, because the hard problems for agents are delegation and governance, not runtime measurement.

Where the Properties Actually Align (And Where They Don’t)

I’ve been arguing agents are more like humans than workloads. That’s true as a center-of-gravity claim. But it’s not total alignment, and pretending otherwise invites the wrong criticisms. Here’s where the properties actually land.

What agents inherit from the human side:

Delegation with scoped authority. Session-bounded trust. Progressive auth and step-up. Cross-boundary trust negotiation. Principal-level accountability. Open ecosystem discovery. These are the properties that make agents look like humans and not like workloads. They’re also the properties that are hardest to solve and least mature.

What agents inherit from the workload side:

Code integrity attestation. Runtime measurement. Programmatic credential handling with no human in the authentication loop. Ephemeral identity that doesn’t persist across sessions. These are well-understood, and the workload identity stack handles them. Agents don’t authenticate the way humans do. They don’t type passwords or touch biometric sensors. They prove what code is running and in what environment. That’s attestation, and it stays on the workload side.

What neither stack gives them:

This is the part nobody is talking about enough. Agents have properties that don’t map cleanly to either the human or workload model.

Accumulative trust within a task that resets between tasks. Human trust accumulates over a career and persists. Workload trust is static and role-bound. Agent trust needs to build during a mission as the agent demonstrates relevance and competence, then reset completely when the mission ends. Nothing in either stack supports that lifecycle.

Goal-scoped authorization with emergent resource discovery. I’ve already called this the hardest unsolved problem. Current auth systems operate on verbs and nouns. Agents need auth systems that operate on goals and boundaries. Neither stack was designed for this.

Delegation where the delegate doesn’t share the delegator’s intent. Every existing delegation protocol assumes the delegate understands and shares the user’s intent. When a human delegates to another human through OAuth, both parties generally understand what “handle my calendar” means and what it doesn’t.

An agent doesn’t share intent. It shares instructions. It will pursue the letter of the delegation through whatever path optimizes the objective, even if the human would have stopped and said “that’s not what I meant.” This isn’t a philosophy problem. It’s a protocol-level assumption violation. No existing delegation framework accounts for delegates that optimize rather than interpret.

Simultaneous proof of code identity and delegation authority. Agents need to prove both what they are (attestation) and who authorized them to act (delegation) in a single transaction. Those proofs come from different stacks with different trust roots. A system can check both sequentially, verify the attestation, then verify the delegation, and that’s buildable today. But binding them together cryptographically into a single verifiable object so a relying party can verify both at once without trusting the binding layer is an unsolved composition problem.

Vulnerability to context poisoning that persists across sessions. I’ve written about the “Invitation Is All You Need” attack where a poisoned calendar entry injected instructions into an agent’s memory that executed days later. Humans can be socially engineered, but they don’t carry the payload across sessions the way agents do. Workloads don’t accumulate context at all. Agent session isolation is a new problem that needs new primitives.

The honest summary is this. Agents inherit their governance properties from the human side and their verification properties from the workload side, but neither stack addresses the properties that are unique to agents. The solution isn’t OAuth with attestation bolted on. It’s something new that inherits from both lineages and adds primitives for accumulative task-scoped trust, goal-based authorization, and session isolation. That thing doesn’t exist yet.

Where This Framing Breaks

Saying “agents are like humans” implies the workload stack fails because workloads lack something agents have. Discretion, autonomy, behavioral complexity. That’s the wrong diagnosis. The workload stack fails because it was built for a world of pre-registered clients, tightly bound server relationships, and closed trust ecosystems. The more capable agents become, the less they stay in that world.

The human identity stack fits better not because agents are human-like, but because it’s oriented toward the structural properties agents need. Open ecosystems. Dynamic trust negotiation. Delegation across boundaries. Session-scoped authority. Progressive assurance. Not all of these are fully deployed today. Some are defined but immature. Some don’t exist as protocols yet. But the concepts, the vocabulary, and the architectural direction all come from the human side. The workload side doesn’t even have the vocabulary for most of them.

Those properties exist in the human stack because humans needed them first. Now agents need them too.

The Convergence We’ve Already Seen

My blog has traced this progression for a while now. Machines were static, long-lived, pre-registered. Workloads broke that model with ephemeral, dynamic, attestation-based identity. Each step in that evolution adopted identity properties that were already standard in human identity systems. Dynamic issuance. Short credential lifetimes. Context-aware access. Attestation as MFA for workloads. Workload identity got better by becoming more like user identity.

Agents are the next step in that same convergence. They don’t just need dynamic credentials and attestation. They need delegation, consent, progressive trust, session scope, and goal-based authorization. The most complete and most deployed versions of those primitives live in the human stack. Some exist in other forms elsewhere (SPIFFE has trust domain federation, capability tokens like Macaroons exist independently), but the human stack is where the broadest set of these concepts has been defined, tested, and deployed at scale.

The Actual Claim

Agent identity is a governance problem. Not an authentication problem, not an attestation problem. The hard questions are all governance questions. Who delegated authority. What scope. Is it still valid. Should a human approve the next step. For humans and workloads, identity and authorization are separate layers. For agents, they collapse. The delegation is the identity.

The human identity stack is where principal identity primitives live. Not because agents are people, but because people were the first actors that needed identity in open ecosystems with delegated authority and unbounded problem spaces.

Every protocol designer who sits down to solve agent auth rediscovers this and reaches for human identity concepts, not workload identity concepts. The protocols they build aren’t OAuth. They’re something new. But they inherit from the human side every time. That convergence is the argument.

The delegation and governance layer is buildable today. Goal-scoped authorization and intent verification are ahead of us. The first generation of agent identity systems will solve governance. The second will solve intent.