Fortunately, it turns out you can deterministically transform this character-based FSM into another FSM that works with tokens instead. The following code gives an example of how this can be accomplished in Outlines:

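```python
from outlines.fsm.regex import make_deterministic_fsm, create_fsm_index_tokenizer

# `fsm` is the character-based FSM built earlier; `tokenizer` is the model's tokenizer.
new_fsm, _ = make_deterministic_fsm(fsm)
# Build the token-level index from the FSM and the tokenizer's vocabulary.
index, _ = create_fsm_index_tokenizer(new_fsm, tokenizer)
```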

The `index` object is a dictionary that maps each state of the finite-state machine to its possible transitions. The transitions for a state are themselves a dictionary that maps each allowed token to the state the FSM moves to if we sample that token.

The procedure to generate the first token is:

- Pass the prompt to the model and get the next-token probability distribution.

- Start the FSM in state 0. List all the tokens that correspond to a valid transition with `index[0].keys()`.

- Use the probability distribution to sample one of these tokens, say `X`.

- Follow the transition that corresponds to this token and move to the corresponding state with `new_state = index[0]["X"]`.
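The steps above can be sketched in plain Python. This is a minimal illustration rather than Outlines' implementation: the toy `index` uses decoded token strings as keys for readability (the real index is keyed by token ids), and `next_token_probs` and `sample_constrained` are hypothetical names standing in for the model's output and the sampling step.

```python
import random

# Toy stand-in for state 0 of the index (assumption: keys are decoded tokens,
# whereas the real index is keyed by token ids).
index = {0: {"{": 1, '{"': 2}}

# Hypothetical next-token distribution returned by the model for the prompt.
next_token_probs = {"{": 0.05, '{"': 0.15, "Hello": 0.6, "The": 0.2}


def sample_constrained(probs, transitions, rng):
    """Sample one token among those allowed by `transitions`.

    Returns the sampled token and the state the FSM moves to.
    """
    allowed = [tok for tok in transitions if probs.get(tok, 0.0) > 0.0]
    if not allowed:
        raise ValueError("no allowed token has non-zero probability")
    weights = [probs[tok] for tok in allowed]
    # random.choices treats the weights as relative, so no explicit
    # renormalization is needed.
    token = rng.choices(allowed, weights=weights, k=1)[0]
    return token, transitions[token]


rng = random.Random(0)
token, new_state = sample_constrained(next_token_probs, index[0], rng)
print(token, new_state)
```

Sampling only among the allowed tokens, weighted by their original probabilities, is equivalent to masking out the disallowed tokens and renormalizing the distribution. Here `Hello` can never be sampled, even though the model considers it the most likely token.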

Let's take a look at this index, and translate the token ids to tokens to understand what is going on:

```python
index_with_tokens = {}
for state, transitions in index.items():
    # Decode each token id so the transitions are human-readable.
    transitions = {
        tokenizer.tokenizer.decode([key]): value
        for key, value in transitions.items()
    }
    index_with_tokens[state] = transitions

for state, transitions in index_with_tokens.items():
    print(f"{state}: {transitions}")
```

```
0: {'{': 1, '{"': 2}
1: {'"': 2}
2: {'na': 4, 'nam': 5, 'name': 6, 'n': 3}
3: {'a': 4, 'ame': 6, 'am': 5}
4: {'me': 6, 'm': 5}
5: {'e': 6}
6: {'"': 7, '":': 8, '":"': 9}
7: {':': 8, ':"': 9}
8: {'"': 9}
9: {'P': 11, 'Paul': 14, 'Pa': 12, 'J': 10, 'Jo': 26, 'John': 14}
10: {'o': 26, 'oh': 27, 'ohn': 14}
11: {'au': 13, 'a': 12, 'aul': 14}
12: {'ul': 14, 'u': 13}
13: {'l': 14}
14: {'","': 17, '",': 16, '"': 15}
15: {',"': 17, ',': 16}
16: {'"': 17}
17: {'age': 20, 'a': 18, 'ag': 19}
18: {'g': 19, 'ge': 20}
19: {'e': 20}
20: {'"': 21, '":': 22}
21: {':': 22}
22: {'20': 24, '2': 23, '3': 23, '30': 24}
23: {'0': 24}
24: {'}': 25}
26: {'hn': 14, 'h': 27}
27: {'n': 14}
```

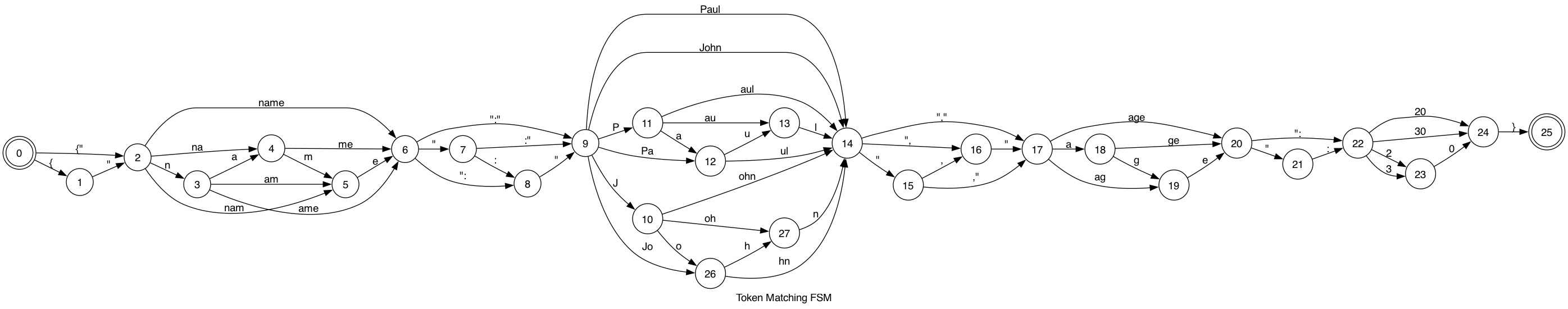

Numbers represent the states of the FSM, and strings the tokens in the model’s vocabulary. We can also visualize this entire FSM; it’s quite a bit more complex than our first one.

Despite this added complexity, walking through this is just as easy as in our original generation example.
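To make the walk concrete, here is a small sketch that follows the printed index one token at a time. The `walk` helper and the two token sequences are illustrative, not part of Outlines, and the sketch assumes that state 25, which has no outgoing transitions in the printed index, is the final state.

```python
# Token-level index copied from the printed output (keys are decoded tokens).
INDEX = {
    0: {'{': 1, '{"': 2},
    1: {'"': 2},
    2: {'na': 4, 'nam': 5, 'name': 6, 'n': 3},
    3: {'a': 4, 'ame': 6, 'am': 5},
    4: {'me': 6, 'm': 5},
    5: {'e': 6},
    6: {'"': 7, '":': 8, '":"': 9},
    7: {':': 8, ':"': 9},
    8: {'"': 9},
    9: {'P': 11, 'Paul': 14, 'Pa': 12, 'J': 10, 'Jo': 26, 'John': 14},
    10: {'o': 26, 'oh': 27, 'ohn': 14},
    11: {'au': 13, 'a': 12, 'aul': 14},
    12: {'ul': 14, 'u': 13},
    13: {'l': 14},
    14: {'","': 17, '",': 16, '"': 15},
    15: {',"': 17, ',': 16},
    16: {'"': 17},
    17: {'age': 20, 'a': 18, 'ag': 19},
    18: {'g': 19, 'ge': 20},
    19: {'e': 20},
    20: {'"': 21, '":': 22},
    21: {':': 22},
    22: {'20': 24, '2': 23, '3': 23, '30': 24},
    23: {'0': 24},
    24: {'}': 25},
    26: {'hn': 14, 'h': 27},
    27: {'n': 14},
}


def walk(index, tokens, state=0):
    """Follow one transition per token; raise ValueError on a disallowed token."""
    for token in tokens:
        transitions = index.get(state, {})
        if token not in transitions:
            raise ValueError(f"token {token!r} is not allowed in state {state}")
        state = transitions[token]
    return state


# Two hypothetical token sequences: one using long tokens, one using short ones.
coarse = ['{"', 'name', '":"', 'John', '","', 'age', '":', '20', '}']
fine = ['{', '"', 'n', 'a', 'm', 'e', '"', ':', '"', 'P', 'au', 'l',
        '"', ',', '"', 'ag', 'e', '"', ':', '3', '0', '}']

for tokens in (coarse, fine):
    print("".join(tokens), "-> state", walk(INDEX, tokens), "in", len(tokens), "steps")
```

Both sequences end in state 25, but the first takes 9 transitions and the second takes 22.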

It’s essential to note that each transition in our FSM represents an expensive call to the LLM. In vanilla generation, all of these calls would also be necessary. Because we represent regular expressions as FSMs, controlling the output requires virtually no additional cost over vanilla generation. However, we don’t have to settle for simply no added cost: with structured generation we have the potential for much faster generation if we can figure out a way to skip calls to the LLM.
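As a rough illustration of the cost, the sketch below counts transitions, and therefore model calls, for the part of the printed index that spells out `name` (states 2 to 6). The `paths` helper is a hypothetical name introduced for this example only.

```python
# The states that spell out `name`, copied from the printed index.
NAME_INDEX = {
    2: {'na': 4, 'nam': 5, 'name': 6, 'n': 3},
    3: {'a': 4, 'ame': 6, 'am': 5},
    4: {'me': 6, 'm': 5},
    5: {'e': 6},
}


def paths(index, state, goal):
    """Enumerate every token sequence leading from `state` to `goal`."""
    if state == goal:
        return [[]]
    found = []
    for token, next_state in index.get(state, {}).items():
        for rest in paths(index, next_state, goal):
            found.append([token] + rest)
    return found


all_paths = paths(NAME_INDEX, 2, 6)
# One model call per transition, so a path's length is its number of calls.
calls = sorted(len(p) for p in all_paths)
print(len(all_paths), calls[0], calls[-1])  # prints: 8 1 4
```

All eight paths produce the same string, `name`, yet they cost anywhere from one to four model calls depending on which tokens are sampled.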